[PyTorch Framework in Practice] CIFAR-10 Image Classification

1.main.py

import torch
from torchvision import datasets
import torch.nn as nn
from torch.utils.data import DataLoader
import torchvision.transforms as transforms
import torch.optim as optim
import numpy as np
from matplotlib import pyplot as plt
from lesson.cifar10.model import Net
from lesson.cifar10.model import Lenet

# Train on the first GPU when one is available, otherwise fall back to the CPU.
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Per-channel normalization statistics (these are the widely used ImageNet values).
norm_mean = [0.485, 0.456, 0.406]
norm_std = [0.229, 0.224, 0.225]

MAX_EPOCH = 100
log_interval = 10  # print training statistics every 10 iterations
val_interval = 1   # run validation every epoch

# Training split: resize, convert to tensor, normalize.
trainset = datasets.CIFAR10(root='./data', train=True, download=True,
                            transform=transforms.Compose([transforms.Resize((32, 32)),
                                                          transforms.ToTensor(),
                                                          transforms.Normalize(norm_mean, norm_std)])
                            )
trainloader = DataLoader(trainset, batch_size=1024, shuffle=True)

# Test split, preprocessed the same way and used for validation.
testset = datasets.CIFAR10(root='./data', train=False, download=True,
                           transform=transforms.Compose([transforms.Resize((32, 32)),
                                                         transforms.ToTensor(),
                                                         transforms.Normalize(norm_mean, norm_std)])
                           )
testloader = DataLoader(testset, batch_size=1024, shuffle=True)

net = Lenet(classes=10).to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(net.parameters(), lr=1e-3)

train_curve = list()  # training loss, one entry per iteration
valid_curve = list()  # validation loss, one entry per validation run

net.train()
for epoch in range(MAX_EPOCH):
    loss_mean = 0.
    correct = 0.
    total = 0.
    for i, data in enumerate(trainloader, 0):
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)

        # Forward pass, backward pass, parameter update.
        optimizer.zero_grad()
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        # Accumulate the running accuracy over the current epoch.
        _, predicted = torch.max(outputs.data, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

        loss_mean += loss.item()
        train_curve.append(loss.item())
        if (i + 1) % log_interval == 0:
            loss_mean = loss_mean / log_interval

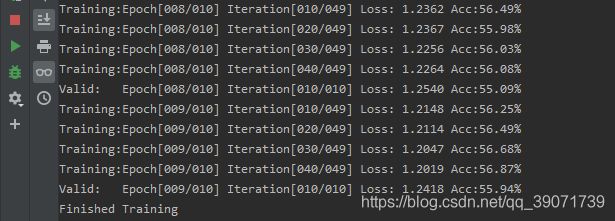

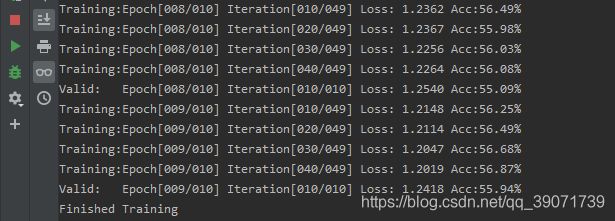

            print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                epoch, MAX_EPOCH, i + 1, len(trainloader), loss_mean, correct / total))
            loss_mean = 0.

    # Evaluate on the test set every val_interval epochs.
    if (epoch+1) % val_interval == 0:
        correct_val = 0.
        total_val = 0.
        loss_val = 0.
        net.eval()
        with torch.no_grad():
            for j, data in enumerate(testloader):
                images, labels = data
                images, labels = images.to(device), labels.to(device)
                outputs = net(images)
                loss = criterion(outputs, labels)
                _, predicted = torch.max(outputs.data, 1)
                total_val += labels.size(0)
                correct_val += (predicted == labels).sum().item()
                loss_val += loss.item()

            # Average the loss over all validation batches.
            loss_val_mean = loss_val / len(testloader)
            valid_curve.append(loss_val_mean)
            print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                epoch, MAX_EPOCH, j + 1, len(testloader), loss_val_mean, correct_val / total_val))
        # Switch back to training mode before the next epoch.
        net.train()

print('Finished Training')

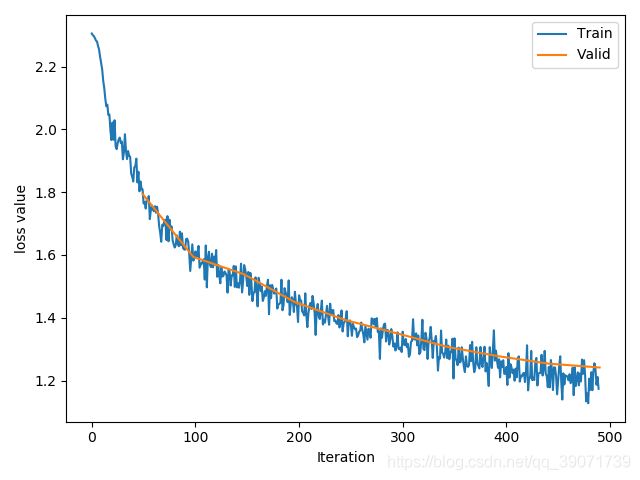

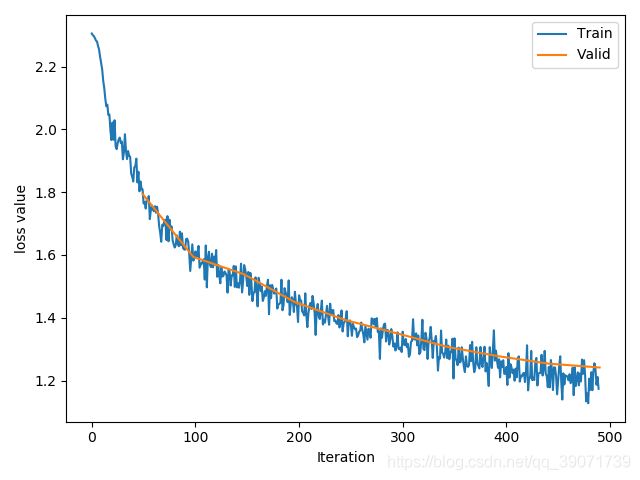

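# Training loss is recorded once per iteration.
train_x = range(len(train_curve))
train_y = train_curve

# Validation loss is recorded once every val_interval epochs, so convert
# epoch counts to iteration counts to share the x-axis with the training curve.
train_iters = len(trainloader)
valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval
valid_y = valid_curve

plt.plot(train_x, train_y, label='Train')
plt.plot(valid_x, valid_y, label='Valid')
plt.legend(loc='upper right')
plt.ylabel('loss value')
plt.xlabel('Iteration')
plt.show()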
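
The `valid_x` computation in the plotting code is what lets the two curves share one axis: the training loss is logged once per iteration, while the validation loss is logged once every `val_interval` epochs, so each validation point is placed at the iteration where its epoch ends. Below is a minimal pure-Python sketch of that alignment. The helper name `validation_positions` and the sample numbers are illustrative assumptions, not part of the original script; 49 iterations per epoch is what the 50,000 CIFAR-10 training images give at a batch size of 1024.

```python
import math


def validation_positions(num_validations, iters_per_epoch, val_interval=1):
    """Return the iteration index at which each validation loss was recorded.

    Hypothetical helper mirroring:
        np.arange(1, len(valid_curve) + 1) * train_iters * val_interval
    """
    return [k * iters_per_epoch * val_interval
            for k in range(1, num_validations + 1)]


# 50,000 training images in batches of 1024 -> 49 iterations per epoch
# (the last batch is smaller because the DataLoader keeps it by default).
iters_per_epoch = math.ceil(50000 / 1024)

# Three validation runs, one per epoch.
positions = validation_positions(num_validations=3,
                                 iters_per_epoch=iters_per_epoch)
print(iters_per_epoch, positions)
```

With `val_interval = 1`, the first validation point sits at the end of the first epoch rather than at iteration 0, which is why the validation curve starts to the right of the training curve.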

2.model.py

import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    """LeNet-style CNN written with explicit layers and the functional API."""

    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)        # 3x32x32 -> 6x28x28
        self.pool = nn.MaxPool2d(2, 2)         # halves height and width
        self.conv2 = nn.Conv2d(6, 16, 5)       # 6x14x14 -> 16x10x10
        self.fc1 = nn.Linear(16 * 5 * 5, 120)  # input is the flattened 16x5x5 map
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)  # flatten to (batch, 400)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x


class Lenet(nn.Module):
    """The same architecture built from nn.Sequential blocks."""

    def __init__(self, classes):
        super(Lenet, self).__init__()
        # Convolutional feature extractor.
        self.features = nn.Sequential(
            nn.Conv2d(3, 6, 5),
            nn.ReLU(),
            nn.MaxPool2d(2, 2),
            nn.Conv2d(6, 16, 5),
            nn.ReLU(),
            nn.MaxPool2d(2, 2)
        )
        # Fully connected classifier head.
        self.classifier = nn.Sequential(
            nn.Linear(16*5*5, 120),
            nn.ReLU(),
            nn.Linear(120, 84),
            nn.ReLU(),
            nn.Linear(84, classes)
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size()[0], -1)  # flatten everything except the batch dimension
        x = self.classifier(x)
        return x
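
The `16 * 5 * 5` input size of the first fully connected layer follows from the feature-map arithmetic: a 32x32 input shrinks to 28x28 after the first 5x5 convolution, 14x14 after pooling, 10x10 after the second convolution, and 5x5 after the second pooling, with 16 channels. The sketch below checks that arithmetic and counts the trainable parameters for 10 classes in plain Python. The helper names `conv_out`, `conv_params`, and `linear_params` are hypothetical and exist only for this illustration; they are not part of model.py or of PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling layer (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 32                                  # CIFAR-10 images are 32x32
size = conv_out(size, kernel=5)            # Conv2d(3, 6, 5)  -> 28
size = conv_out(size, kernel=2, stride=2)  # MaxPool2d(2, 2)  -> 14
size = conv_out(size, kernel=5)            # Conv2d(6, 16, 5) -> 10
size = conv_out(size, kernel=2, stride=2)  # MaxPool2d(2, 2)  -> 5
flat_features = 16 * size * size           # 16 channels, flattened


def conv_params(c_in, c_out, k):
    """Weights plus biases of a k x k convolution."""
    return c_in * c_out * k * k + c_out


def linear_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


total_params = (conv_params(3, 6, 5)
                + conv_params(6, 16, 5)
                + linear_params(flat_features, 120)
                + linear_params(120, 84)
                + linear_params(84, 10))
print(flat_features, total_params)
```

If the input resolution or a kernel size changes, the same arithmetic gives the new value to use in place of `16 * 5 * 5`.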

3.Results (10 epochs)