Setting up a development environment with Hadoop 2.5.2 + Ubuntu 14.04 + Eclipse + hadoop2x-eclipse-plugin-master

Hadoop 2.5.2 + Ubuntu 14.04 + Eclipse (Version: Luna Service Release 2 (4.4.2)) + hadoop2x-eclipse-plugin-master: setting up a development environment

Environment:

Ubuntu 14.04 (or another Linux system)

Hadoop 2.5.2 (any Hadoop 2.x.y should work)

eclipse

hadoop2x-eclipse-plugin-master

ant

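Installing Linux is not covered here; I assume you already have it installed.

To install Hadoop 2.5.2, see http://www.powerxing.com/install-hadoop/

My Eclipse is Version: Luna Service Release 2 (4.4.2). The tutorials I found online all use older versions, but the steps here should work on older versions as well.

Download hadoop2x-eclipse-plugin-master from https://github.com/winghc/hadoop2x-eclipse-plugin. You can either fetch it directly with git (git@github.com:winghc/hadoop2x-eclipse-plugin.git) or download the zip archive.

Ant is a build tool for Java projects. Installing it is simple: download the archive, extract it to a suitable directory, and then set the environment variables export ANT_HOME=<your ant directory> and export PATH=$PATH:$ANT_HOME/bin. See other tutorials for the details; this tutorial focuses on setting up the development environment.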

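Once the steps above are done, first compile hadoop2x-eclipse-plugin-master to obtain the Hadoop plugin for Eclipse, hadoop-eclipse-plugin-2.5.2.jar. The build parameters differ between Hadoop versions: Hadoop 2.x.y corresponds to hadoop-eclipse-plugin-2.x.y.jar. So if you are not using Hadoop 2.5.2, the parameters you set and the packages the build depends on will also differ. When I downloaded hadoop2x-eclipse-plugin-master, it already contained prebuilt plugins for versions 2.6.0, 2.4.1, and 2.2.0, which can be used directly.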

Compiling hadoop2x-eclipse-plugin-master.

Open the file hadoop2x-eclipse-plugin-master/ivy/libraries.properties

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#This properties file lists the versions of the various artifacts used by hadoop and components.

#It drives ivy and the generation of a maven POM

#This is the version of hadoop we are generating

hadoop.version=2.6.0

hadoop-gpl-compression.version=0.1.0

#These are the versions of our dependencies (in alphabetical order)

apacheant.version=1.7.0

ant-task.version=2.0.10

asm.version=3.2

aspectj.version=1.6.5

aspectj.version=1.6.11

checkstyle.version=4.2

commons-cli.version=1.2

commons-codec.version=1.4

commons-collections.version=3.2.1

commons-configuration.version=1.6

commons-daemon.version=1.0.13

commons-httpclient.version=3.0.1

commons-lang.version=2.6

commons-logging.version=1.0.4

commons-logging-api.version=1.0.4

commons-math.version=2.1

commons-el.version=1.0

commons-fileupload.version=1.2

commons-io.version=2.1

commons-net.version=3.1

core.version=3.1.1

coreplugin.version=1.3.2

hsqldb.version=1.8.0.10

htrace.version=3.0.4

ivy.version=2.1.0

jasper.version=5.5.12

jackson.version=1.9.13

#not able to figureout the version of jsp & jsp-api version to get it resolved throught ivy

#but still declared here as we are going to have a local copy from the lib folder

jsp.version=2.1

jsp-api.version=5.5.12

jsp-api-2.1.version=6.1.14

jsp-2.1.version=6.1.14

jets3t.version=0.6.1

jetty.version=6.1.26

jetty-util.version=6.1.26

jersey-core.version=1.8

jersey-json.version=1.8

jersey-server.version=1.8

junit.version=4.5

jdeb.version=0.8

jdiff.version=1.0.9

json.version=1.0

kfs.version=0.1

log4j.version=1.2.17

lucene-core.version=2.3.1

mockito-all.version=1.8.5

jsch.version=0.1.42

oro.version=2.0.8

rats-lib.version=0.5.1

servlet.version=4.0.6

servlet-api.version=2.5

slf4j-api.version=1.7.5

slf4j-log4j12.version=1.7.5

wagon-http.version=1.0-beta-2

xmlenc.version=0.52

xerces.version=1.4.4

protobuf.version=2.5.0

guava.version=11.0.2

netty.version=3.6.2.Final

Several entries in the file above need to be changed. After my changes, the relevant entries read as follows:

hadoop.version=2.5.2

commons-collections.version=3.2.1

commons-httpclient.version=3.1

commons-lang.version=2.6

commons-logging.version=1.1.3

commons-math.version=3.1.1

commons-io.version=2.4

jackson.version=1.9.13

jersey-core.version=1.9

jersey-json.version=1.9

jersey-server.version=1.9

junit.version=4.11

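Compare every entry carefully and change it where needed. Then copy the file into the hadoop2x-eclipse-plugin-master/src/ivy directory and the hadoop2x-eclipse-plugin-master/src/contrib/eclipse-plugin/ivy directory, overwriting the libraries.properties already there.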
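
The edit described above can also be scripted. The following is only a sketch, not part of the plugin's build: the class name LibraryVersions and the method applyOverrides are hypothetical, and only three of the entries listed earlier are used as sample overrides. It rewrites matching key=value lines and leaves comments and all other lines untouched.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, for illustration only
public class LibraryVersions {

    /** Returns a copy of lines where each "key=value" line whose key is in overrides gets the new value. */
    public static List<String> applyOverrides(List<String> lines, Map<String, String> overrides) {
        List<String> result = new ArrayList<>();
        for (String line : lines) {
            int eq = line.indexOf('=');
            // Comment lines and lines without '=' are copied unchanged
            if (eq > 0 && !line.startsWith("#")) {
                String key = line.substring(0, eq).trim();
                if (overrides.containsKey(key)) {
                    result.add(key + "=" + overrides.get(key));
                    continue;
                }
            }
            result.add(line);
        }
        return result;
    }

    public static void main(String[] args) {
        // Sample overrides taken from the list of modified entries in this tutorial
        Map<String, String> overrides = new LinkedHashMap<>();
        overrides.put("hadoop.version", "2.5.2");
        overrides.put("commons-io.version", "2.4");
        overrides.put("junit.version", "4.11");

        // A short excerpt standing in for the real libraries.properties
        List<String> original = Arrays.asList(
                "#This is the version of hadoop we are generating",
                "hadoop.version=2.6.0",
                "commons-io.version=2.1",
                "junit.version=4.5",
                "log4j.version=1.2.17");

        for (String line : applyOverrides(original, overrides)) {
            System.out.println(line);
        }
    }
}
```

To use it on the real file, the lines could be read with Files.readAllLines and written back with Files.write to each of the target locations.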

Go to the hadoop2x-eclipse-plugin-master/src/contrib/eclipse-plugin/ directory and edit makePlus.sh so that it contains:

ant jar -Dversion=<hadoop version> -Declipse.home=<your eclipse root directory> -Dhadoop.home=<your hadoop root directory>

Mine is:

ant jar -Dversion=2.5.2 -Declipse.home=/usr/local/eclipse -Dhadoop.home=/usr/local/hadoop

Then run chmod 777 makePlus.sh to make the script executable (chmod +x makePlus.sh is enough) and execute ./makePlus.sh

During the build you may get an error that /usr/local/hadoop/share/hadoop/common/lib/htrace-core-3.0.4.jar cannot be found. In that case, download htrace-core-3.0.4.jar, put it into the HADOOP_HOME/share/hadoop/common/lib/ directory, and build again.
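
To catch this before starting the build, a small check like the following can help. It is a sketch under assumptions: the class name JarCheck and the method missingJars are hypothetical, and /usr/local/hadoop is simply the Hadoop root used in this tutorial.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, for illustration only
public class JarCheck {

    /** Returns the names from jarNames that are not present in hadoopHome/share/hadoop/common/lib. */
    public static List<String> missingJars(Path hadoopHome, List<String> jarNames) {
        Path libDir = hadoopHome.resolve("share").resolve("hadoop").resolve("common").resolve("lib");
        List<String> missing = new ArrayList<>();
        for (String jar : jarNames) {
            if (!Files.isRegularFile(libDir.resolve(jar))) {
                missing.add(jar);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Defaults to the Hadoop root used in this tutorial; pass another path as the first argument
        Path hadoopHome = Paths.get(args.length > 0 ? args[0] : "/usr/local/hadoop");
        List<String> missing = missingJars(hadoopHome, Arrays.asList("htrace-core-3.0.4.jar"));
        System.out.println(missing.isEmpty() ? "All listed jars found" : "Missing: " + missing);
    }
}
```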

After a successful build, hadoop-eclipse-plugin-2.5.2.jar is generated in the hadoop2x-eclipse-plugin-master/build/contrib/eclipse-plugin/ directory. Put it into /usr/local/eclipse/plugins/ and restart Eclipse.

Go to Window -> Show View -> Other and, under the MapReduce Tools folder, select Map/Reduce Locations to add the view.

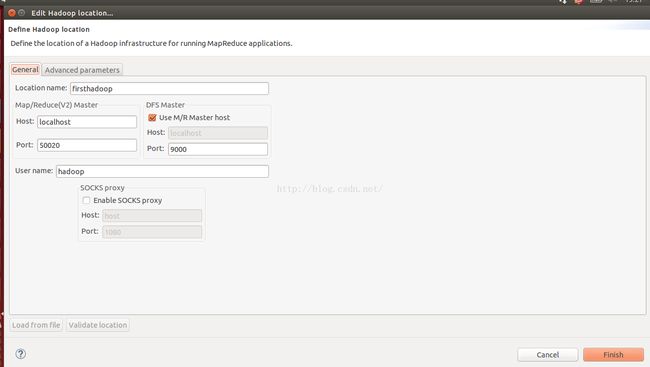

Then right-click in the view and create a new Hadoop location, which opens the dialog below

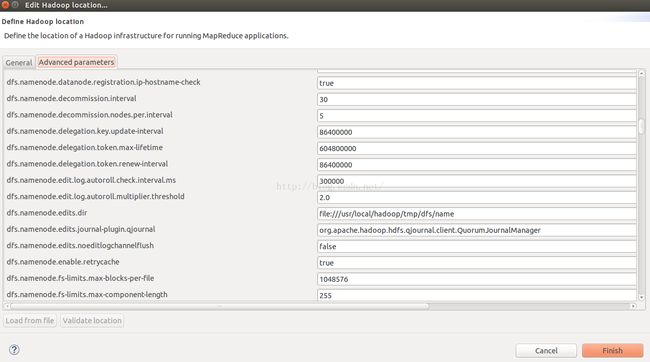

Then fill in the settings. They must be consistent with core-site.xml and hdfs-site.xml: find the corresponding parameters and set them to the same values.

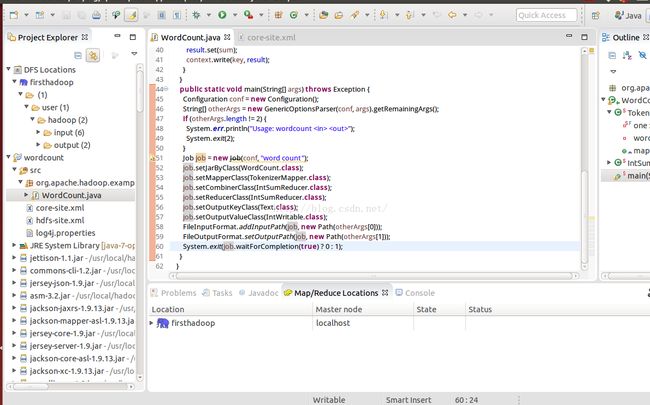
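
To look up the values to enter, the site files can be read with the standard XML parser. This is a minimal sketch: the class name SiteConfigReader is hypothetical, the sample XML and the address hdfs://localhost:9000 are made-up placeholders, and fs.defaultFS is assumed to be the property that holds the HDFS address in your core-site.xml.

```java
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper, for illustration only
public class SiteConfigReader {

    /** Returns the value of the named property in a Hadoop *-site.xml stream, or null if absent. */
    public static String readProperty(InputStream xml, String name) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(xml);
        NodeList props = doc.getElementsByTagName("property");
        for (int i = 0; i < props.getLength(); i++) {
            Element prop = (Element) props.item(i);
            NodeList names = prop.getElementsByTagName("name");
            NodeList values = prop.getElementsByTagName("value");
            if (names.getLength() > 0 && values.getLength() > 0
                    && name.equals(names.item(0).getTextContent().trim())) {
                return values.item(0).getTextContent().trim();
            }
        }
        return null;
    }

    public static void main(String[] args) throws Exception {
        // Placeholder content; a real run would open $HADOOP_HOME/etc/hadoop/core-site.xml instead
        String sample = "<configuration><property><name>fs.defaultFS</name>"
                + "<value>hdfs://localhost:9000</value></property></configuration>";
        InputStream in = new ByteArrayInputStream(sample.getBytes(StandardCharsets.UTF_8));
        System.out.println(readProperty(in, "fs.defaultFS"));
    }
}
```

The host and port printed here are what the location dialog would need to match.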

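When this is done, remember to start Hadoop! You can then browse the HDFS directory tree under DFS Locations.

Use File -> New -> Project to create a new Map/Reduce project named wordcount

and copy core-site.xml, hdfs-site.xml, and log4j.properties from the $HADOOP_HOME/etc/hadoop/ directory into the project's src source directory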

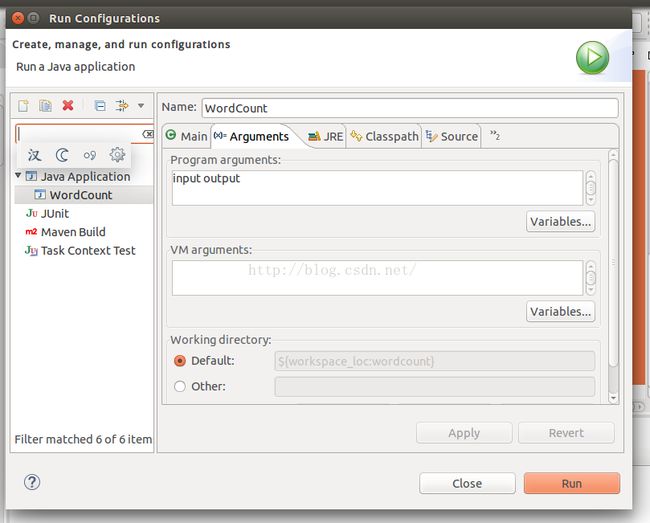

Finally, create a WordCount class with the following code:

package org.apache.hadoop.examples;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

    // Mapper: emits (word, 1) for every whitespace-separated token of an input line
    public static class TokenizerMapper
            extends Mapper<Object, Text, Text, IntWritable> {

        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        @Override
        public void map(Object key, Text value, Context context
                ) throws IOException, InterruptedException {
            StringTokenizer itr = new StringTokenizer(value.toString());
            while (itr.hasMoreTokens()) {
                word.set(itr.nextToken());
                context.write(word, one);
            }
        }
    }

    // Reducer (also used as combiner): sums the counts emitted for each word
    public static class IntSumReducer
            extends Reducer<Text, IntWritable, Text, IntWritable> {

        private IntWritable result = new IntWritable();

        @Override
        public void reduce(Text key, Iterable<IntWritable> values,
                Context context
                ) throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            result.set(sum);
            context.write(key, result);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // Strip generic Hadoop options; the two remaining arguments are the input and output paths
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length != 2) {
            System.err.println("Usage: wordcount <in> <out>");
            System.exit(2);
        }
        // Job.getInstance replaces the deprecated new Job(conf, name) constructor
        Job job = Job.getInstance(conf, "word count");
        job.setJarByClass(WordCount.class);
        job.setMapperClass(TokenizerMapper.class);
        job.setCombinerClass(IntSumReducer.class);
        job.setReducerClass(IntSumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}

Finally, run it: right-click the class and choose Run On Hadoop.
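
To check what the job should produce on a small input without a running cluster, the counting logic can be reproduced in plain Java. This is only a sketch of the same tokenize-and-sum idea, not Hadoop code: the class name LocalWordCount and the sample lines are made up for illustration.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical stand-alone class, for illustration only
public class LocalWordCount {

    /** Counts whitespace-separated tokens, mirroring the map (emit word, 1) and reduce (sum) steps. */
    public static Map<String, Integer> count(Iterable<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer itr = new StringTokenizer(line);
            while (itr.hasMoreTokens()) {
                // Add 1 to the running total for this word
                counts.merge(itr.nextToken(), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = count(Arrays.asList("hello hadoop", "hello eclipse"));
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
        // Prints, one pair per line: eclipse 1, hadoop 1, hello 2
    }
}
```

Comparing this output with the part files written to the job's output directory is a quick sanity check on a small test file.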