TensorRT优化原理和TensorRT Plguin总结

文章目录

- 1. TensorRT优化原理

- 2. TensorRT开发基本流程

- 3. TensorRT Network Definition API

- 4. TensorRT Plugin

- 4.1 实现plugin

- 4.2 编译plugin.so动态库

- 4.3 在TensorRT中加载plugin

- 5. plugin实例

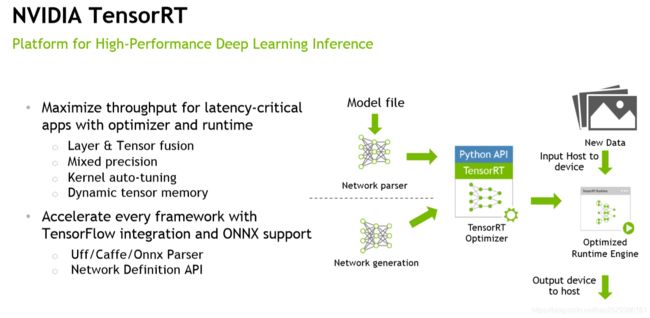

1. TensorRT优化原理

TensorRT加速DL Inference的能力来源于optimizer和runtime。其优化原理包括四个方面:

Layer & Tensor fusion: 将整个网络中的convolution、bias和ReLU层进行融合,调用一个统一的kernel进行处理,让数据传输变快,kernel lauch时间减少,实现加速。此外,还会消除一些output未被使用的层、聚合一些相似的参数和相同的源张量。Mix precision:使用混合精度,降低数据的大小,减少计算量。kernel auto-tuning:基于采用的硬件平台、输入的参数合理的选择一些层的算法,比如不同卷积的算法,自动选择GPU上的kernel或者tensor core等。Dynamic tensor memory:tensorrt在运行中会申请一块memory,最大限度的重复利用此内存,让计算变得高效。

2. TensorRT开发基本流程

下面代码介绍了TensorRT开发基本流程:

from random import randint

from PIL import Image

import numpy as np

import pycuda.driver as cuda

# This import causes pycuda to automatically manage CUDA context creation and cleanup.

import pycuda.autoinit

import tensorrt as trt

import sys, os

sys.path.insert(1, os.path.join(sys.path[0], ".."))

import common

TRT_LOGGER = trt.Logger(trt.Logger.WARNING)

class ModelData(object):

MODEL_FILE = "lenet5.uff"

INPUT_NAME ="input_1"

INPUT_SHAPE = (1, 28, 28)

OUTPUT_NAME = "dense_1/Softmax"

def allocate_buffers(engine):

inputs = []

outputs = []

bindings = []

stream = cuda.Stream()

for binding in engine:

size = trt.volume(engine.get_binding_shape(binding)) * engine.max_batch_size

dtype = trt.nptype(engine.get_binding_dtype(binding))

# Allocate host and device buffers

host_mem = cuda.pagelocked_empty(size, dtype)

device_mem = cuda.mem_alloc(host_mem.nbytes)

# Append the device buffer to device bindings.

bindings.append(int(device_mem))

# Append to the appropriate list.

if engine.binding_is_input(binding):

inputs.append(HostDeviceMem(host_mem, device_mem))

else:

outputs.append(HostDeviceMem(host_mem, device_mem))

return inputs, outputs, bindings, stream

def do_inference(context, bindings, inputs, outputs, stream, batch_size=1):

# Transfer input data to the GPU.

[cuda.memcpy_htod_async(inp.device, inp.host, stream) for inp in inputs]

# Run inference.

context.execute_async(batch_size=batch_size, bindings=bindings, stream_handle=stream.handle)

# Transfer predictions back from the GPU.

[cuda.memcpy_dtoh_async(out.host, out.device, stream) for out in outputs]

# Synchronize the stream

stream.synchronize()

# Return only the host outputs.

return [out.host for out in outputs]

def build_engine(model_file):

with trt.Builder(TRT_LOGGER) as builder, builder.create_network() as network, trt.UffParser() as parser:

builder.max_workspace_size = common.GiB(1)

# Parse the Uff Network

parser.register_input(ModelData.INPUT_NAME, ModelData.INPUT_SHAPE)

parser.register_output(ModelData.OUTPUT_NAME)

parser.parse(model_file, network)

# Build and return an engine.

return builder.build_cuda_engine(network)

# Loads a test case into the provided pagelocked_buffer.

def load_normalized_test_case(data_path, pagelocked_buffer, case_num=randint(0, 9)):

test_case_path = os.path.join(data_path, str(case_num) + ".pgm")

# Flatten the image into a 1D array, normalize, and copy to pagelocked memory.

img = np.array(Image.open(test_case_path)).ravel()

np.copyto(pagelocked_buffer, 1.0 - img / 255.0)

return case_num

def main():

data_path, _ = common.find_sample_data(description="Runs an MNIST network using a UFF model file", subfolder="mnist")

model_path = os.environ.get("MODEL_PATH") or os.path.join(os.path.dirname(__file__), "models")

model_file = os.path.join(model_path, ModelData.MODEL_FILE)

with build_engine(model_file) as engine:

# Build an engine, allocate buffers and create a stream.

inputs, outputs, bindings, stream = common.allocate_buffers(engine)

with engine.create_execution_context() as context:

case_num = load_normalized_test_case(data_path, pagelocked_buffer=inputs[0].host)

[output] = common.do_inference(context, bindings=bindings, inputs=inputs, outputs=outputs, stream=stream)

pred = np.argmax(output)

print("Test Case: " + str(case_num))

print("Prediction: " + str(pred))

if __name__ == '__main__':

main()

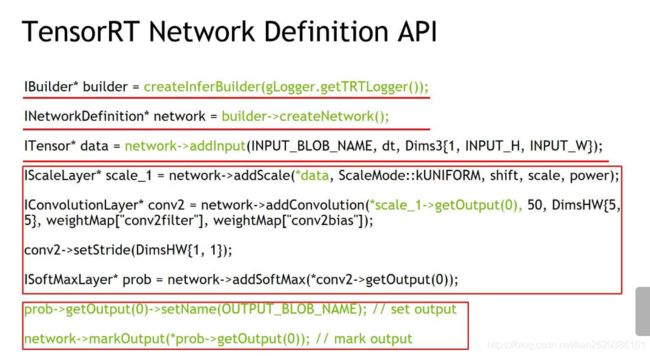

3. TensorRT Network Definition API

除了使用parse的方式解析模型,还可以使用Network Definition API重构整个模型,重构的方式如下图所示:

首先创建一个builder和INetworkDefinition对象network,然后开始构建network架构。一开始调用network->addInput函数添加一个input层,然后在Input的基础上再继续添加其它层,如:卷积层、Scale层、Softmax层等,构建完整个网络之后,最后设置一下整个网络的output name,并标记整个网络的输出。

这只是定义网络的骨架,那网络的权重如何导入呢?

在使用INetorkDefinition API构建network之前,需要先从checkpoint文件中将Weight导出来,在定义每一层网络时,再将Weight塞进去。如上面定义Convolution时,给出weightMap的参数,将weight导入进去。

上面介绍了TensorRT导入模型的两种方式:

- Paser: 解析模型文件。

- Network Definition API: 重新定义整个模型。

使用Paser方式,一般支持的层数只有十几层,那对于那些额外的不能解析的层怎么办呢?(如RNN,目前RNN还不能直接被Paser解析)

一般做法是使用Network Definition API重构这个模型,然后通过network.addRNN()函数添加RNN layer的方式解决这个问题。

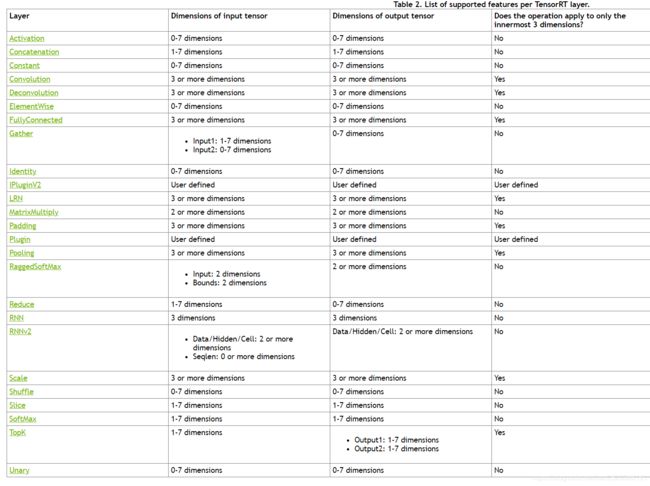

目前TensorRT5.1 Network Definition API支持的层为:

如果我有一些非常不标准的层或者是我自己想定制化的层,没有办法通过Paser或者Network Definition API构建,那么该怎么办呢?

TensorRT提供了第三种方式——自定义Plugin的方式,通过自己开发CUDA,实现自定义的Layer,然后把它封装成TensortRT的Plugin,这样TensorRT可以识别自定义的层。

4. TensorRT Plugin

首先要明确Plugin是做什么的?Plugin是我们针对某个需要定制化的层或目前TensorRT还不支持的层进行实现、封装。

TensorRT Plugin是对网络层功能的扩展,TensorRT官方已经预构建了一些在目标检测中经常使用的Plugin,如:NMS、PriorBOX等,我们可以在TensorRT直接使用,其他的Plugin则需要我们自己创建。

下图显示了TensorRT官方已经构建的Plugin:

4.1 实现plugin

- Kernel代码实现:这部分是该layer需要做的具体的CUDA操作,一般放在xxx.cu、xxx.h文件中。

- IPluginV2:plugin的基类,所有的插件都需要继承该类。

- IPluginCreator: 该类用于在build network时创建plugin对象,或者在inference时deserialize创建plugin对象。

4.2 编译plugin.so动态库

当上面的三个类型文件实现后,即可创建Plugin.so动态库文件了,一般用CMake混合编译C++与cuda。具体可以参考:

- https://blog.csdn.net/fb_help/article/details/79330815

- https://pytorch.org/tutorials/advanced/cpp_extension.html

由于torch包实现CUDAExtension编译,因此这里使用此方式来混合编译,代码如下:

from setuptools import setup

from torch.utils.cpp_extension import BuildExtension, CUDAExtension

setup(

name='retinanet_plugin',

ext_modules=[

CUDAExtension('retinanet_plugin',

['plugins/DecodePlugin.cpp', 'plugins/NMSPlugin.cpp', 'cuda/decode.cu', 'cuda/nms.cu'],

extra_compile_args={

'cxx': ['-std=c++11', '-O2', '-Wall'],

'nvcc': [

'-std=c++11', '--expt-extended-lambda','-Xcompiler', '-Wall',

'-gencode=arch=compute_30,code=sm_30', '-gencode=arch=compute_35,code=sm_35',

'-gencode=arch=compute_61,code=sm_61', '-gencode=arch=compute_62,code=sm_62',

'-gencode=arch=compute_70,code=sm_70', '-gencode=arch=compute_72,code=sm_72',

'-gencode=arch=compute_75,code=sm_75', '-gencode=arch=compute_75,code=compute_75'

],

},

libraries=['nvinfer', 'nvinfer_plugin', 'nvonnxparser', 'opencv_highgui', 'opencv_imgproc', 'opencv_imgcodecs'])

],

cmdclass={

'build_ext': BuildExtension

})

执行下面命令,可以得到retinanet_plugin.so文件:

# python setup.py install

# ll /usr/local/lib/python3.5/dist-packages/retinanet_plugin-0.0.0-py3.5-linux-x86_64.egg

total 4008

drwxr-sr-x 4 root staff 144 Aug 9 07:01 ./

drwxrwsr-x 1 root staff 289 Aug 9 07:00 ../

drwxr-sr-x 2 root staff 161 Aug 9 07:00 EGG-INFO/

drwxr-sr-x 2 root staff 53 Aug 9 07:00 __pycache__/

-rwxr-xr-x 1 root staff 4097408 Aug 9 07:00 retinanet_plugin.cpython-35m-x86_64-linux-gnu.so*

-rw-r--r-- 1 root staff 321 Aug 9 07:00 retinanet_plugin.py

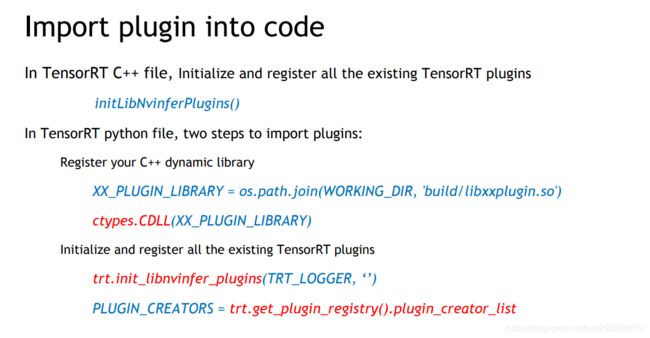

4.3 在TensorRT中加载plugin

1) 使用C++时,直接调用initLibNvinferPlugins()即可将TensorRT pre-build plugin和我们自定义的plugin全部注册进来。

2)当使用python时,需要先使用ctypes.CDLL(XX_PLUGIN_LIBRARY)将动态库文件载入进来,然后调用trt.init_libnvinfer_plugins(TRT_LOGGER, ‘’)方法将TensorRT pre-build plugin和我们自定义的plugin全部注册进来。对于uff模型,parser在解析网络的时候TensorRT会自动map layer到plugin。而对于engine文件,tensorrt会自动搜索并使用注册进来plugin。

5. plugin实例

例1:使用Plugin替换原pb模型中的layer,作成uff模型

主要分为以下几步:

- 设置原pb模型node与plugin node的映射关系,并进行“手术”

参照sample:/usr/src/tensorrt/samples/python/u_ssd/utils/model.py

def ssd_unsupported_nodes_to_plugin_nodes(ssd_graph):

"""Makes ssd_graph TensorRT comparible using graphsurgeon.

This function takes ssd_graph, which contains graphsurgeon

DynamicGraph data structure. This structure describes frozen Tensorflow

graph, that can be modified using graphsurgeon (by deleting, adding,

replacing certain nodes). The graph is modified by removing

Tensorflow operations that are not supported by TensorRT's UffParser

and replacing them with custom layer plugin nodes.

Note: This specific implementation works only for

ssd_inception_v2_coco_2017_11_17 network.

Args:

ssd_graph (gs.DynamicGraph): graph to convert

Returns:

gs.DynamicGraph: UffParser compatible SSD graph

"""

# Create TRT plugin nodes to replace unsupported ops in Tensorflow graph

channels = ModelData.get_input_channels()

height = ModelData.get_input_height()

width = ModelData.get_input_width()

Input = gs.create_plugin_node(name="Input",

op="Placeholder",

dtype=tf.float32,

shape=[1, channels, height, width])

PriorBox = gs.create_plugin_node(name="GridAnchor", op="GridAnchor_TRT",

minSize=0.2,

maxSize=0.95,

aspectRatios=[1.0, 2.0, 0.5, 3.0, 0.33],

variance=[0.1,0.1,0.2,0.2],

featureMapShapes=[19, 10, 5, 3, 2, 1],

numLayers=6

)

NMS = gs.create_plugin_node(

name="NMS",

op="NMS_TRT",

shareLocation=1,

varianceEncodedInTarget=0,

backgroundLabelId=0,

confidenceThreshold=1e-8,

nmsThreshold=0.6,

topK=100,

keepTopK=100,

numClasses=91,

inputOrder=[0, 2, 1],

confSigmoid=1,

isNormalized=1

)

concat_priorbox = gs.create_node(

"concat_priorbox",

op="ConcatV2",

dtype=tf.float32,

axis=2

)

concat_box_loc = gs.create_plugin_node(

"concat_box_loc",

op="FlattenConcat_TRT",

dtype=tf.float32,

)

concat_box_conf = gs.create_plugin_node(

"concat_box_conf",

op="FlattenConcat_TRT",

dtype=tf.float32,

)

# 设置映射关系

# Create a mapping of namespace names -> plugin nodes.

namespace_plugin_map = {

"MultipleGridAnchorGenerator": PriorBox,

"Postprocessor": NMS,

"Preprocessor": Input,

"ToFloat": Input,

"image_tensor": Input,

"MultipleGridAnchorGenerator/Concatenate": concat_priorbox,

"MultipleGridAnchorGenerator/Identity": concat_priorbox,

"concat": concat_box_loc,

"concat_1": concat_box_conf

}

# Create a new graph by collapsing namespaces

ssd_graph.collapse_namespaces(namespace_plugin_map)

# Remove the outputs, so we just have a single output node (NMS).

# If remove_exclusive_dependencies is True, the whole graph will be removed!

ssd_graph.remove(ssd_graph.graph_outputs, remove_exclusive_dependencies=False)

return ssd_graph

2)生成uff模型文件

def model_to_uff(model_path, output_uff_path, silent=False):

"""Takes frozen .pb graph, converts it to .uff and saves it to file.

Args:

model_path (str): .pb model path

output_uff_path (str): .uff path where the UFF file will be saved

silent (bool): if True, writes progress messages to stdout

"""

dynamic_graph = gs.DynamicGraph(model_path)

dynamic_graph = ssd_unsupported_nodes_to_plugin_nodes(dynamic_graph)

uff.from_tensorflow(

dynamic_graph.as_graph_def(),

[ModelData.OUTPUT_NAME],

output_filename=output_uff_path,

text=True

)

3)加载.so库文件

ctypes.CDLL(PATHS.get_flatten_concat_plugin_path())

4)加载uff模型文件,创建engine

加载所有自定义的plugin

trt.init_libnvinfer_plugins(TRT_LOGGER, '')

创建engine

def build_engine(uff_model_path, trt_logger, trt_engine_datatype=trt.DataType.FLOAT, batch_size=1, silent=False):

with trt.Builder(trt_logger) as builder, builder.create_network() as network, trt.UffParser() as parser:

builder.max_workspace_size = 1 << 30

if trt_engine_datatype == trt.DataType.HALF:

builder.fp16_mode = True

builder.max_batch_size = batch_size

parser.register_input(ModelData.INPUT_NAME, ModelData.INPUT_SHAPE)

parser.register_output("MarkOutput_0")

parser.parse(uff_model_path, network)

if not silent:

print("Building TensorRT engine. This may take few minutes.")

return builder.build_cuda_engine(network)

5)save、load engine

def save_engine(engine, engine_dest_path):

buf = engine.serialize()

with open(engine_dest_path, 'wb') as f:

f.write(buf)

def load_engine(trt_runtime, engine_path):

with open(engine_path, 'rb') as f:

engine_data = f.read()

engine = trt_runtime.deserialize_cuda_engine(engine_data)

return engine

例2:使用Network De×nition API及Plugin创建网络模型

在使用Network Denition API及Plugin创建网络模型时,则只需要使用network.add_plugin_v2方法来构建网络即可。参照以下例子:

import tensorrt as trt

import numpy as np

TRT_LOGGER = trt.Logger()

trt.init_libnvinfer_plugins(TRT_LOGGER, '')

PLUGIN_CREATORS = trt.get_plugin_registry().plugin_creator_list

def get_trt_plugin(plugin_name):

plugin = None

for plugin_creator in PLUGIN_CREATORS:

if plugin_creator.name == plugin_name:

lrelu_slope_field = trt.PluginField("neg_slope", np.array([0.1], dtype=np.float32), trt.PluginFieldType.FLOAT32)

field_collection = trt.PluginFieldCollection([lrelu_slope_field])

plugin = plugin_creator.create_plugin(name=plugin_name, field_collection=field_collection)

return plugin

def main():

with trt.Builder(TRT_LOGGER) as builder, builder.create_network() as network:

builder.max_workspace_size = 2**20

input_layer = network.add_input(name="input_layer", dtype=trt.float32, shape=(1, 1))

lrelu = network.add_plugin_v2(inputs=[input_layer], plugin=get_trt_plugin("LReLU_TRT"))

lrelu.get_output(0).name = "outputs"

network.mark_output(lrelu.get_output(0))