Apollo ADS platform study

History:

Apollo 2.5 aims for Level-2 autonomous driving with low-cost sensors.

Study path:

- https://github.com/ApolloAuto/apollo

- Build and Release using Docker (this step is required)

- Launch and Run Apollo (software only)

Setup:

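Port Apollo to a specific vehicle.

Software preparation

- 1. Check the related system requirements. The official site provides a checklist at http://apollo.auto/docs/procedure_cn.html ; use it for an open-loop check of whether the requirements are met.

- 2. Prepare the DBC file.

- 3. Use the tool to map (adapt) the DBC signals onto Apollo's signal definitions. This implements the communication between the Apollo ADS and the vehicle chassis: https://github.com/ApolloAuto/apollo/tree/master/modules/tools/gen_vehicle_protocol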

- https://github.com/ApolloAuto/apollo/blob/master/docs/howto/how_to_add_a_new_vehicle.md

- Details on how to prepare the DBC file and how to use the tool:

- https://github.com/ApolloAuto/apollo/blob/master/docs/technical_tutorial/apollo_vehicle_adaption_tutorial_cn.md
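The DBC mapping step comes down to telling the software where each signal sits inside a CAN frame and how to scale it. The following is a minimal sketch of that idea, assuming an unsigned signal in Intel (little-endian) byte order. The `Signal` class, the `vehicle_speed` layout and the payload are hypothetical and are not output of the gen_vehicle_protocol tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """Subset of a DBC signal definition (all values used below are hypothetical)."""
    name: str
    start_bit: int   # position of the least significant bit, Intel (little-endian) order
    length: int      # width in bits
    factor: float    # physical = raw * factor + offset
    offset: float


def decode(signal: Signal, payload: bytes) -> float:
    """Extract an unsigned little-endian signal and convert it to a physical value."""
    frame = int.from_bytes(payload, byteorder="little")
    raw = (frame >> signal.start_bit) & ((1 << signal.length) - 1)
    return raw * signal.factor + signal.offset


# Hypothetical chassis message: 16-bit vehicle speed starting at bit 8, 0.01 m/s per bit.
SPEED = Signal("vehicle_speed", start_bit=8, length=16, factor=0.01, offset=0.0)
payload = bytes([0x00, 0xE8, 0x03, 0x00, 0x00, 0x00, 0x00, 0x00])
speed_mps = decode(SPEED, payload)  # raw value 0x03E8 = 1000
```

Motorola (big-endian) signals and signed values need different bit handling, which this sketch leaves out.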

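Hardware installation:

Hardware installation guide: https://github.com/ApolloAuto/apollo/blob/master/docs/quickstart/apollo_1_0_hardware_system_installation_guide.md

Start debugging

Device installation connectivity check, plus a path-tracking test that verifies vehicle capability (closed-loop test) by driving a figure eight: https://github.com/ApolloAuto/apollo/blob/master/docs/quickstart/apollo_1_0_quick_start.md

Offline simulation environment: https://github.com/ApolloAuto/apollo/blob/master/docs/demo_guide/README.md

Calibration: vehicle performance calibration (coarse). It matches how well the actual response follows the request for brake and throttle. The approach is to record data and then fit it (a 3-D fit).

Per speed range: request X (acceleration, braking, steering) versus response X. Underneath, this is equivalent to PID tuning: it reduces the error between request and response. Note that this is closed loop. It seems to give better results than tuning on a real vehicle by hand, and it is faster. The vehicle is in autonomous driving mode (no human driver; TBC).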
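To make the "record data, then fit" idea concrete, here is a simplified sketch that fits the measured acceleration against the commanded percentage separately for each speed range. It assumes a straight-line response inside each range and a 5 m/s bin width; both are assumptions for illustration, the data is synthetic, and this is not Apollo's calibration tooling.

```python
from collections import defaultdict


def speed_bin(speed_mps, width=5.0):
    """Index of the speed range a sample falls into (bin width is an assumption)."""
    return int(speed_mps // width)


def fit_line(xs, ys):
    """Closed-form least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fit_per_speed_range(samples):
    """samples: iterable of (speed_mps, command_pct, measured_accel)."""
    grouped = defaultdict(lambda: ([], []))
    for speed, command, accel in samples:
        cmds, accels = grouped[speed_bin(speed)]
        cmds.append(command)
        accels.append(accel)
    return {b: fit_line(cmds, accels) for b, (cmds, accels) in grouped.items()}


# Synthetic log: the response gain drops from 0.05 to 0.04 m/s^2 per % in the higher range.
samples = [(2.0, c, 0.05 * c - 0.2) for c in (10, 20, 30, 40)]
samples += [(7.0, c, 0.04 * c - 0.3) for c in (10, 20, 30, 40)]
table = fit_per_speed_range(samples)
```

Inverting each fitted line gives the command needed for a desired acceleration at that speed, which is the role a calibration table plays for the controller.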

Six brake conditions at different speed levels:

- low speed (<10 mps) brake pulse

- middle speed (10 mps ~ 20 mps ) brake pulse

- high speed (>=20 mps) brake pulse

- low speed ( <10 mps) brake tap

- middle speed (10 mps ~ 20 mps ) brake tap

- high speed (>=20 mps) brake tap

Eight steering angle conditions:

- left 0% ~ 20%

- left 20% ~ 40%

- left 40% ~ 60%

- left 60% ~ 100%

- right 0% ~ 20%

- right 20% ~ 40%

- right 40% ~ 60%

- right 60% ~ 100%
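When collecting calibration data it helps to know which of the conditions listed above each recorded sample covers. The sketch below labels a sample by speed level and steering bucket and counts the coverage. The sign convention (positive steering means left) and the sample values are assumptions.

```python
from collections import Counter


def brake_speed_level(speed_mps):
    """Speed levels from the list above: <10, 10 to 20, >=20 m/s."""
    if speed_mps < 10.0:
        return "low"
    if speed_mps < 20.0:
        return "middle"
    return "high"


def steering_condition(steering_pct):
    """Map a signed steering percentage to one of the eight conditions.

    Positive = left is an assumption of this sketch.
    """
    side = "left" if steering_pct >= 0 else "right"
    magnitude = min(abs(steering_pct), 100.0)
    for low, high in ((0, 20), (20, 40), (40, 60), (60, 100)):
        # The last bucket is closed on the right so that 100% is included.
        if magnitude < high or high == 100:
            return f"{side} {low}% ~ {high}%"


# Count how many recorded samples fall into each (speed level, steering) condition.
coverage = Counter(
    (brake_speed_level(v), steering_condition(s))
    for v, s in [(5.0, 10.0), (12.0, -35.0), (25.0, 80.0)]
)
```

Conditions with a zero count point to manoeuvres that still need to be driven.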

https://github.com/ApolloAuto/apollo/blob/master/docs/howto/how_to_update_vehicle_calibration.md

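In autonomous driving mode (no human driver? Which mode is the "auto speed" that the doc mentions?). It is probably driven by a human: the algorithm selects the usable data and fits the curve. (This fitting is learned by a CNN, and Baidu provides it as a cloud service.)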

https://github.com/ApolloAuto/apollo/blob/master/docs/specs/calibration_table/control_calibration.md

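https://arxiv.org/pdf/1808.10134.pdf This paper describes the underlying principle. The process is largely automatic and saves a lot of labor and resources. Conventionally, calibrating ACC and LKA for one vehicle model takes at least one month, and multiple models typically take three months.

https://arxiv.org/pdf/1808.04913.pdf Further reading

Example figure: Apollo E2E auto_vehicle performance calibration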

Apollo 1.5 addin

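+ Velodyne LiDAR HDL-64E S3, power draw about 3 A at 12 V.

Installation: https://github.com/ApolloAuto/apollo/blob/master/docs/specs/Lidar/HDL64E_S3_Installation_Guide.md

Height requirement: the laser beams must not be blocked by the vehicle body (they have to clear the front hood and the trunk lid). If they are blocked, those portions need to be filtered out during data processing.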

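Estimate: with mounting height H and distances from the mounting point (center) to the front bumper L1 and to the rear bumper L2, require arctan(L1/H) and arctan(L2/H) <= the LiDAR's FOV (the vertical FOV, measured from straight down, since this is an elevation constraint).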
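One way to read this estimate: the lowest beam points some angle below the horizon, so on flat ground it lands at a horizontal distance of H / tan(angle). Requiring arctan(L/H) <= 90 deg minus that angle is the same as requiring L <= H / tan(angle). The sketch below computes this reach and also checks the beam height at a body edge such as the front of the hood. It uses the 1.8 m and -24.8 deg MKZ figures quoted in this section; the edge positions are hypothetical.

```python
import math


def ground_hit_distance(mount_height_m, lowest_beam_deg):
    """Horizontal distance at which the lowest beam reaches flat ground."""
    return mount_height_m / math.tan(math.radians(abs(lowest_beam_deg)))


def beam_height_at(distance_m, mount_height_m, lowest_beam_deg):
    """Height of the lowest beam above ground at a given horizontal distance."""
    return mount_height_m - distance_m * math.tan(math.radians(abs(lowest_beam_deg)))


def clears_body(mount_height_m, lowest_beam_deg, edge_distance_m, edge_height_m):
    """True if the lowest beam passes at or above a body edge (e.g. the hood front)."""
    return beam_height_at(edge_distance_m, mount_height_m, lowest_beam_deg) >= edge_height_m


# MKZ figures from the notes: 1.8 m mounting height, lowest beam at -24.8 deg.
reach = ground_hit_distance(1.8, -24.8)
# Hypothetical hood edge 1.5 m ahead of the sensor and 1.0 m above the ground.
hood_ok = clears_body(1.8, -24.8, edge_distance_m=1.5, edge_height_m=1.0)
```

The flat-ground reach alone ignores the height of the hood and trunk, so the edge check is the stricter of the two.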

MKZ: The vertical tilt angle of the lasers normally ranges from +2 to -24.8 degrees relative to the horizon. For a standard Lincoln MKZ, it is recommended that you mount the LiDAR at a minimum height of 1.8 meters (from ground to the base of the LiDAR).

The LiDAR and the GPS/IMU share a hard-wired synchronization signal, output by the GPS to the LiDAR (a simple Y-split cable may also provide adequate signal for more than one LiDAR).

The HDL64E S3 LiDAR requires the Recommended minimum specific GPS/Transit data (GPRMC) and pulse per second (PPS) signal to synchronize to the GPS time. A customized connection is needed to establish the communication between the GPS receiver and the LiDAR.
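For reference, the GPRMC message is an NMEA 0183 sentence whose checksum is the XOR of all characters between `$` and `*`. The sketch below validates a sentence and pulls out the UTC time and date fields that time synchronization relies on. The sample sentence is illustrative and `parse_gprmc` is a hypothetical helper, not part of any LiDAR or GPS driver.

```python
from functools import reduce


def nmea_checksum(body: str) -> str:
    """XOR of all characters between '$' and '*', as two uppercase hex digits."""
    return f"{reduce(lambda acc, ch: acc ^ ord(ch), body, 0):02X}"


def parse_gprmc(sentence: str) -> dict:
    """Validate a GPRMC sentence and return its UTC time, status and date fields."""
    if not sentence.startswith("$") or "*" not in sentence:
        raise ValueError("not an NMEA sentence")
    body, checksum = sentence[1:].strip().split("*", 1)
    if nmea_checksum(body) != checksum.upper():
        raise ValueError("checksum mismatch")
    fields = body.split(",")
    if fields[0] != "GPRMC":
        raise ValueError("not a GPRMC sentence")
    # Field 1 is hhmmss UTC, field 2 is status (A = valid), field 9 is ddmmyy.
    return {"utc_time": fields[1], "status": fields[2], "date": fields[9]}


# Illustrative sentence; the checksum is computed here rather than hard-coded.
body = "GPRMC,123519,A,4807.038,N,01131.000,E,022.4,084.4,230394,003.1,W"
sentence = f"${body}*{nmea_checksum(body)}"
fix = parse_gprmc(sentence)
```

The PPS edge marks the start of each second, and the GPRMC message that follows labels which second it was.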

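HW setup: LiDAR -> GNSS/IMU calibration

Purpose: unify the coordinate systems (this is how the extrinsic parameters are obtained, in preparation for later data fusion). See the separate blog post for details.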
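What the extrinsic parameters are used for can be shown with a small sketch: a 4x4 homogeneous transform maps a point measured in the LiDAR frame into the IMU frame. The yaw angle and translation below are hypothetical values, not calibration results, and the transform is restricted to a rotation about the z axis to keep the example short.

```python
import math


def make_extrinsic(yaw_deg, translation):
    """4x4 homogeneous transform: rotation about z by yaw, followed by a translation."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(extrinsic, point):
    """Map a LiDAR-frame point (x, y, z) into the IMU frame."""
    homogeneous = (*point, 1.0)
    return tuple(
        sum(row[i] * homogeneous[i] for i in range(4)) for row in extrinsic[:3]
    )


# Hypothetical extrinsics: LiDAR yawed 90 deg and mounted 1.5 m above the IMU.
lidar_to_imu = make_extrinsic(90.0, (0.0, 0.0, 1.5))
p_imu = transform_point(lidar_to_imu, (10.0, 0.0, 0.0))
```

A real calibration result also carries roll and pitch, usually as a quaternion or a full rotation matrix.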

Apollo 2.0

Hardware installation:

https://github.com/ApolloAuto/apollo/blob/master/docs/quickstart/apollo_2_0_hardware_system_installation_guide_v1.md

Addin

+ LiDAR: Hesai's Pandora (40-line) can also be used

Installation: https://github.com/ApolloAuto/apollo/blob/master/docs/specs/Lidar/Hesai_Pandora_Installation_Guide.md

The 40 line LiDAR system Pandora

Key Features:

- 40 Channels

- 200m range (20% reflectivity)

- 720 kHz measuring frequency

- 360° Horizontal FOV

- 23° Vertical FOV (-16° to 7°)

- 0.2° angular resolution (azimuth)

- <2cm accuracy

- Vertical Resolution: 0.33° ( from -6° to +2°); 1° (from -16° to -6°, +2° to +7°)

- User selectable frame rate

- 360° surrounding view with 4 mono cameras and long distance front view with 1 color camera

Webpage for Hesai Pandora: http://www.hesaitech.com/pandora.html

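What is special: a 40-line laser integrated with four surround-view cameras (129 deg each, 360 deg in total) plus one 52 deg front long-range camera (FLC). This is fusion at the hardware level, possibly for lower latency and higher efficiency. It is a one-box solution: Baidu provides the image processing and fusion (red in the figure below), while Hesai provides the hardware and the point cloud processing.

Feature: no camera-to-LiDAR calibration is needed, and the timestamps are fully synchronized.

+ Radar: ARS-408

Mounted on the front bumper bracket, tilted upward by no more than 2 deg.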

More information can be found on the product page:

https://www.continental-automotive.com/Landing-Pages/Industrial-Sensors/Products/ARS-408-21

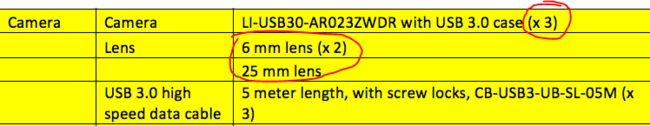

+ Camera: dual lenses, 6 mm x2 (?), or telephoto plus wide-angle; the exact model selection is unclear.

Cameras: Leopard Imaging LI-USB30-AR023ZWDR with USB 3.0 case, FOV 69 deg.

Apollo 2.0 recommends two lenses, 6 mm and 25 mm. With this configuration, obtaining depth information is probably not easy. TBD
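The difference between the two lenses can be made concrete with the pinhole relation FOV = 2 * atan(w / (2 * f)), where w is the sensor width and f the focal length. The sketch below compares 6 mm and 25 mm; the sensor width is an assumed value for illustration and is not taken from the camera's datasheet.

```python
import math


def horizontal_fov_deg(focal_length_mm, sensor_width_mm):
    """Pinhole-model horizontal field of view: 2 * atan(w / (2 * f))."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


SENSOR_WIDTH_MM = 5.8  # assumed value for illustration, not a datasheet figure

fov_6mm = horizontal_fov_deg(6.0, SENSOR_WIDTH_MM)
fov_25mm = horizontal_fov_deg(25.0, SENSOR_WIDTH_MM)
overlap_ratio = fov_25mm / fov_6mm  # share of the wide view also seen by the long lens
```

Because the long lens sees only a narrow slice of what the wide lens sees, the pair behaves as a near/far combination rather than a matched stereo pair, which fits the doubt about depth information.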

The Apollo 2.5 configuration is as follows:

- The camera with the 25 mm focal length should be tilted up by about two degrees. After you make this adjustment, the 25 mm camera should be able to observe the traffic light from 100 m away to the stop line at the intersection.

- The lenses of the cameras, out of the package, are not in the optimal position. Set up the correct position by adjusting the focus of the lens to form a sharp image of a target object at a distance. A good target to image is a traffic sign or a street sign within the FOV. After adjusting the focus, use the lock screw to secure the position of the lens.

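It probably uses a standard 52 deg, 6 mm lens. Frame rate: noted as 25 Hz with a 50 ms period; the two do not match (25 Hz corresponds to 40 ms, and 50 ms to 20 Hz), so this needs checking.

Lenses in the 45-50 mm focal length range are called "standard lenses" because their angle of view is close to that of the human eye.

The front and rear focal lengths of the human eye differ: the front focal length f is 17.1 mm and the rear focal length f′ is 22.8 mm.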

http://www.visionfocus.cn/news/318.html

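Sensor size: as with any other lens, the sensor size must be considered first. The lens's image circle must cover the entire sensor to give the best image quality without shading or vignetting. If the sensor is smaller than the image circle, the field of view looks cropped. The smaller the sensor, the wider the choice of M12 lenses, especially for fisheye lenses with a FOV of up to 180° or 200°. The upper limit on sensor size is usually 2/3" without modification.

Sensor coordinate transformation, i.e. calibration: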

https://github.com/ApolloAuto/apollo/blob/master/docs/quickstart/apollo_2_0_sensor_calibration_guide.md

- Camera -> Camera (dual lenses): the intrinsic parameters of each lens must be calibrated first.

- Camera -> LiDAR. Note: The Camera-to-LiDAR Calibration is more dependent on initial extrinsic values. A large deviation can lead to calibration failure. Therefore, it is essential that you provide the most accurate, initial extrinsic value as conditions allow.

- Radar -> Camera

- IMU -> Vehicle, i.e. LiDAR -> GNSS/IMU

Deep dive: SW architecture:

- Localization: The localization module leverages various information sources such as GPS, LiDAR and IMU to estimate where the autonomous vehicle is located.

- HD-Map: This module is similar to a library. Instead of publishing and subscribing messages, it frequently functions as query engine support to provide ad-hoc structured information regarding the roads. (Does it only provide traffic lights? It provides road structure information.)

- Routing (high level): The routing module tells the autonomous vehicle how to reach its destination via a series of lanes or roads.

- Planning (low level): The planning module plans the spatio-temporal (time and space) trajectory for the autonomous vehicle to take.

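Traffic light detection

Baidu suggests that traffic light detection rely on the HD map, which tells the camera when and where to start detecting traffic light signals in the image. (The same applies to speed limit signs.)

Benefits: control can act early (for example by decelerating in advance), which improves comfort, fuel economy and so on, and because the image detection is targeted, the GPU overhead is also small.

For exception handling, for example when none of the lights are lit or all of them are lit, it is what-you-see-is-what-you-get and depends on the camera.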
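The "when and where" gating can be sketched as a map query: given the vehicle pose, keep only the traffic lights that are ahead and within camera range, and run the detector only for those. `MapSignal` and `signals_to_watch` are hypothetical names, the positions are made up, and the 100 m default echoes the observation distance quoted for the 25 mm camera in these notes. This is not Apollo's HD-map API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MapSignal:
    """A traffic light entry as a hypothetical HD-map query might return it."""
    signal_id: str
    x: float
    y: float


def signals_to_watch(vehicle_xy, heading_rad, map_signals, max_range_m=100.0):
    """Return map signals ahead of the vehicle and within range, nearest first."""
    vx, vy = vehicle_xy
    ahead = []
    for sig in map_signals:
        dx, dy = sig.x - vx, sig.y - vy
        # Projection onto the heading direction; positive means in front of the car.
        along = dx * math.cos(heading_rad) + dy * math.sin(heading_rad)
        dist = math.hypot(dx, dy)
        if along > 0.0 and dist <= max_range_m:
            ahead.append((dist, sig))
    return [sig for _, sig in sorted(ahead, key=lambda item: item[0])]


signals = [
    MapSignal("a", 80.0, 0.0),    # ahead, in range
    MapSignal("b", -20.0, 0.0),   # behind the vehicle
    MapSignal("c", 150.0, 0.0),   # ahead, out of range
    MapSignal("d", 30.0, 5.0),    # ahead, in range, nearest
]
watch = signals_to_watch((0.0, 0.0), 0.0, signals)
```

An empty result means the detector can stay idle, which is where the GPU saving comes from.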

update by luke:

Apollo 2.5

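+ Software upgrade

Map construction.

An additional VLP-16 needs to be installed at the top center (TCL) to detect traffic lights and speed limit signs (red circle in the figure below):

Installation guide: https://github.com/ApolloAuto/apollo/blob/master/docs/specs/Lidar/VLP_Series_Installation_Guide.md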

VLP-16 LiDAR is mounted with an upward tilt of 20±2°

Apollo 3.0

https://github.com/ApolloAuto/apollo/blob/master/docs/quickstart/apollo_3_0_quick_start.md

+ LiDAR: added model support:

- Hesai's Pandora

- Velodyne Puck series (VLP-16 through VLP-32)

Installation guide: https://github.com/ApolloAuto/apollo/blob/master/docs/specs/Lidar/VLP_Series_Installation_Guide.md

- Innovusion LiDAR

+ Camera: added models:

- Argus Camera, three lenses: 6 mm x2 and 25 mm

- Wissen Camera, three lenses: one with a 6 mm lens, one with a 12 mm lens and the last one with 2.33 mm (probably a typo in the doc for 23.3 mm)

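+ Radar: added models:

- Delphi ESR 2.5

- Racobit B01HC: made in China, no information available.

+ Ultrasonic sensor: provides the distance of a detected object through the CAN bus. (Used to implement AEB, though the performance is probably too poor for that.)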

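Deep dive: software architecture

+ Guardian: a new safety module that performs the function of an action center and intervenes when Monitor detects a failure.

Perception

- Asynchronous sensor fusion: because the sensors' frame periods differ (radar 10 ms, camera 33 ms, LiDAR 100 ms), the ability to fuse LiDAR, radar and camera data asynchronously, consolidating all the information and data points, is very important.

- Online pose estimation: estimates the angle change when there are bumps or slopes, so that the sensors move with the car and the angle/pose changes accordingly.

- Whole lane line support: bold line support for long-range accuracy. There are two camera installation options, high and low.

- Visual localization: a camera-based visual localization solution is being tested. TBC?

- CIPV: closest in-path vehicle, the primary target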
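The asynchronous fusion item in the list above raises a practical question: with periods of 10 ms, 33 ms and 100 ms the frames never line up exactly, so measurements have to be associated by timestamp. A minimal sketch of nearest-timestamp association follows. The tolerance and the function names are assumptions, and this is not Apollo's fusion implementation.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the entry in a sorted, non-empty timestamp list closest to t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def associate(lidar_ts, camera_ts, radar_ts, tolerance_ms):
    """For each LiDAR frame, pick the closest camera and radar frame within tolerance."""
    matches = []
    for t in lidar_ts:
        cam = camera_ts[nearest_index(camera_ts, t)]
        rad = radar_ts[nearest_index(radar_ts, t)]
        matches.append((
            t,
            cam if abs(cam - t) <= tolerance_ms else None,
            rad if abs(rad - t) <= tolerance_ms else None,
        ))
    return matches


# Integer millisecond timestamps using the periods quoted in the list.
lidar = list(range(0, 300, 100))      # 0, 100, 200
camera = list(range(0, 300, 33))      # 0, 33, ..., 297
radar = list(range(0, 300, 10))       # 0, 10, ..., 290
pairs = associate(lidar, camera, radar, tolerance_ms=20)
```

Anchoring on the slowest sensor is only one option; a fully asynchronous design updates the tracks whenever any sensor delivers a frame.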

https://github.com/ApolloAuto/apollo/blob/master/docs/specs/Apollo_3.0_Software_Architecture_cn.md
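For the CIPV item in the perception list, the selection logic can be sketched as: keep the obstacles that are ahead of the ego vehicle and inside its lane, then take the nearest one. The `Obstacle` fields and the 1.75 m half lane width (a 3.5 m lane) are assumptions for illustration; Apollo's own CIPV logic works on its tracked objects and detected lane lines.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Obstacle:
    obstacle_id: int
    longitudinal_m: float  # distance ahead of the ego vehicle along its lane
    lateral_m: float       # signed offset from the ego lane center


def select_cipv(
    obstacles: Iterable[Obstacle], half_lane_width_m: float = 1.75
) -> Optional[Obstacle]:
    """Return the nearest obstacle ahead whose center lies within the ego lane."""
    in_path = [
        o for o in obstacles
        if o.longitudinal_m > 0.0 and abs(o.lateral_m) <= half_lane_width_m
    ]
    return min(in_path, key=lambda o: o.longitudinal_m, default=None)


tracked = [
    Obstacle(1, longitudinal_m=15.0, lateral_m=3.2),   # adjacent lane
    Obstacle(2, longitudinal_m=28.0, lateral_m=0.4),   # in path, nearest
    Obstacle(3, longitudinal_m=60.0, lateral_m=-0.2),  # in path, farther
    Obstacle(4, longitudinal_m=-8.0, lateral_m=0.0),   # behind
]
cipv = select_cipv(tracked)
```

On curved roads the lateral offset has to be measured relative to the lane center line rather than the vehicle's straight-ahead axis.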

Apollo 3.5

https://github.com/ApolloAuto/apollo/blob/master/docs/quickstart/apollo_3_5_hardware_system_installation_guide.md

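LiDAR: added support for

- Velodyne VLS-128

- Three 16-line 360 deg LiDARs were added; the installation instructions are not very clear.

- One front radar plus one rear radar

- One front 16-line LiDAR plus two 16-line rear-corner LiDARs

Radar:

Delphi ESR 2.5

Baidu: black boxes; TBA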

- Apollo Sensor Unit (ASU)

- Apollo Extension Unit (AXU)

Deep dive: SW architecture

https://github.com/ApolloAuto/apollo/blob/master/docs/specs/Apollo_3.5_Software_Architecture.md

- Advanced traffic light detection: how?

- Configurable sensor fusion: how?

- Obstacle detection through multiple cameras

- How exactly the sensors are installed and which models are used is TBD: 5 cameras (2 front, 2 on either side and 1 rear) and 2 radars (front and rear) along with 3 16-line LiDARs (2 rear and 1 front) and 1 128-line LiDAR to recognize obstacles and fuse their individual tracks to obtain a final track list. The obstacle sub-module detects, classifies and tracks obstacles. This sub-module also predicts obstacle motion and position information (e.g., heading and velocity). For lane line, we construct lane instances by postprocessing lane parsing pixels and calculate the lane relative location to the ego-vehicle (L0, L1, R0, R1, etc.).

The planning module takes the routing output. Under certain scenarios, the planning module might also trigger a new routing computation by sending a routing request if the current route cannot be faithfully followed.

Control has changed compared with 3.0: several interfaces were added.

Obstacle path prediction may yield multiple paths. These are placed in the free space, with a probability marking each grid cell that may become occupied.

Apollo 5.0

- HW: no changes, same as 3.5

Dynamic Model: Control (= vehicle)-in-loop Simulation (control in the loop with model simulation; does it use the control calibration map obtained earlier?)

- https://github.com/ApolloAuto/apollo/blob/master/docs/specs/dynamic_model.md

- A paper has been written by our engineers on the Dynamic Model which will provide an in-depth explanation of the concepts mentioned above. This paper has already been approved and will be published in arXiv soon. Please stay tuned!