Recognizing Marilyn Monroe and Einstein with a Keras Convolutional Neural Network, Part 2: Using the Pretrained VGG16 Model

Loading VGG16 from Keras

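```python
import os

from keras.applications import VGG16

# Load the VGG16 convolutional base with ImageNet weights.
# include_top=False drops VGG16's original fully connected classifier,
# so that a new classifier for our two classes can be added on top.
conv_base = VGG16(weights='imagenet',
                  include_top=False,
                  input_shape=(128, 128, 3))

conv_base.summary()
```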

Structure of the VGG16 convolutional base (output of `conv_base.summary()`):

```text
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_1 (InputLayer)         (None, 128, 128, 3)       0
_________________________________________________________________
block1_conv1 (Conv2D)        (None, 128, 128, 64)      1792
_________________________________________________________________
block1_conv2 (Conv2D)        (None, 128, 128, 64)      36928
_________________________________________________________________
block1_pool (MaxPooling2D)   (None, 64, 64, 64)        0
_________________________________________________________________
block2_conv1 (Conv2D)        (None, 64, 64, 128)       73856
_________________________________________________________________
block2_conv2 (Conv2D)        (None, 64, 64, 128)       147584
_________________________________________________________________
block2_pool (MaxPooling2D)   (None, 32, 32, 128)       0
_________________________________________________________________
block3_conv1 (Conv2D)        (None, 32, 32, 256)       295168
_________________________________________________________________
block3_conv2 (Conv2D)        (None, 32, 32, 256)       590080
_________________________________________________________________
block3_conv3 (Conv2D)        (None, 32, 32, 256)       590080
_________________________________________________________________
block3_pool (MaxPooling2D)   (None, 16, 16, 256)       0
_________________________________________________________________
block4_conv1 (Conv2D)        (None, 16, 16, 512)       1180160
_________________________________________________________________
block4_conv2 (Conv2D)        (None, 16, 16, 512)       2359808
_________________________________________________________________
block4_conv3 (Conv2D)        (None, 16, 16, 512)       2359808
_________________________________________________________________
block4_pool (MaxPooling2D)   (None, 8, 8, 512)         0
_________________________________________________________________
block5_conv1 (Conv2D)        (None, 8, 8, 512)         2359808
_________________________________________________________________
block5_conv2 (Conv2D)        (None, 8, 8, 512)         2359808
_________________________________________________________________
block5_conv3 (Conv2D)        (None, 8, 8, 512)         2359808
_________________________________________________________________
block5_pool (MaxPooling2D)   (None, 4, 4, 512)         0
=================================================================
```
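The parameter counts and output shapes in this summary follow directly from VGG16's layer configuration. The pure-Python sketch below recomputes them, assuming 3×3 kernels with 'same' padding and stride 1 for every Conv2D layer and 2×2 max pooling with stride 2 (the standard VGG16 configuration). `VGG16_BLOCKS`, `conv2d_params` and `vgg16_conv_base_summary` are illustrative names, not Keras APIs.

```python
# Sketch: recompute the VGG16 conv-base summary by hand.
# Assumes 3x3 kernels, 'same' padding, stride 1 for Conv2D,
# and 2x2 max pooling with stride 2 after each block.

VGG16_BLOCKS = [
    ("block1", [64, 64]),
    ("block2", [128, 128]),
    ("block3", [256, 256, 256]),
    ("block4", [512, 512, 512]),
    ("block5", [512, 512, 512]),
]


def conv2d_params(kernel_size, in_channels, out_channels):
    """Weights (k * k * C_in * C_out) plus one bias per output filter."""
    return kernel_size * kernel_size * in_channels * out_channels + out_channels


def vgg16_conv_base_summary(height=128, width=128, channels=3):
    """Return a list of (layer_name, output_shape, params) rows."""
    rows = []
    for block_name, filters in VGG16_BLOCKS:
        for i, out_channels in enumerate(filters, start=1):
            params = conv2d_params(3, channels, out_channels)
            channels = out_channels
            # 'same' padding with stride 1 keeps height and width unchanged.
            rows.append((f"{block_name}_conv{i}", (height, width, channels), params))
        # 2x2 max pooling with stride 2 halves height and width; no weights.
        height, width = height // 2, width // 2
        rows.append((f"{block_name}_pool", (height, width, channels), 0))
    return rows


if __name__ == "__main__":
    rows = vgg16_conv_base_summary()
    for name, shape, params in rows:
        print(f"{name:<15}{str(shape):<18}{params}")
    print("Total params:", sum(p for _, _, p in rows))  # 14714688
```

Each convolution's cost is dominated by the k × k × C_in × C_out term, which is why the 512-channel layers in blocks 4 and 5 hold most of the roughly 14.7 million weights.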

Adding the fully connected layers

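*Deep Learning with Python* describes two ways of using this convolutional base. The first is to run the images through the VGG16 convolutional layers, save the resulting feature vectors, and then train the fully connected layers on those features, so the expensive convolutional computation only has to be done once. The second is to stack the fully connected layers directly on top of the convolutional base, "freeze" the convolutional layers, and train only the fully connected layers. Here I use the simpler second approach: it needs no separate feature-extraction step, but it is more computationally expensive.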
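The cost difference between the two approaches comes down to how often the convolutional base is evaluated. The framework-free sketch below illustrates this under stated assumptions: `FakeConvBase` is a hypothetical stand-in for VGG16 inference that only counts its calls, and the training loops are placeholders rather than real Keras training. With cached features the base runs once per image; with a frozen base inside the model it runs once per image per epoch.

```python
# Illustrative sketch: count conv-base evaluations under the two approaches.
# FakeConvBase is a hypothetical stand-in for an expensive feature extractor.


class FakeConvBase:
    """Counts how often the 'convolutional base' is run."""

    def __init__(self):
        self.calls = 0

    def __call__(self, image):
        self.calls += 1
        return [float(sum(image))]  # placeholder "feature vector"


def conv_calls_with_cached_features(images, epochs, conv_base):
    # Approach 1: extract features once, then reuse them in every epoch.
    features = [conv_base(img) for img in images]
    for _ in range(epochs):
        for _feat in features:
            pass  # a real script would update the dense classifier here
    return conv_base.calls


def conv_calls_end_to_end(images, epochs, conv_base):
    # Approach 2: the frozen base is part of the model, so every epoch
    # pushes every image through it again.
    for _ in range(epochs):
        for img in images:
            conv_base(img)  # a real script would then update the dense layers
    return conv_base.calls


images = [[1, 2], [3, 4], [5, 6]]
print(conv_calls_with_cached_features(images, 10, FakeConvBase()))  # 3
print(conv_calls_end_to_end(images, 10, FakeConvBase()))            # 30
```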

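```python
from keras import models, layers

model = models.Sequential()
model.add(conv_base)                              # pretrained VGG16 convolutional base
model.add(layers.Flatten())                       # (4, 4, 512) -> 8192 features
model.add(layers.Dropout(0.5))                    # regularization against overfitting
model.add(layers.Dense(256, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))  # probability of class index 1

# Freeze the convolutional base so only the new dense layers are trained.
# This must be set before model.compile() to take effect.
conv_base.trainable = False

model.summary()
```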
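The `model.summary()` output is not shown here, but the size of the new classifier can be worked out from the conv-base output shape (4, 4, 512): `Flatten` yields 4 × 4 × 512 = 8192 values, `Dense(256)` adds 8192 × 256 weights plus 256 biases, and `Dense(1)` adds 256 + 1. The quick check below uses a hypothetical helper `dense_params` and assumes the frozen base contributes only non-trainable parameters.

```python
def dense_params(in_features, units):
    """Weights (in_features * units) plus one bias per unit."""
    return in_features * units + units


# Flatten turns the (4, 4, 512) conv-base output into one long vector.
flat = 4 * 4 * 512
# Dropout has no weights, so only the two Dense layers are trainable.
head = dense_params(flat, 256) + dense_params(256, 1)
# Sum of the Param # column in the VGG16 summary; frozen, so non-trainable.
frozen_base = 14_714_688

print("Flatten size:", flat)                 # 8192
print("Trainable params:", head)             # 2097665
print("Non-trainable params:", frozen_base)  # 14714688
print("Total params:", frozen_base + head)   # 16812353
```

So freezing the base leaves about 2.1 million of the roughly 16.8 million parameters to train.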

Training

```python
from keras import optimizers
from keras.preprocessing.image import ImageDataGenerator

base_dir = r'dir\Desktop\Einstein'
train_dir = os.path.join(base_dir, 'train')
validation_dir = os.path.join(base_dir, 'v')

# learning_rate replaces the older `lr` argument (deprecated in newer Keras).
model.compile(loss='binary_crossentropy',
              optimizer=optimizers.RMSprop(learning_rate=1e-4),
              metrics=['acc'])

# Rescale pixel values from [0, 255] to [0, 1]; no data augmentation.
train_datagen = ImageDataGenerator(rescale=1./255)
validation_datagen = ImageDataGenerator(rescale=1./255)

# Each subdirectory of train_dir / validation_dir is treated as one class.
train_generator = train_datagen.flow_from_directory(
    train_dir,
    target_size=(128, 128),
    batch_size=5,
    class_mode='binary')

validation_generator = validation_datagen.flow_from_directory(
    validation_dir,
    target_size=(128, 128),
    batch_size=5,
    class_mode='binary')

# In TensorFlow 2.x Keras, fit() accepts generators directly;
# older standalone Keras used fit_generator() with the same arguments.
history = model.fit(train_generator,
                    steps_per_epoch=128,
                    epochs=2,
                    validation_data=validation_generator,
                    validation_steps=50)

model.save('EMvgg.h5')
```
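`steps_per_epoch` and `validation_steps` count batches, not images: with `batch_size=5`, 128 training steps draw up to 640 images per epoch and 50 validation steps draw up to 250 images. A common convention is to set the step count to the number of images divided by the batch size, rounded up, so that each epoch covers the data about once. The helpers below are a hypothetical sketch, and the image counts in the last two lines are made-up placeholders, since the dataset size is not given in this post.

```python
import math


def steps_for(num_images, batch_size):
    """Batches needed to cover num_images once (the last batch may be smaller)."""
    return math.ceil(num_images / batch_size)


def images_per_epoch(steps, batch_size):
    """Upper bound on images drawn per epoch: steps * batch_size."""
    return steps * batch_size


# Settings used in the training script above
print(images_per_epoch(128, 5))  # 640 (training)
print(images_per_epoch(50, 5))   # 250 (validation)

# Hypothetical dataset sizes, for illustration only
print(steps_for(400, 5))         # 80
print(steps_for(102, 5))         # 21
```

If `steps_per_epoch × batch_size` exceeds the number of training images, the generator simply loops, so one "epoch" then sees some images more than once.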

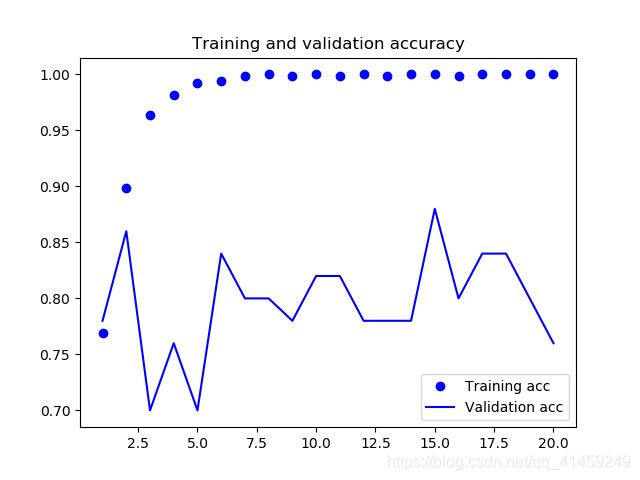

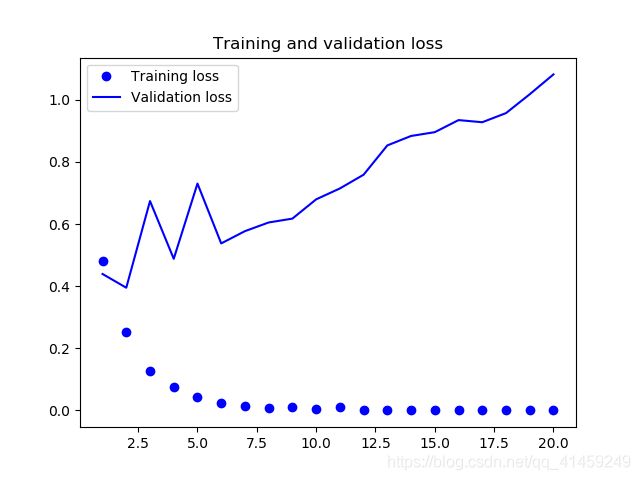

```python
# Plot the training curves
import matplotlib.pyplot as plt

acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and validation accuracy')
plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and validation loss')
plt.legend()

plt.show()
```

```text
loss: 0.0015 - acc: 0.9996 - val_loss: 2.8083 - val_acc: 0.7217
```

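As the results show, this model also overfits: training accuracy reaches 0.9996 while validation accuracy is only 0.7217, and the validation accuracy oscillates from epoch to epoch. What we care about is the model's ability to generalize, so we can stop training early. The validation accuracy after the second epoch is already quite good, so I stopped training after the second epoch. The validation accuracy reached 86%, an improvement of 15% over the network I built myself in Part 1.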

```text
loss: 0.2335 - acc: 0.9016 - val_loss: 0.3862 - val_acc: 0.8600
```
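Instead of rerunning training with a fixed `epochs=2`, the best epoch can be read off the `history.history` dict returned by training (keys such as `'val_loss'` and `'val_acc'`, as used in the plotting code). The sketch below picks the epoch with the best value of a chosen metric; `best_epoch` is a hypothetical helper, and the history values in the usage lines are invented for illustration, not results from this experiment. Keras can also handle this during training with the `keras.callbacks.EarlyStopping` and `keras.callbacks.ModelCheckpoint(..., save_best_only=True)` callbacks.

```python
def best_epoch(history, monitor="val_loss", mode="min"):
    """Return (1-based epoch, value) with the best value of `monitor`.

    history: a dict like Keras' history.history, mapping metric names
    to per-epoch lists. Use mode="min" for losses, "max" for accuracies.
    """
    values = history[monitor]
    pick = min if mode == "min" else max
    best_value = pick(values)
    return values.index(best_value) + 1, best_value


# Invented numbers for illustration only (not results from this experiment)
toy_history = {
    "val_loss": [0.55, 0.41, 0.63, 0.97],
    "val_acc": [0.77, 0.84, 0.79, 0.73],
}
print(best_epoch(toy_history))                                  # (2, 0.41)
print(best_epoch(toy_history, monitor="val_acc", mode="max"))   # (2, 0.84)
```

Monitoring validation loss rather than validation accuracy is often preferred because it changes more smoothly, but both are reasonable choices here.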

Testing

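```python
import numpy as np
from keras import models
from keras.preprocessing import image

# Load the test image at the size the network was trained on: (height, width).
img = image.load_img(r'dir\Einstein\EM.jpg', target_size=(128, 128))
img = np.array(img)

# Apply the same preprocessing as during training (rescale=1./255).
img = img / 255

model = models.load_model(r'dir\Einstein\EMvgg_1.h5')

# Add a batch dimension: (128, 128, 3) -> (1, 128, 128, 3).
img = img.reshape(1, 128, 128, 3)

pre = model.predict(img)  # sigmoid output, shape (1, 1)
print('Prediction:', pre)
```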

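```text
Prediction: [[0.00213499]]
```

Older versions of Keras also provided `predict_classes`, which outputs the class directly. It was removed in TensorFlow 2.6; for a single sigmoid output, thresholding the probability at 0.5 gives the same result: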

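```python
# Equivalent of the removed predict_classes for a sigmoid output:
# probabilities above 0.5 map to class 1, the rest to class 0.
pre = (model.predict(img) > 0.5).astype('int32')
print('Prediction:', pre)
```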

```text
Prediction: [[0]]
```

0 is the label of the Einstein images.
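Why does 0 correspond to Einstein? `flow_from_directory` assigns class indices by sorting the class subdirectory names alphanumerically, and with `class_mode='binary'` the sigmoid output is the probability of the class with index 1. The plain-Python sketch below mimics that mapping; the subdirectory names `einstein` and `monroe` are assumptions, since the actual folder names are not shown. The generator's real mapping can be checked with `train_generator.class_indices`.

```python
def class_indices_from_dirs(subdir_names):
    """Mimic flow_from_directory: sort class folder names, number them from 0."""
    return {name: i for i, name in enumerate(sorted(subdir_names))}


def label_from_sigmoid(probability, class_indices, threshold=0.5):
    """Map a sigmoid output (probability of index 1) to (index, class name)."""
    index = int(probability > threshold)
    index_to_name = {i: name for name, i in class_indices.items()}
    return index, index_to_name[index]


# Hypothetical folder names; check train_generator.class_indices for the real ones.
indices = class_indices_from_dirs(["monroe", "einstein"])
print(indices)                                  # {'einstein': 0, 'monroe': 1}
print(label_from_sigmoid(0.00213499, indices))  # (0, 'einstein')
```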

Conclusion

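Taking both experiments together, we can conclude that the convolutional neural network considers this image to contain more of Einstein's features.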