Building a Book Price Comparison Tool with Python's requests Library

A Book Price Comparison Tool in Python

Contents

- A Book Price Comparison Tool in Python
- 1 Feature Description
- 2 Screenshot
- 3 Code
- 3.1 Dangdang
- 3.2 JD.com
- 3.3 Yihaodian (YHD)
- 3.4 Taobao
- 4 References

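1 Feature Description

The user enters a book's ISBN. The tool then crawls the first page of search results from Dangdang, JD.com, Yihaodian (YHD), and Taobao in turn, and displays the results sorted by price from highest to lowest.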
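The sorting step described above can be sketched as follows. This is a minimal illustration, not the author's code: it assumes each spider produces dicts with a `price` string, as the spiders in section 3 do, and the helper name `sort_by_price` and the rule of placing unparseable prices last are hypothetical choices made for this example.

```python
def sort_by_price(books):
    """Return a new list of book dicts ordered from highest to lowest price."""
    def price_key(book):
        # Scraped prices are strings such as '35.80', so convert before comparing.
        try:
            return float(book['price'])
        except (KeyError, TypeError, ValueError):
            # Hypothetical policy: entries without a usable price sort last.
            return float('-inf')

    return sorted(books, key=price_key, reverse=True)


if __name__ == '__main__':
    # Made-up sample data, only to show the call.
    sample = [
        {'title': 'Book A', 'price': '35.80', 'store': 'Store 1'},
        {'title': 'Book B', 'price': '52.00', 'store': 'Store 2'},
        {'title': 'Book C', 'price': '41.30', 'store': 'Store 3'},
    ]
    for book in sort_by_price(sample):
        print(book['price'], book['title'], book['store'])
```

Converting to `float` matters here: sorting the raw strings would compare them character by character, so '9.90' would rank above '35.80'.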

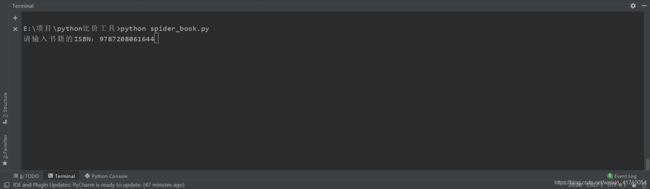

二 效果截图

3 Code

3.1 Dangdang

import requests
from lxml import html


def spider(sn, books=None):
    """Scrape the first page of Dangdang search results for the given ISBN."""
    # Avoid a mutable default argument: create a fresh list per call
    if books is None:
        books = []
    url = 'http://search.dangdang.com/?key={sn}&act=input'.format(sn=sn)
    # Fetch the HTML content
    # Note: do not name this variable html, or it will shadow the imported html module
    html_data = requests.get(url).text
    # Build the element tree used for XPath queries
    selector = html.fromstring(html_data)
    # Locate the list of books
    lis = selector.xpath('//div[@id="search_nature_rg"]/ul/li')
    for li in lis:
        print('---------------------------')
        # Title
        title = li.xpath('./a/@title')[0]
        print(title)
        # Purchase link
        link = li.xpath('./a/@href')[0]
        print(link)
        # Price; fall back to the e-book price node when the regular one is missing
        origin_price = li.xpath('./p[contains(@class,"price")]/span[@class="search_now_price"]/text()')
        if not origin_price:
            origin_price = li.xpath('./div[contains(@class,"ebook_buy")]/p[contains(@class,"price")]/span/text()')
        price = origin_price[0].replace('¥', '')
        print(price)
        # Seller; listings without a shop name are treated as sold by Dangdang itself
        store = li.xpath('./p[@class="search_shangjia"]/a[@name="itemlist-shop-name"]/text()')
        if not store:
            store = ['当当自营']  # "Dangdang self-operated"
        print(store[0])
        books.append({
            'title': title,
            'link': link,
            'price': price,
            'store': store[0]
        })
    return books


if __name__ == '__main__':
    sn = 9787208061644
    spider(sn)
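The Dangdang spider cleans the price with a single `replace('¥', '')`. As a hedged alternative, the sketch below pulls the first number out of the price text with a regular expression, which also copes with the full-width yen sign, surrounding whitespace, and thousands separators. The helper name `parse_price` is hypothetical and not part of the original code.

```python
import re


def parse_price(text):
    """Return the first decimal number found in a price string, or None."""
    # Drop thousands separators so '1,299.00' is read as a single number.
    cleaned = text.replace(',', '')
    match = re.search(r'\d+(?:\.\d+)?', cleaned)
    return float(match.group()) if match else None


if __name__ == '__main__':
    for raw in ['¥35.80', '￥1,299.00', ' 12 ', 'no price']:
        print(repr(raw), '->', parse_price(raw))
```

Returning `None` instead of raising lets the caller decide whether to skip a listing that has no readable price.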

3.2 JD.com

import requests
from lxml import html


def spider(sn, books=None):
    """Scrape the first page of JD.com search results for the given ISBN."""
    # Avoid a mutable default argument: create a fresh list per call
    if books is None:
        books = []
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36',
    }
    url = 'https://search.jd.com/Search?keyword={sn}'.format(sn=sn)
    # Fetch the page and decode it explicitly as UTF-8
    html_data = requests.get(url, headers=headers).content.decode('utf-8')
    selector = html.fromstring(html_data)
    # Locate the list of books
    lis = selector.xpath('//div[@id="J_goodsList"]/ul/li')
    for li in lis:
        print('---------------------------')
        # Title
        title = li.xpath('./div/div[@class="p-name"]/a/em/text()')[0]
        print(title)
        # Purchase link (the href lacks a scheme, so prepend "https:")
        link = li.xpath('./div/div[@class="p-img"]/a/@href')[0]
        print('https:' + link)
        # Price
        price = li.xpath('./div/div[@class="p-price"]//i/text()')[0].replace('¥', '')
        print(price)
        # Seller; '自营' means "self-operated", i.e. sold by JD itself
        store = li.xpath('./div/div[@class="p-icons"]/i[1]/text()')  # note: XPath indices start at 1
        if store != ['自营']:
            store = li.xpath('./div/div[@class="p-shopnum"]/a/@title')
        print(store[0])
        books.append({
            'title': title,
            'link': 'https:' + link,
            'price': price,
            'store': store[0]
        })
    return books


if __name__ == '__main__':
    sn = 9787115428028
    spider(sn)
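The JD.com spider builds absolute links by prepending `'https:'` to a scheme-less `href`. A slightly more general option, sketched here under the assumption that links may arrive as scheme-less, relative, or already absolute, is to resolve them against the search page URL with the standard library's `urljoin`. The helper name `absolute_link` and its default base URL are illustrative only.

```python
from urllib.parse import urljoin


def absolute_link(href, base='https://search.jd.com/'):
    """Resolve an href taken from a search page into an absolute URL."""
    # urljoin keeps absolute URLs unchanged, fills in the scheme for
    # '//host/path' links, and resolves relative paths against the base.
    return urljoin(base, href)


if __name__ == '__main__':
    print(absolute_link('//item.jd.com/12345.html'))
    print(absolute_link('https://item.jd.com/12345.html'))
```

This avoids producing a malformed link such as `https:https://...` if a page ever returns a link that already includes the scheme.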

3.3 Yihaodian (YHD)

import requests
from lxml import html


def spider(sn, books=None):
    """Scrape the first page of Yihaodian (YHD) search results for the given ISBN."""
    # Avoid a mutable default argument: create a fresh list per call
    if books is None:
        books = []
    url = 'https://search.yhd.com/c0-0/k{0}'.format(sn)
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36',
    }
    # Fetch the page and decode it explicitly as UTF-8
    html_data = requests.get(url, headers=headers).content.decode('utf-8')
    selector = html.fromstring(html_data)
    # Locate the list of search results
    divs = selector.xpath('//div[@id="itemSearchList"]/div')
    for div in divs:
        print('---------------------------')
        # Title
        title = div.xpath('./div/p[contains(@class,"proName")]/a/@title')[0]
        print(title)
        # Purchase link (the href lacks a scheme, so prepend "https:")
        link = div.xpath('./div/p[contains(@class,"proName")]/a/@href')[0]
        print('https:' + link)
        # Price
        price = div.xpath('./div/p[@class="proPrice"][1]/em/@yhdprice')[0]
        print(price)
        # Seller; '自营' means "self-operated", i.e. sold by YHD itself
        store = div.xpath('./div/p[contains(@class,"searh_shop_storeName")]/span/text()')
        if store != ['自营']:
            store = div.xpath('./div/p[contains(@class,"searh_shop_storeName")]/a/@title')
        print(store[0])
        books.append({
            'title': title,
            'link': 'https:' + link,
            'price': price,
            'store': store[0]
        })
    return books


if __name__ == '__main__':
    sn = 9787115428028
    spider(sn)
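All three spiders above index XPath results with `[0]`, which raises `IndexError` as soon as a listing lacks the expected node. One possible guard, shown here as a sketch with the hypothetical helper name `first`, is to take the first non-blank result and fall back to a default otherwise.

```python
def first(results, default=None):
    """Return the first non-blank item of an XPath result list, stripped.

    `results` is expected to be the list of strings that an XPath text or
    attribute query returns; `default` is returned when nothing usable is found.
    """
    for item in results:
        text = str(item).strip()
        if text:
            return text
    return default


if __name__ == '__main__':
    # With lxml this would be called as, for example:
    #   title = first(li.xpath('./a/@title'), default='(no title)')
    print(first(['  Some title  ']))
    print(first([], default='(no title)'))
```

A spider can then skip or flag a listing when `first(...)` returns the default, rather than aborting the whole crawl on one malformed item.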

3.4 Taobao

Remember to add your cookie information.
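One way to supply the cookie without pasting it into the source file is to read it from an environment variable. The sketch below is only an assumption about how this could be organized: the variable name `TAOBAO_COOKIE` and the helper `build_headers` are invented for this example and do not appear in the original code.

```python
import os


def build_headers(user_agent, cookie=None):
    """Build request headers, taking the cookie from the environment if not given."""
    if cookie is None:
        # TAOBAO_COOKIE is a hypothetical variable name chosen for this sketch.
        cookie = os.environ.get('TAOBAO_COOKIE', '')
    if not cookie:
        raise ValueError('No cookie supplied: pass one in or set TAOBAO_COOKIE')
    return {
        'User-Agent': user_agent,
        'cookie': cookie,
    }


if __name__ == '__main__':
    # The cookie value here is a placeholder, not a working session cookie.
    headers = build_headers('Mozilla/5.0', cookie='name=value')
    print(headers)
```

Failing early with a clear error is a deliberate choice here, since a request sent with an empty cookie is unlikely to return the data the spider expects.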

import requests
import json
import re
import random


def spider(sn, books=None):
    # Avoid a mutable default argument: create a fresh list per call
    if books is None:
        books = []
    DATA = []
    url = 'https://s.taobao.com/search?q={0}&imgfile=&js=1&stats_click=search_radio_all%3A1&initiative_id=staobaoz_20191027&ie=utf8'.format(sn)
    # Pool of User-Agent strings; one is picked at random for each request
    user_agents = [
        "Mozilla/5.0 (iPod; U; CPU iPhone OS 4_3_2 like Mac OS X; zh-cn) AppleWebKit/533.17.9 (KHTML, like Gecko) Version/5.0.2 Mobile/8H7 Safari/6533.18.5",
        "Mozilla/5.0 (iPhone; U; CPU iPhone OS 4_3_2 like Mac OS X; zh-cn) AppleWebKit/533.17.9 (KHTML, like Gecko) Version/5.0.2 Mobile/8H7 Safari/6533.18.5",
        "MQQBrowser/25 (Linux; U; 2.3.3; zh-cn; HTC Desire S Build/GRI40;480*800)",
        "Mozilla/5.0 (Linux; U; Android 2.3.3; zh-cn; HTC_DesireS_S510e Build/GRI40) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
        "Mozilla/5.0 (SymbianOS/9.3; U; Series60/3.2 NokiaE75-1 /110.48.125 Profile/MIDP-2.1 Configuration/CLDC-1.1 ) AppleWebKit/413 (KHTML, like Gecko) Safari/413",
        "Mozilla/5.0 (iPad; U; CPU OS 4_3_3 like Mac OS X; zh-cn) AppleWebKit/533.17.9 (KHTML, like Gecko) Mobile/8J2",
        "Mozilla/5.0 (Windows NT 5.2) AppleWebKit/534.30 (KHTML, like Gecko) Chrome/12.0.742.122 Safari/534.30",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_2) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.202 Safari/535.1",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_2) AppleWebKit/534.51.22 (KHTML, like Gecko) Version/5.1.1 Safari/534.51.22",
        "Mozilla/5.0 (iPhone; CPU iPhone OS 5_0 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Version/5.1 Mobile/9A5313e Safari/7534.48.3",
        "Mozilla/5.0 (iPhone; CPU iPhone OS 5_0 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Version/5.1 Mobile/9A5313e Safari/7534.48.3",
        "Mozilla/5.0 (iPhone; CPU iPhone OS 5_0 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Version/5.1 Mobile/9A5313e Safari/7534.48.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.202 Safari/535.1",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows Phone OS 7.5; Trident/5.0; IEMobile/9.0; SAMSUNG; OMNIA7)",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0; XBLWP7; ZuneWP7)",
        "Mozilla/5.0 (Windows NT 5.2) AppleWebKit/534.30 (KHTML, like Gecko) Chrome/12.0.742.122 Safari/534.30",
        "Mozilla/5.0 (Windows NT 5.1; rv:5.0) Gecko/20100101 Firefox/5.0",
        "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.2; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729; .NET4.0C)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729; .NET4.0C)",
        "Mozilla/4.0 (compatible; MSIE 60; Windows NT 5.1; SV1; .NET CLR 2.0.50727)",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E)",
        "Opera/9.80 (Windows NT 5.1; U; zh-cn) Presto/2.9.168 Version/11.50",
        "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1)",
        "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; .NET CLR 2.0.50727; .NET CLR 3.0.04506.648; .NET CLR 3.5.21022; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729; .NET4.0C)",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/533.21.1 (KHTML, like Gecko) Version/5.0.5 Safari/533.21.1",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; ) AppleWebKit/534.12 (KHTML, like Gecko) Maxthon/3.0 Safari/534.12",
        "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 2.0.50727; TheWorld)"
    ]
    headers = {
        'User-Agent': random.choice(user_agents),
        'referer': 'https://s.taobao.com/search?q=9787115428028&imgfile=&js=1&stats_click=search_radio_all%3A1&initiative_id=staobaoz_20191027&ie=utf8',
        'cookie': ''  # put your cookie string here
    }
    # requests matches proxies by lowercase URL scheme, so this entry only
    # applies to http:// URLs; add an 'https' entry to proxy the search URL above
    proxy = {
        'http': 'http://139.199.19.174:8114'
    }
    html_data = requests.get(url, headers=headers, proxies=proxy).text
    print(html_data)
    # f = open('./static/test.html', 'r', encoding='utf-8')
    # html_data = f.read()
    # The search data is embedded in the page as a JavaScript assignment to g_page_config
    content = re.findall(r'g_page_config = (.*?)g_srp_loadCss', html_data, re.S)[0]
    # Trim whitespace and drop the last character so that only the JSON text remains
    content = content.strip()[:-1]
    # Parse the JSON string into a dict
    content = json.loads(content)
    # The path below was found by inspecting the JSON with an online viewer
    data_list = content['mods']['itemlist']['data']['auctions']
    # Extract the fields
    for item in data_list:
        temp = {
            'title': re.findall('(.*?)

4 References

- imooc (慕课网): 手把手教你把Python应用到实际开发 (Hands-On: Applying Python to Real-World Development)