PyTorch Study Notes: Data Normalization (8)

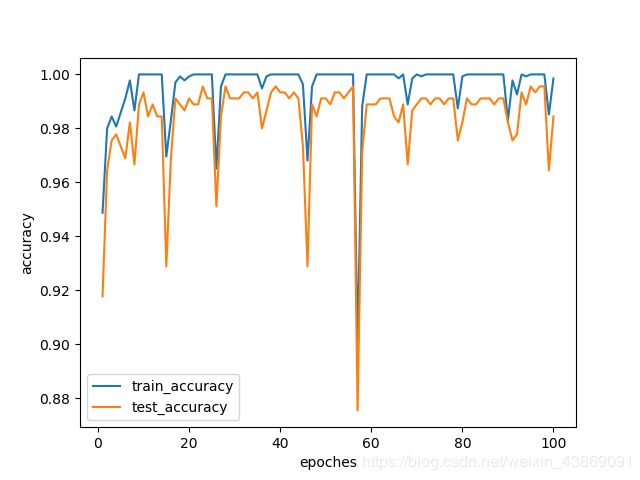

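Whoa! We were so busy tuning the architecture that we completely forgot to normalize the data! Some of the hyperparameters tuned earlier will need to be re-tuned now that the inputs are normalized, but that's fine; the results shouldn't be too bad. The training results are shown in the figure above.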

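Not bad: after 20 epochs, the test accuracy is above 99% in almost every epoch. Next time we'll try data augmentation on this tiny dataset and see whether the accuracy can be pushed any higher.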
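For reference, the normalization itself is a single division. The sketch below is a minimal illustration, assuming the 0..16 pixel range of sklearn's `load_digits`; the helper name `scale_to_unit` and the synthetic `raw` array are made up for this example and do not appear in the code that follows.

```python
import numpy as np

# Hypothetical helper (not from the original post): one shared scaling
# function so that the train and test splits are normalized identically.
def scale_to_unit(X, max_value=16.0):
    """Map raw pixel intensities in [0, max_value] to float32 values in [0, 1]."""
    return np.asarray(X, dtype=np.float32) / max_value

# Synthetic stand-in for two flattened 8x8 digit images (64 values each),
# using the 0..16 intensity range of load_digits.
raw = np.array([[0, 4, 8, 16] * 16, [16, 12, 2, 0] * 16])
scaled = scale_to_unit(raw)
print(scaled.shape, scaled.min(), scaled.max())
```

Routing both splits through one function like this is one way to avoid using different scaling constants for training and test data.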

The code is attached below:

import numpy as np
import matplotlib.pyplot as plt
import torch
import torch as t
import torch.nn as nn
import torch.utils.data as Data
from PIL import Image
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from torchsummary import summary
from torchvision.transforms import transforms

class train_mini_mnist(t.utils.data.Dataset):
    def __init__(self, transform=None):
        self.X, self.y = load_digits(return_X_y=True)
        # load_digits pixels are integers in 0..16; scale them to [0, 1]
        # with the same constant that test_mini_mnist uses
        self.X = self.X / 16.
        self.X_train, self.X_test, self.y_train, self.y_test = train_test_split(self.X, self.y, random_state=0)
        self.transform = transform

    def __getitem__(self, index):
        if self.transform is None:
            return t.tensor(self.X_train[index].reshape(1, 8, 8), dtype=torch.float32), self.y_train[index]
        else:
            img, target = self.X_train[index].reshape(8, 8), int(self.y_train[index])
            # A PIL 'L' image needs uint8 data, so rescale [0, 1] to 0..255 first;
            # ToTensor() in the transform then maps it back to float32 in [0, 1]
            img = Image.fromarray(np.uint8(np.round(img * 255)))
            img = self.transform(img)
            return img, target

    def __len__(self):
        return len(self.y_train)

class test_mini_mnist(t.utils.data.Dataset):
    def __init__(self):
        self.X, self.y = load_digits(return_X_y=True)
        self.X = self.X / 16.  # scale the 0..16 pixel values to [0, 1]
        # same random_state as train_mini_mnist, so the split is identical
        self.X_train, self.X_test, self.y_train, self.y_test = train_test_split(self.X, self.y, random_state=0)

    def __getitem__(self, index):
        return t.tensor(self.X_test[index].reshape(1, 8, 8), dtype=torch.float32), self.y_test[index]

    def __len__(self):
        return len(self.y_test)

BATCH_SIZE=4
LEARNING_RATE=3e-3
EPOCHES=100

train_data=train_mini_mnist(transform=transforms.Compose([
    #transforms.RandomRotation(15),
    transforms.ToTensor()
    #transforms.ColorJitter(brightness=1, contrast=0.1, hue=0.5)
]))
#train_data=train_mini_mnist()
test_data=test_mini_mnist()

train_loader = Data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)
test_loader = Data.DataLoader(dataset=test_data, batch_size=BATCH_SIZE, shuffle=True)

class SimpleNet(nn.Module):
    def __init__(self):
        super(SimpleNet, self).__init__()
        self.conv1 = nn.Sequential(  # input: (1, 8, 8)
            nn.Conv2d(in_channels=1, out_channels=4, kernel_size=3, stride=1, padding=1),  # (4, 8, 8)
            nn.BatchNorm2d(4),
            nn.Conv2d(in_channels=4, out_channels=8, kernel_size=3, stride=1, padding=1),  # (8, 8, 8)
            nn.BatchNorm2d(8),
            nn.ReLU(),  # (8, 8, 8)
            nn.MaxPool2d(kernel_size=2)  # (8, 4, 4)
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(in_channels=8, out_channels=16, kernel_size=3, stride=1, padding=1),  # (16, 4, 4)
            nn.BatchNorm2d(16),
            nn.ReLU(),  # (16, 4, 4)
            nn.MaxPool2d(kernel_size=2)  # (16, 2, 2)
        )
        self.fc = nn.Linear(16*2*2, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)  # equivalent to Flatten
        x = self.fc(x)
        return x

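def eval_on_dataloader(name, loader, n_samples):
    """Return the accuracy of the global `net` over `loader`; `name` is only a label."""
    correct = 0
    with torch.no_grad():
        for data in loader:
            images, labels = data
            outputs = net(images)
            # torch.max returns (max values, indices of the max values);
            # the index is the predicted class
            predict_y = torch.max(outputs, dim=1)[1]
            correct += (predict_y == labels).sum().item()
    return correct / n_samples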

def plot_train_and_test_result(train_accs,test_accs):
    epoches=np.arange(1,len(train_accs)+1,dtype=np.int32)
    plt.plot(epoches,train_accs,label="train_accuracy")
    plt.plot(epoches,test_accs,label="test_accuracy")
    plt.xlabel('epoches')
    plt.ylabel('accuracy')
    plt.legend()

net = SimpleNet()
summary(net, (1, 8, 8), device="cpu")  # the model stays on the CPU in this script
loss_fn = nn.CrossEntropyLoss()
optim = torch.optim.Adam(net.parameters(), lr=LEARNING_RATE, weight_decay=3e-4)

for name, parameters in net.named_parameters():
    print(name, ":", parameters.size())

train_accs, test_accs = [], []

for epoch in range(EPOCHES):
    net.train()  # switch to training mode
    for step, data in enumerate(train_loader, start=0):
        images, labels = data
        optim.zero_grad()  # clear the gradients left over from the previous step
        logits = net(images)  # forward pass
        loss = loss_fn(logits, labels.long())  # compute the loss
        loss.backward()  # backpropagate to compute gradients
        optim.step()  # update the parameters
        # text progress bar for the current epoch, followed by the batch loss
        rate = (step+1)/len(train_loader)
        a = "*" * int(rate * 50)
        b = "." * int((1 - rate) * 50)
        print("\rtrain loss: {:^3.0f}%[{}->{}]{:.4f}".format(int(rate*100), a, b, loss.item()), end="")
    print()

    net.eval()  # switch to evaluation mode
    train_acc = eval_on_dataloader("train", train_loader, len(train_data))
    test_acc = eval_on_dataloader("test", test_loader, len(test_data))
    train_accs.append(train_acc)
    test_accs.append(test_acc)
    print("epoch:", epoch, "train_acc:", train_acc, " test_acc:", test_acc)

print('Finished Training')
plot_train_and_test_result(train_accs, test_accs)
plt.show()
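As a side note, the shape comments in `SimpleNet` and the `16*2*2` input size of the final `nn.Linear` can be checked by hand with the standard output-size formulas for convolution and pooling. The sketch below is plain Python and assumes dilation 1 and a pooling stride equal to the kernel size (the `nn.MaxPool2d` default); the helper names `conv2d_out` and `maxpool2d_out` are made up for this example.

```python
# Hypothetical helpers (not part of the listing above) that trace the
# spatial size of an 8x8 input through the layers of SimpleNet.
def conv2d_out(size, kernel_size, stride=1, padding=0):
    """Output side length of a square convolution with dilation 1."""
    return (size + 2 * padding - kernel_size) // stride + 1

def maxpool2d_out(size, kernel_size):
    """Output side length of max pooling whose stride equals its kernel size."""
    return (size - kernel_size) // kernel_size + 1

size = 8                                                    # input: (1, 8, 8)
size = conv2d_out(size, kernel_size=3, stride=1, padding=1)  # (4, 8, 8)
size = conv2d_out(size, kernel_size=3, stride=1, padding=1)  # (8, 8, 8)
size = maxpool2d_out(size, kernel_size=2)                    # (8, 4, 4)
size = conv2d_out(size, kernel_size=3, stride=1, padding=1)  # (16, 4, 4)
size = maxpool2d_out(size, kernel_size=2)                    # (16, 2, 2)

flat_features = 16 * size * size  # channels * height * width fed to the linear layer
print(size, flat_features)        # prints: 2 64
```

With 3x3 kernels, stride 1 and padding 1, each convolution keeps the spatial size, so only the two pooling layers shrink the 8x8 input down to 2x2.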