No preamble, straight to the code.

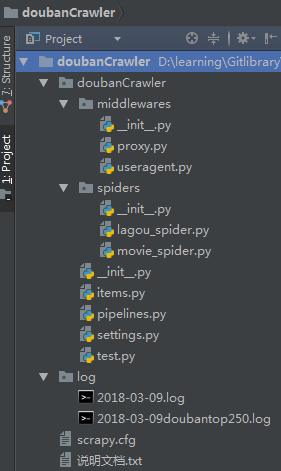

Directory structure

items.py

    import scrapy


    class DoubanCrawlerItem(scrapy.Item):
        # Movie title
        movieName = scrapy.Field()
        # Douban movie id
        movieId = scrapy.Field()
        # Poster URL
        img = scrapy.Field()
        # URL of the movie detail page (the spider and the pipeline both use the key 'website')
        website = scrapy.Field()
        # Rating
        data_score = scrapy.Field()
        # Runtime
        data_duration = scrapy.Field()
        # Release date
        data_release = scrapy.Field()
        # Director
        data_director = scrapy.Field()
        # Cast
        data_actors = scrapy.Field()
        # Country/region of production
        data_region = scrapy.Field()
        # Screenwriter
        data_attrs = scrapy.Field()
        # Number of people who rated the movie
        data_number = scrapy.Field()
        # Synopsis
        introduction = scrapy.Field()
        # Genre
        movie_type = scrapy.Field()
        # Language
        movie_language = scrapy.Field()
        # Also known as
        also_called = scrapy.Field()
        # Ranking
        movie_ranking = scrapy.Field()

# This is the key part

spiders

movie_spider.py

    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule
    from selenium import webdriver
    from selenium.webdriver.common.by import By

    from ..items import DoubanCrawlerItem

    # Cookies and headers added to the initial requests (fill in your own)
    cookies = {}
    headers = {}

    # Maps each label in the info block to the item field it fills
    INFO_FIELDS = {
        '导演': 'data_director',
        '编剧': 'data_attrs',
        '主演': 'data_actors',
        '类型': 'movie_type',
        '制片国家/地区': 'data_region',
        '语言': 'movie_language',
        '上映日期': 'data_release',
        '片长': 'data_duration',
        '又名': 'also_called',
    }


    class DoubanspiderSpider(CrawlSpider):
        # Spider name
        name = 'douban'

        # Crawl rule: extract the movie detail page URLs from each response.
        # follow=True also follows matching links found on the detail pages themselves.
        rules = (
            Rule(LinkExtractor(allow=r'^https://movie\.douban\.com/subject/\d+/$'),
                 callback='parse_item', follow=True),
        )

        # Build the initial requests: 10 list pages, 25 movies each
        def start_requests(self):
            for i in range(0, 250, 25):
                url = 'https://movie.douban.com/top250?start={}&filter='.format(i)
                yield scrapy.Request(url, cookies=cookies, headers=headers)

        # Scrape and clean the data of one detail page
        def parse_item(self, response):
            info_url = response.url
            item = self.selenium_js(info_url)
            item['website'] = info_url
            # The id is the last path segment of .../subject/<id>/
            item['movieId'] = info_url.rstrip('/').split('/')[-1]
            movie_introduction = response.xpath('//*[@id="link-report"]/span[1]/text()').getall()
            item['introduction'] = ''.join(
                s.strip().replace('\n', '').replace('\t', '') for s in movie_introduction)
            # .get() returns a single string (or None) instead of a list
            item['movie_ranking'] = response.xpath('//*[@id="content"]/div[1]/span[1]/text()').get()
            item['movieName'] = response.xpath('//*[@id="content"]/h1/span[1]/text()').get()
            img = response.xpath('//*[@id="mainpic"]/a/img/@src').get()
            if img:
                item['img'] = img.replace('.webp', '.jpg')
            self.logger.info(item)
            yield item

        # Use selenium to scrape the dynamically rendered data
        def selenium_js(self, info_url):
            item = DoubanCrawlerItem()
            driver = webdriver.Chrome()
            try:
                driver.implicitly_wait(10)
                driver.maximize_window()
                driver.get(info_url)
                data = driver.find_element(By.XPATH, '//div[@class="subject clearfix"]/div[2]').text
                for line in data.split('\n'):
                    # Each line looks like "label: value"; lines without a colon are skipped
                    label, sep, value = line.partition(':')
                    field = INFO_FIELDS.get(label.strip())
                    if sep and field:
                        item[field] = value.strip()
                item['data_number'] = driver.find_element(
                    By.XPATH, '//*[@id="interest_sectl"]/div[1]/div[2]/div/div[2]/a/span').text
            finally:
                # quit() closes the window and ends the driver process
                driver.quit()
            return item
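
The label matching in `selenium_js` can be tried out on its own, without a browser. Below is a minimal sketch, assuming the info block text has one `label: value` pair per line. The helper name `parse_info_block`, the shortened label map and the sample text are made up for illustration and are not part of the project.

```python
# Hypothetical helper for illustration; not part of the original project.
# Only a subset of the labels is listed here to keep the sketch short.
INFO_FIELDS = {
    '导演': 'data_director',
    '类型': 'movie_type',
    '片长': 'data_duration',
    '又名': 'also_called',
}


def parse_info_block(text):
    """Turn the 'label: value' lines of the info block into a dict of item fields."""
    fields = {}
    for line in text.split('\n'):
        # Split on the first colon only, because a value may contain colons itself
        label, sep, value = line.partition(':')
        field = INFO_FIELDS.get(label.strip())
        if sep and field:
            fields[field] = value.strip()
    return fields


# Made-up sample text in the assumed layout
sample = '导演: Some Director\n类型: 剧情 / 犯罪\n片长: 142分钟\nIMDb: tt0000000'
print(parse_info_block(sample))
```

Labels that are not in the map (the `IMDb` line in the sample) are simply skipped, so an unexpected line on the page does not raise an error.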

pipelines.py

    import logging

    import pymysql
    from scrapy.exceptions import DropItem

    logger = logging.getLogger(__name__)


    class DoubancrawlerPipeline:
        def process_item(self, item, spider):
            # Drop the item if it has no poster URL
            if not item.get('img'):
                raise DropItem('Missing field: {}'.format('img'))
            # Drop the item if it has no movie title
            if not item.get('movieName'):
                raise DropItem('Missing field: {}'.format('movieName'))
            if not item.get('also_called'):
                item['also_called'] = ''
            return item


    class MysqlPipeline(object):
        # Columns of `top250` in insert order; each one is also an item key
        columns = ('movieName', 'movieId', 'data_number', 'data_release', 'img',
                   'introduction', 'data_duration', 'data_region', 'data_director',
                   'data_actors', 'data_attrs', 'website', 'movie_ranking',
                   'movie_type', 'movie_language', 'also_called')

        def __init__(self):
            """Initialise the MySQL connection and cursor attributes."""
            self.conn = None
            self.cur = None

        def open_spider(self, spider):
            """Open the MySQL connection."""
            self.conn = pymysql.connect(
                host='localhost',
                port=3306,
                user='root',
                password='xxxxx',
                database='douban',
                charset='utf8mb4',
            )
            # Create the cursor
            self.cur = self.conn.cursor()
            # Uncomment to empty the table before crawling
            # self.delete_data()

        def delete_data(self):
            """Empty the table before saving newly scraped data."""
            self.cur.execute('delete from `top250`')
            self.conn.commit()
            logger.info('Database initialised.')

        def check_data(self, table):
            """Return the set of movie ids already stored in `table`, as strings."""
            self.cur.execute('select `movieId` from `{}`'.format(table))
            return {str(row[0]) for row in self.cur.fetchall()}

        def process_item(self, item, spider):
            """Save the scraped item unless its id is already stored."""
            if spider.name == 'douban':
                id_list = self.check_data('top250')
                # Compare as strings so the check works for int and varchar id columns
                if str(item['movieId']) not in id_list:
                    sql = 'insert into `top250` ({}) values ({})'.format(
                        ', '.join('`{}`'.format(c) for c in self.columns),
                        ', '.join(['%s'] * len(self.columns)))
                    # item.get() yields None (SQL NULL) for fields the page did not provide
                    self.cur.execute(sql, [item.get(c) for c in self.columns])
                    self.conn.commit()
                    logger.info('%s saved.', item['movieName'])
            return item

        def close_spider(self, spider):
            """Close the MySQL cursor and connection."""
            self.cur.close()
            self.conn.close()
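
`check_data` reloads every stored id for each item. An alternative is to let the database enforce uniqueness and skip duplicates itself. The sketch below uses the standard-library `sqlite3` module as a stand-in for MySQL so that it runs without a server. The two-column table and the `save_movie` helper are hypothetical. On MySQL the analogous statement would be `INSERT IGNORE` against a table with a unique key on `movieId`.

```python
import sqlite3


def open_db():
    """Create an in-memory table whose primary key rejects duplicate movie ids."""
    conn = sqlite3.connect(':memory:')
    conn.execute('create table top250 (movieId text primary key, movieName text)')
    return conn


def save_movie(conn, movie_id, name):
    """Insert the row unless movieId is already stored; return True if a row was added."""
    cur = conn.execute(
        'insert or ignore into top250 (movieId, movieName) values (?, ?)',
        (str(movie_id), name))
    conn.commit()
    return cur.rowcount == 1


conn = open_db()
print(save_movie(conn, 1, 'first'))
print(save_movie(conn, 1, 'first again'))
print(conn.execute('select count(*) from top250').fetchone()[0])
```

This trades the per-item `select` for a constraint on the table, so the duplicate check no longer depends on how many rows are stored.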

middlewares

proxy.py

    import logging
    import random
    from collections import defaultdict
    from urllib.request import _parse_proxy

    import requests
    from scrapy.exceptions import NotConfigured

    logger = logging.getLogger(__name__)


    def reform_url(url):
        # Rebuild the proxy URL without the username and password
        proxy_type, *_, hostport = _parse_proxy(url)
        return '{}://{}'.format(proxy_type, hostport)


    class RandomProxyMiddleware:
        # Maximum failures per proxy; a proxy that reaches it is removed from the pool
        max_failed = 3

        def __init__(self, settings):
            # To read the pool from the settings instead:
            # self.proxies = settings.getlist('PROXIES')
            self.proxies = self.choice_proxies()
            if not self.proxies:
                raise NotConfigured('no proxies available')
            # Failure count per proxy; every proxy starts at 0
            self.stats = defaultdict(int)

        def choice_proxies(self):
            """Fetch a fresh proxy list from the proxy API; return [] on failure."""
            self.proxies = []
            # Returns 1 ip
            url = 'a URL that returns proxy IPs'
            # Returns 30 ips
            # url = 'xxxx'
            r = requests.get(url, timeout=10)
            # Assumes the API answers with JSON; safer than eval() on untrusted text
            ip_dict = r.json()
            if ip_dict['code'] == '0':
                for i in ip_dict['msg']:
                    # Assemble a usable proxy URL
                    self.proxies.append('http://{}:{}'.format(i['ip'], i['port']))
                logger.info(self.proxies)
            else:
                logger.warning('Proxy API returned an unexpected status code: %s', ip_dict['code'])
            return self.proxies

        # Only enable the middleware when proxies are switched on
        @classmethod
        def from_crawler(cls, crawler):
            if not crawler.settings.getbool('HTTPPROXY_ENABLED'):
                raise NotConfigured
            return cls(crawler.settings)

        def process_request(self, request, spider):
            # If request.meta has no proxy yet, pick a random one from the pool
            if 'proxy' not in request.meta:
                request.meta['proxy'] = random.choice(self.proxies)

        def remove_proxy(self, cur_proxy):
            # Pool entries may carry credentials, so compare the stripped form
            self.proxies = [p for p in self.proxies if reform_url(p) != cur_proxy]

        def retry_with_new_proxy(self, request):
            # Refill the pool from the API when it has run dry
            if not self.proxies:
                logger.info('Proxy pool is empty, requesting the API again')
                self.choice_proxies()
            if not self.proxies:
                return None
            # dont_filter=True keeps the duplicate filter from dropping the retry
            retry = request.replace(dont_filter=True)
            retry.meta['proxy'] = random.choice(self.proxies)
            return retry

        def process_response(self, request, response, spider):
            # Proxy used for this request
            cur_proxy = request.meta.get('proxy')
            # A status of 400 or above means the response is an error
            if cur_proxy and response.status >= 400:
                # Add 1 to this proxy's failure count
                self.stats[cur_proxy] += 1
                logger.info('%s got a %s response', cur_proxy, response.status)
                # Remove the proxy once it reaches the failure limit
                if self.stats[cur_proxy] >= self.max_failed:
                    self.remove_proxy(cur_proxy)
                    logger.info('%s reached the failure limit, removed from the pool', cur_proxy)
                # Retry this request through another proxy
                return self.retry_with_new_proxy(request) or response
            return response

        def process_exception(self, request, exception, spider):
            cur_proxy = request.meta.get('proxy')
            # On a timeout or a refused connection, remove the proxy
            if cur_proxy:
                self.remove_proxy(cur_proxy)
                logger.info('%s raised an error, removed from the pool', cur_proxy)
            # Retry through another proxy; None leaves the exception to other middlewares
            return self.retry_with_new_proxy(request)
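
The failure bookkeeping behind `max_failed` is easier to follow when it is separated from Scrapy. The sketch below is a standalone version under that assumption. The `ProxyPool` class and the example addresses are hypothetical and do not appear in the project.

```python
import random
from collections import defaultdict


class ProxyPool:
    """Tracks failures per proxy and drops a proxy once it reaches max_failed."""

    def __init__(self, proxies, max_failed=3):
        self.proxies = list(proxies)
        self.max_failed = max_failed
        # Unknown proxies start at 0 failures
        self.failures = defaultdict(int)

    def pick(self):
        """Return a random proxy, or None when the pool is empty."""
        return random.choice(self.proxies) if self.proxies else None

    def report_failure(self, proxy):
        """Count one failure; return True if the proxy was removed from the pool."""
        self.failures[proxy] += 1
        if self.failures[proxy] >= self.max_failed and proxy in self.proxies:
            self.proxies.remove(proxy)
            return True
        return False


# Made-up addresses, for illustration only
pool = ProxyPool(['http://10.0.0.1:8080', 'http://10.0.0.2:8080'], max_failed=2)
pool.report_failure('http://10.0.0.1:8080')
pool.report_failure('http://10.0.0.1:8080')
print(pool.proxies)
```

Because `pick` returns `None` for an empty pool, the caller can decide whether to refill the pool or give up, rather than hitting an `IndexError` from `random.choice`.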

useragent.py

    import faker


    class RandomUserAgentMiddleware(object):
        """Assigns a random user agent to every request."""

        def __init__(self, settings):
            self.faker = faker.Faker()

        @classmethod
        def from_crawler(cls, crawler):
            # Create and return a middleware instance
            return cls(crawler.settings)

        def process_request(self, request, spider):
            # Set the User-Agent field of the request headers
            request.headers['User-Agent'] = self.faker.user_agent()

        def process_response(self, request, response, spider):
            # print(request.headers['User-Agent'])
            return response

settings.py

    import time

    # Whether to use proxies
    HTTPPROXY_ENABLED = True
    # Enable logging
    LOG_ENABLED = True
    # Log file encoding
    LOG_ENCODING = 'utf-8'
    # Log file location, one file per day
    today = time.strftime('%Y-%m-%d')
    LOG_FILE = "./log/{}.log".format(today)
    # Raise the log level
    LOG_LEVEL = 'INFO'
    # Disable redirects
    REDIRECT_ENABLED = False
    # Delay between requests, in seconds
    DOWNLOAD_DELAY = 2

    DOWNLOADER_MIDDLEWARES = {
        'doubanCrawler.middlewares.useragent.RandomUserAgentMiddleware': 543,
        'doubanCrawler.middlewares.proxy.RandomProxyMiddleware': 749,
    }

    ITEM_PIPELINES = {
        'doubanCrawler.pipelines.DoubancrawlerPipeline': 300,
        # 'doubanCrawler.pipelines.RedisPipeline': 301,
        'doubanCrawler.pipelines.MysqlPipeline': 302,
    }
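
`LOG_FILE` points into a `./log` directory. As far as I know, Python's file log handler does not create missing directories, so the directory has to exist before the crawl starts. Below is a minimal sketch of one way to guarantee that. The `build_log_file` helper is hypothetical, and the demo writes into a temporary directory instead of the project folder.

```python
import os
import tempfile
import time


def build_log_file(log_dir='./log'):
    """Create log_dir if it is missing and return the path of today's log file."""
    os.makedirs(log_dir, exist_ok=True)
    today = time.strftime('%Y-%m-%d')
    return os.path.join(log_dir, '{}.log'.format(today))


# Demo in a throwaway directory
demo_dir = os.path.join(tempfile.mkdtemp(), 'log')
print(build_log_file(demo_dir))
```

In settings.py this would be used as `LOG_FILE = build_log_file()`.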

Run: scrapy crawl douban

Result:

That's all. Feedback and corrections are welcome.