Spring Boot + ECharts + HBase: Drawing a Pie Chart from Dynamic Data (Packaging the Spring Boot App and Running It on a Server)

ECharts official website

Integrating ECharts with Spring Boot to draw bar and pie charts from static data

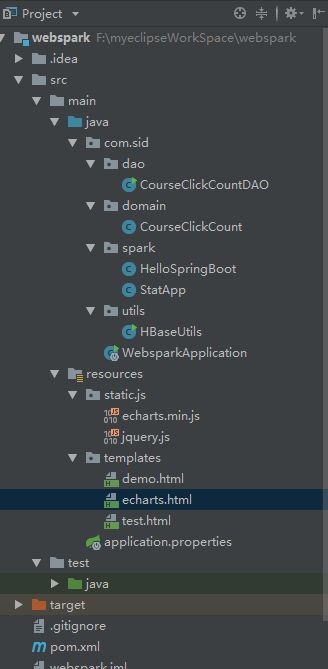

Project directory

The project needs echarts.min.js and jquery.js.

pom.xml

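    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.sid.spark</groupId>
        <artifactId>webspark</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <packaging>jar</packaging>

        <name>webspark</name>
        <description>Demo project for Spring Boot</description>

        <parent>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>2.0.3.RELEASE</version>
        </parent>

        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
            <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
            <java.version>1.8</java.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-web</artifactId>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-test</artifactId>
                <scope>test</scope>
            </dependency>
            <dependency>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-starter-thymeleaf</artifactId>
            </dependency>
            <dependency>
                <groupId>org.apache.hbase</groupId>
                <artifactId>hbase-client</artifactId>
                <version>1.2.0</version>
            </dependency>
            <dependency>
                <groupId>net.sf.json-lib</groupId>
                <artifactId>json-lib</artifactId>
                <version>2.4</version>
                <classifier>jdk15</classifier>
            </dependency>
        </dependencies>

        <build>
            <plugins>
                <plugin>
                    <groupId>org.springframework.boot</groupId>
                    <artifactId>spring-boot-maven-plugin</artifactId>
                </plugin>
            </plugins>
        </build>
    </project>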

CourseClickCountDAO.java

    package com.sid.dao;

    import com.sid.domain.CourseClickCount;
    import com.sid.utils.HBaseUtils;
    import org.springframework.stereotype.Component;

    import java.util.ArrayList;
    import java.util.List;
    import java.util.Map;

    /**
     * Data access layer for the click counts of the hands-on courses.
     */
    @Component
    public class CourseClickCountDAO {

        /**
         * Queries the click counts for one day (the day is the row key prefix, e.g. "20180723").
         */
        public List<CourseClickCount> query(String day) throws Exception {
            List<CourseClickCount> list = new ArrayList<>();

            // Fetch the click count of every course for the given day from the HBase table
            Map<String, Long> map = HBaseUtils.getInstance().query("course_click_spark_streaming", day);

            for (Map.Entry<String, Long> entry : map.entrySet()) {
                CourseClickCount model = new CourseClickCount();
                model.setName(entry.getKey());
                model.setValue(entry.getValue());
                list.add(model);
            }
            return list;
        }

        public static void main(String[] args) throws Exception {
            CourseClickCountDAO dao = new CourseClickCountDAO();
            List<CourseClickCount> list = dao.query("20180723");

            System.out.println("executor for");
            for (CourseClickCount model : list) {
                System.out.println("into for");
                System.out.println(model.getName() + " : " + model.getValue());
            }
            System.out.println("end for");
        }
    }

CourseClickCount.java

    package com.sid.domain;

    /**
     * Entity class holding the click count of one hands-on course.
     * It is a plain data object created with new, so it does not need to be a Spring bean.
     */
    public class CourseClickCount {

        // The fields returned to the front end must be called name and value,
        // because the page reads the data through exactly these two properties.
        private String name;
        private long value;

        public String getName() {
            return name;
        }

        public void setName(String name) {
            this.name = name;
        }

        public long getValue() {
            return value;
        }

        public void setValue(long value) {
            this.value = value;
        }
    }
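The comment in the entity class says the front end expects the properties name and value. As a rough illustration of the payload shape this produces, the sketch below builds the equivalent JSON by hand. The class PieDataJsonSketch and its methods are hypothetical and not part of the project; in the real application Spring Boot serializes the returned list automatically, so nothing like this has to be written.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical illustration only: shows the [{"name":...,"value":...}] shape
// that a list of CourseClickCount objects turns into when returned as JSON.
class PieDataJsonSketch {

    // Escapes backslashes and double quotes so the name stays a valid JSON string.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    // Builds a JSON array with one {"name": ..., "value": ...} object per entry.
    static String toJson(Map<String, Long> counts) {
        StringJoiner items = new StringJoiner(",", "[", "]");
        for (Map.Entry<String, Long> e : counts.entrySet()) {
            items.add("{\"name\":\"" + escape(e.getKey()) + "\",\"value\":" + e.getValue() + "}");
        }
        return items.toString();
    }

    public static void main(String[] args) {
        Map<String, Long> counts = new LinkedHashMap<>();
        counts.put("Storm", 7L);   // made-up sample values
        counts.put("Hadoop", 3L);
        System.out.println(toJson(counts));
    }
}
```

An ECharts pie series accepts an array of objects with name and value as its data, which is why the entity uses exactly these two property names.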

StatApp.java

    package com.sid.spark;

    import com.sid.dao.CourseClickCountDAO;
    import com.sid.domain.CourseClickCount;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RequestMethod;
    import org.springframework.web.bind.annotation.ResponseBody;
    import org.springframework.web.bind.annotation.RestController;
    import org.springframework.web.servlet.ModelAndView;

    import java.util.HashMap;
    import java.util.List;
    import java.util.Map;

    /**
     * Web layer.
     */
    @RestController
    public class StatApp {

        // Course ID -> display name shown in the chart
        private static Map<String, String> courses = new HashMap<>();

        static {
            courses.put("128", "Spark SQL实战");
            courses.put("130", "Hadoop基础");
            courses.put("131", "Storm实战");
            courses.put("146", "Spark Streaming实战");
            courses.put("145", "MapReduce实战");
            courses.put("112", "理论");
        }

        @Autowired
        CourseClickCountDAO courseClickCountDAO;

        // Earlier variant that passed the data to the view as a JSON attribute:
        //
        // @RequestMapping(value = "/course_clickcount_dynamic", method = RequestMethod.GET)
        // public ModelAndView courseClickCount() throws Exception {
        //     ModelAndView view = new ModelAndView("index");
        //     List<CourseClickCount> list = courseClickCountDAO.query("20180723");
        //     for (CourseClickCount model : list) {
        //         model.setName(courses.get(model.getName().substring(9)));
        //     }
        //     JSONArray json = JSONArray.fromObject(list);
        //     view.addObject("data_json", json);
        //     return view;
        // }

        @RequestMapping(value = "/course_clickcount_dynamic", method = RequestMethod.POST)
        @ResponseBody
        public List<CourseClickCount> courseClickCount() throws Exception {
            List<CourseClickCount> list = courseClickCountDAO.query("20180723");
            for (CourseClickCount model : list) {
                // The row key looks like "20180723_131"; substring(9) strips the
                // "yyyyMMdd_" prefix and leaves the course ID.
                model.setName(courses.get(model.getName().substring(9)));
            }
            return list;
        }

        @RequestMapping(value = "/echarts", method = RequestMethod.GET)
        public ModelAndView echarts() {
            return new ModelAndView("echarts");
        }
    }
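The controller turns a row key such as "20180723_131" into a course name with substring(9) and a map lookup, which returns null for a course ID that is not in the map. The following is a minimal sketch of a more defensive version of that step. The class CourseNameResolver and its method are hypothetical, and the English course names are stand-ins for the labels used in StatApp.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper, not part of the original project.
class CourseNameResolver {

    // Course ID -> display name (English stand-ins for the names in StatApp).
    private static final Map<String, String> COURSES = new HashMap<>();

    static {
        COURSES.put("128", "Spark SQL in Action");
        COURSES.put("130", "Hadoop Basics");
        COURSES.put("131", "Storm in Action");
    }

    // Resolves a row key of the assumed form "yyyyMMdd_courseId" to a display name.
    // Falls back to the course ID when the ID is unknown, and to the raw row key
    // when there is no "_" separator followed by an ID.
    static String resolve(String rowKey) {
        int sep = rowKey.indexOf('_');
        if (sep < 0 || sep == rowKey.length() - 1) {
            return rowKey;
        }
        String courseId = rowKey.substring(sep + 1);
        return COURSES.getOrDefault(courseId, courseId);
    }

    public static void main(String[] args) {
        System.out.println(resolve("20180723_131"));
        System.out.println(resolve("20180723_999"));
    }
}
```

Splitting on the separator instead of using a fixed offset keeps the lookup working if the date prefix ever changes length, and the fallback avoids a pie slice with a null label.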

HBaseUtils.java

    package com.sid.utils;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.client.*;
    import org.apache.hadoop.hbase.filter.Filter;
    import org.apache.hadoop.hbase.filter.PrefixFilter;
    import org.apache.hadoop.hbase.util.Bytes;

    import java.io.IOException;
    import java.util.HashMap;
    import java.util.Map;

    /**
     * HBase utility class.
     */
    public class HBaseUtils {

        HBaseAdmin admin = null;
        Configuration conf = null;

        /**
         * Private constructor: loads the required connection parameters.
         */
        private HBaseUtils() {
            conf = new Configuration();
            conf.set("hbase.zookeeper.quorum", "node1:2181,node2:2181,node3:2181");
            conf.set("hbase.rootdir", "hdfs://hadoopcluster/hbase");

            try {
                admin = new HBaseAdmin(conf);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        private static HBaseUtils instance = null;

        public static synchronized HBaseUtils getInstance() {
            if (null == instance) {
                instance = new HBaseUtils();
            }
            return instance;
        }

        /**
         * Returns an HTable instance for the given table name.
         */
        public HTable getTable(String tableName) {
            HTable table = null;
            try {
                table = new HTable(conf, tableName);
            } catch (IOException e) {
                e.printStackTrace();
            }
            return table;
        }

        /**
         * Returns row key -> click count for all rows whose row key starts with the given prefix.
         */
        public Map<String, Long> query(String tableName, String condition) throws Exception {
            Map<String, Long> map = new HashMap<>();

            String cf = "info";
            String qualifier = "click_count";

            Scan scan = new Scan();
            // Select rows by row key prefix
            Filter filter = new PrefixFilter(Bytes.toBytes(condition));
            scan.setFilter(filter);

            // Close the table and the scanner when done so they do not leak
            try (HTable table = getTable(tableName);
                 ResultScanner rs = table.getScanner(scan)) {
                for (Result result : rs) {
                    String row = Bytes.toString(result.getRow());
                    long clickCount = Bytes.toLong(result.getValue(Bytes.toBytes(cf), Bytes.toBytes(qualifier)));
                    map.put(row, clickCount);
                }
            }
            return map;
        }

        public static void main(String[] args) throws Exception {
            Map<String, Long> map = HBaseUtils.getInstance().query("course_click_spark_streaming", "20180723_131");
            for (Map.Entry<String, Long> entry : map.entrySet()) {
                System.out.println(entry.getKey() + " : " + entry.getValue());
            }
        }
    }
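The query method relies on two things: a prefix match on the row key, and Bytes.toLong, which decodes an 8-byte big-endian value. The sketch below imitates both with plain JDK classes so the logic can be tried without a cluster. The class PrefixScanSketch, the in-memory TreeMap standing in for the table, and the sample counts are all assumptions made for illustration; this is not how HBase itself is implemented.

```java
import java.nio.ByteBuffer;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory stand-in for the prefix scan in HBaseUtils.query.
class PrefixScanSketch {

    // Encodes a long as 8 big-endian bytes, the layout Bytes.toLong expects.
    static byte[] encode(long value) {
        return ByteBuffer.allocate(Long.BYTES).putLong(value).array();
    }

    // Decodes 8 big-endian bytes back into a long.
    static long decode(byte[] bytes) {
        if (bytes == null || bytes.length != Long.BYTES) {
            throw new IllegalArgumentException("expected exactly 8 bytes");
        }
        return ByteBuffer.wrap(bytes).getLong();
    }

    // Returns row key -> count for every row whose key starts with the prefix.
    // Keys are sorted, so all matching rows form one contiguous range.
    static Map<String, Long> query(TreeMap<String, byte[]> table, String prefix) {
        Map<String, Long> out = new LinkedHashMap<>();
        for (Map.Entry<String, byte[]> e : table.tailMap(prefix, true).entrySet()) {
            if (!e.getKey().startsWith(prefix)) {
                break; // past the end of the matching range
            }
            out.put(e.getKey(), decode(e.getValue()));
        }
        return out;
    }

    public static void main(String[] args) {
        TreeMap<String, byte[]> table = new TreeMap<>();
        table.put("20180722_128", encode(5));   // made-up sample rows
        table.put("20180723_128", encode(10));
        table.put("20180723_131", encode(7));
        table.put("20180724_130", encode(3));
        System.out.println(query(table, "20180723"));
    }
}
```

Passing a full row key such as "20180723_131" as the prefix narrows the result to a single course, which is what the main method of HBaseUtils does.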

echarts.html

Demo

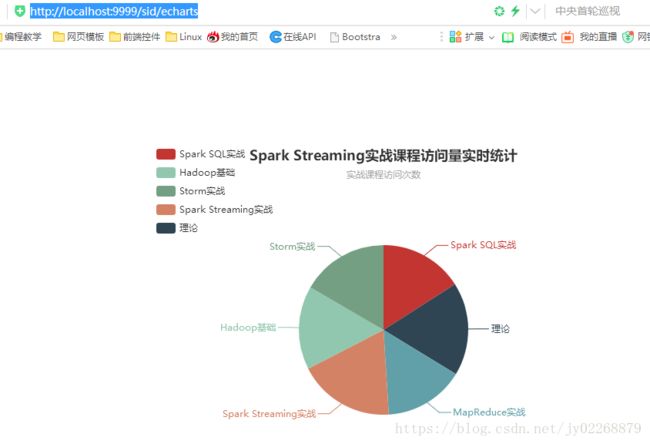

Run

Open http://localhost:9999/sid/echarts

Once the application runs locally, package it as a jar, copy it to the server, and run it there:

cd /app

java -jar webspark-0.0.1-SNAPSHOT.jar

Open http://node1:9999/sid/echarts