cs231n assignment2 Dropout

Dropout forward pass

```python
import numpy as np
from cs231n.layers import dropout_forward  # implementation shown below

np.random.seed(231)
x = np.random.randn(500, 500) + 10

for p in [0.25, 0.4, 0.7]:
    out, _ = dropout_forward(x, {'mode': 'train', 'p': p})
    out_test, _ = dropout_forward(x, {'mode': 'test', 'p': p})

    print('Running tests with p = ', p)
    print('Mean of input: ', x.mean())
    print('Mean of train-time output: ', out.mean())
    print('Mean of test-time output: ', out_test.mean())
    print('Fraction of train-time output set to zero: ', (out == 0).mean())
    print('Fraction of test-time output set to zero: ', (out_test == 0).mean())
    print()
```

dropout_forward implementation:

Here the training-time output is additionally divided by keep_prob (inverted dropout), so nothing at all needs to be done at test time.
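Why dividing by keep_prob makes the test-time pass a no-op: write $q = \text{keep\_prob} = 1 - p$ and let each mask entry $m_i$ be an independent Bernoulli($q$) variable (1 with probability $q$, otherwise 0). The expected train-time output then equals the input, which is exactly what the test-time pass returns:

$$
\mathrm{out}_i = \frac{m_i}{q}\,x_i, \qquad
\mathbb{E}[\mathrm{out}_i] = \frac{\mathbb{E}[m_i]}{q}\,x_i = \frac{q}{q}\,x_i = x_i .
$$

This is also why the forward test above expects both the train-time and test-time means to stay close to the input mean.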

```python
import numpy as np


def dropout_forward(x, dropout_param):
    p, mode = dropout_param['p'], dropout_param['mode']
    if 'seed' in dropout_param:
        np.random.seed(dropout_param['seed'])

    mask = None
    out = None

    if mode == 'train':
        #######################################################################
        # TODO: Implement training phase forward pass for inverted dropout. #
        # Store the dropout mask in the mask variable. #
        #######################################################################
        # p is treated here as the probability of DROPPING a unit. Some versions
        # of the assignment define p as the keep probability instead (dropout=1
        # meaning "no dropout", as in the experiment below); with that
        # convention, use keep_prob = p.
        keep_prob = 1 - p
        # Keep each unit with probability keep_prob and scale the survivors by
        # 1 / keep_prob so that the expected output equals x.
        mask = (np.random.rand(*x.shape) < keep_prob) / keep_prob
        out = mask * x
        #######################################################################
        # END OF YOUR CODE #
        #######################################################################
    elif mode == 'test':
        #######################################################################
        # TODO: Implement the test phase forward pass for inverted dropout. #
        #######################################################################
        # The rescaling already happened at train time, so test time is the identity.
        out = x
        #######################################################################
        # END OF YOUR CODE #
        #######################################################################

    cache = (dropout_param, mask)
    out = out.astype(x.dtype, copy=False)

    return out, cache
```
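To make the contrast concrete, here is a small self-contained sketch comparing inverted dropout (the approach used above) with the unscaled "vanilla" variant, which instead has to multiply by keep_prob at test time. The helpers `inverted_dropout` and `vanilla_dropout` are hypothetical names for this illustration, and p is treated as the drop probability, matching the code above.

```python
import numpy as np


def inverted_dropout(x, p, mode, rng):
    """Hypothetical helper: inverted dropout, p = probability of dropping a unit."""
    keep_prob = 1 - p
    if mode == 'train':
        # Rescale the survivors at train time so that E[out] == x.
        mask = (rng.random(x.shape) < keep_prob) / keep_prob
        return x * mask
    return x  # test time: identity


def vanilla_dropout(x, p, mode, rng):
    """Hypothetical helper: unscaled dropout, rescaled at test time instead."""
    keep_prob = 1 - p
    if mode == 'train':
        mask = rng.random(x.shape) < keep_prob
        return x * mask
    return x * keep_prob  # test time: match the train-time expectation


rng = np.random.default_rng(0)
x = rng.standard_normal((500, 500)) + 10
p = 0.4
results = {}
for name, fn in [('inverted', inverted_dropout), ('vanilla', vanilla_dropout)]:
    train_mean = fn(x, p, 'train', rng).mean()
    test_mean = fn(x, p, 'test', rng).mean()
    results[name] = (train_mean, test_mean)
    print(f'{name}: train mean {train_mean:.3f}, test mean {test_mean:.3f}')
```

Both variants agree between train and test time on average; the inverted form simply moves the rescaling into training so that inference code stays untouched.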

Dropout backward pass

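```python
import numpy as np
from cs231n.gradient_check import eval_numerical_gradient_array
from cs231n.layers import dropout_forward, dropout_backward


def rel_error(x, y):
    """Relative error helper, as defined at the top of the assignment notebook."""
    return np.max(np.abs(x - y) / (np.maximum(1e-8, np.abs(x) + np.abs(y))))


np.random.seed(231)
x = np.random.randn(10, 10) + 10
dout = np.random.randn(*x.shape)

# The fixed seed makes dropout_forward draw the same mask on every call.
dropout_param = {'mode': 'train', 'p': 0.2, 'seed': 123}
out, cache = dropout_forward(x, dropout_param)
dx = dropout_backward(dout, cache)
dx_num = eval_numerical_gradient_array(lambda xx: dropout_forward(xx, dropout_param)[0], x, dout)

# Error should be around e-10 or less
print('dx relative error: ', rel_error(dx, dx_num))
```

dropout_backward implementation: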

```python
def dropout_backward(dout, cache):
    dropout_param, mask = cache
    mode = dropout_param['mode']

    dx = None
    if mode == 'train':
        #######################################################################
        # TODO: Implement training phase backward pass for inverted dropout #
        #######################################################################
        # out = mask * x, so the gradient flows back through the same scaled mask.
        dx = mask * dout
        #######################################################################
        # END OF YOUR CODE #
        #######################################################################
    elif mode == 'test':
        # The test-time forward pass is the identity, so the gradient passes through.
        dx = dout
    return dx
```

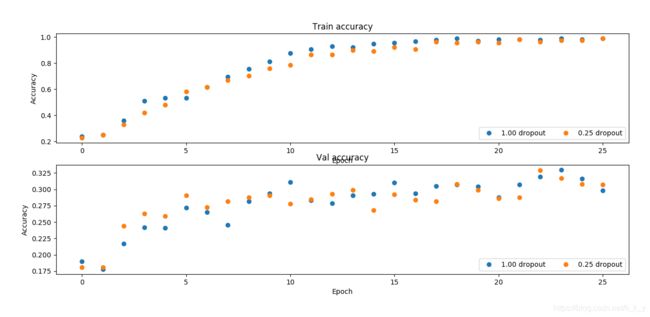
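The backward test above sets 'seed': 123 in dropout_param because a numerical gradient only makes sense if every forward call uses the same mask; with a fresh mask per call, the finite differences would compare different random functions. A self-contained sketch of the same check, assuming a hypothetical `dropout_train` helper that regenerates its mask from a seed and a hand-written centered-difference `numeric_grad` standing in for eval_numerical_gradient_array:

```python
import numpy as np


def dropout_train(x, p, seed):
    """Hypothetical helper: train-mode inverted dropout whose mask is fixed by `seed`."""
    keep_prob = 1 - p
    rng = np.random.default_rng(seed)  # same seed -> same mask on every call
    mask = (rng.random(x.shape) < keep_prob) / keep_prob
    return x * mask, mask


def numeric_grad(f, x, dout, h=1e-5):
    """Centered-difference gradient of sum(f(x) * dout) with respect to x."""
    grad = np.zeros_like(x)
    for idx in np.ndindex(x.shape):
        old = x[idx]
        x[idx] = old + h
        pos = f(x).copy()
        x[idx] = old - h
        neg = f(x).copy()
        x[idx] = old  # restore the entry before moving on
        grad[idx] = np.sum((pos - neg) * dout) / (2 * h)
    return grad


data_rng = np.random.default_rng(231)
x = data_rng.standard_normal((10, 10)) + 10
dout = data_rng.standard_normal(x.shape)
p, seed = 0.2, 123

out, mask = dropout_train(x, p, seed)
dx = mask * dout  # analytic backward pass: out = mask * x, so dx = mask * dout

dx_num = numeric_grad(lambda xx: dropout_train(xx, p, seed)[0], x, dout)
rel = np.max(np.abs(dx - dx_num) / np.maximum(1e-8, np.abs(dx) + np.abs(dx_num)))
print('dx relative error:', rel)
```

With the mask fixed, the forward map is linear in x with slope equal to the mask, so the analytic gradient mask * dout matches the numerical one up to floating-point error.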

Regularization experiment

```python
# Regularization experiment
import matplotlib.pyplot as plt
import numpy as np
from cs231n.classifiers.fc_net import FullyConnectedNet
from cs231n.data_utils import get_CIFAR10_data
from cs231n.solver import Solver

data = get_CIFAR10_data()
for k, v in data.items():
    print('%s: ' % k, v.shape)

# Train two identical nets, one with dropout and one without
np.random.seed(231)
num_train = 500
small_data = {
    'X_train': data['X_train'][:num_train],
    'y_train': data['y_train'][:num_train],
    'X_val': data['X_val'],
    'y_val': data['y_val'],
}

solvers = {}
dropout_choices = [1, 0.25]
for dropout in dropout_choices:
    model = FullyConnectedNet([500], dropout=dropout)
    print(dropout)

    solver = Solver(model, small_data,
                    num_epochs=25, batch_size=100,
                    update_rule='adam',
                    optim_config={
                        'learning_rate': 5e-4,
                    },
                    verbose=True, print_every=100)
    solver.train()
    solvers[dropout] = solver

# Plot train and validation accuracies of the two models
train_accs = []
val_accs = []
for dropout in dropout_choices:
    solver = solvers[dropout]
    train_accs.append(solver.train_acc_history[-1])
    val_accs.append(solver.val_acc_history[-1])

plt.subplot(3, 1, 1)
for dropout in dropout_choices:
    plt.plot(solvers[dropout].train_acc_history, 'o', label='%.2f dropout' % dropout)
plt.title('Train accuracy')
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.legend(ncol=2, loc='lower right')

plt.subplot(3, 1, 2)
for dropout in dropout_choices:
    plt.plot(solvers[dropout].val_acc_history, 'o', label='%.2f dropout' % dropout)
plt.title('Val accuracy')
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.legend(ncol=2, loc='lower right')

plt.gcf().set_size_inches(15, 15)
plt.show()
```

As the plots show, dropout lowers accuracy on the training data, while accuracy on the validation data improves somewhat.
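The lower training accuracy comes from the train-time mask: every forward pass zeroes a random subset of hidden activations, so (by the usual intuition) the network cannot fit the 500 training images by relying on particular units, while at test time all units are used. A minimal sketch of one hidden layer with dropout, assuming an affine -> ReLU -> dropout ordering; `hidden_layer_forward` and `keep_prob` are hypothetical names, not the FullyConnectedNet API:

```python
import numpy as np


def hidden_layer_forward(x, W, b, keep_prob, mode, rng):
    """Hypothetical helper: affine -> ReLU -> inverted dropout (train mode only)."""
    h = np.maximum(0, x @ W + b)  # affine followed by ReLU
    if mode == 'train' and keep_prob < 1:
        # Zero each activation with probability 1 - keep_prob, rescale the survivors.
        mask = (rng.random(h.shape) < keep_prob) / keep_prob
        h = h * mask
    return h


rng = np.random.default_rng(0)
x = rng.standard_normal((100, 3072))  # batch shaped like flattened 32x32x3 images
W = rng.standard_normal((3072, 500)) * 1e-2  # 500 hidden units, as in FullyConnectedNet([500])
b = np.zeros(500)

h_train = hidden_layer_forward(x, W, b, keep_prob=0.75, mode='train', rng=rng)
h_test = hidden_layer_forward(x, W, b, keep_prob=0.75, mode='test', rng=rng)
print('fraction zeroed at train time:', (h_train == 0).mean())
print('fraction zeroed at test time: ', (h_test == 0).mean())
```

At train time roughly a quarter of the ReLU outputs that would otherwise be active are additionally zeroed; at test time the layer is a plain affine + ReLU.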