CNN网络模型大总结【持续更新中...】

本文将总结从LeNet5开始到现在,具有代表性或具有创新意义的CNN网络模型架构。本文特点是,

一、总结内容非常精简,不详细,适于想快速了解的人进行阅读;

二、附带有相关网络图,来源于论文或网络,直观不枯燥;

三、附带论文链接地址,需要详细学习该网络的人可直接点开下载;

四、附带pytorch代码实现,代码来源于网络或自己写…

目录

- LeNet5

- 网络结构解析

- Pytorch实现

- AlexNet

- 网络结构解析

- 创新点

- Pytorch实现

- VGG

- 网络结构解析

- 创新点:

- Pytorch实现

- NiN

- 网络结构解析

- 创新点

- Pytorch实现

- GoogLeNet

- 网络结构解析

- 创新点

- Pytorch实现

- HighwayNet

- 创新点

- Pytorch实现

- ResNet

- 网络结构解析

- 创新点

- DenseNet

- 网络结构解析

- 创新点

LeNet5

LeNet5(Gradient-Based Learning Applied to Document Recognition) 1998

论文链接:https://ieeexplore.ieee.org/document/726791?reload=true&arnumber=726791

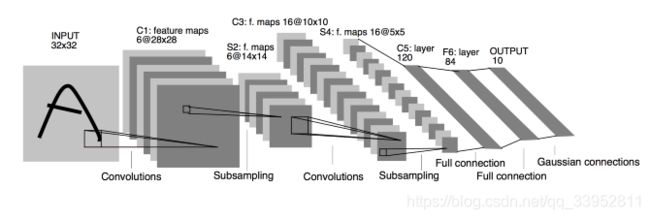

LeNet5算是早期时代的CNN架构,结构比较简单。如图所示,经过一系列操作,解释了CNN网络的主要部件包括,输入层+卷积层+池化层+全连接层+输出层:

输入:输入图像,一般是HxW((H,W)代表图像尺寸)大小的单通道或三通道(RGB)图像

卷积层:卷积层利用filter进行卷积操作,对上一层传入的数据进行特征提取,是CNN的核心

池化层:池化层用于下采样,控制数据处理量,减少计算量,有利于关键信息的提取

全连接层:x与y之间的映射关系,往往都可以通过一定的关键函数来得到,而复杂的全连接网络,恰恰可以拟合出我们想要的函数,全连接层的目的就主要在此

输出:LeNet网络的输出,是10个概率预测值,即对每个类别的概率值,当然,输出也可是其他方式,比如一幅图像等

网络结构解析

Pytorch实现

'''1.LeNet'''

class LeNet(nn.Module):

def __init__(self):

super(LeNet, self).__init__()

self.conv = nn.Sequential(

nn.Conv2d(1, 6, 5),#(32-5)/1+1=28

nn.Sigmoid(),

nn.AvgPool2d(2,2),#(28-2)/2+1=14

nn.Conv2d(6, 16, 5),#(14-5)/1+1=10

nn.Sigmoid(),

nn.AvgPool2d(2, 2),#5

)

self.fc = nn.Sequential(

nn.Linear(5*5*16, 120),

nn.Sigmoid(),

nn.Linear(120, 84),

nn.Sigmoid(),

nn.Linear(84, 10),

)

def forward(self,x):

feature = self.conv(x)

out = self.fc(feature.view(x.shape[0],-1)) # x.shape[0]张图片一批

return out

def LeNet_T():

net = LeNet()

print(net)

X = torch.rand(1, 1, 32, 32) # 单通道

print(net(X))

LeNet_T()

AlexNet

AlexNet(ImageNet Classification with Deep Convolutional Neural Networks)2012

论文链接:http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

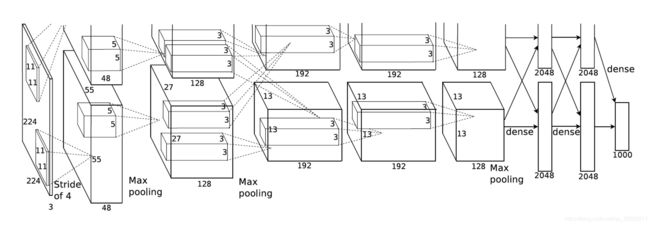

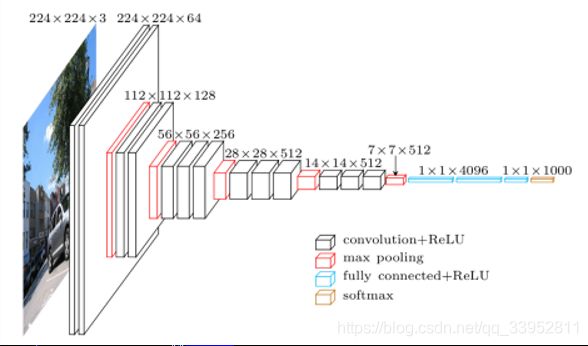

网络结构解析

创新点

1.提出ReLU,收敛更快,由于ReLU是没有界限的,所以需要进行局部相应归一化(Local Response Normalization),使之归一化到0~1的位置

2.提出数据增强(Data augmentation)和Dropout来缓解过拟合(Overfitting)

3.使用双GPU进行网络的训练

Pytorch实现

'''2.AlexNet'''

class AlexNet(nn.Module):

def __init__(self):

super(AlexNet,self).__init__()

self.conv = nn.Sequential(

nn.Conv2d(in_channels=3,out_channels=96,kernel_size=11,stride=4,padding=2),

nn.ReLU(),

nn.MaxPool2d(3,2),

nn.Conv2d(96,256,3,1,1),

nn.ReLU(),

nn.MaxPool2d(3,2),

nn.Conv2d(256,384,3,1,1),

nn.ReLU(),

nn.Conv2d(384,384,3,1,1),

nn.ReLU(),

nn.Conv2d(384,256,3,1,1),

nn.ReLU(),

nn.MaxPool2d(3,2),

)

self.fc = nn.Sequential(

nn.Linear(256*6*6,4096),

nn.ReLU(),

nn.Dropout(0.5),

nn.Linear(4096,4096),

nn.ReLU(),

nn.Dropout(0.5),

nn.Linear(4096,1000)

)

def forward(self,img):

# assert img.size[2]==1

feature = self.conv(img)

return self.fc(feature.view(img.shape[0],-1))

def AlexNet_T():

net = AlexNet()

print(net)

X = torch.rand(1, 3, 224, 224)

print(net(X))

AlexNet_T()

VGG

VGG(Very Deep Convolutional Networks for Large-Scale Image Recognition)2014年

论文链接:https://arxiv.org/abs/1409.1556

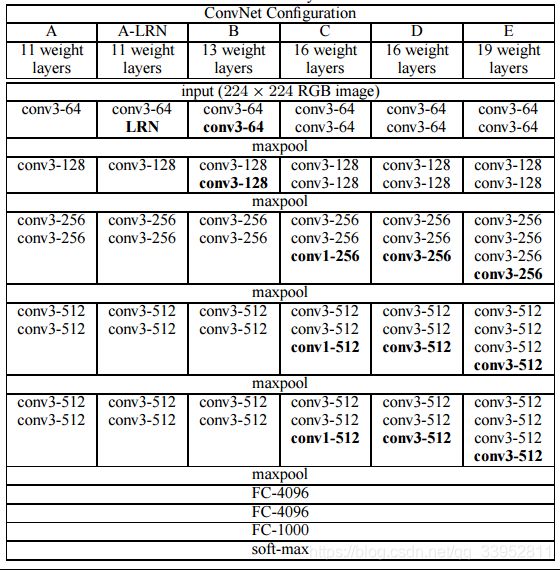

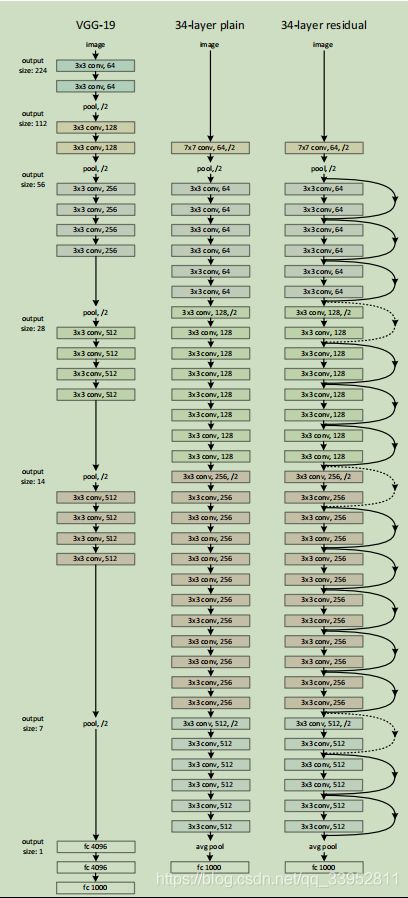

网络结构解析

创新点:

1.多个卷积层加一个最大池化层组成一个VGG块,最后接3个全连接层,相比之下,使用2个3x3相当于使用1个5x5或7x7的卷积,以此类之,但2个3x3的计算量,就更少一些,这也是VGG的一大特点。

2.开始尝试较为深度的层。

上图中,D,E分别代表了VGG16和VGG19的网络,也是目前各项视觉任务中常用到的网络,官方也提供了基于ImageNet的VGG预训练参数。

Pytorch实现

def vgg_block(num_convs,in_channels,out_channels):

blk = []

for i in range(num_convs):

if i == 0 :

blk.append(nn.Conv2d(in_channels,out_channels,3,1,padding=1))

else:

blk.append(nn.Conv2d(out_channels,out_channels,3,1,padding=1))

blk.append(nn.ReLU()) # 每个卷积层后借一个ReLU

blk.append(nn.MaxPool2d(2,2)) # 每一个block最后接一个maxpool

return nn.Sequential(*blk)

vgg_fc_featrues = 512*7*7

vgg_fc_hidden = 4096

vgg_fc = (vgg_fc_featrues,vgg_fc_hidden)

class vgg(nn.Module):

def __init__(self,vgg_fc):

super(vgg,self).__init__()

self.net = nn.Sequential()

self.net.add_module('vgg_block_1', vgg_block(2, 3, 64))

self.net.add_module('vgg_block_2', vgg_block(2, 64, 128))

self.net.add_module('vgg_block_3', vgg_block(3, 128, 256))

self.net.add_module('vgg_block_4', vgg_block(3, 256, 512))

self.net.add_module('vgg_block_5', vgg_block(3, 512, 512))

self.net.add_module('fc',nn.Sequential(

nn.Flatten(),

nn.Linear(vgg_fc[0],vgg_fc[1]),

nn.ReLU(),

nn.Dropout(0.5),

nn.Linear(vgg_fc[1],vgg_fc[1]),

nn.ReLU(),

nn.Dropout(0.5),

nn.Linear(vgg_fc[1],10),

))

def forward(self,img):

return self.net(img)

def VGG_T():

net = vgg(vgg_fc)

print(net)

X = torch.rand(1, 3, 224, 224)

print(net(X))

VGG_T()

NiN

NiN(Network In Network)2014年

论文链接:https://arxiv.org/abs/1312.4400

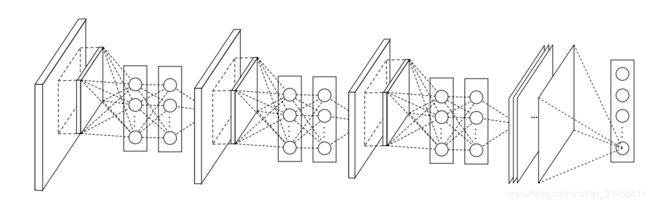

网络结构解析

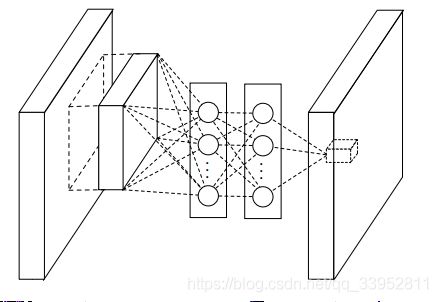

每个NiN_Block呈现为如下图所示的,顺序为:正常卷积–ReLU–1x1卷积–ReLU–1x1卷积–ReLU,上表中,NiN_Block中的size,stride,pad仅代表第一个正常卷积的,而1x1卷积默认stride=1,pad=0

创新点

1.1x1卷积,大大减少了计算量,增加了非线性拟合能力

2.提出全局平均池化操作,用以代替全连接层,减少了过拟合发生,进一步加强特征信息的提取

Pytorch实现

def nin_block(in_channel,out_channel,k,s,p):

return nn.Sequential(

nn.Conv2d(in_channel,out_channel,k,s,p),

nn.ReLU(),

nn.Conv2d(out_channel,out_channel,1),

nn.ReLU(),

nn.Conv2d(out_channel, out_channel, 1),

nn.ReLU()

)

class GlobalAvgPool(nn.Module):

def __init__(self):

super(GlobalAvgPool, self).__init__()

def forward(self,x):

return F.avg_pool2d(x,x.size()[2:])

class NiN(nn.Module):

def __init__(self):

super(NiN, self).__init__()

self.model = nn.Sequential(

nin_block(3,96,11,4,0),

nn.MaxPool2d(3,2),

nin_block(96, 256, 5, 1, 2),

nn.MaxPool2d(3, 2),

nin_block(256,384,3,1,1),

nn.MaxPool2d(3,2),

nn.Dropout(0.5),

nin_block(384,10,3,1,1),

GlobalAvgPool(),

nn.Flatten(),

)

self.con1 = nn.Conv2d(3,96,11,4,0)

self.relu1 = nn.ReLU()

self.con2 = nn.Conv2d(96,96,1)

self.relu2 = nn.ReLU()

self.con3 = nn.Conv2d(96, 96, 1),

self.pool1 = nn.MaxPool2d(3,2)

def forward(self,img):

# return self.model(img)

x = self.con1(img)

print(x.shape)

x = self.relu1(x)

x = self.con2(x)

x = self.pool1(x)

print(x.shape)

return self.model(img)

def NiN_T():

net = NiN()

X = torch.rand(1, 3, 224, 224)

print(net(X))

NiN_T()

GoogLeNet

GoogLeNet(Going deeper with convolutions) 2014年

论文地址(v1):https://arxiv.org/abs/1409.4842

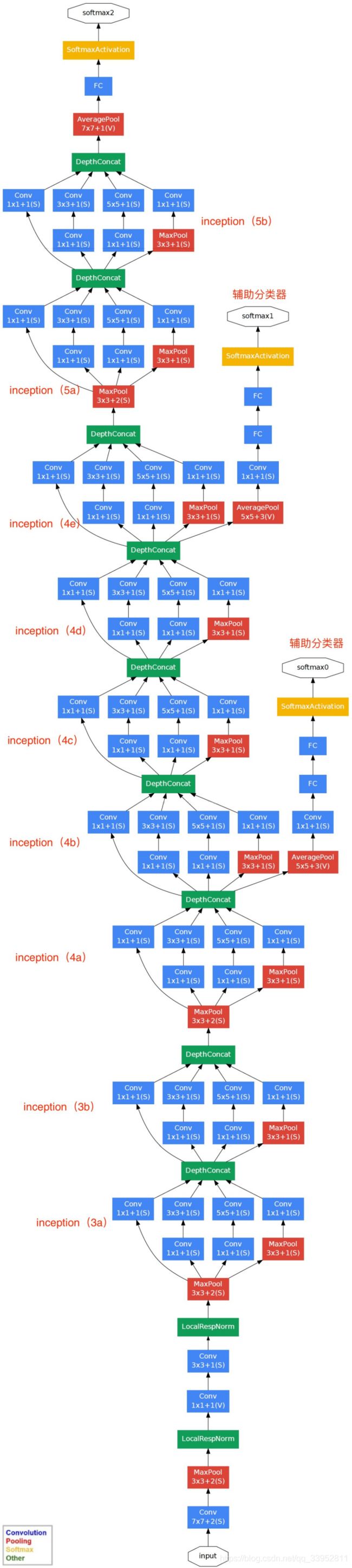

网络结构解析

表中的结果可以概述网络GoogLeNet的网络结构,输入224x224x3图像,输出1000个概率值。

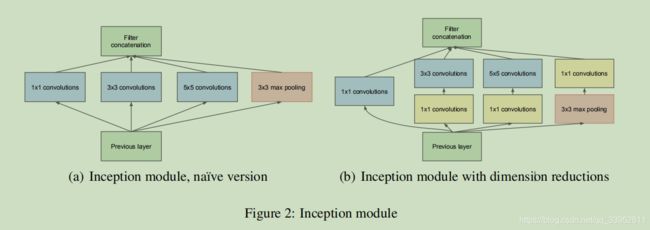

主要的特点就是如下图的Inception结构

创新点

2014年的GoogLeNet,又叫做Inception-v1,至今为止还更新了v2,v3,v4版本

1.提出了如上图Inception结构的模块,纳入多尺度卷积变换思想,使用分裂、转换、合并进行卷积操作,减少了计算量提高了精确度。使用大尺寸核之前,用1x1卷积调节计算(借鉴NiN),用以降低通道数,减少计算量

2.最后一层用全局平均池化进行连接,(这应该不算是创新处,它也借鉴了上一网络NiN的思想,最后证明是有效的,提升了0.6%)

3.引入辅助学习概念,加快收敛速度

Pytorch实现

class Inception(nn.Module):

def __init__(self,c_in,c_1,c_2,c_3,c_4):

super(Inception, self).__init__()

self.p1_1 = nn.Conv2d(c_in,c_1,1)

self.p2_1 = nn.Conv2d(c_in,c_2[0],1)

self.p2_2 = nn.Conv2d(c_2[0],c_2[1],3,padding=1)

self.p3_1 = nn.Conv2d(c_in,c_3[0],1)

self.p3_2 = nn.Conv2d(c_3[0],c_3[1],5,padding=2)

self.p4_1 = nn.MaxPool2d(3,1,1)

self.p4_2 = nn.Conv2d(c_in,c_4,1)

def forward(self,x):

p1 = F.relu(self.p1_1(x))

p2 = F.relu(self.p2_2(F.relu(self.p2_1(x))))

p3 = F.relu(self.p3_2(F.relu(self.p3_1(x))))

p4 = F.relu(self.p4_2(self.p4_1(x)))

return torch.cat((p1,p2,p3,p4),dim=1)

class GoogLeNet(nn.Module):

def __init__(self):

super(GoogLeNet, self).__init__()

self.b1 = nn.Sequential(

nn.Conv2d(3,64,7,2,3),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3,stride=2,padding=1)

)

self.b2 = nn.Sequential(

nn.Conv2d(64,64,1),

nn.Conv2d(64,192,3,1),

nn.MaxPool2d(3,2,1)

)

self.b3 = nn.Sequential(

Inception(192,64,(96,128),(16,32),32),

Inception(256,128,(128,192),(32,96),64),

nn.MaxPool2d(3,2,1)

)

self.b4 = nn.Sequential(

Inception(480, 192, (96, 208), (16, 48), 64),

Inception(512, 160, (112, 224), (24, 64), 64),

Inception(512, 128, (128, 256), (24, 64), 64),

Inception(512, 112, (144, 288), (32, 64), 64),

Inception(528, 256, (160, 320), (32, 128), 128),

nn.MaxPool2d(3,2,1)

)

self.b5 = nn.Sequential(

Inception(832,256,(160,320),(32,128),128),

Inception(832,384,(192,384),(48,128),128),

nn.AvgPool2d(7)

)

self.fc = nn.Sequential(

nn.Flatten(),

nn.Dropout(0.4),

nn.Linear(1024,1000),

# nn.Softmax(dim=1)

)

def forward(self,x):

b1 = self.b1(x)

b2 = self.b2(b1)

b3 = self.b3(b2)

b4 = self.b4(b3)

b5 = self.b5(b4)

return self.fc(b5)

def GoogLeNet_T():

net = GoogLeNet()

X = torch.rand(1, 3, 224, 224)

print(net(X))

GoogLeNet_T()

HighwayNet

HighwayNet(Training Very Deep Networks)

论文链接:https://arxiv.org/pdf/1507.06228.pdf

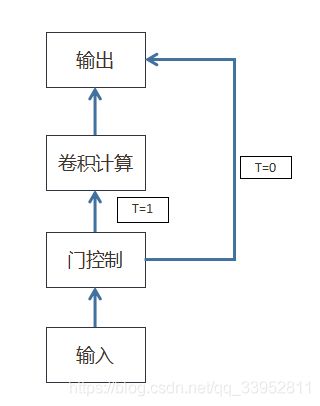

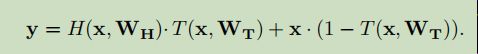

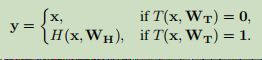

下面这个是解释上图,也就是HighwayNet关键部分“门控制”的公式

创新点

受LSTM的灵感,借鉴“门”机制,提出一种新的网络,如上图所示,T=1的时候,运行卷积运算,T=0的时候,直接传递输入(简化后),这样,就可以训练出很深层的网络,借用随机梯度下降策略就可以很好地进行训练(而且很快),在反向传播梯度计算的时候,部分参数为一个常系数,避免了梯度的消失,保留了关键的信息。

Pytorch实现

'''HighwayNet''' # 参考https://github.com/kefirski/pytorch_Highway

class Highway(nn.Module):

def __init__(self, size, num_layers, f):

super(Highway, self).__init__()

self.num_layers = num_layers

self.nonlinear = nn.ModuleList([nn.Linear(size, size) for _ in range(num_layers)])

self.linear = nn.ModuleList([nn.Linear(size, size) for _ in range(num_layers)])

self.gate = nn.ModuleList([nn.Linear(size, size) for _ in range(num_layers)])

self.f = f

def forward(self, x):

"""

:param x: tensor with shape of [batch_size, size]

:return: tensor with shape of [batch_size, size]

applies σ(x) ⨀ (f(G(x))) + (1 - σ(x)) ⨀ (Q(x)) transformation | G and Q is affine transformation,

f is non-linear transformation, σ(x) is affine transformation with sigmoid non-linearition

and ⨀ is element-wise multiplication

"""

for layer in range(self.num_layers):

gate = F.sigmoid(self.gate[layer](x))

nonlinear = self.f(self.nonlinear[layer](x))

linear = self.linear[layer](x)

x = gate * nonlinear + (1 - gate) * linear

return x

ResNet

ResNet(Deep Residual Learning for Image Recognition) 2016年

论文链接:https://arxiv.org/pdf/1512.03385.pdf

网络结构解析

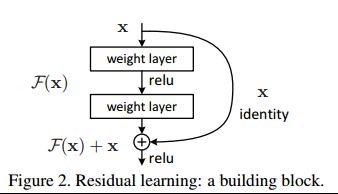

基于残差块block,也就是上面那个图,输出是输入和输入的卷积操作两个的fuse

ResNet34基于VGG19进行设计,所谓34,就是卷积+全连接一共34层,

ResNet_Block的顺序分别是,卷积–BN–ReLU–卷积–BN,最后的卷积与输入x进行融合,除了第一个卷积的stride为用户自定义,其他默认为1,最后一个卷积没有激活函数,为了保持与x相同的维度和尺寸

创新点

借鉴了HighwayNet中提到的skip connection方法,建立了shortcut connection,也就是多出来的输入x,与输入的卷积进行融合,最后得到输出。这样做的好处,一是减少了计算量,二是基于深层次的网络,提高了特征提取的性能(相比HighwayNet,效果要好一些),三是在一定程度上避免了深层次网络的梯度问题。另外,残差函数的出现,易于优化,可提高精确度,加快收敛速度。

class ResidualBlock(nn.Module):

def __init__(self, inchannel, outchannel, stride=1, shortcut=None):

super(ResidualBlock, self).__init__()

self.left = nn.Sequential(

nn.Conv2d(inchannel, outchannel, 3, stride, 1, bias=False),

nn.BatchNorm2d(outchannel),

nn.ReLU(inplace=True),

nn.Conv2d(outchannel, outchannel, 3, 1, 1, bias=False),

nn.BatchNorm2d(outchannel)

)

self.right = shortcut

def forward(self, x):

out = self.left(x)

residual = x if self.right is None else self.right(x)

out += residual

return F.relu(out)

class ResNet(nn.Module):

def __init__(self):

super(ResNet, self).__init__()

self.pre = nn.Sequential(

nn.Conv2d(3, 64, 7, 2, 3, bias=False),

nn.BatchNorm2d(64),

nn.ReLU(inplace=True),

nn.MaxPool2d(3, 2, 1)

)

self.layer1 = self.resnet_block(64, 64, 3)

self.layer2 = self.resnet_block(64, 128, 4, stride=2)

self.layer3 = self.resnet_block(128, 256, 6, stride=2)

self.layer4 = self.resnet_block(256, 512, 3, stride=2)

# 分类用的全连接

self.fc = nn.Linear(512, 10)

def resnet_block(self, inchannel, outchannel, block_num, stride=1):

shortcut = nn.Sequential(

nn.Conv2d(inchannel, outchannel, 1, stride, bias=False),

nn.BatchNorm2d(outchannel)

)

layers = []

layers.append(ResidualBlock(inchannel, outchannel, stride, shortcut))

for i in range(1, block_num):

layers.append(ResidualBlock(outchannel, outchannel))

return nn.Sequential(*layers)

def forward(self, x):

x = self.pre(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = F.avg_pool2d(x, 7)

print(x.shape)

x = x.view(x.size(0), -1)

return self.fc(x)

def ResNet_T():

net = ResNet()

X = torch.rand(1, 3, 224, 224)

print(net(X))

ResNet_T()

DenseNet

DenseNet(Densely Connected Convolutional Networks)2017

论文链接:https://arxiv.org/pdf/1608.06993.pdf

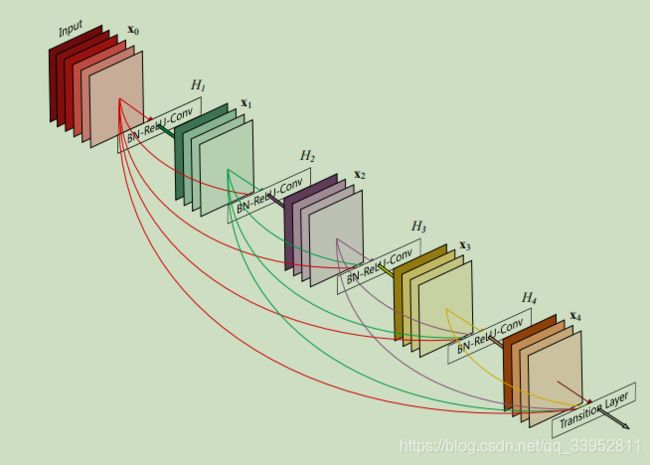

网络结构解析

DenseNet的核心在于DenseBlock,其特点在于,在Block中,每个卷积层的输入都来自于前面所有卷积层的输出,不同于ResNet的融合,DenseNet是作为输入,也就是在第i层卷积层,就会有i-1个输入,如果每一层产生k个特征图,则有k*(i-1)个输出,这里的k,在论文中也被称为增长率。Block在上上图也有,就不必详细解释了。

创新点

提出Dense块,引入了相同特征图尺寸的任意两层网络的直接连接,特点是看起来非常“密集”,特征重用,参数更少,DenseNet有效的降低了过拟合的出现,易于优化,加强了特征的传播

def Conv_block_dense(inchannels,outchannels):

return nn.Sequential(

nn.BatchNorm2d(inchannels),

nn.ReLU(),

nn.Conv2d(inchannels,outchannels,3,padding=1)

)

class DenseBlock(nn.Module):

def __init__(self,num_conv,in_channels,out_channels):

super(DenseBlock, self).__init__()

net = []

for i in range(num_conv):

in_c = in_channels + i * out_channels

net.append(Conv_block_dense(in_c,out_channels))

self.net = nn.ModuleList(net)

self.out_channels = in_channels + num_conv * out_channels

def forward(self,X):

for blk in self.net:

Y = blk(X)

X = torch.cat((X,Y),dim=1)

return X

def transition_block(in_channel,out_channel):

blk = nn.Sequential(

nn.BatchNorm2d(in_channel),

nn.ReLU(),

nn.Conv2d(in_channel,out_channel,1),

nn.AvgPool2d(kernel_size=2,stride=2)

)

return blk

class DenseNet(nn.Module):

def __init__(self):

super(DenseNet, self).__init__()

net = nn.Sequential(

nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3),

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

num_channels, growth_rate = 64, 32 # num_channels为当前的通道数

num_convs_in_dense_blocks = [4, 4, 4, 4]

for i, num_convs in enumerate(num_convs_in_dense_blocks):

DB = DenseBlock(num_convs, num_channels, growth_rate)

net.add_module("DenseBlosk_%d" % i, DB)

# 上一个稠密块的输出通道数

num_channels = DB.out_channels

# 在稠密块之间加入通道数减半的过渡层

if i != len(num_convs_in_dense_blocks) - 1:

net.add_module("transition_block_%d" % i, transition_block(num_channels, num_channels // 2))

num_channels = num_channels // 2

net.add_module("BN", nn.BatchNorm2d(num_channels))

net.add_module("relu", nn.ReLU())

net.add_module("global_avg_pool", GlobalAvgPool()) # GlobalAvgPool2d的输出: (Batch, num_channels, 1, 1)

net.add_module("fc", nn.Sequential(nn.Flatten(), nn.Linear(num_channels, 10)))

self.net = net

def forward(self,x):

return self.net(x)

def DenseNet_T():

net = DenseNet()

X = torch.rand(1, 3, 224, 224)

print(net(X))

DenseNet_T()