Building a VGG16 Network Model in PyTorch (Using CIFAR10 as an Example)

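Preface

I have been learning the PyTorch framework lately, and just typing along with tutorials felt like something was missing, so I tried building a network model myself. I chose VGG16 and used CIFAR10 as the dataset (only to check that the network runs).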

Loading the data

The data is loaded the same way as in the official tutorial, so this part can be skipped.

import torch
import torchvision
import torchvision.transforms as transforms

# Convert images to tensors, then normalize each RGB channel
transform = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True,
                                        download=True, transform=transform)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=4,
                                          shuffle=True)

testset = torchvision.datasets.CIFAR10(root='./data', train=False,
                                       download=True, transform=transform)
testloader = torch.utils.data.DataLoader(testset, batch_size=4,
                                         shuffle=False)

classes = ('plane', 'car', 'bird', 'cat',
           'deer', 'dog', 'frog', 'horse', 'ship', 'truck')
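
To make the effect of the transform concrete, the sketch below reproduces the arithmetic of `Normalize` in plain Python. `ToTensor()` scales pixel values to [0, 1], and `Normalize` with mean 0.5 and std 0.5 then maps each channel to [-1, 1]. The helper name `normalize_pixel` is made up for this illustration and is not part of torchvision.

```python
def normalize_pixel(value, mean=0.5, std=0.5):
    """Apply the per-channel formula used by Normalize: (value - mean) / std."""
    return (value - mean) / std


# ToTensor() has already scaled raw 0..255 pixels into 0.0..1.0.
for raw in (0, 255):
    scaled = raw / 255
    print(raw, "->", normalize_pixel(scaled))  # 0 -> -1.0, 255 -> 1.0
```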

VGG16

- Model: described in this blog post: https://blog.csdn.net/qq_43542339/article/details/104486300

- Code:

import torch.nn as nn
import torch.nn.functional as F


class VGG16_torch(nn.Module):
    def __init__(self):
        super().__init__()
        # GROUP 1
        self.conv1_1 = nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, stride=1, padding=1)  # output: 32*32*64
        self.conv1_2 = nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1, padding=1)  # output: 32*32*64
        self.maxpool1 = nn.MaxPool2d(2)  # pooling halves height and width, output: 16*16*64
        # GROUP 2
        self.conv2_1 = nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1)  # output: 16*16*128
        self.conv2_2 = nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3, stride=1, padding=1)  # output: 16*16*128
        self.maxpool2 = nn.MaxPool2d(2)  # pooling halves height and width, output: 8*8*128
        # GROUP 3
        self.conv3_1 = nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1)  # output: 8*8*256
        self.conv3_2 = nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1)  # output: 8*8*256
        self.conv3_3 = nn.Conv2d(in_channels=256, out_channels=256, kernel_size=1, stride=1)  # output: 8*8*256
        self.maxpool3 = nn.MaxPool2d(2)  # pooling halves height and width, output: 4*4*256
        # GROUP 4
        self.conv4_1 = nn.Conv2d(in_channels=256, out_channels=512, kernel_size=3, stride=1, padding=1)  # output: 4*4*512
        self.conv4_2 = nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1)  # output: 4*4*512
        self.conv4_3 = nn.Conv2d(in_channels=512, out_channels=512, kernel_size=1, stride=1)  # output: 4*4*512
        self.maxpool4 = nn.MaxPool2d(2)  # pooling halves height and width, output: 2*2*512
        # GROUP 5
        self.conv5_1 = nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1)  # output: 2*2*512
        self.conv5_2 = nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1)  # output: 2*2*512
        self.conv5_3 = nn.Conv2d(in_channels=512, out_channels=512, kernel_size=1, stride=1)  # output: 2*2*512
        self.maxpool5 = nn.MaxPool2d(2)  # pooling halves height and width, output: 1*1*512
        # Fully connected layers
        self.fc1 = nn.Linear(in_features=512 * 1 * 1, out_features=256)
        self.fc2 = nn.Linear(in_features=256, out_features=256)
        self.fc3 = nn.Linear(in_features=256, out_features=10)

    # Define the forward pass
    def forward(self, x):
        batch_size = x.size(0)
        # GROUP 1
        output = self.conv1_1(x)
        output = F.relu(output)
        output = self.conv1_2(output)
        output = F.relu(output)
        output = self.maxpool1(output)
        # GROUP 2
        output = self.conv2_1(output)
        output = F.relu(output)
        output = self.conv2_2(output)
        output = F.relu(output)
        output = self.maxpool2(output)
        # GROUP 3
        output = self.conv3_1(output)
        output = F.relu(output)
        output = self.conv3_2(output)
        output = F.relu(output)
        output = self.conv3_3(output)
        output = F.relu(output)
        output = self.maxpool3(output)
        # GROUP 4
        output = self.conv4_1(output)
        output = F.relu(output)
        output = self.conv4_2(output)
        output = F.relu(output)
        output = self.conv4_3(output)
        output = F.relu(output)
        output = self.maxpool4(output)
        # GROUP 5
        output = self.conv5_1(output)
        output = F.relu(output)
        output = self.conv5_2(output)
        output = F.relu(output)
        output = self.conv5_3(output)
        output = F.relu(output)
        output = self.maxpool5(output)
        # Flatten to (batch_size, 512) before the fully connected layers
        output = output.view(batch_size, -1)
        output = self.fc1(output)
        output = self.fc2(output)
        output = self.fc3(output)
        # Return the class scores
        return output
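
The shape comments in the layer definitions can be checked by hand with the standard output-size formula for convolution and pooling, floor((size + 2*padding - kernel) / stride) + 1. The sketch below traces a 32×32 input through the five groups in plain Python. The helper `out_size` and the `groups` table are illustrative names that only restate the layer settings above.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel_size, padding) of every conv in each group, as set in VGG16_torch.
groups = [
    [(3, 1), (3, 1)],
    [(3, 1), (3, 1)],
    [(3, 1), (3, 1), (1, 0)],
    [(3, 1), (3, 1), (1, 0)],
    [(3, 1), (3, 1), (1, 0)],
]

size = 32  # CIFAR10 images are 32x32
sizes_after_pool = []
for convs in groups:
    for kernel, padding in convs:
        size = out_size(size, kernel, padding=padding)  # these convs keep the size
    size = out_size(size, kernel=2, stride=2)  # MaxPool2d(2) halves it
    sizes_after_pool.append(size)

print(sizes_after_pool)  # [16, 8, 4, 2, 1]
flattened = 512 * size * size
print(flattened)  # 512, the in_features of fc1
```

Both the 3×3 convolutions with padding 1 and the 1×1 convolutions with no padding leave the spatial size unchanged, so only the five pooling layers shrink the feature map.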

Training

The training loop is the same as in the official tutorial, so this part can be skipped.

import torch.nn as nn
import torch.optim as optim

num_epochs = 10  # number of training epochs
learning_rate = 1e-4  # learning rate

net = VGG16_torch()
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(net.parameters(), lr=learning_rate)

for epoch in range(num_epochs):  # loop over the dataset
    running_loss = 0.0
    for i, data in enumerate(trainloader, 0):
        inputs, labels = data
        # reset the gradients
        optimizer.zero_grad()
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        # print the loss
        running_loss += loss.item()
        if i % 20 == 19:  # print every 20 mini-batches
            print('[%d, %5d] loss: %.5f' %
                  (epoch + 1, i + 1, running_loss / 20))
            running_loss = 0.0

print('Finished Training')

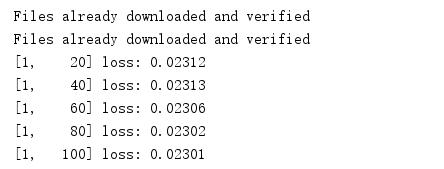

Training output

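Summary

- When building the VGG model, note that 1*1 convolution kernels need no padding (watch for this when copy-pasting layer definitions).

- Adjust the network parameters to the input image size: once the feature map has become very small, stop convolving, flatten it, and feed it to the FC layers for output.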
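
As a rough illustration of the second point, the sketch below estimates how many input features the first FC layer needs at different input resolutions. It assumes five 2×2 poolings, 512 output channels as in the model above, and convolutions that preserve the spatial size. The function name `fc_in_features` is hypothetical.

```python
def fc_in_features(input_size, num_pools=5, channels=512):
    """Number of features left after flattening the last feature map."""
    size = input_size
    for _ in range(num_pools):
        size //= 2  # each MaxPool2d(2) halves height and width (rounding down)
    return channels * size * size


for input_size in (32, 64, 224):
    print(input_size, fc_in_features(input_size))
```

With 32×32 inputs this gives 512, matching `fc1`. A 224×224 input would leave a 7×7 map and require 512*7*7 = 25088 input features instead.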