Hyperledger Fabric Hands-On Introduction (Part 8): Solo Multi-Host, Multi-Node Deployment

1. Solo multi-host, multi-node deployment

All nodes are deployed separately, with one node per host.

| Name | IP | Hostname | Organization |
|---|---|---|---|
| orderer | 118.31.35.238 | orderer.test.com | Orderer |
| peer0 | 121.40.33.98 | peer0.orgGo.test.com | OrgGo |
| peer1 | | peer1.orgGo.test.com | OrgGo |
| peer0 | 47.94.242.61 | peer0.orgCpp.test.com | OrgCpp |
| peer1 | | peer1.orgCpp.test.com | OrgCpp |

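- Preparation: create the working directory. Every host needs a working directory with the same name, because Docker Compose derives the network name testwork_default (referenced in the compose files below) from the directory name.

      # 121.40.33.98
      mkdir ~/testwork
      # 118.31.35.238
      mkdir ~/testwork
      # 47.94.242.61
      mkdir ~/testwork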

- crypto-config.yaml (this file name may be changed)

      OrdererOrgs:
        # ---------------------------------------------------------------------------
        # Orderer
        # ---------------------------------------------------------------------------
        - Name: Orderer
          Domain: test.com
          # ---------------------------------------------------------------------------
          # "Specs" - See PeerOrgs below for complete description
          # ---------------------------------------------------------------------------
          Specs:
            - Hostname: orderer

      PeerOrgs:
        # ---------------------------------------------------------------------------
        # OrgGo
        # ---------------------------------------------------------------------------
        - Name: OrgGo
          Domain: orgGo.test.com
          EnableNodeOUs: false
          Template:
            Count: 2
          Users:
            Count: 1
        # ---------------------------------------------------------------------------
        # OrgCpp
        # ---------------------------------------------------------------------------
        - Name: OrgCpp
          Domain: orgCpp.test.com
          EnableNodeOUs: false
          Template:
            Count: 2
          Users:
            Count: 1

- configtx.yaml (this file name must not be changed)

      Organizations:
          # SampleOrg defines an MSP using the sampleconfig. It should never be used
          # in production but may be used as a template for other definitions
          - &OrdererOrg
              # DefaultOrg defines the organization which is used in the sampleconfig
              # of the fabric.git development environment
              Name: OrdererOrg
              # ID to load the MSP definition as
              ID: OrdererMSP
              # MSPDir is the filesystem path which contains the MSP configuration
              MSPDir: crypto-config/ordererOrganizations/test.com/msp

          - &OrgGo
              # DefaultOrg defines the organization which is used in the sampleconfig
              # of the fabric.git development environment
              Name: OrgGoMSP
              # ID to load the MSP definition as
              ID: OrgGoMSP
              MSPDir: crypto-config/peerOrganizations/orgGo.test.com/msp
              AnchorPeers:
                  # AnchorPeers defines the location of peers which can be used
                  # for cross org gossip communication. Note, this value is only
                  # encoded in the genesis block in the Application section context
                  - Host: peer0.orgGo.test.com
                    Port: 7051

          - &OrgCpp
              Name: OrgCppMSP
              ID: OrgCppMSP
              MSPDir: crypto-config/peerOrganizations/orgCpp.test.com/msp
              AnchorPeers:
                  - Host: peer0.orgCpp.test.com
                    Port: 7051

      Capabilities:
          Global: &ChannelCapabilities
              V1_1: true
          Orderer: &OrdererCapabilities
              V1_1: true
          Application: &ApplicationCapabilities
              V1_2: true

      Application: &ApplicationDefaults
          Organizations:

      Orderer: &OrdererDefaults
          # Orderer Type: The orderer implementation to start
          # Available types are "solo" and "kafka"
          OrdererType: solo
          Addresses:
              - orderer.test.com:7050
          # Batch Timeout: The amount of time to wait before creating a batch
          BatchTimeout: 2s
          # Batch Size: Controls the number of messages batched into a block
          BatchSize:
              # Max Message Count: The maximum number of messages to permit in a batch
              MaxMessageCount: 10
              # Absolute Max Bytes: The absolute maximum number of bytes allowed for
              # the serialized messages in a batch.
              AbsoluteMaxBytes: 99 MB
              # Preferred Max Bytes: The preferred maximum number of bytes allowed for
              # the serialized messages in a batch. A message larger than the preferred
              # max bytes will result in a batch larger than preferred max bytes.
              PreferredMaxBytes: 512 KB
          Kafka:
              # Brokers: A list of Kafka brokers to which the orderer connects
              # NOTE: Use IP:port notation
              Brokers:
                  - 127.0.0.1:9092
          Organizations:

      Profiles:
          TwoOrgsOrdererGenesis:
              Capabilities:
                  <<: *ChannelCapabilities
              Orderer:
                  <<: *OrdererDefaults
                  Organizations:
                      - *OrdererOrg
                  Capabilities:
                      <<: *OrdererCapabilities
              Consortiums:
                  SampleConsortium:
                      Organizations:
                          - *OrgGo
                          - *OrgCpp
          TwoOrgsChannel:
              Consortium: SampleConsortium
              Application:
                  <<: *ApplicationDefaults
                  Organizations:
                      - *OrgGo
                      - *OrgCpp
                  Capabilities:
                      <<: *ApplicationCapabilities

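For the detailed steps that generate the certificates, genesis block, and channel transaction from these two files, see Chapter 4.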
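As a reminder of what that generation step typically looks like, here is a minimal sketch. It assumes cryptogen and configtxgen are on PATH and that it is run from the directory holding the two files above. The helper names run and generate_artifacts, and the DRY_RUN switch, are made up for this illustration and are not part of Fabric.

```shell
# Sketch only: regenerate the artifacts that the compose files in this article mount.
# Assumes cryptogen and configtxgen are on PATH and that crypto-config.yaml and
# configtx.yaml sit in the current directory. "run" and "generate_artifacts" are
# hypothetical helper names. Set DRY_RUN=1 to print commands instead of running them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

generate_artifacts() {
  local channel="${1:-testchannel}"

  # configtxgen reads configtx.yaml from the directory named by FABRIC_CFG_PATH.
  export FABRIC_CFG_PATH="$PWD"

  run mkdir -p channel-artifacts

  # Certificates and keys for OrdererOrgs and PeerOrgs, written to ./crypto-config
  run cryptogen generate --config=./crypto-config.yaml

  # Genesis block for the orderer (profile TwoOrgsOrdererGenesis)
  run configtxgen -profile TwoOrgsOrdererGenesis \
    -outputBlock ./channel-artifacts/genesis.block

  # Channel creation transaction (profile TwoOrgsChannel)
  run configtxgen -profile TwoOrgsChannel \
    -outputCreateChannelTx ./channel-artifacts/channel.tx \
    -channelID "$channel"
}

# Usage (from ~/testwork):
#   generate_artifacts testchannel
```

The resulting crypto-config and channel-artifacts directories are the ones each host needs in its own ~/testwork before its containers are started.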

1.1 Deploying the orderer node

- Write the docker-compose.yaml file that starts the orderer node

      version: '2'

      services:

        orderer.test.com:
          container_name: orderer.test.com
          image: hyperledger/fabric-orderer:latest
          environment:
            - CORE_VM_DOCKER_HOSTCONFIG_NETWORKMODE=testwork_default
            - ORDERER_GENERAL_LOGLEVEL=INFO
            - ORDERER_GENERAL_LISTENADDRESS=0.0.0.0
            - ORDERER_GENERAL_LISTENPORT=7050
            - ORDERER_GENERAL_GENESISMETHOD=file
            - ORDERER_GENERAL_GENESISFILE=/var/hyperledger/orderer/orderer.genesis.block
            - ORDERER_GENERAL_LOCALMSPID=OrdererMSP
            - ORDERER_GENERAL_LOCALMSPDIR=/var/hyperledger/orderer/msp
            # enabled TLS
            - ORDERER_GENERAL_TLS_ENABLED=true
            - ORDERER_GENERAL_TLS_PRIVATEKEY=/var/hyperledger/orderer/tls/server.key
            - ORDERER_GENERAL_TLS_CERTIFICATE=/var/hyperledger/orderer/tls/server.crt
            - ORDERER_GENERAL_TLS_ROOTCAS=[/var/hyperledger/orderer/tls/ca.crt]
          working_dir: /opt/gopath/src/github.com/hyperledger/fabric
          command: orderer
          volumes:
            - ./channel-artifacts/genesis.block:/var/hyperledger/orderer/orderer.genesis.block
            - ./crypto-config/ordererOrganizations/test.com/orderers/orderer.test.com/msp:/var/hyperledger/orderer/msp
            - ./crypto-config/ordererOrganizations/test.com/orderers/orderer.test.com/tls/:/var/hyperledger/orderer/tls
          networks:
            default:
              aliases:
                - testwork  # use the name of the directory that contains this compose file
          ports:
            - 7050:7050
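The peers on the other hosts reach the orderer through its public IP on port 7050, so it can help to confirm that the port answers before moving on. The following is a minimal sketch, assuming bash (for its /dev/tcp pseudo-device) and the coreutils timeout command are available; port_open and wait_for_port are hypothetical helper names.

```shell
# Sketch: check that a TCP port accepts connections, e.g. the orderer on 7050.
# port_open and wait_for_port are hypothetical helpers, not Fabric or Docker tools.

port_open() {
  # bash's /dev/tcp pseudo-device opens a TCP connection; timeout bounds the attempt.
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

wait_for_port() {
  local host="$1" port="$2" tries="${3:-10}" i=1
  while [ "$i" -le "$tries" ]; do
    if port_open "$host" "$port"; then
      echo "$host:$port is reachable"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "$host:$port is not reachable after $tries attempts" >&2
  return 1
}

# On the orderer host (118.31.35.238), in ~/testwork:
#   docker-compose up -d
# Then, from one of the peer hosts:
#   wait_for_port 118.31.35.238 7050
```

If the check keeps failing while the container is running, a host firewall or cloud security-group rule blocking 7050 is one possible cause worth ruling out.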

1.2 Deploying the peer0.orggo node

- Switch to the peer0.orggo host (121.40.33.98)

- Change into ~/testwork

- Copy the generated files into it

      $ tree -L 1
      .
      ├── channel-artifacts
      └── crypto-config

- Write the docker-compose.yaml file

      version: '2'

      services:

        peer0.orgGo.test.com:
          container_name: peer0.orgGo.test.com
          image: hyperledger/fabric-peer:latest
          environment:
            - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
            - CORE_VM_DOCKER_HOSTCONFIG_NETWORKMODE=testwork_default
            - CORE_LOGGING_LEVEL=INFO
            #- CORE_LOGGING_LEVEL=DEBUG
            - CORE_PEER_GOSSIP_USELEADERELECTION=true
            - CORE_PEER_GOSSIP_ORGLEADER=false
            - CORE_PEER_PROFILE_ENABLED=true
            - CORE_PEER_LOCALMSPID=OrgGoMSP
            - CORE_PEER_ID=peer0.orgGo.test.com
            - CORE_PEER_ADDRESS=peer0.orgGo.test.com:7051
            - CORE_PEER_GOSSIP_BOOTSTRAP=peer0.orgGo.test.com:7051
            - CORE_PEER_GOSSIP_EXTERNALENDPOINT=peer0.orgGo.test.com:7051
            # TLS
            - CORE_PEER_TLS_ENABLED=true
            - CORE_PEER_TLS_CERT_FILE=/etc/hyperledger/fabric/tls/server.crt
            - CORE_PEER_TLS_KEY_FILE=/etc/hyperledger/fabric/tls/server.key
            - CORE_PEER_TLS_ROOTCERT_FILE=/etc/hyperledger/fabric/tls/ca.crt
          volumes:
            - /var/run/:/host/var/run/
            - ./crypto-config/peerOrganizations/orgGo.test.com/peers/peer0.orgGo.test.com/msp:/etc/hyperledger/fabric/msp
            - ./crypto-config/peerOrganizations/orgGo.test.com/peers/peer0.orgGo.test.com/tls:/etc/hyperledger/fabric/tls
          working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
          command: peer node start
          networks:
            default:
              aliases:
                - testwork
          ports:
            - 7051:7051
            - 7053:7053
          extra_hosts:  # maps hostnames to IP addresses
            - "orderer.test.com:118.31.35.238"

        cli:
          container_name: cli
          image: hyperledger/fabric-tools:latest
          tty: true
          stdin_open: true
          environment:
            - GOPATH=/opt/gopath
            - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
            #- CORE_LOGGING_LEVEL=DEBUG
            - CORE_LOGGING_LEVEL=INFO
            - CORE_PEER_ID=cli
            - CORE_PEER_ADDRESS=peer0.orgGo.test.com:7051
            - CORE_PEER_LOCALMSPID=OrgGoMSP
            - CORE_PEER_TLS_ENABLED=true
            - CORE_PEER_TLS_CERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/orgGo.test.com/peers/peer0.orgGo.test.com/tls/server.crt
            - CORE_PEER_TLS_KEY_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/orgGo.test.com/peers/peer0.orgGo.test.com/tls/server.key
            - CORE_PEER_TLS_ROOTCERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/orgGo.test.com/peers/peer0.orgGo.test.com/tls/ca.crt
            - CORE_PEER_MSPCONFIGPATH=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/orgGo.test.com/users/Admin@orgGo.test.com/msp
          working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
          command: /bin/bash
          volumes:
            - /var/run/:/host/var/run/
            - ./chaincode/:/opt/gopath/src/github.com/chaincode
            - ./crypto-config:/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/
            - ./channel-artifacts:/opt/gopath/src/github.com/hyperledger/fabric/peer/channel-artifacts
          depends_on:  # start order
            - peer0.orgGo.test.com
          networks:
            default:
              aliases:
                - testwork
          extra_hosts:
            - "orderer.test.com:118.31.35.238"
            - "peer0.orgGo.test.com:121.40.33.98"

- Create a chaincode subdirectory under ~/testwork and put the chaincode files in it

- Start the containers

      $ docker-compose up -d

- Enter the client container

      $ docker exec -it cli bash

- Create the channel

      $ peer channel create -o orderer.test.com:7050 -c testchannel \
          -f ./channel-artifacts/channel.tx --tls true \
          --cafile /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/ordererOrganizations/test.com/msp/tlscacerts/tlsca.test.com-cert.pem

- Join the current node to the channel

      $ peer channel join -b testchannel.block

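- Install the chaincode from source (not used here)

      peer chaincode install -n testcc -v 1.0 -l golang -p github.com/chaincode

  If the chaincode is installed from source on server A and then again on server B, the two installations can still differ even though the source is the same, because of file permissions or other small differences. So instead of installing from source on each host, use the following approach.

- First package the chaincode:

      peer chaincode package -n testcc -p github.com/chaincode -v 1.0 testcc.out

  Then install the package:

      peer chaincode install testcc.out

  Next, copy testcc.out from the cli container to the host:

      docker cp cli:/opt/gopath/src/github.com/hyperledger/fabric/peer/testcc.out ./

  Finally, copy testcc.out into the channel-artifacts folder on the server of organization B (orgCpp). Once docker-compose has been started on server B, move the file into the peer working directory inside the cli container.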

- Instantiate the chaincode (this is done only once)

      peer chaincode instantiate -o orderer.test.com:7050 --tls true \
          --cafile /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/ordererOrganizations/test.com/msp/tlscacerts/tlsca.test.com-cert.pem \
          -C testchannel -n testcc -l golang -v 1.0 \
          -c '{"Args":["init","a","100","b","200"]}' \
          -P "AND ('OrgGoMSP.member', 'OrgCppMSP.member')"

- Test with a query

      peer chaincode query -C testchannel -n testcc -c '{"Args":["query","a"]}'

- Copy the generated channel block file testchannel.block from the cli container to the host

      # run the copy on the host, not inside the container
      $ docker cp cli:/opt/gopath/src/github.com/hyperledger/fabric/peer/testchannel.block ./

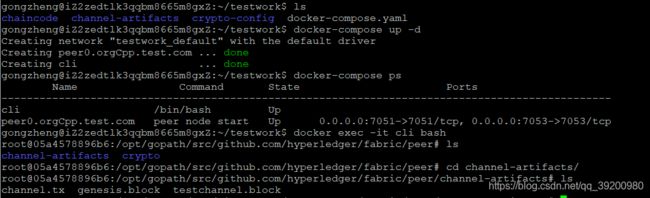
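Both files exported from the cli container in this section, testchannel.block and testcc.out, have to reach the peer0.orgcpp host before section 1.3 can proceed. One way to move them is scp; the sketch below assumes SSH access to 47.94.242.61 as root (an assumption, adjust REMOTE_USER as needed). push_files is a hypothetical helper name, and the SCP variable exists only so the command can be swapped out for a dry run.

```shell
# Sketch: copy exported files to another host's working directory with scp.
# push_files is a hypothetical helper; REMOTE_USER defaults to root as an assumption.

push_files() {
  local host="$1" remote_dir="$2"
  shift 2
  # A path after "host:" is resolved relative to the remote user's home directory.
  "${SCP:-scp}" "$@" "${REMOTE_USER:-root}@${host}:${remote_dir}/"
}

# Usage, run on 121.40.33.98 in the directory holding the exported files:
#   push_files 47.94.242.61 testwork testchannel.block
#   push_files 47.94.242.61 testwork/channel-artifacts testcc.out
```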

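1.3 Deploying the peer0.orgcpp node

- Switch to the peer0.orgcpp host (47.94.242.61)

- Change into ~/testwork

- Copy the generated files into it

      $ tree -L 1
      .
      ├── channel-artifacts
      └── crypto-config

- Copy the channel block file from the host where it was exported (peer0.orggo) to the current peer0.orgcpp host

      # for convenience, put the file in a directory that is mounted into the client container
      $ mv testchannel.block channel-artifacts/

- Write the docker-compose.yaml file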

      version: '2'

      services:

        peer0.orgCpp.test.com:
          container_name: peer0.orgCpp.test.com
          image: hyperledger/fabric-peer:latest
          environment:
            - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
            - CORE_VM_DOCKER_HOSTCONFIG_NETWORKMODE=testwork_default
            # - CORE_LOGGING_LEVEL=INFO
            - CORE_LOGGING_LEVEL=DEBUG
            - CORE_PEER_GOSSIP_USELEADERELECTION=true
            - CORE_PEER_GOSSIP_ORGLEADER=false
            - CORE_PEER_PROFILE_ENABLED=true
            - CORE_PEER_LOCALMSPID=OrgCppMSP
            - CORE_PEER_ID=peer0.orgCpp.test.com
            - CORE_PEER_ADDRESS=peer0.orgCpp.test.com:7051
            - CORE_PEER_GOSSIP_BOOTSTRAP=peer0.orgCpp.test.com:7051
            - CORE_PEER_GOSSIP_EXTERNALENDPOINT=peer0.orgCpp.test.com:7051
            # TLS
            - CORE_PEER_TLS_ENABLED=true
            - CORE_PEER_TLS_CERT_FILE=/etc/hyperledger/fabric/tls/server.crt
            - CORE_PEER_TLS_KEY_FILE=/etc/hyperledger/fabric/tls/server.key
            - CORE_PEER_TLS_ROOTCERT_FILE=/etc/hyperledger/fabric/tls/ca.crt
          volumes:
            - /var/run/:/host/var/run/
            - ./crypto-config/peerOrganizations/orgCpp.test.com/peers/peer0.orgCpp.test.com/msp:/etc/hyperledger/fabric/msp
            - ./crypto-config/peerOrganizations/orgCpp.test.com/peers/peer0.orgCpp.test.com/tls:/etc/hyperledger/fabric/tls
          working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
          command: peer node start
          networks:
            default:
              aliases:
                - testwork
          ports:
            - 7051:7051
            - 7053:7053
          extra_hosts:
            - "orderer.test.com:118.31.35.238"
            - "peer0.orgGo.test.com:121.40.33.98"

        cli:
          container_name: cli
          image: hyperledger/fabric-tools:latest
          tty: true
          stdin_open: true
          environment:
            - GOPATH=/opt/gopath
            - CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock
            #- CORE_LOGGING_LEVEL=DEBUG
            - CORE_LOGGING_LEVEL=DEBUG
            - CORE_PEER_ID=cli
            - CORE_PEER_ADDRESS=peer0.orgCpp.test.com:7051
            - CORE_PEER_LOCALMSPID=OrgCppMSP
            - CORE_PEER_TLS_ENABLED=true
            - CORE_PEER_TLS_CERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/orgCpp.test.com/peers/peer0.orgCpp.test.com/tls/server.crt
            - CORE_PEER_TLS_KEY_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/orgCpp.test.com/peers/peer0.orgCpp.test.com/tls/server.key
            - CORE_PEER_TLS_ROOTCERT_FILE=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/orgCpp.test.com/peers/peer0.orgCpp.test.com/tls/ca.crt
            - CORE_PEER_MSPCONFIGPATH=/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/orgCpp.test.com/users/Admin@orgCpp.test.com/msp
          working_dir: /opt/gopath/src/github.com/hyperledger/fabric/peer
          command: /bin/bash
          volumes:
            - /var/run/:/host/var/run/
            - ./chaincode/:/opt/gopath/src/github.com/chaincode
            - ./crypto-config:/opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/
            - ./channel-artifacts:/opt/gopath/src/github.com/hyperledger/fabric/peer/channel-artifacts
          depends_on:
            - peer0.orgCpp.test.com
          networks:
            default:
              aliases:
                - testwork
          extra_hosts:
            - "orderer.test.com:118.31.35.238"
            - "peer0.orgGo.test.com:121.40.33.98"
            - "peer0.orgCpp.test.com:47.94.242.61"

- Start the current node

- Enter the client container that operates this node

- Join the channel

- Install the chaincode from the package file copied over from peer0.orggo

      peer chaincode install testcc.out

- Test with a query

      peer chaincode query -C testchannel -n testcc -c '{"Args":["query","a"]}'

- Invoke a method that modifies data. The endorsement policy given at instantiation is AND ('OrgGoMSP.member', 'OrgCppMSP.member'), so the invoke has to collect an endorsement from a peer of each organization, hence the two --peerAddresses flags.

      # transfer
      $ peer chaincode invoke -o orderer.test.com:7050 -C testchannel -n testcc --tls true \
          --cafile /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/ordererOrganizations/test.com/orderers/orderer.test.com/msp/tlscacerts/tlsca.test.com-cert.pem \
          --peerAddresses peer0.orgGo.test.com:7051 \
          --tlsRootCertFiles /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/orgGo.test.com/peers/peer0.orgGo.test.com/tls/ca.crt \
          --peerAddresses peer0.orgCpp.test.com:7051 \
          --tlsRootCertFiles /opt/gopath/src/github.com/hyperledger/fabric/peer/crypto/peerOrganizations/orgCpp.test.com/peers/peer0.orgCpp.test.com/tls/ca.crt \
          -c '{"Args":["invoke","a","b","10"]}'

      # query
      $ peer chaincode query -C testchannel -n testcc -c '{"Args":["query","a"]}'
      $ peer chaincode query -C testchannel -n testcc -c '{"Args":["query","b"]}'
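The start, join, and install steps in this section mirror the commands from 1.2. As a rough sketch, they could be run from the host (47.94.242.61, in ~/testwork) without opening an interactive shell in the container. This assumes testchannel.block and testcc.out were both placed in channel-artifacts/, which is mounted under the cli container's working directory, so the package is installed from there rather than being moved first. join_and_install, DOCKER, COMPOSE, and STARTUP_WAIT are names invented for this illustration.

```shell
# Sketch: start the node, join the channel and install the packaged chaincode.
# join_and_install is a hypothetical helper. DOCKER and COMPOSE can be overridden
# (for example with "echo") to preview the commands; STARTUP_WAIT is an arbitrary pause.

join_and_install() {
  local channel_block="${1:-channel-artifacts/testchannel.block}"
  local cc_package="${2:-channel-artifacts/testcc.out}"

  # Start the peer and cli containers defined in docker-compose.yaml
  "${COMPOSE:-docker-compose}" up -d

  # Give the peer a moment to come up before talking to it
  sleep "${STARTUP_WAIT:-5}"

  # Paths are relative to the cli container's working_dir
  "${DOCKER:-docker}" exec cli peer channel join -b "$channel_block"
  "${DOCKER:-docker}" exec cli peer chaincode install "$cc_package"

  # List the channels this peer has joined, as a quick confirmation
  "${DOCKER:-docker}" exec cli peer channel list
}

# Usage:
#   join_and_install
```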

1.4 Deploying the remaining nodes

The remaining nodes are deployed in exactly the same way, so the steps are not repeated here; follow section 1.3.
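When the steps are repeated for another node, the parts of docker-compose.yaml that change are mainly the node and organization names and the extra_hosts mappings to nodes running on other hosts. As a small illustration, the sketch below prints an extra_hosts section from hostname=IP pairs; extra_hosts_block is a hypothetical helper, and the indentation of its output still has to be adjusted to the service it is pasted into.

```shell
# Sketch: print an extra_hosts section for a compose service from hostname=IP pairs.
# extra_hosts_block is a hypothetical helper, not part of Docker Compose.

extra_hosts_block() {
  local pair
  echo "extra_hosts:"
  for pair in "$@"; do
    # Split "hostname=ip" at the first '=' sign
    printf '  - "%s:%s"\n' "${pair%%=*}" "${pair#*=}"
  done
}

# Usage:
#   extra_hosts_block orderer.test.com=118.31.35.238 \
#                     peer0.orgGo.test.com=121.40.33.98 \
#                     peer0.orgCpp.test.com=47.94.242.61
```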

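Supplement: packaging chaincode

In a multi-host, multi-node deployment every peer node needs the chaincode installed. Installation sometimes fails with a message that the chaincode fingerprint (hash) does not match. This can be resolved as follows:

- After the chaincode has been installed on the first peer node through the client, package it

      $ peer chaincode package -n testcc -p github.com/chaincode -v 1.0 mycc.1.0.out
      # -n: chaincode name
      # -p: chaincode path
      # -v: chaincode version
      # mycc.1.0.out: the package file that is produced

- Copy the packaged chaincode out of the container

      $ docker cp cli:/xxxx/mycc.1.0.out ./

- Copy the resulting package file to the other peer nodes

- Install the chaincode on the other peer nodes through the client

      $ peer chaincode install mycc.1.0.out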
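Because the point of this procedure is that every peer installs the same bytes, it may be worth checking that a copy of the package was not altered in transit. A minimal sketch, assuming sha256sum from GNU coreutils is available; digest and same_package are hypothetical helper names, and the check is an addition for illustration, not something Fabric requires.

```shell
# Sketch: confirm that two copies of a chaincode package are byte-identical.
# digest and same_package are hypothetical helpers built on sha256sum.

digest() {
  # Reading from stdin keeps the file name out of the output; keep only the hash field.
  sha256sum < "$1" | cut -d' ' -f1
}

same_package() {
  [ -f "$1" ] && [ -f "$2" ] && [ "$(digest "$1")" = "$(digest "$2")" ]
}

# Usage, on a machine that holds both copies:
#   same_package mycc.1.0.out /path/to/other/mycc.1.0.out && echo "identical"
# Across hosts, run "sha256sum mycc.1.0.out" on each host and compare the hashes.
```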