大数据核心技术源码分析之-Avro篇

云计算可谓当红的发紫,而作为云计算的领头羊Hadoop的生态圈,日益增大,都知道未来的海量数据时代,掌握了制高点,就等于掌握了核心和命脉;

童鞋们,如果不了解云,如何还是,如果了解云,又该如何深入呢;

个人也是带着疑问,一步步走来,简单一个思路,看设计原理不难,搭建环境、准备Demo也不难;给出设计思路也不算很难;

但是对核心源码的分析和对设计思路的追奔溯源,需要更大的激情和毅力;

一句话,Hadoop志在必得,所以打点行囊,上路吧,从底层开始首先从Avro开始;

Apache Avro™ is a data serialization system

海量数据的核心就是无边的数据,而这些数据如果采用传统的OS模型的文件格式有何不妥呢;

为何Hadoop的HDFS需要依赖底层的Avro来完成呢?

先看看Avro的设计目标:

将数据结构或对象转化成--便于存储和传输的格式 【多个节点之前的数据存储和传输要求】

支持数据密集型应用,适合于大规模数据的存储和交换。

看看arvo的特性和功能:

1:丰富的数据结构类型

2:快速可压缩的二进制数据格式

3:存储持久数据的文件容器

4:远程过程调用(RPC)

5:简单的动态语言结合功能

arvo数据存储到文件中时,模式随着存储,这样保证任何程序都可以对文件进行处理。

模式为json格式

Java写入的,可以用C/C++读取

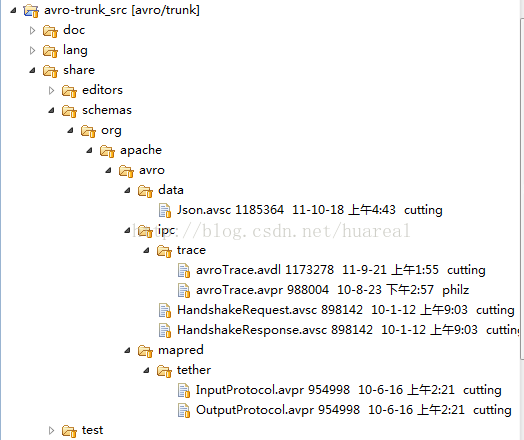

下载源码分析

对应源码部分有多种语言版本

记下了在另外一部分可以看到schema

首先看下data下的Json.avsc的schema格式

{"type": "record", "name": "Json", "namespace":"org.apache.avro.data",

"fields": [

{"name": "value",

"type": [

"long",

"double",

"string",

"boolean",

"null",

{"type": "array", "items": "Json"},

{"type": "map", "values": "Json"}

]

}

]

}

可以看下ipc和mapred下额schema

首先看下ipc的schema定义

1:handleshakerequest.avsc

{

"type": "record",

"name": "HandshakeRequest", "namespace":"org.apache.avro.ipc",

"fields": [

{"name": "clientHash",

"type": {"type": "fixed", "name": "MD5", "size": 16}},

{"name": "clientProtocol", "type": ["null", "string"]},

{"name": "serverHash", "type": "MD5"},

{"name": "meta", "type": ["null", {"type": "map", "values": "bytes"}]}

]

}

2:handleshakerespone.avsc

{

"type": "record",

"name": "HandshakeResponse", "namespace": "org.apache.avro.ipc",

"fields": [

{"name": "match",

"type": {"type": "enum", "name": "HandshakeMatch",

"symbols": ["BOTH", "CLIENT", "NONE"]}},

{"name": "serverProtocol",

"type": ["null", "string"]},

{"name": "serverHash",

"type": ["null", {"type": "fixed", "name": "MD5", "size": 16}]},

{"name": "meta",

"type": ["null", {"type": "map", "values": "bytes"}]}

]

}

3:avroTrace

{

"protocol" : "AvroTrace",

"namespace" : "org.apache.avro.ipc.trace",

"types" : [ {

"type" : "enum",

"name" : "SpanEvent",

"symbols" : [ "SERVER_RECV", "SERVER_SEND", "CLIENT_RECV", "CLIENT_SEND" ]

}, {

"type" : "fixed",

"name" : "ID",

"size" : 8

}, {

"type" : "record",

"name" : "TimestampedEvent",

"fields" : [ {

"name" : "timeStamp",

"type" : "long"

}, {

"name" : "event",

"type" : [ "SpanEvent", "string" ]

} ]

}, {

"type" : "record",

"name" : "Span",

"fields" : [ {

"name" : "traceID",

"type" : "ID"

}, {

"name" : "spanID",

"type" : "ID"

}, {

"name" : "parentSpanID",

"type" : [ "ID", "null" ]

}, {

"name" : "messageName",

"type" : "string"

}, {

"name" : "requestPayloadSize",

"type" : "long"

}, {

"name" : "responsePayloadSize",

"type" : "long"

}, {

"name" : "requestorHostname",

"type" : [ "string", "null" ]

}, {

"name" : "responderHostname",

"type" : [ "string", "null" ]

}, {

"name" : "events",

"type" : {

"type" : "array",

"items" : "TimestampedEvent"

}

}, {

"name" : "complete",

"type" : "boolean"

} ]

} ],

"messages" : {

"getAllSpans" : {

"request" : [ ],

"response" : {

"type" : "array",

"items" : "Span"

}

},

"getSpansInRange" : {

"request" : [ {

"name" : "start",

"type" : "long"

}, {

"name" : "end",

"type" : "long"

} ],

"response" : {

"type" : "array",

"items" : "Span"

}

}

}

}

mapred下的schema定义

1:inputProtocol.arsc

{"namespace":"org.apache.avro.mapred.tether",

"protocol": "InputProtocol",

"doc": "Transmit inputs to a map or reduce task sub-process.",

"types": [

{"name": "TaskType", "type": "enum", "symbols": ["MAP","REDUCE"]}

],

"messages": {

"configure": {

"doc": "Configure the task. Sent before any other message.",

"request": [

{"name": "taskType", "type": "TaskType",

"doc": "Whether this is a map or reduce task."},

{"name": "inSchema", "type": "string",

"doc": "The Avro schema for task input data."},

{"name": "outSchema", "type": "string",

"doc": "The Avro schema for task output data."}

],

"response": "null",

"one-way": true

},

"partitions": {

"doc": "Set the number of map output partitions.",

"request": [

{"name": "partitions", "type": "int",

"doc": "The number of map output partitions."}

],

"response": "null",

"one-way": true

},

"input": {

"doc": "Send a block of input data to a task.",

"request": [

{"name": "data", "type": "bytes",

"doc": "A sequence of instances of the declared schema."},

{"name": "count", "type": "long",

"default": 1,

"doc": "The number of instances in this block."}

],

"response": "null",

"one-way": true

},

"abort": {

"doc": "Called to abort the task.",

"request": [],

"response": "null",

"one-way": true

},

"complete": {

"doc": "Called when a task's input is complete.",

"request": [],

"response": "null",

"one-way": true

}

}

}

2:outputProtocal.avsc

{"namespace":"org.apache.avro.mapred.tether",

"protocol": "OutputProtocol",

"doc": "Transmit outputs from a map or reduce task to parent.",

"messages": {

"configure": {

"doc": "Configure task. Sent before any other message.",

"request": [

{"name": "port", "type": "int",

"doc": "The port to transmit inputs to this task on."}

],

"response": "null",

"one-way": true

},

"output": {

"doc": "Send an output datum.",

"request": [

{"name": "datum", "type": "bytes",

"doc": "A binary-encoded instance of the declared schema."}

],

"response": "null",

"one-way": true

},

"outputPartitioned": {

"doc": "Send map output datum explicitly naming its partition.",

"request": [

{"name": "partition", "type": "int",

"doc": "The map output partition for this datum."},

{"name": "datum", "type": "bytes",

"doc": "A binary-encoded instance of the declared schema."}

],

"response": "null",

"one-way": true

},

"status": {

"doc": "Update the task's status message. Also acts as keepalive.",

"request": [

{"name": "message", "type": "string",

"doc": "The new status message for the task."}

],

"response": "null",

"one-way": true

},

"count": {

"doc": "Increment a task/job counter.",

"request": [

{"name": "group", "type": "string",

"doc": "The name of the counter group."},

{"name": "name", "type": "string",

"doc": "The name of the counter to increment."},

{"name": "amount", "type": "long",

"doc": "The amount to incrment the counter."}

],

"response": "null",

"one-way": true

},

"fail": {

"doc": "Called by a failing task to abort.",

"request": [

{"name": "message", "type": "string",

"doc": "The reason for failure."}

],

"response": "null",

"one-way": true

},

"complete": {

"doc": "Called when a task's output has completed without error.",

"request": [],

"response": "null",

"one-way": true

}

}

}

从上面的基本Data的schema到IPC,以及mapred的schema;

可以说avro从文件传输领域提供了基础支撑,也许根据这些规则和思想,可以创造更多的东西;Drill的诞生也离不开Avro.

接下来进一步分析源码如何实现数据和模式的一起写入,以及在RPC和动态语言的支持特性......