How kube-proxy IPVS Mode Works in Kubernetes

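- 1 Setting up IPVS mode in k8s

- 1.1 Prerequisites

- 1.2 Modify the kube-proxy startup parameters

- 2 How kube-proxy works in IPVS mode

- 2.1 Packets sent from inside the cluster reach a Pod through the cluster IP

- 2.2 Accessing a Pod from outside the cluster through a node IP

- 2.3 Summary

- Refer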

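1 Setting up IPVS mode in k8s

Because iptables mode is relatively inefficient (its rules are evaluated sequentially, so the cost grows with the number of Services), IPVS mode was released in k8s v1.11. This article describes how to enable IPVS and introduces how kube-proxy uses it.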

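1.1 Prerequisites

To switch to IPVS mode, the following conditions must be met:

- k8s version >= v1.11

- ipset and ipvsadm are installed, for example: yum install ipset ipvsadm -y

- The IPVS kernel modules are loaded: ip_vs, ip_vs_rr, ip_vs_wrr, ip_vs_sh, and nf_conntrack_ipv4 (on Linux kernels 4.19 and later, nf_conntrack_ipv4 has been merged into nf_conntrack). If these modules are not loaded, kube-proxy falls back to iptables mode when it starts.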

Check whether the modules are loaded:

[root@haofan-test-2 system]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

nf_conntrack_ipv4 15053 1

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 15

ip_vs 145497 21 ip_vs_rr,ip_vs_sh,ip_vs_wrr

If they are not loaded, run the following commands:

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4
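The check and load steps above can be combined into a small helper. The following is a minimal sketch, not part of the original setup: the function name missing_ipvs_modules is hypothetical, and the module list assumes an older kernel that still ships nf_conntrack_ipv4.

```shell
# Hypothetical helper: reads `lsmod` output on stdin and prints every
# required IPVS module that is NOT present in it, one per line.
# Assumption: the required list is the one from the prerequisites above.
missing_ipvs_modules() (
    required="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"
    # The first column of lsmod output is the module name.
    loaded=$(awk '{ print $1 }')
    for mod in $required; do
        # -x matches whole lines, so ip_vs does not match ip_vs_rr.
        if ! printf '%s\n' "$loaded" | grep -qx -- "$mod"; then
            echo "$mod"
        fi
    done
)

# Usage on a node (commented out because modprobe requires root):
# for m in $(lsmod | missing_ipvs_modules); do modprobe -- "$m"; done
```

Reading lsmod output from stdin rather than calling lsmod inside the function keeps the helper easy to try against saved output.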

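1.2 Modify the kube-proxy startup parameters

Modify the kube-proxy startup parameters on every worker node.

Edit /etc/systemd/system/kube-proxy.service (for example with vi) and add --proxy-mode=ipvs \ to the startup arguments, then run the following commands: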

systemctl daemon-reload

service kube-proxy restart

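2 How kube-proxy works in IPVS mode

We again use the guestbook example from the previous article to analyze how traffic is forwarded in IPVS mode.

Because the IPVS DNAT hook is attached to the INPUT chain, the kernel must recognize the VIP as a local IP address. k8s achieves this by binding each Service cluster IP to the virtual interface kube-ipvs0. In the output below, every 172.16.x.x address is a VIP, that is, a cluster IP.

[root@haofan-test-2 system]# ip addr show dev kube-ipvs0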

35: kube-ipvs0: mtu 1500 qdisc noop state DOWN

link/ether c6:ce:f4:03:9d:bc brd ff:ff:ff:ff:ff:ff

inet 172.16.4.76/32 brd 172.16.4.76 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 172.16.121.30/32 brd 172.16.121.30 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 172.16.101.184/32 brd 172.16.101.184 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 172.16.25.31/32 brd 172.16.25.31 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 172.16.106.235/32 brd 172.16.106.235 scope global kube-ipvs0

valid_lft forever preferred_lft forever
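To compare the addresses bound to kube-ipvs0 against the cluster IPs of your Services, it can help to reduce the output above to a plain list. This is a minimal sketch; list_bound_vips is a hypothetical helper name, and it assumes the multi-line `ip addr show` format shown above.

```shell
# Hypothetical helper: reads `ip addr show dev kube-ipvs0` output on stdin
# and prints each bound IPv4 address on its own line, without the /32 suffix.
list_bound_vips() {
    awk '$1 == "inet" { split($2, parts, "/"); print parts[1] }'
}

# Usage on a node:
# ip addr show dev kube-ipvs0 | list_bound_vips
```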

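IPVS mode still uses iptables for packet filtering, SNAT, and masquerading. Specifically, it uses ipset to store the source or destination addresses of traffic that must be dropped or masqueraded, which keeps the number of iptables rules constant no matter how many Services exist.

IPVS mode still relies on iptables in the following cases:

- kube-proxy is started with --masquerade-all=true. In that case IPVS mode masquerades all traffic that accesses a Service cluster IP, which is the same behavior as iptables mode.

- A cluster CIDR is specified at kube-proxy startup. If kube-proxy is configured with the --cluster-cidr= parameter, IPVS mode masquerades all external traffic (traffic whose source is outside the cluster CIDR) that accesses a Service cluster IP, which is the same behavior as iptables mode.

- Services of type LoadBalancer

- Services of type NodePort

- Services with externalIPs specified

Below we analyze the traffic path and the iptables rules when a Pod is reached through a cluster IP and through a node IP.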

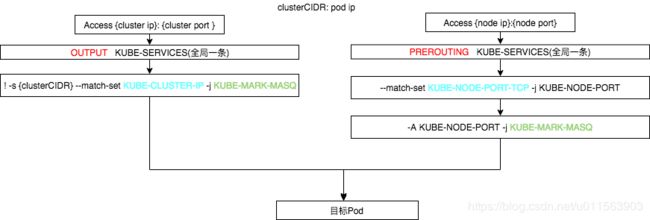

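2.1 Packets sent from inside the cluster reach a Pod through the cluster IP

The packet is sent by the local protocol stack, so it does not pass through PREROUTING. To masquerade external traffic that accesses a Service cluster IP, k8s can therefore only act on the OUTPUT chain.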

[root@haofan-test-2 system]# iptables -S -t nat | grep OUTPUT

-P OUTPUT ACCEPT

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

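The packet then enters the KUBE-SERVICES chain shown below. The rule that matters here is the second-to-last one, which is similar to the corresponding rule in iptables mode: packets whose source address is not in 172.18.0.0/16 and whose destination matches the KUBE-CLUSTER-IP set are marked so that they are SNATed later.

[root@haofan-test-2 system]# iptables -S -t nat | grep KUBE-SERVICES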

-N KUBE-SERVICES

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A KUBE-SERVICES -m comment --comment "Kubernetes nodeport TCP port for masquerade purpose" -m set --match-set KUBE-NODE-PORT-TCP dst -j KUBE-NODE-PORT

-A KUBE-SERVICES ! -s 172.18.0.0/16 -m comment --comment "Kubernetes service cluster ip + port for masquerade purpose" -m set --match-set KUBE-CLUSTER-IP dst,dst -j KUBE-MARK-MASQ

-A KUBE-SERVICES -m set --match-set KUBE-CLUSTER-IP dst,dst -j ACCEPT

[root@haofan-test-1 ~]# ipset -L KUBE-CLUSTER-IP

Name: KUBE-CLUSTER-IP

Type: hash:ip,port

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 824

References: 2

Number of entries: 11

Members:

172.16.4.76,tcp:8086

172.16.121.30,tcp:9093

172.16.0.1,tcp:443

172.16.106.235,tcp:6379

172.16.69.109,tcp:80

172.16.25.31,tcp:8081

172.16.121.30,tcp:9090

172.16.101.184,tcp:6379

172.16.0.10,udp:53

172.16.0.10,tcp:53

172.16.25.31,tcp:8080
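The decision made by the last two KUBE-SERVICES rules can be modeled in a few lines of shell. This is only an illustrative sketch of the matching logic, not how netfilter is implemented: the function names ip_to_int, in_cidr, and service_rule_action are hypothetical, and the set members are passed as arguments in the same ip,proto:port form that ipset -L prints.

```shell
# Convert a dotted-quad IPv4 address to an integer (runs in a subshell
# so the IFS change does not leak into the caller).
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed if IPv4 address $1 is inside CIDR $2 (e.g. 172.18.0.0/16).
in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    mask=$(( (4294967295 << (32 - $bits)) & 4294967295 ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Model of the two rules that reference KUBE-CLUSTER-IP.
# $1 = source IP, $2 = destination IP, $3 = proto:port, $4 = cluster CIDR,
# remaining arguments = set members such as 172.16.0.1,tcp:443
service_rule_action() {
    src=$1; dst=$2; port=$3; cidr=$4
    shift 4
    for member in "$@"; do
        if [ "$member" = "$dst,$port" ]; then
            if in_cidr "$src" "$cidr"; then
                # "! -s <cidr>" does not match, only the ACCEPT rule applies.
                echo "ACCEPT"
            else
                # Marking does not end traversal, so ACCEPT still follows.
                echo "KUBE-MARK-MASQ then ACCEPT"
            fi
            return 0
        fi
    done
    echo "no match"
}

service_rule_action 10.0.0.5 172.16.0.1 tcp:443 172.18.0.0/16 \
    172.16.0.1,tcp:443 172.16.0.10,udp:53
```

In this model a source such as 10.0.0.5, which is outside 172.18.0.0/16, is marked for masquerading, while a source inside that range is accepted without the mark.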

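2.2 Accessing a Pod from outside the cluster through a node IP

Traffic from outside the cluster that reaches a backend Pod through a node IP is necessarily handled in the PREROUTING chain.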

[root@haofan-test-2 system]# iptables -S -t nat | grep PREROUTING

-P PREROUTING ACCEPT

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

[root@haofan-test-2 system]# iptables -S -t nat | grep KUBE-SERVICES

-A KUBE-SERVICES -m comment --comment "Kubernetes nodeport TCP port for masquerade purpose" -m set --match-set KUBE-NODE-PORT-TCP dst -j KUBE-NODE-PORT

Packets that match the KUBE-NODE-PORT-TCP set are marked so that they are SNATed later.

[root@haofan-test-1 ~]# ipset -L KUBE-NODE-PORT-TCP

Name: KUBE-NODE-PORT-TCP

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8300

References: 1

Number of entries: 1

Members:

32214

[root@haofan-test-2 system]# iptables -S -t nat | grep KUBE-NODE-PORT

-N KUBE-NODE-PORT

-A KUBE-NODE-PORT -j KUBE-MARK-MASQ
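To check quickly whether a given port would hit the KUBE-NODE-PORT rule, the Members section of the ipset output can be extracted and searched. This is a minimal sketch under the assumption that the output has the layout shown above; ipset_members and nodeport_is_marked are hypothetical helper names.

```shell
# Hypothetical helper: reads `ipset -L <set>` output on stdin and prints
# the entries listed after the "Members:" line, skipping blank lines.
ipset_members() {
    awk 'found && NF { print $1 } /^Members:/ { found = 1 }'
}

# Hypothetical helper: succeeds if port $1 is a member of the set whose
# `ipset -L` output is read on stdin, i.e. the packet would be marked.
nodeport_is_marked() {
    ipset_members | grep -qx -- "$1"
}

# Usage on a node:
# ipset -L KUBE-NODE-PORT-TCP | nodeport_is_marked 32214 && echo "marked for SNAT"
```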

2.3 Summary

Refer

- https://kubernetes.io/zh/blog/2018/07/09/ipvs-based-in-cluster-load-balancing-deep-dive/

- https://juejin.im/entry/5b7e409ce51d4538b35c03df

- https://zhuanlan.zhihu.com/p/37230013