Kubernetes Cluster in Practice: Resource Limits (Memory, CPU, Namespace)

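1. Container resource limits in k8s

Kubernetes uses two kinds of settings, request and limit, to allocate resources.

request (resource request): the minimum amount a container needs. The scheduler places the Pod only on a node that can still provide this amount.

limit (resource limit): the maximum amount a container may use while it runs. For example, if memory usage grows during execution, the limit is the ceiling it must not exceed.

Resource types:

CPU is measured in cores, and memory is measured in bytes.

A container that requests 0.5 CPU asks for half of one core. You can also use the suffix m, which means one thousandth of a core: 100m CPU, 100 millicores, and 0.1 CPU are all the same amount.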
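The equivalence between fractional cores and the m suffix can be checked with a small conversion. This is only a sketch: the helper name `cpu_to_millicores` is made up for illustration, and it handles just the two notations mentioned here, not every quantity format Kubernetes accepts.

```python
from fractions import Fraction


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "0.5" or "100m" to millicores."""
    quantity = quantity.strip()
    if quantity.endswith("m"):
        # The "m" suffix already counts thousandths of a core.
        return int(quantity[:-1])
    # A plain number is whole or fractional cores; Fraction avoids float rounding.
    return int(Fraction(quantity) * 1000)


for q in ("0.5", "100m", "0.1", "2"):
    print(q, "=", cpu_to_millicores(q), "millicores")
```

Working in integer millicores makes it easy to compare requests and limits without floating-point surprises.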

Memory units:

k, M, G, T, P, E ## decimal suffixes, powers of 1000 (Kubernetes writes kilo as a lowercase k)

Ki, Mi, Gi, Ti, Pi, Ei ## binary suffixes, powers of 1024
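The difference between the two suffix families is easiest to see in bytes. The sketch below assumes integer quantities only (for example 100Mi, not 1.5Gi), and `memory_to_bytes` is a hypothetical helper name, not part of any Kubernetes client library.

```python
# Decimal (power-of-1000) and binary (power-of-1024) suffixes.
DECIMAL = {"k": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4, "P": 1000**5, "E": 1000**6}
BINARY = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3, "Ti": 1024**4, "Pi": 1024**5, "Ei": 1024**6}


def memory_to_bytes(quantity: str) -> int:
    """Convert an integer memory quantity such as "100Mi" or "200M" to bytes."""
    for suffix, factor in {**BINARY, **DECIMAL}.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: the value is already in bytes


for q in ("100M", "100Mi", "1G", "1Gi"):
    print(q, "=", memory_to_bytes(q), "bytes")
```

The gap grows with the size of the unit: 100Mi is about 5% larger than 100M, and 1Gi is about 7% larger than 1G.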

2.内存限制

实验

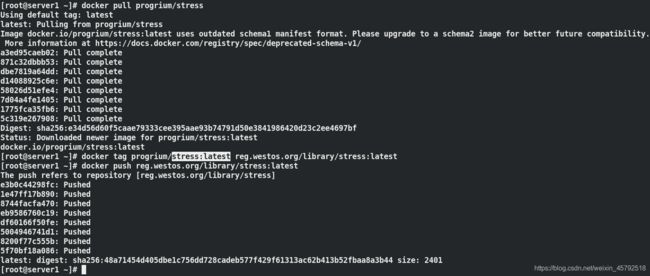

(1) 推送所需镜像stress到私有仓库,以便集群各节点拉取

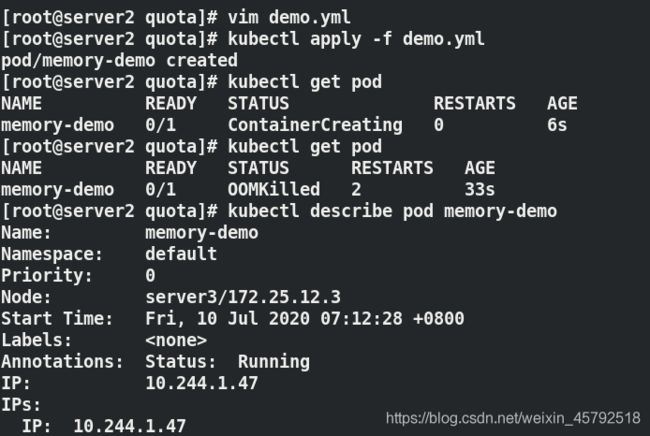

(2)做内存资源限制

启用一个占用200Mi的pod,设定内存资源上限100Mi,下限50Mi

应用yaml文件,pod调度失败

[root@server2 ~]# mkdir quota

[root@server2 ~]# cd quota

[root@server2 quota]# vim demo.yml

[root@server2 quota]# cat demo.yml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:                ## start one worker that allocates 200M of memory
    - --vm
    - "1"
    - --vm-bytes
    - 200M
    resources:
      requests:
        memory: 50Mi     ## request: 50Mi
      limits:
        memory: 100Mi    ## limit: 100Mi

[root@server2 quota]# kubectl apply -f demo.yml

pod/memory-demo created

[root@server2 quota]# kubectl get pod

NAME READY STATUS RESTARTS AGE

memory-demo 0/1 ContainerCreating 0 6s

[root@server2 quota]# kubectl get pod ##the container exceeds its memory limit and is OOM-killed

NAME READY STATUS RESTARTS AGE

memory-demo 0/1 OOMKilled 2 33s

[root@server2 quota]# kubectl describe pod memory-demo

Name: memory-demo

Namespace: default

Priority: 0

Node: server3/172.25.12.3

Start Time: Fri, 10 Jul 2020 07:12:28 +0800

Labels:

Annotations:

Status: Running

IP: 10.244.1.47

IPs:

IP: 10.244.1.47

Containers:

memory-demo:

Container ID: docker://4c3975e48d5fc09540e9d0b344ab2c4e70f5185f27514205b5d53e448efef587

Image: stress

Image ID: docker-pullable://stress@sha256:48a71454d405dbe1c756dd728cadeb577f429f61313ac62b413b52fbaa8a3b44

Port:

Host Port:

Args:

--vm

1

--vm-bytes

200M

State: Waiting

Reason: CrashLoopBackOff

Last State: Terminated

Reason: OOMKilled

Exit Code: 1

Started: Fri, 10 Jul 2020 07:13:20 +0800

Finished: Fri, 10 Jul 2020 07:13:20 +0800

Ready: False

Restart Count: 3

Limits:

memory: 100Mi

Requests:

memory: 50Mi

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-d8qhv (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

default-token-d8qhv:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-d8qhv

Optional: false

QoS Class: Burstable

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 69s default-scheduler Successfully assigned default/memory-demo to server3

Normal Pulling 16s (x4 over 68s) kubelet, server3 Pulling image "stress"

Normal Pulled 16s (x4 over 56s) kubelet, server3 Successfully pulled image "stress"

Normal Created 16s (x4 over 55s) kubelet, server3 Created container memory-demo

Normal Started 16s (x4 over 55s) kubelet, server3 Started container memory-demo

Warning BackOff 2s (x6 over 53s) kubelet, server3 Back-off restarting failed container

[root@server2 quota]#

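If a container exceeds its memory limit, it is terminated. If it is restartable, the kubelet restarts it, as with any other runtime failure.

If a container exceeds its memory request, its Pod may be evicted when the node runs short of memory.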
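The two rules can be summarized as a comparison of usage against the request and the limit. The function below is a simplified sketch with a hypothetical name, not the kubelet's actual logic. It uses 200 * 1000**2 bytes as a conservative reading of the 200M passed to stress; a binary reading would be larger still.

```python
MIB = 1024**2


def memory_outcome(usage: int, request: int, limit: int) -> str:
    """Classify a container by its memory usage in bytes (simplified)."""
    if usage > limit:
        return "OOMKilled"  # over the limit: the container is terminated
    if usage > request:
        return "eviction candidate"  # over the request: at risk under node memory pressure
    return "within request"


# Values from the experiment: request 50Mi, limit 100Mi, about 200M allocated.
print(memory_outcome(200 * 1000**2, 50 * MIB, 100 * MIB))
# A container sitting between request and limit is not killed, only less protected.
print(memory_outcome(80 * MIB, 50 * MIB, 100 * MIB))
```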

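3. CPU limits

CPU and memory limits can be set together.

Start a Pod that runs two CPU workers, with a CPU request of 5 cores and a CPU limit of 10 cores.

After the YAML file is applied, the Pod cannot be scheduled, because no node in the cluster can satisfy the request.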

[root@server2 quota]# kubectl delete -f demo.yml

pod "memory-demo" deleted

[root@server2 quota]# vim demo.yml

[root@server2 quota]# cat demo.yml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - -c
    - "2"
    resources:
      requests:
        cpu: 5           ## request: at least 5 CPUs
      limits:
        cpu: 10          ## limit: at most 10 CPUs

[root@server2 quota]# kubectl apply -f demo.yml

pod/memory-demo created

[root@server2 quota]# kubectl get pod

NAME READY STATUS RESTARTS AGE

memory-demo 0/1 Pending 0 10s

[root@server2 quota]# kubectl describe pod memory-demo

Name: memory-demo

Namespace: default

Priority: 0

Node:

Labels:

Annotations:

Status: Pending

IP:

IPs:

Containers:

memory-demo:

Image: stress

Port:

Host Port:

Args:

-c

2

Limits:

cpu: 10

Requests:

cpu: 5

Environment:

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-d8qhv (ro)

Conditions:

Type Status

PodScheduled False

Volumes:

default-token-d8qhv:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-d8qhv

Optional: false

QoS Class: Burstable

Node-Selectors:

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 30s (x2 over 30s) default-scheduler 0/3 nodes are available: 3 Insufficient cpu.

[root@server2 quota]#

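Scheduling fails because the requested CPU exceeds what any node in the cluster can provide. Unlike memory, a container that uses too much CPU is not killed; it is throttled at its limit.

Change the YAML file so that the CPU request is 1 core and apply it again; the Pod is scheduled and runs.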

[root@server2 quota]# kubectl delete -f demo.yml

pod "memory-demo" deleted

[root@server2 quota]# vim demo.yml

[root@server2 quota]# cat demo.yml

apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - -c
    - "2"
    resources:
      requests:
        cpu: 1
      limits:
        cpu: 10

[root@server2 quota]# kubectl apply -f demo.yml

pod/memory-demo created

[root@server2 quota]# kubectl get pod

NAME READY STATUS RESTARTS AGE

memory-demo 1/1 Running 0 13s

[root@server2 quota]#
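The two runs in this section differ only in the CPU request, which suggests that placement depends on the request and not on the limit. A minimal sketch of that fit check follows. The node names and capacities are invented for illustration (three nodes with 2 cores each), and the real scheduler considers many more factors.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_mcpu: int  # CPU the node can hand out, in millicores
    requested_mcpu: int    # sum of CPU requests of Pods already placed on it


def schedulable_nodes(request_mcpu: int, nodes: list) -> list:
    """Return the names of nodes whose unreserved CPU covers the request."""
    return [n.name for n in nodes if n.allocatable_mcpu - n.requested_mcpu >= request_mcpu]


# Hypothetical cluster, not the author's real nodes.
cluster = [Node("node-a", 2000, 600), Node("node-b", 2000, 300), Node("node-c", 2000, 300)]

print(schedulable_nodes(5000, cluster))  # request of 5 CPUs: no node fits, Pod stays Pending
print(schedulable_nodes(1000, cluster))  # request of 1 CPU: nodes fit, even with a limit of 10
```

The limit never enters the check, which is why a Pod with a limit of 10 CPUs can still run on a much smaller node.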

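4. Setting resource limits on a namespace

Put a LimitRange on the namespace. When demo.yml is applied, its CPU limit violates the namespace's maximum, so the request is rejected.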

[root@server2 quota]# vim limits.yml

[root@server2 quota]# cat limits.yml

apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:             ## limit applied to containers that do not set one
      cpu: 0.5
      memory: 512Mi
    defaultRequest:      ## request applied to containers that do not set one
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container

[root@server2 quota]# kubectl apply -f limits.yml

limitrange/limitrange-memory created

[root@server2 quota]# kubectl get limitranges

NAME CREATED AT

limitrange-memory 2020-07-09T23:43:02Z

[root@server2 quota]# kubectl describe limitranges limitrange-memory

Name: limitrange-memory

Namespace: default

Type        Resource  Min    Max  Default Request  Default Limit  Max Limit/Request Ratio
----        --------  ---    ---  ---------------  -------------  -----------------------
Container   memory    100Mi  1Gi  256Mi            512Mi          -
Container   cpu       100m   1    100m             500m           -

[root@server2 quota]# kubectl apply -f demo.yml

Error from server (Forbidden): error when creating "demo.yml": pods "memory-demo" is forbidden: maximum cpu usage per Container is 1, but limit is 10
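The rejection above comes from comparing the container's values with the min and max of the LimitRange. The sketch below illustrates that comparison only; the function name and message wording are invented, CPU is expressed in millicores and memory in MiB, and the real admission controller performs additional checks.

```python
def check_limit_range(requests: dict, limits: dict, minimum: dict, maximum: dict) -> list:
    """Return a list of violations of the min/max bounds (empty if none)."""
    violations = []
    for resource, floor in minimum.items():
        if resource in requests and requests[resource] < floor:
            violations.append(f"{resource} request {requests[resource]} is below min {floor}")
    for resource, ceiling in maximum.items():
        if resource in limits and limits[resource] > ceiling:
            violations.append(f"{resource} limit {limits[resource]} is above max {ceiling}")
    return violations


# Bounds from limits.yml: cpu 100m..1 core, memory 100Mi..1Gi.
minimum = {"cpu": 100, "memory": 100}
maximum = {"cpu": 1000, "memory": 1024}

# The Pod with request 1 CPU and limit 10 CPUs is rejected.
print(check_limit_range({"cpu": 1000}, {"cpu": 10000}, minimum, maximum))
# A Pod with request 0.2 CPU / 100Mi and limit 1 CPU / 300Mi passes.
print(check_limit_range({"cpu": 200, "memory": 100}, {"cpu": 1000, "memory": 300}, minimum, maximum))
```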

Change demo.yml so that it satisfies the namespace limits; the Pod is then scheduled successfully.

[root@server2 quota]# vim demo.yml

[root@server2 quota]# cat demo.yml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: myapp:v1
    resources:
      requests:
        memory: 100Mi
        cpu: 0.2
      limits:
        memory: 300Mi
        cpu: 1

[root@server2 quota]# kubectl apply -f demo.yml

pod/cpu-demo created

[root@server2 quota]# kubectl get pod

NAME READY STATUS RESTARTS AGE

cpu-demo 1/1 Running 0 8m50s

[root@server2 quota]#

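5. Setting resource quotas on a namespace

When several users or teams share a cluster with a fixed number of nodes, there is a concern that one team could use more than its fair share of resources. Resource quotas are a tool for administrators to address this concern.

A resource quota, defined by a ResourceQuota object, limits aggregate resource consumption per namespace. It can cap the number of objects of a given type in the namespace, and it can cap the total compute resources that Pods in the namespace may consume.

Resource quotas work as follows:

Different teams work in different namespaces. At present this is voluntary; a future version may enforce it through ACLs (access control lists).

The cluster administrator creates one or more ResourceQuota objects for each namespace.

When users create resources (Pods, Services, and so on) in the namespace, the quota system tracks the namespace's usage to ensure it does not exceed the hard limits defined in the ResourceQuota.

If creating or updating a resource violates a quota constraint, the request fails with HTTP 403 FORBIDDEN and a message explaining the constraint that would have been violated.

If a quota is enabled in a namespace for compute resources such as cpu and memory, users must specify requests and limits for those resources; otherwise the quota system rejects Pod creation.

Hint: use the LimitRanger admission controller to set default values for Pods that do not specify compute resource requirements.

Reference: https://kubernetes.io/docs/tasks/administer-cluster/quota-api-object/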
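The admission behaviour described above can be sketched as two checks: every resource covered by the quota must be specified, and current usage plus the new Pod must stay within the hard limits. This is an illustration under assumptions, not the API server's code: `admit` is a hypothetical name, CPU is in millicores, memory in MiB, and the figures are illustrative.

```python
REQUIRED = ("requests.cpu", "requests.memory", "limits.cpu", "limits.memory")


def admit(pod: dict, used: dict, hard: dict) -> tuple:
    """Decide whether a Pod fits the quota; returns (admitted, reason)."""
    missing = [key for key in REQUIRED if key in hard and key not in pod]
    if missing:
        return False, "must specify " + ",".join(missing)
    demand = dict(pod, pods=1)  # each Pod also counts against the object-count quota
    for key, cap in hard.items():
        if used.get(key, 0) + demand.get(key, 0) > cap:
            return False, f"exceeded quota for {key}"
    return True, "admitted"


hard = {"requests.cpu": 1000, "requests.memory": 1024,
        "limits.cpu": 2000, "limits.memory": 2048, "pods": 2}
pod = {"requests.cpu": 200, "requests.memory": 100, "limits.cpu": 1000, "limits.memory": 300}

print(admit({}, {}, hard))                  # no requests or limits set
print(admit(pod, {}, hard))                 # fits an empty namespace
print(admit(pod, {"pods": 2}, hard))        # Pod count already at the cap
```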

[root@server2 quota]# kubectl get limitranges

NAME CREATED AT

limitrange-memory 2020-07-09T23:43:02Z

[root@server2 quota]# cat demo.yml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: myapp:v1

[root@server2 quota]# kubectl apply -f demo.yml

pod/cpu-demo created

[root@server2 quota]# kubectl get pod

NAME READY STATUS RESTARTS AGE

cpu-demo 1/1 Running 0 12s

[root@server2 quota]# vim quota.yml ##set a resource quota for the namespace

[root@server2 quota]# cat quota.yml

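apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
spec:
  hard:
    requests.cpu: "1"        ## total CPU requests of all containers must not exceed 1 core
    requests.memory: 1Gi     ## total memory requests of all containers must not exceed 1 GiB
    limits.cpu: "2"          ## total CPU limits of all containers must not exceed 2 cores
    limits.memory: 2Gi       ## total memory limits of all containers must not exceed 2 GiB
    pods: "2"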

[root@server2 quota]# kubectl apply -f quota.yml

resourcequota/mem-cpu-demo created

[root@server2 quota]# kubectl describe resourcequotas

Name: mem-cpu-demo

Namespace: default

Resource Used Hard

-------- ---- ----

limits.cpu 500m 2

limits.memory 512Mi 2Gi

pods 1 2

requests.cpu 100m 1

requests.memory 256Mi 1Gi

[root@server2 quota]#
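The Used column above is not zero even though cpu-demo declares no resources: the LimitRange still present in the namespace filled in its defaults (500m / 512Mi as limits, 100m / 256Mi as requests). A rough sketch of that defaulting follows. The function name is hypothetical, CPU is in millicores and memory in MiB, and it also models the documented rule that a container with a limit but no request gets a request equal to its limit.

```python
def apply_defaults(container: dict, default_limit: dict, default_request: dict) -> dict:
    """Fill in missing requests and limits the way a LimitRange would (simplified)."""
    explicit_limits = container.get("limits", {})
    limits = {**default_limit, **explicit_limits}
    requests = dict(container.get("requests", {}))
    for resource in default_request:
        if resource not in requests:
            # An explicit limit without a request makes the request equal to the limit;
            # otherwise the LimitRange defaultRequest applies.
            requests[resource] = explicit_limits.get(resource, default_request[resource])
    return {"requests": requests, "limits": limits}


default_limit = {"cpu": 500, "memory": 512}
default_request = {"cpu": 100, "memory": 256}

# A container with no resources section, like cpu-demo at this step.
print(apply_defaults({}, default_limit, default_request))
```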

The ResourceQuota object created above adds the following constraint to the default namespace:

Every container must set a memory request, a memory limit, a CPU request, and a CPU limit.

[root@server2 quota]# kubectl delete -f limits.yml ##delete the namespace LimitRange

limitrange "limitrange-memory" deleted

[root@server2 quota]# kubectl delete -f demo.yml ##delete the Pod

pod "cpu-demo" deleted

[root@server2 quota]# kubectl apply -f demo.yml ##with the quota in place, containers must set requests and limits, otherwise the Pod is rejected

Error from server (Forbidden): error when creating "demo.yml": pods "cpu-demo" is forbidden: failed quota: mem-cpu-demo: must specify limits.cpu,limits.memory,requests.cpu,requests.memory

[root@server2 quota]# kubectl describe resourcequotas

Name: mem-cpu-demo

Namespace: default

Resource Used Hard

-------- ---- ----

limits.cpu 0 2

limits.memory 0 2Gi

pods 0 2

requests.cpu 0 1

requests.memory 0 1Gi

Edit demo.yml again and set requests and limits

[root@server2 quota]# vim demo.yml

[root@server2 quota]# cat demo.yml

apiVersion: v1
kind: Pod
metadata:
  name: cpu-demo
spec:
  containers:
  - name: cpu-demo
    image: myapp:v1
    resources:
      requests:
        memory: 100Mi
        cpu: 0.2
      limits:
        memory: 300Mi
        cpu: 1

[root@server2 quota]# kubectl apply -f demo.yml

pod/cpu-demo created

[root@server2 quota]# kubectl describe resourcequotas

Name: mem-cpu-demo

Namespace: default

Resource Used Hard

-------- ---- ----

limits.cpu 1 2

limits.memory 300Mi 2Gi

pods 1 2

requests.cpu 200m 1

requests.memory 100Mi 1Gi

[root@server2 quota]#

Configure a Pod quota for the namespace

[root@server2 quota]# vim quota.yml

[root@server2 quota]# cat quota.yml

apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi
    pods: "2"                ## at most 2 Pods may be created

[root@server2 quota]# kubectl apply -f quota.yml

resourcequota/mem-cpu-demo unchanged

[root@server2 quota]# kubectl describe resourcequotas

Name: mem-cpu-demo

Namespace: default

Resource Used Hard

-------- ---- ----

limits.cpu 1 2

limits.memory 300Mi 2Gi

pods 1 2

requests.cpu 200m 1

requests.memory 100Mi 1Gi

[root@server2 quota]# kubectl apply -f limits.yml

limitrange/limitrange-memory created

[root@server2 quota]# kubectl get limitranges

NAME CREATED AT

limitrange-memory 2020-07-10T00:19:18Z

[root@server2 quota]# kubectl run demo-1 --image=myapp:v1

pod/demo-1 created

[root@server2 quota]# kubectl get pod ##two Pods are now running

NAME READY STATUS RESTARTS AGE

cpu-demo 1/1 Running 0 7m7s

demo-1 1/1 Running 0 11s

[root@server2 quota]# kubectl run demo-2 --image=myapp:v1 ##another Pod cannot be created because the Pod quota has been reached

Error from server (Forbidden): pods "demo-2" is forbidden: exceeded quota: mem-cpu-demo, requested: pods=1, used: pods=2, limited: pods=2

[root@server2 quota]# kubectl delete pod demo-1 ##delete one of the Pods to make room for the new one

pod "demo-1" deleted

[root@server2 quota]# kubectl get pod

NAME READY STATUS RESTARTS AGE

cpu-demo 1/1 Running 0 8m14s

[root@server2 quota]# kubectl run demo-2 --image=myapp:v1

pod/demo-2 created

[root@server2 quota]# kubectl get pod

NAME READY STATUS RESTARTS AGE

cpu-demo 1/1 Running 0 8m19s

demo-2 1/1 Running 0 2s

[root@server2 quota]#