【kubernetes/k8s source code analysis】 kubernetes csi external-provisioner source code analysis

Git Repository: https://github.com/kubernetes-csi/external-provisioner

| Latest stable release | Branch | Min CSI Version | Max CSI Version | Container Image | Min K8s Version | Max K8s Version | Recommended K8s Version |
|---|---|---|---|---|---|---|---|
| external-provisioner v1.3.0 | release-1.3 | v1.0.0 | - | quay.io/k8scsi/csi-provisioner:v1.3.0 | v1.13 | - | v1.15 |
| external-provisioner v1.2.0 | release-1.2 | v1.0.0 | - | quay.io/k8scsi/csi-provisioner:v1.2.0 | v1.13 | - | v1.14 |
| external-provisioner v1.0.1 | release-1.0 | v1.0.0 | - | quay.io/k8scsi/csi-provisioner:v1.0.1 | v1.13 | - | v1.13 |
| external-provisioner v0.4.2 | release-0.4 | v0.3.0 | v0.3.0 | quay.io/k8scsi/csi-provisioner:v0.4.2 | v1.10 | - | v1.10 |

Check --help output for alpha/beta features in each release.

| Feature | Status | Default | Description | Provisioner Feature Gate Required |
|---|---|---|---|---|
| Topology | Beta | Off | Topology aware dynamic provisioning (requires kubelet 1.14 on nodes). | Yes |
| Snapshots | Alpha | On | Snapshots and Restore. | No |
| CSIMigration | Alpha | On | Migrating in-tree volume plugins to CSI. | No |
| Cloning | Alpha | On | Cloning. | No |

Description

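The external-provisioner sidecar container watches PersistentVolumeClaim objects through the API server.

It calls CreateVolume on the specified plugin to provision a volume; this requires the plugin's controller service to advertise the CREATE_DELETE_VOLUME capability.

Once the volume has been created successfully, a PersistentVolume (PV) object representing it is returned.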
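The CREATE_DELETE_VOLUME precondition is a simple set-membership test on the capabilities the driver reports through ControllerGetCapabilities. A minimal sketch, assuming plain strings instead of the CSI protobuf enum; `ControllerCapability` and `canProvision` are hypothetical names, not part of the external-provisioner API:

```go
package main

import "fmt"

// ControllerCapability is a hypothetical string stand-in for the CSI
// ControllerServiceCapability RPC type enum.
type ControllerCapability string

const (
	CreateDeleteVolume     ControllerCapability = "CREATE_DELETE_VOLUME"
	PublishUnpublishVolume ControllerCapability = "PUBLISH_UNPUBLISH_VOLUME"
)

// canProvision reports whether a controller advertising caps may be sent
// CreateVolume/DeleteVolume requests.
func canProvision(caps map[ControllerCapability]bool) error {
	if !caps[CreateDeleteVolume] {
		return fmt.Errorf("controller does not advertise %s", CreateDeleteVolume)
	}
	return nil
}

func main() {
	caps := map[ControllerCapability]bool{PublishUnpublishVolume: true}
	fmt.Println(canProvision(caps)) // controller does not advertise CREATE_DELETE_VOLUME
	caps[CreateDeleteVolume] = true
	fmt.Println(canProvision(caps)) // <nil>
}
```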

The overall flow is divided into three phases: Provision, Attach and Mount.

```
   CreateVolume +------------+ DeleteVolume
 +------------->|  CREATED   +--------------+
 |              +---+----^---+              |
 |       Controller |    | Controller       v
+++         Publish |    | Unpublish       +++
|X|          Volume |    | Volume          | |
+-+             +---v----+---+             +-+
                | NODE_READY |
                +---+----^---+
               Node |    | Node
              Stage |    | Unstage
             Volume |    | Volume
                +---v----+---+
                |  VOL_READY |
                +---+----^---+
               Node |    | Node
            Publish |    | Unpublish
             Volume |    | Volume
                +---v----+---+
                | PUBLISHED  |
                +------------+
```

The lifecycle of a dynamically provisioned volume, from creation to destruction, when the Node Plugin advertises the STAGE_UNSTAGE_VOLUME capability.
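To make the diagram concrete, here is a small state machine that encodes its arrows. The names (`VolumeState`, `apply`, and an `ABSENT` state standing for the "X" boxes) are my own, not from the CSI spec or external-provisioner. Of these arrows, external-provisioner only drives CreateVolume and DeleteVolume; ControllerPublish/Unpublish are issued by external-attacher and the Node* calls by kubelet.

```go
package main

import "fmt"

// VolumeState names a box in the lifecycle diagram.
type VolumeState string

const (
	Absent    VolumeState = "ABSENT" // the "X" boxes: no volume exists
	Created   VolumeState = "CREATED"
	NodeReady VolumeState = "NODE_READY"
	VolReady  VolumeState = "VOL_READY"
	Published VolumeState = "PUBLISHED"
)

// edge is a (current state, RPC) pair.
type edge struct {
	from VolumeState
	rpc  string
}

// transitions encodes every arrow in the diagram.
var transitions = map[edge]VolumeState{
	{Absent, "CreateVolume"}:                 Created,
	{Created, "DeleteVolume"}:                Absent,
	{Created, "ControllerPublishVolume"}:     NodeReady,
	{NodeReady, "ControllerUnpublishVolume"}: Created,
	{NodeReady, "NodeStageVolume"}:           VolReady,
	{VolReady, "NodeUnstageVolume"}:          NodeReady,
	{VolReady, "NodePublishVolume"}:          Published,
	{Published, "NodeUnpublishVolume"}:       VolReady,
}

// apply returns the state reached by calling rpc in state s, or an error
// if the diagram has no such arrow.
func apply(s VolumeState, rpc string) (VolumeState, error) {
	next, ok := transitions[edge{s, rpc}]
	if !ok {
		return s, fmt.Errorf("%s is not valid in state %s", rpc, s)
	}
	return next, nil
}

func main() {
	s := Absent
	for _, rpc := range []string{"CreateVolume", "ControllerPublishVolume", "NodeStageVolume", "NodePublishVolume"} {
		var err error
		if s, err = apply(s, rpc); err != nil {
			panic(err)
		}
	}
	fmt.Println(s) // PUBLISHED
	_, err := apply(s, "DeleteVolume")
	fmt.Println(err) // DeleteVolume is not valid in state PUBLISHED
}
```

The last call shows why a volume must be unpublished and unstaged before DeleteVolume is legal in this model.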

external-provisioner exists to serve PersistentVolumeClaims. A provisioner generally implements the Provision and Delete interface methods; its main job is to create a PV for a PVC.

The PersistentVolume controller in kube-controller-manager adds the volume.beta.kubernetes.io/storage-provisioner annotation to the PVC. The code is in pkg/controller/volume/persistentvolume/pv_controller_base.go:

func (ctrl *PersistentVolumeController) setClaimProvisioner

For example: volume.beta.kubernetes.io/storage-provisioner: rbd.csi.ceph.com

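When external-provisioner sees a PVC whose annotation carries its own name, it reads the parameters from the StorageClass and calls the CSI driver's CreateVolume based on the StorageClass and the PVC. Once the volume has been created, it creates a PV bound to the corresponding PVC (through the PV's claimRef).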

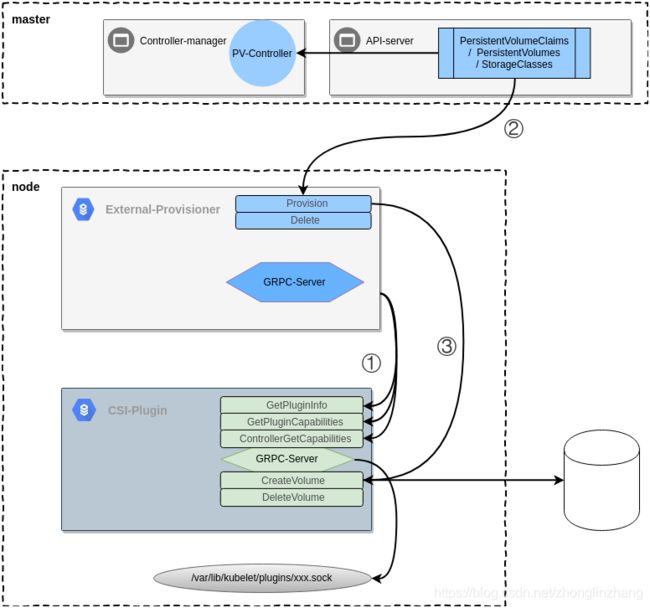

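① On startup, external-provisioner establishes a gRPC connection to the CSI plugin, sends GetPluginInfo to obtain the driver name, calls GetPluginCapabilities to obtain the plugin's capabilities, and calls ControllerGetCapabilities to obtain the controller's capabilities.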

② When a PVC that specifies a StorageClass is created, the PV controller adds the annotation volume.beta.kubernetes.io/storage-provisioner to it. The PV controller itself only provisions volumes for in-tree plugins. external-provisioner starts a controller that watches PVs, PVCs and StorageClasses, and handles a PVC only if the value of its volume.beta.kubernetes.io/storage-provisioner annotation is its own driver name.

③ It calls Provision or Delete, which are translated into CreateVolume or DeleteVolume calls on the CSI plugin.

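Between the provisioner and the plugin: for Provision, a gRPC CreateVolumeRequest is sent to the plugin, which runs in the same pod as the provisioner (in the deployment example at the end, the two containers share the socket through an emptyDir volume).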

Startup command:

```shell
csi-provisioner --csi-address=unix:///csi/csi-provisioner.sock --v=5
```

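- --csi-address: address of the CSI driver socket; defaults to /run/csi/socket
- --kubeconfig: Kubernetes config file, including certificates, the master address, etc.
- --leader-election-type: defaults to endpoints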
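The three flags above can be modeled with the standard `flag` package. This is a sketch that only mirrors the names and defaults given in the list; the help strings are paraphrased and `newFlagSet` is a hypothetical helper, not the code in main.go.

```go
package main

import (
	"flag"
	"fmt"
)

// newFlagSet declares the three flags described above with the same defaults.
func newFlagSet() (*flag.FlagSet, *string, *string, *string) {
	fs := flag.NewFlagSet("csi-provisioner", flag.ContinueOnError)
	csiAddress := fs.String("csi-address", "/run/csi/socket", "Address of the CSI driver socket.")
	kubeconfig := fs.String("kubeconfig", "", "Path to a kubeconfig file.")
	leType := fs.String("leader-election-type", "endpoints", "Leader election lock type: endpoints or leases.")
	return fs, csiAddress, kubeconfig, leType
}

func main() {
	fs, addr, kc, le := newFlagSet()
	// Go's flag package accepts both -flag and --flag forms.
	if err := fs.Parse([]string{"--csi-address=unix:///csi/csi-provisioner.sock"}); err != nil {
		panic(err)
	}
	fmt.Println(*addr, *kc == "", *le) // unix:///csi/csi-provisioner.sock true endpoints
}
```

Flags not given on the command line keep their defaults, which is why `--leader-election-type` still reads `endpoints` here.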

1. main function

1.1 Get a clientset: create the Kubernetes API client

This is straightforward: the provisioner needs to watch PVC, PV and StorageClass objects through the API server.
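The config-selection branches in the excerpt that follows reduce to a small decision function. In this sketch, `configSource` is a hypothetical helper that returns a label instead of a `*rest.Config` so it runs without client-go, and the environment lookup is injected so it can be tested:

```go
package main

import (
	"fmt"
	"os"
)

// configSource mirrors the branch structure of the excerpt: KUBECONFIG from
// the environment overrides --kubeconfig; either --master or a kubeconfig
// path selects BuildConfigFromFlags; otherwise the in-cluster config is used.
func configSource(master, kubeconfigFlag string, getenv func(string) string) (string, string) {
	kubeconfig := kubeconfigFlag
	if env := getenv("KUBECONFIG"); env != "" {
		kubeconfig = env
	}
	if master != "" || kubeconfig != "" {
		return "BuildConfigFromFlags", kubeconfig
	}
	return "InClusterConfig", ""
}

func main() {
	// The result depends on the current environment.
	src, path := configSource("", "", os.Getenv)
	fmt.Println(src, path)
}
```

Inside a pod with no flags and no KUBECONFIG set, this falls through to the in-cluster branch, which is the normal sidecar deployment.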

```go
// get the KUBECONFIG from env if specified (useful for local/debug cluster)
kubeconfigEnv := os.Getenv("KUBECONFIG")
if kubeconfigEnv != "" {
	klog.Infof("Found KUBECONFIG environment variable set, using that..")
	kubeconfig = &kubeconfigEnv
}

if *master != "" || *kubeconfig != "" {
	klog.Infof("Either master or kubeconfig specified. building kube config from that..")
	config, err = clientcmd.BuildConfigFromFlags(*master, *kubeconfig)
} else {
	klog.Infof("Building kube configs for running in cluster...")
	config, err = rest.InClusterConfig()
}
if err != nil {
	klog.Fatalf("Failed to create config: %v", err)
}

clientset, err := kubernetes.NewForConfig(config)
if err != nil {
	klog.Fatalf("Failed to create client: %v", err)
}
```

1.2 Establish a gRPC client to the CSI driver

```go
grpcClient, err := ctrl.Connect(*csiEndpoint)
if err != nil {
	klog.Error(err.Error())
	os.Exit(1)
}

err = ctrl.Probe(grpcClient, *operationTimeout)
if err != nil {
	klog.Error(err.Error())
	os.Exit(1)
}
```

1.3 Get the plugin capabilities (GetPluginCapabilities on the Identity service) and the controller capabilities

```go
pluginCapabilities, controllerCapabilities, err := ctrl.GetDriverCapabilities(grpcClient, *operationTimeout)
if err != nil {
	klog.Fatalf("Error getting CSI driver capabilities: %s", err)
}
```

1.4 If the plugin supports migration from an in-tree plugin

```go
supportsMigrationFromInTreePluginName := ""
if csitranslationlib.IsMigratedCSIDriverByName(provisionerName) {
	supportsMigrationFromInTreePluginName, err = csitranslationlib.GetInTreeNameFromCSIName(provisionerName)
	if err != nil {
		klog.Fatalf("Failed to get InTree plugin name for migrated CSI plugin %s: %v", provisionerName, err)
	}
	klog.V(2).Infof("Supports migration from in-tree plugin: %s", supportsMigrationFromInTreePluginName)
	// Also handle PVCs annotated with the in-tree plugin name.
	provisionerOptions = append(provisionerOptions, controller.AdditionalProvisionerNames([]string{supportsMigrationFromInTreePluginName}))
}
```

1.4.1 Which plugins support migration

These are implemented under k8s.io/csi-translation-lib:
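The two lookups used in 1.4 amount to a name mapping between CSI drivers and in-tree plugins. Below is a stripped-down model with a hand-written map; `isMigratedCSIDriverByName` and `getInTreeNameFromCSIName` are hypothetical stand-ins for the csitranslationlib functions, which derive the mapping from the translator objects listed next rather than from a literal map.

```go
package main

import "fmt"

// csiToInTree maps CSI driver names to the in-tree plugin names they replace
// (a simplified, hand-written copy for illustration).
var csiToInTree = map[string]string{
	"pd.csi.storage.gke.io":    "kubernetes.io/gce-pd",
	"ebs.csi.aws.com":          "kubernetes.io/aws-ebs",
	"cinder.csi.openstack.org": "kubernetes.io/cinder",
	"disk.csi.azure.com":       "kubernetes.io/azure-disk",
	"file.csi.azure.com":       "kubernetes.io/azure-file",
}

// isMigratedCSIDriverByName reports whether the CSI driver replaces an in-tree plugin.
func isMigratedCSIDriverByName(csiName string) bool {
	_, ok := csiToInTree[csiName]
	return ok
}

// getInTreeNameFromCSIName returns the in-tree plugin name for a migrated driver.
func getInTreeNameFromCSIName(csiName string) (string, error) {
	if name, ok := csiToInTree[csiName]; ok {
		return name, nil
	}
	return "", fmt.Errorf("could not find in-tree plugin name for CSI driver %q", csiName)
}

func main() {
	name, err := getInTreeNameFromCSIName("ebs.csi.aws.com")
	fmt.Println(name, err)                                        // kubernetes.io/aws-ebs <nil>
	fmt.Println(isMigratedCSIDriverByName("hostpath.csi.k8s.io")) // false
}
```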

```go
inTreePlugins = map[string]plugins.InTreePlugin{
	plugins.GCEPDDriverName:     plugins.NewGCEPersistentDiskCSITranslator(),
	plugins.AWSEBSDriverName:    plugins.NewAWSElasticBlockStoreCSITranslator(),
	plugins.CinderDriverName:    plugins.NewOpenStackCinderCSITranslator(),
	plugins.AzureDiskDriverName: plugins.NewAzureDiskCSITranslator(),
	plugins.AzureFileDriverName: plugins.NewAzureFileCSITranslator(),
}
```

1.5 Create the CSIProvisioner object, which implements the following Provisioner interface:

- Provision(VolumeOptions) (*v1.PersistentVolume, error)

- Delete(*v1.PersistentVolume) error
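To see this two-method contract in isolation, here is a self-contained sketch with an in-memory fake. All types (`PersistentVolumeClaim`, `PersistentVolume`, `ProvisionOptions`, `fakeProvisioner`) are simplified, hypothetical stand-ins, not the real k8s.io/api or sig-storage-lib-external-provisioner types; the real csiProvisioner sends CreateVolume/DeleteVolume to the driver instead of touching a map.

```go
package main

import (
	"errors"
	"fmt"
)

type PersistentVolumeClaim struct{ Name, Namespace string }

type PersistentVolume struct {
	Name         string
	Driver       string // spec.csi.driver
	VolumeHandle string // spec.csi.volumeHandle
	ClaimRef     string // namespace/name of the PVC this PV is bound to
}

type ProvisionOptions struct {
	PVC    *PersistentVolumeClaim
	PVName string
}

// Provisioner has the same two-method shape as the interface above.
type Provisioner interface {
	Provision(ProvisionOptions) (*PersistentVolume, error)
	Delete(*PersistentVolume) error
}

// fakeProvisioner "creates" volumes in memory instead of calling a CSI driver.
type fakeProvisioner struct {
	driver  string
	volumes map[string]bool
}

func (f *fakeProvisioner) Provision(o ProvisionOptions) (*PersistentVolume, error) {
	if o.PVC == nil {
		return nil, errors.New("nil PVC")
	}
	handle := "vol-" + o.PVName
	f.volumes[handle] = true
	return &PersistentVolume{
		Name: o.PVName, Driver: f.driver, VolumeHandle: handle,
		ClaimRef: o.PVC.Namespace + "/" + o.PVC.Name,
	}, nil
}

func (f *fakeProvisioner) Delete(pv *PersistentVolume) error {
	if !f.volumes[pv.VolumeHandle] {
		return fmt.Errorf("volume %s not found", pv.VolumeHandle)
	}
	delete(f.volumes, pv.VolumeHandle)
	return nil
}

func main() {
	var p Provisioner = &fakeProvisioner{driver: "hostpath.csi.k8s.io", volumes: map[string]bool{}}
	pvc := &PersistentVolumeClaim{Name: "data", Namespace: "default"}
	pv, err := p.Provision(ProvisionOptions{PVC: pvc, PVName: "pvc-1234"})
	fmt.Println(pv.Name, pv.ClaimRef, err) // pvc-1234 default/data <nil>
	fmt.Println(p.Delete(pv))              // <nil>
}
```

The returned PV carries the driver name and a volume handle (the real code takes the handle from the CreateVolume response) plus a claimRef pointing at the PVC, which is what lets the PV controller bind the two.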

```go
// Create the provisioner: it implements the Provisioner interface expected by
// the controller
csiProvisioner := ctrl.NewCSIProvisioner(clientset, *operationTimeout, identity, *volumeNamePrefix, *volumeNameUUIDLength, grpcClient, snapClient, provisionerName, pluginCapabilities, controllerCapabilities, supportsMigrationFromInTreePluginName)
provisionController = controller.NewProvisionController(
	clientset,
	provisionerName,
	csiProvisioner,
	serverVersion.GitVersion,
	provisionerOptions...,
)
```

1.6 If --enable-leader-election is set

The lock type is taken from --leader-election-type, which defaults to endpoints if not specified:

```go
var le leaderElection
if *leaderElectionType == "endpoints" {
	klog.Warning("The 'endpoints' leader election type is deprecated and will be removed in a future release. Use '--leader-election-type=leases' instead.")
	le = leaderelection.NewLeaderElectionWithEndpoints(clientset, lockName, run)
} else if *leaderElectionType == "leases" {
	le = leaderelection.NewLeaderElection(clientset, lockName, run)
} else {
	klog.Error("--leader-election-type must be either 'endpoints' or 'lease'")
	os.Exit(1)
}
```
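The branch above is a three-way switch on the flag value. A minimal model of it, where `lockKind` is a hypothetical helper returning the kind of object that holds the lock (Endpoints for `endpoints`, a coordination Lease for `leases`):

```go
package main

import (
	"errors"
	"fmt"
)

// lockKind maps the --leader-election-type value to the lock object kind.
func lockKind(leaderElectionType string) (string, error) {
	switch leaderElectionType {
	case "endpoints": // flag default; deprecated
		return "Endpoints", nil
	case "leases":
		return "Lease", nil
	default:
		return "", errors.New("--leader-election-type must be either 'endpoints' or 'leases'")
	}
}

func main() {
	for _, t := range []string{"endpoints", "leases", "lease"} {
		kind, err := lockKind(t)
		fmt.Printf("%s -> %q, err=%v\n", t, kind, err)
	}
}
```

Note that the error message in the excerpt says 'lease', but only the value `leases` is accepted; `lease` falls into the error branch, as the third loop iteration shows.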

2. Run function

Path: vendor/sigs.k8s.io/sig-storage-lib-external-provisioner/controller/controller.go

2.1 runClaimWorker and runVolumeWorker

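```go
// Start ctrl.threadiness workers of each kind. context.TODO().Done() returns
// a nil channel, so wait.Until never stops these loops.
for i := 0; i < ctrl.threadiness; i++ {
	go wait.Until(ctrl.runClaimWorker, time.Second, context.TODO().Done())
	go wait.Until(ctrl.runVolumeWorker, time.Second, context.TODO().Done())
}
```

2.2 The runClaimWorker function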

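runClaimWorker takes PVCs off the claim work queue and syncs them. shouldProvision decides whether a PVC needs provisioning, mainly by checking whether the PVC carries the annotation with this provisioner's name, e.g. volume.beta.kubernetes.io/storage-provisioner: hostpath.csi.k8s.io.

provisionClaimOperation then calls the provisioner's Provision function.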

```go
// syncClaim checks if the claim should have a volume provisioned for it and
// provisions one if so.
func (ctrl *ProvisionController) syncClaim(obj interface{}) error {
	claim, ok := obj.(*v1.PersistentVolumeClaim)
	if !ok {
		return fmt.Errorf("expected claim but got %+v", obj)
	}

	if ctrl.shouldProvision(claim) {
		startTime := time.Now()
		err := ctrl.provisionClaimOperation(claim)
		ctrl.updateProvisionStats(claim, err, startTime)
		return err
	}
	return nil
}
```
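The queue-plus-workers shape of runClaimWorker and syncClaim can be modeled with the standard library alone. In this sketch a channel replaces the client-go work queue, `claim` is a hypothetical reduced PVC, and `shouldProvision` keeps only two of the real checks (the claim is not yet bound, and its storage-provisioner annotation names this provisioner); the other checks are omitted.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

const annStorageProvisioner = "volume.beta.kubernetes.io/storage-provisioner"

type claim struct {
	Name        string
	VolumeName  string // non-empty once the claim is bound
	Annotations map[string]string
}

// shouldProvision: unbound and annotated with our provisioner name.
func shouldProvision(c claim, provisionerName string) bool {
	if c.VolumeName != "" {
		return false
	}
	return c.Annotations[annStorageProvisioner] == provisionerName
}

// runClaimWorkers drains queue with threadiness goroutines and returns the
// names of the claims that would be provisioned, sorted for stable output.
func runClaimWorkers(queue []claim, threadiness int, provisionerName string) []string {
	if threadiness < 1 {
		threadiness = 1
	}
	ch := make(chan claim)
	var (
		mu          sync.Mutex
		wg          sync.WaitGroup
		provisioned []string
	)
	for i := 0; i < threadiness; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for c := range ch {
				if shouldProvision(c, provisionerName) {
					mu.Lock()
					provisioned = append(provisioned, c.Name) // stands in for provisionClaimOperation
					mu.Unlock()
				}
			}
		}()
	}
	for _, c := range queue {
		ch <- c
	}
	close(ch)
	wg.Wait()
	sort.Strings(provisioned)
	return provisioned
}

func main() {
	mine := map[string]string{annStorageProvisioner: "hostpath.csi.k8s.io"}
	other := map[string]string{annStorageProvisioner: "rbd.csi.ceph.com"}
	queue := []claim{
		{Name: "a", Annotations: mine},
		{Name: "b", Annotations: other},
		{Name: "c", Annotations: mine, VolumeName: "pv-c"},
		{Name: "d", Annotations: mine},
	}
	fmt.Println(runClaimWorkers(queue, 2, "hostpath.csi.k8s.io")) // [a d]
}
```

Claim `b` belongs to another driver and `c` is already bound, so only `a` and `d` reach the provisioning step.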

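3. Provision function

csiProvisioner implements the Provisioner interface. The surrounding controller logic is the same as in the controller inside kube-controller-manager, so it is not analyzed again here; let's go straight to the key parts.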

```go
func (p *csiProvisioner) Provision(options controller.ProvisionOptions) (*v1.PersistentVolume, error) {
	// The controller should call ProvisionExt() instead, but just in case...
	pv, _, err := p.ProvisionExt(options)
	return pv, err
}
```

Provision simply delegates to ProvisionExt; the excerpts below are taken from ProvisionExt.

3.1 Validation

```go
if options.StorageClass == nil {
	return nil, controller.ProvisioningFinished, errors.New("storage class was nil")
}

// Only handle PVCs whose storage-provisioner annotation names this driver.
if options.PVC.Annotations[annStorageProvisioner] != p.driverName {
	return nil, controller.ProvisioningFinished, &controller.IgnoredError{
		Reason: fmt.Sprintf("PVC annotated with external-provisioner name %s does not match provisioner driver name %s. This could mean the PVC is not migrated",
			options.PVC.Annotations[annStorageProvisioner],
			p.driverName),
	}
}
```

3.2 If the PVC specifies a data source: snapshot or clone
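In outline, the excerpt below turns the PVC's spec.dataSource into a pair of capability flags. Here is a standalone model of that decision; `dataSource` and `capabilitiesFor` are hypothetical names. Unlike the excerpt, which dereferences `DataSource.APIGroup` directly, this sketch treats a nil APIGroup as an error instead of panicking.

```go
package main

import "fmt"

const (
	snapshotKind     = "VolumeSnapshot"
	snapshotAPIGroup = "snapshot.storage.k8s.io"
	pvcKind          = "PersistentVolumeClaim"
)

// dataSource mirrors the fields of a PVC's spec.dataSource used here.
type dataSource struct {
	APIGroup *string
	Kind     string
	Name     string
}

type requiredCapabilities struct {
	snapshot, clone bool
}

// capabilitiesFor decides whether restoring from a snapshot or cloning a PVC
// is being requested.
func capabilitiesFor(ds *dataSource) (requiredCapabilities, error) {
	var rc requiredCapabilities
	if ds == nil {
		return rc, nil // plain provisioning
	}
	if ds.Name == "" {
		return rc, fmt.Errorf("data source name is empty")
	}
	switch ds.Kind {
	case snapshotKind:
		if ds.APIGroup == nil || *ds.APIGroup != snapshotAPIGroup {
			return rc, fmt.Errorf("data source must belong to API group %s", snapshotAPIGroup)
		}
		rc.snapshot = true
	case pvcKind:
		rc.clone = true
	}
	// Any other kind is ignored, as in the excerpt.
	return rc, nil
}

func main() {
	group := snapshotAPIGroup
	rc, err := capabilitiesFor(&dataSource{APIGroup: &group, Kind: snapshotKind, Name: "snap-1"})
	fmt.Printf("%+v %v\n", rc, err) // {snapshot:true clone:false} <nil>
}
```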

```go
// Make sure the plugin is capable of fulfilling the requested options
rc := &requiredCapabilities{}
if options.PVC.Spec.DataSource != nil {
	// PVC.Spec.DataSource.Name is the name of the VolumeSnapshot API object
	if options.PVC.Spec.DataSource.Name == "" {
		return nil, controller.ProvisioningFinished, fmt.Errorf("the PVC source not found for PVC %s", options.PVC.Name)
	}

	switch options.PVC.Spec.DataSource.Kind {
	case snapshotKind:
		if *(options.PVC.Spec.DataSource.APIGroup) != snapshotAPIGroup {
			return nil, controller.ProvisioningFinished, fmt.Errorf("the PVC source does not belong to the right APIGroup. Expected %s, Got %s", snapshotAPIGroup, *(options.PVC.Spec.DataSource.APIGroup))
		}
		rc.snapshot = true
	case pvcKind:
		rc.clone = true
	default:
		klog.Infof("Unsupported DataSource specified (%s), the provisioner won't act on this request", options.PVC.Spec.DataSource.Kind)
	}
}
```

3.3 Generate the volume name
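The body of makeVolumeName is not shown in this walkthrough. As far as I recall, it joins the prefix (p.volumeNamePrefix) and the PVC UID with a dash and, when p.volumeNameUUIDLength is not -1, strips the dashes from the UID and truncates it to that length. The sketch below reimplements that rule under this assumption, with extra bounds checks I added; treat it as illustrative and check controller.go for the real code.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// makeVolumeName builds prefix + "-" + UID; uuidLength -1 keeps the full UID.
func makeVolumeName(prefix, pvcUID string, uuidLength int) (string, error) {
	if prefix == "" {
		return "", errors.New("volume name prefix cannot be empty")
	}
	if pvcUID == "" {
		return "", errors.New("corrupted PVC object, it is missing UID")
	}
	if uuidLength == -1 {
		// Keep the full UID, dashes included.
		return fmt.Sprintf("%s-%s", prefix, pvcUID), nil
	}
	if uuidLength < 0 {
		return "", fmt.Errorf("invalid UUID length %d", uuidLength)
	}
	compact := strings.ReplaceAll(pvcUID, "-", "")
	if uuidLength > len(compact) {
		uuidLength = len(compact) // sketch-only guard against slicing past the end
	}
	return fmt.Sprintf("%s-%s", prefix, compact[:uuidLength]), nil
}

func main() {
	uid := "0d5b7f6c-2f3e-4a1b-9c8d-112233445566"
	full, _ := makeVolumeName("pvc", uid, -1)
	short, _ := makeVolumeName("pvc", uid, 8)
	fmt.Println(full)  // pvc-0d5b7f6c-2f3e-4a1b-9c8d-112233445566
	fmt.Println(short) // pvc-0d5b7f6c
}
```

Deriving the name from the PVC UID makes it deterministic, so repeated provisioning attempts for the same PVC ask the driver for the same volume name.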

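```go
pvName, err := makeVolumeName(p.volumeNamePrefix, fmt.Sprintf("%s", options.PVC.ObjectMeta.UID), p.volumeNameUUIDLength)
if err != nil {
	// ProvisionExt returns (pv, state, err), so all three values are needed here.
	return nil, controller.ProvisioningFinished, err
}
```

3.4 The CreateVolume RPC request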

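The request carries the PV name, the requested capacity and the volume capabilities (access mode, etc.); its Parameters come from the StorageClass parameters: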

```go
// Create a CSI CreateVolumeRequest and Response
req := csi.CreateVolumeRequest{
	Name:               pvName,
	Parameters:         createVolumeRequestParameters,
	VolumeCapabilities: volumeCaps,
	CapacityRange: &csi.CapacityRange{
		RequiredBytes: int64(volSizeBytes),
	},
}
```

3.5 If topology is supported

(Not analyzed yet.)

```go
if p.supportsTopology() {
	requirements, err := GenerateAccessibilityRequirements(
		p.client,
		p.driverName,
		options.PVC.Name,
		options.StorageClass.AllowedTopologies,
		options.SelectedNode,
		p.strictTopology)
	if err != nil {
		return nil, controller.ProvisioningNoChange, fmt.Errorf("error generating accessibility requirements: %v", err)
	}
	req.AccessibilityRequirements = requirements
}
```

3.6 Call the controller's CreateVolume method

A gRPC CreateVolumeRequest is sent to the storage plugin to create the volume:

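```go
// Bound the RPC by the --timeout value stored in p.timeout.
ctx, cancel := context.WithTimeout(context.Background(), p.timeout)
defer cancel()
rep, err = p.csiClient.CreateVolume(ctx, &req)
```

On success, the PersistentVolume object for the newly created volume is returned.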

Deployment configuration (from the deploy directory):

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: csi-provisioner
spec:
  replicas: 3
  selector:
    matchLabels:
      app: csi-provisioner
  template:
    metadata:
      labels:
        app: csi-provisioner
    spec:
      serviceAccount: csi-provisioner
      containers:
        - name: csi-provisioner
          image: quay.io/k8scsi/csi-provisioner:canary
          args:
            - "--csi-address=$(ADDRESS)"
            - "--enable-leader-election"
          env:
            - name: ADDRESS
              value: /var/lib/csi/sockets/pluginproxy/mock.socket
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: socket-dir
              mountPath: /var/lib/csi/sockets/pluginproxy/
        - name: mock-driver
          image: quay.io/k8scsi/mock-driver:canary
          env:
            - name: CSI_ENDPOINT
              value: /var/lib/csi/sockets/pluginproxy/mock.socket
          volumeMounts:
            - name: socket-dir
              mountPath: /var/lib/csi/sockets/pluginproxy/
      volumes:
        - name: socket-dir
          emptyDir: {}
```

References:

https://github.com/kubernetes-csi/external-provisioner

https://kubernetes-csi.github.io/docs/external-provisioner.html

https://kubernetes-csi.github.io/docs/external-provisioner.html#description

https://github.com/container-storage-interface/spec/blob/master/spec.md