1. HybridSN hyperspectral image classification network

The code below draws on code written by two classmates:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class_num = 16


class HybridSN(nn.Module):
    def __init__(self):
        super(HybridSN, self).__init__()
        # 3-D convolution stage:
        # conv1: (1, 30, 25, 25),   8 kernels of 7x3x3  ==> (8, 24, 23, 23)
        # conv2: (8, 24, 23, 23),  16 kernels of 5x3x3  ==> (16, 20, 21, 21)
        # conv3: (16, 20, 21, 21), 32 kernels of 3x3x3  ==> (32, 18, 19, 19)
        self.conv1 = nn.Conv3d(1, 8, (7, 3, 3))
        self.conv2 = nn.Conv3d(8, 16, (5, 3, 3))
        self.conv3 = nn.Conv3d(16, 32, (3, 3, 3))
        # 2-D convolution: (576, 19, 19) with 64 kernels of 3x3 ==> (64, 17, 17),
        # then flattened into an 18496-dimensional vector
        self.conv2d = nn.Conv2d(576, 64, (3, 3))
        self.fc1 = nn.Linear(18496, 256)
        self.fc2 = nn.Linear(256, 128)
        # The output layer has 16 nodes, one per class.
        self.out = nn.Linear(128, class_num)
        self.dropout = nn.Dropout(p=0.4)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        x = F.relu(self.conv3(x))
        # Merge channels and spectral depth: (N, 32, 18, 19, 19) -> (N, 576, 19, 19)
        x = x.view(-1, x.shape[1] * x.shape[2], x.shape[3], x.shape[4])
        x = F.relu(self.conv2d(x))
        x = x.view(x.size(0), -1)
        # ReLU after each hidden FC layer; without it, fc1 and fc2
        # collapse into a single linear map.
        x = F.relu(self.fc1(x))
        x = self.dropout(x)
        x = F.relu(self.fc2(x))
        x = self.dropout(x)
        x = self.out(x)
        return x


# Random input to check that the network runs end to end:
# x = torch.randn(1, 1, 30, 25, 25)
# net = HybridSN()
# y = net(x)
# print(y.shape)  # torch.Size([1, 16])
```
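The 576 and 18496 in the comments follow from unpadded, stride-1 convolution arithmetic: along each axis the output length is n - k + 1. The sketch below reproduces those numbers, assuming PyTorch's defaults (stride=1, padding=0) and the 30-band, 25×25 input patch from the test comment; `valid_conv_out` and `hybridsn_shapes` are hypothetical helper names, not part of the original code.

```python
# Shape bookkeeping for HybridSN, assuming stride 1 and no padding
# (the defaults of nn.Conv3d / nn.Conv2d).

def valid_conv_out(size: int, kernel: int) -> int:
    """Output length of an unpadded, stride-1 convolution along one axis."""
    return size - kernel + 1


def hybridsn_shapes(bands: int = 30, patch: int = 25):
    c, d, h, w = 1, bands, patch, patch
    shapes = []
    # (out_channels, (kd, kh, kw)) for conv1..conv3, as in the model
    for out_c, (kd, kh, kw) in [(8, (7, 3, 3)), (16, (5, 3, 3)), (32, (3, 3, 3))]:
        c = out_c
        d, h, w = valid_conv_out(d, kd), valid_conv_out(h, kh), valid_conv_out(w, kw)
        shapes.append((c, d, h, w))
    # Spectral depth is folded into channels before the 2-D convolution.
    in_2d = c * d
    # nn.Conv2d(in_2d, 64, (3, 3)) followed by flatten
    h2, w2 = valid_conv_out(h, 3), valid_conv_out(w, 3)
    flat = 64 * h2 * w2
    return shapes, in_2d, flat


shapes, in_2d, flat = hybridsn_shapes()
for s in shapes:
    print(s)
print("Conv2d in_channels:", in_2d)  # 576
print("flattened features:", flat)   # 18496
```

If the number of retained bands or the patch size changes, `nn.Conv2d(576, ...)` and `nn.Linear(18496, ...)` must change with it; rerunning the helper with the new values gives the replacements.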

2. HybridSN with an SE (squeeze-and-excitation) block

The code is based on a classmate's blog post, https://www.cnblogs.com/xiezhijie/p/13472076.html, and the Zhihu article https://zhuanlan.zhihu.com/p/65459972.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class_num = 16


class SEBlock(nn.Module):
    """Squeeze-and-excitation: reweight channels by learned per-channel weights."""

    def __init__(self, in_channels, r=16):
        super(SEBlock, self).__init__()
        self.globalAvgPool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc1 = nn.Linear(in_channels, round(in_channels / r))
        self.fc2 = nn.Linear(round(in_channels / r), in_channels)

    def forward(self, x):
        # Squeeze: global average pooling, (N, C, H, W) -> (N, C)
        out = self.globalAvgPool(x)
        out = out.view(out.shape[0], -1)
        # Excitation: bottleneck FC -> ReLU -> FC -> sigmoid
        out = F.relu(self.fc1(out))
        out = torch.sigmoid(self.fc2(out))  # F.sigmoid is deprecated
        # Scale: multiply each input channel by its weight
        out = out.view(x.shape[0], x.shape[1], 1, 1)
        return x * out


class HybridSN(nn.Module):
    def __init__(self):
        super(HybridSN, self).__init__()
        self.conv3d1 = nn.Conv3d(1, 8, kernel_size=(7, 3, 3), stride=1, padding=0)
        self.bn1 = nn.BatchNorm3d(8)
        self.conv3d2 = nn.Conv3d(8, 16, kernel_size=(5, 3, 3), stride=1, padding=0)
        self.bn2 = nn.BatchNorm3d(16)
        self.conv3d3 = nn.Conv3d(16, 32, kernel_size=(3, 3, 3), stride=1, padding=0)
        self.bn3 = nn.BatchNorm3d(32)
        self.conv2d4 = nn.Conv2d(576, 64, kernel_size=(3, 3), stride=1, padding=0)
        self.SElayer = SEBlock(64, 16)
        self.bn4 = nn.BatchNorm2d(64)
        self.fc1 = nn.Linear(18496, 256)
        self.fc2 = nn.Linear(256, 128)
        self.fc3 = nn.Linear(128, class_num)
        self.dropout = nn.Dropout(0.4)

    def forward(self, x):
        out = F.relu(self.bn1(self.conv3d1(x)))
        out = F.relu(self.bn2(self.conv3d2(out)))
        out = F.relu(self.bn3(self.conv3d3(out)))
        # (N, 32, 18, 19, 19) -> (N, 576, 19, 19)
        out = out.reshape(out.shape[0], -1, out.shape[3], out.shape[4])
        out = F.relu(self.bn4(self.conv2d4(out)))
        # Channel attention over the 64 feature maps of the 2-D convolution
        out = self.SElayer(out)
        out = out.reshape(out.shape[0], -1)
        out = F.relu(self.dropout(self.fc1(out)))
        out = F.relu(self.dropout(self.fc2(out)))
        out = self.fc3(out)
        return out
```

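"The Excitation operation can be seen as learning a weight coefficient for each channel, which makes the model better at discriminating the features of each channel. This can also be regarded as a kind of attention mechanism."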

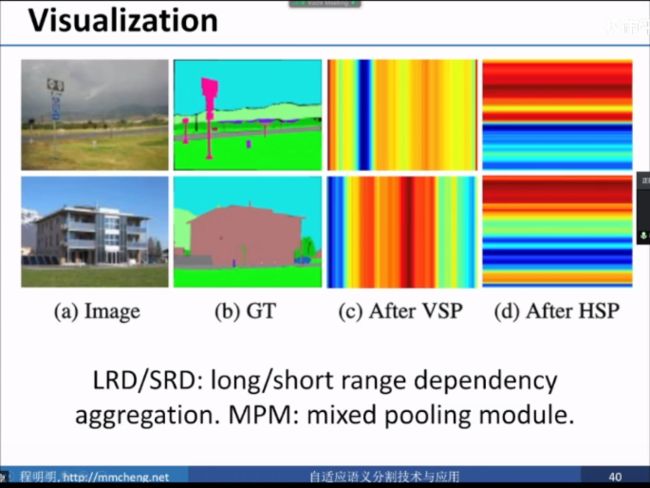
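To make the quoted idea concrete: squeeze reduces each channel to one number, excitation maps those numbers to per-channel weights in (0, 1), and the feature map is rescaled channel by channel. Below is a NumPy sketch of the same computation as `SEBlock.forward`, with random weights standing in for the learned `fc1`/`fc2` parameters. `se_block` is a hypothetical name, and the shapes assume the 64×17×17 feature map and r = 16 used above.

```python
import numpy as np


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-excitation on an (N, C, H, W) array.

    w1: (C // r, C) and w2: (C, C // r) play the role of fc1 / fc2.
    Returns the rescaled input and the (N, C) channel weights.
    """
    # Squeeze: global average pool over H and W -> (N, C)
    s = x.mean(axis=(2, 3))
    # Excitation: FC -> ReLU -> FC -> sigmoid, one weight per channel
    z = np.maximum(s @ w1.T + b1, 0.0)
    weights = 1.0 / (1.0 + np.exp(-(z @ w2.T + b2)))
    # Scale: broadcast each channel's weight over its H x W map
    return x * weights[:, :, None, None], weights


rng = np.random.default_rng(0)
N, C, H, W, r = 2, 64, 17, 17, 16
x = rng.standard_normal((N, C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
b1 = np.zeros(C // r)
w2 = rng.standard_normal((C, C // r)) * 0.1
b2 = np.zeros(C)

y, weights = se_block(x, w1, b1, w2, b2)
print(y.shape, weights.shape)  # (2, 64, 17, 17) (2, 64)
```

The output has the same shape as the input, so an SE block can be dropped in after any convolution without changing the rest of the network.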

3. "Self-Attention Mechanisms and Low-Rank Reconstruction in Semantic Segmentation":

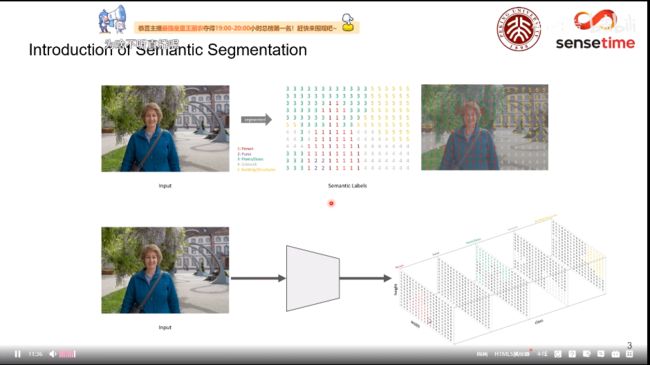

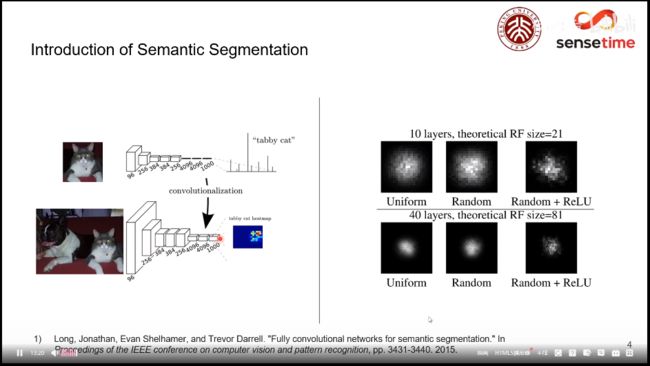

(1) Semantic segmentation

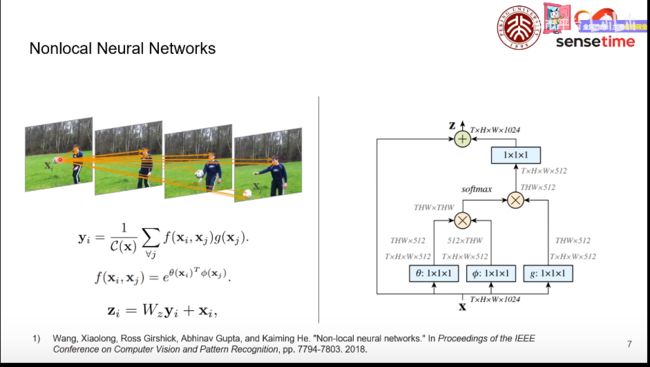

(2) Non-local neural networks

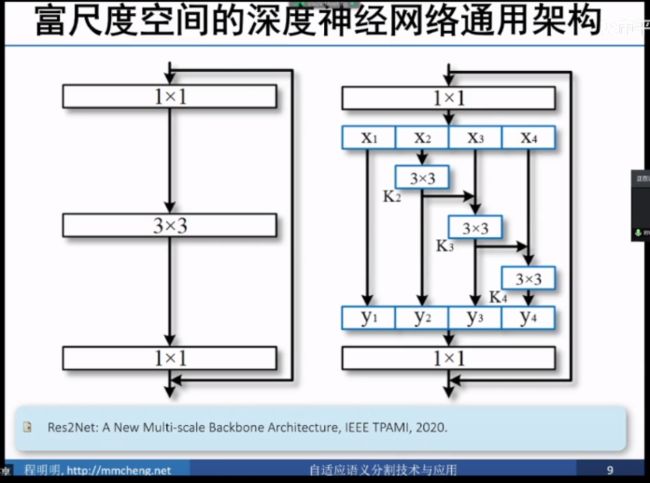

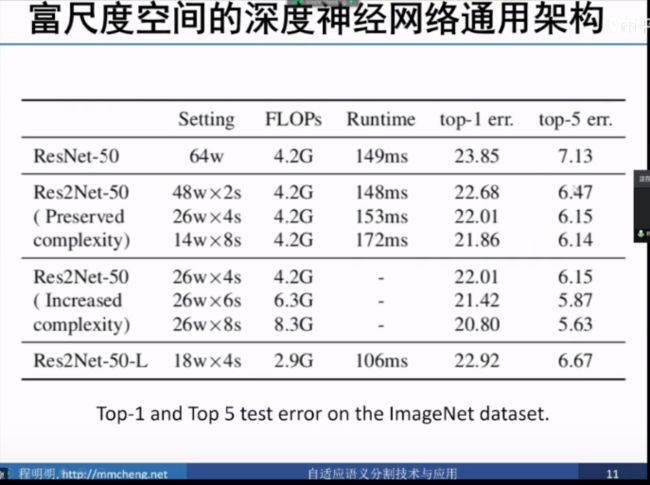

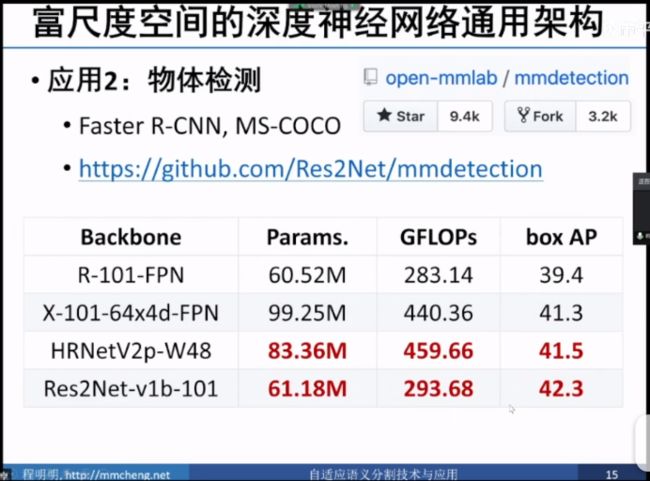

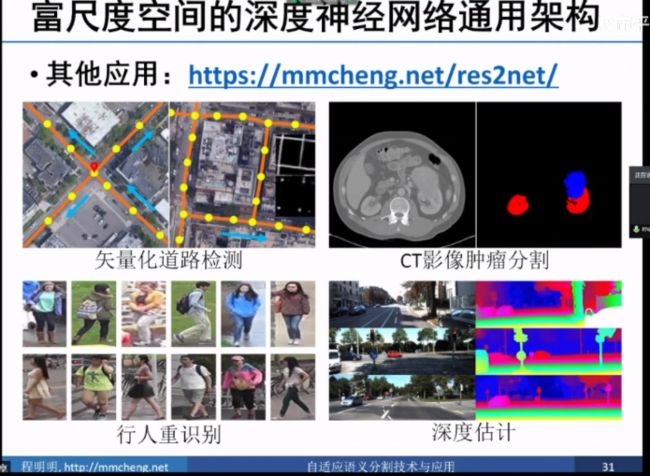
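As an illustration only (the slides above are not reproduced in text): the non-local operation from "Non-local Neural Networks" (Wang et al., CVPR 2018) computes each output position as a weighted sum over all positions, y_i = Σ_j softmax_j(θ(x_i)ᵀ φ(x_j)) g(x_j), then adds a residual, z_i = W_z y_i + x_i. The NumPy sketch below implements this embedded-Gaussian variant on a single feature map, assuming the 1×1 convolutions θ, φ, g and W_z are written as plain matrices with random weights; `non_local_block` is a hypothetical name, not a library API.

```python
import numpy as np


def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)


def non_local_block(x, w_theta, w_phi, w_g, w_z):
    """Embedded-Gaussian non-local block on one (C, H, W) feature map.

    w_theta, w_phi, w_g: (C', C); w_z: (C, C'). 1x1 convolutions are
    matrix products over the flattened positions.
    """
    C, H, W = x.shape
    flat = x.reshape(C, H * W)            # (C, N), N = H * W positions
    theta = w_theta @ flat                # (C', N)
    phi = w_phi @ flat                    # (C', N)
    g = w_g @ flat                        # (C', N)
    # Affinity between every pair of positions, normalized over j
    attn = softmax(theta.T @ phi, axis=1) # (N, N), each row sums to 1
    y = g @ attn.T                        # column i: sum_j attn[i, j] * g[:, j]
    z = w_z @ y + flat                    # project back to C channels + residual
    return z.reshape(C, H, W), attn


rng = np.random.default_rng(0)
C, Cp, H, W = 8, 4, 5, 6
x = rng.standard_normal((C, H, W))
w_theta = rng.standard_normal((Cp, C)) * 0.1
w_phi = rng.standard_normal((Cp, C)) * 0.1
w_g = rng.standard_normal((Cp, C)) * 0.1
w_z = rng.standard_normal((C, Cp)) * 0.1

z, attn = non_local_block(x, w_theta, w_phi, w_g, w_z)
print(z.shape, attn.shape)  # (8, 5, 6) (30, 30)
```

The attention matrix has (H·W)×(H·W) entries, so its memory and compute grow quadratically with the number of positions, which is the main cost of non-local blocks on high-resolution segmentation features.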

4. "Recent Advances in Image Semantic Segmentation":

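Challenges: objects differ in size, have complex shapes, appear in highly variable environments, and occur in large numbers.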