Quickly Importing Massive Data with BulkLoad


http://www.cnblogs.com/MOBIN/p/5559575.html

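Summary

There are several ways to load data into HBase: through the HBase API, from the command line, with a third-party tool such as Sqoop, or in batches with a MapReduce job that writes through the normal put path (which is heavy on disk I/O and can easily bring nodes down during the import). These approaches are either slow or tie up Region resources while the import runs, which makes them inefficient. This post shows how to import massive amounts of data quickly by taking advantage of the way HBase stores its data on HDFS and of MapReduce.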

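How is HBase data stored on HDFS?

Under the HBase root directory (/HBase), every Table is stored in its own folder, named after the Table. Inside the Table folder, every Region also has its own folder, and inside each Region folder every column family has a folder as well. Each column family folder holds a number of HFile files; HFile is the format in which HBase data is stored on HDFS. The overall directory structure looks like this: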

/hbase/<table>/<region>/<column-family>/<hfile>
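The layout can be made concrete with a small sketch that assembles such a path from its four levels. It is an illustration only: the class `HFilePathSketch`, the method `hfilePath`, and the region and file names are made-up placeholders (real region directories and HFiles have generated names), and no HBase API is involved.

```java
final class HFilePathSketch {
    // Joins the levels described above: root / table / region / column family / HFile.
    static String hfilePath(String rootDir, String table, String region,
                            String columnFamily, String hfileName) {
        return String.join("/", rootDir, table, region, columnFamily, hfileName);
    }

    public static void main(String[] args) {
        // "region-0001" and "hfile-0001" are hypothetical placeholder names.
        System.out.println(hfilePath("/hbase", "TRAVEL", "region-0001", "INFO", "hfile-0001"));
    }
}
```

The point to take away is that one column family of one Region maps to exactly one directory, which is what makes it possible to drop ready-made HFiles into place later.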

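HBase write path

(Figure from Cloudera)

When data is put, the update operation and the data itself are first written to the WAL. Once they are in the WAL, the data is placed in the MemStore, and when the MemStore fills up the data is flushed to disk, producing an HFile. The flush, split and compaction operations involved along the way can easily make nodes unstable, slow the import down and consume resources, so importing massive amounts of data this way puts a heavy load on the system. The best way to avoid these problems is to load the data into HBase with BulkLoad.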
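The write path described above can be sketched as a toy model. This is not HBase code: `WritePathSketch` and its fields are hypothetical, the flush threshold counts cells rather than bytes, and splits and compactions are left out. It only shows the order of the three steps and why every put costs a log write plus memory.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

final class WritePathSketch {
    private final int flushThreshold;                               // max cells held in memory (simplification)
    final List<String> wal = new ArrayList<>();                     // append-only log of edits
    final TreeMap<String, String> memStore = new TreeMap<>();       // sorted in-memory buffer
    final List<TreeMap<String, String>> hfiles = new ArrayList<>(); // flushed, sorted "files"

    WritePathSketch(int flushThreshold) {
        this.flushThreshold = flushThreshold;
    }

    void put(String rowKey, String value) {
        wal.add(rowKey + "=" + value);   // 1. record the edit in the WAL first
        memStore.put(rowKey, value);     // 2. then buffer it in the MemStore
        if (memStore.size() >= flushThreshold) {
            flush();                     // 3. a full MemStore is flushed to a new file
        }
    }

    private void flush() {
        hfiles.add(new TreeMap<>(memStore)); // the flushed copy is already sorted by row key
        memStore.clear();
    }

    public static void main(String[] args) {
        WritePathSketch region = new WritePathSketch(2);
        region.put("row-b", "1");
        region.put("row-a", "2");   // reaches the threshold and triggers a flush
        region.put("row-c", "3");
        System.out.println("WAL entries: " + region.wal.size());
        System.out.println("flushed files: " + region.hfiles.size());
        System.out.println("cells still in memory: " + region.memStore.size());
    }
}
```

BulkLoad skips all three steps by producing the sorted files outside the RegionServer.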

How it works

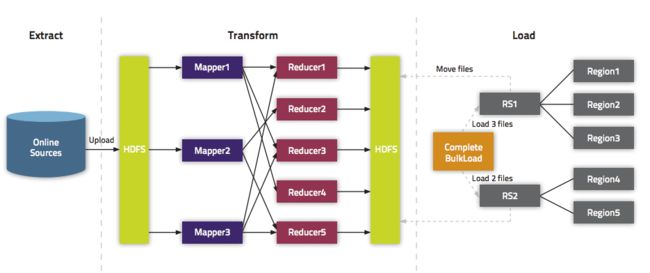

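Because HBase stores its data on HDFS in the HFile format, MapReduce can be used to generate files in HFile format directly; the RegionServers then move those HFiles into the directories of the corresponding Regions.

The flow is shown in the figure below:

(Figure from Cloudera)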
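Moving an HFile into "the corresponding Region" presupposes a way to decide which Region owns a given row key. A minimal sketch of that lookup, assuming each Region is identified by its start key and that keys are plain ASCII strings (HBase itself compares raw bytes), could look like this. `RegionLookupSketch` and the region names are hypothetical.

```java
import java.util.TreeMap;

final class RegionLookupSketch {
    // Maps each region's start key to a (hypothetical) region directory name.
    private final TreeMap<String, String> regionsByStartKey = new TreeMap<>();

    void addRegion(String startKey, String regionDir) {
        regionsByStartKey.put(startKey, regionDir);
    }

    // The owning region is the one with the greatest start key <= the row key.
    // Assumes a first region with the empty start key has been added.
    String regionFor(String rowKey) {
        return regionsByStartKey.floorEntry(rowKey).getValue();
    }

    public static void main(String[] args) {
        RegionLookupSketch table = new RegionLookupSketch();
        table.addRegion("", "region-1");   // the first region starts at the empty key
        table.addRegion("h", "region-2");
        table.addRegion("p", "region-3");
        System.out.println(table.regionFor("beijing"));
        System.out.println(table.regionFor("hangzhou"));
        System.out.println(table.regionFor("shanghai"));
    }
}
```

With the three start keys above, the sample keys fall into region-1, region-2 and region-3 respectively. An HFile whose keys all fall inside one Region's range can simply be moved into that Region's directory.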

Import procedure

1. Generate the HFiles with MapReduce

The GenerateHFile class

    import java.io.IOException;

    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class GenerateHFile extends Mapper<LongWritable, Text, ImmutableBytesWritable, Put> {

        private static final byte[] INFO = Bytes.toBytes("INFO"); // column family

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Each input line is one tab-separated record
            String[] items = value.toString().split("\t");
            // Row key: departure point + destination + departure time
            byte[] rowKeyBytes = Bytes.toBytes(items[1] + items[2] + items[3]);
            ImmutableBytesWritable rowkey = new ImmutableBytesWritable(rowKeyBytes);
            Put put = new Put(rowKeyBytes);
            put.addColumn(INFO, Bytes.toBytes("URL"), Bytes.toBytes(items[0]));
            put.addColumn(INFO, Bytes.toBytes("SP"), Bytes.toBytes(items[1]));      // departure point
            put.addColumn(INFO, Bytes.toBytes("EP"), Bytes.toBytes(items[2]));      // destination
            put.addColumn(INFO, Bytes.toBytes("ST"), Bytes.toBytes(items[3]));      // departure time
            put.addColumn(INFO, Bytes.toBytes("PRICE"), Bytes.toBytes(Integer.valueOf(items[4]))); // price
            put.addColumn(INFO, Bytes.toBytes("TRAFFIC"), Bytes.toBytes(items[5])); // mode of transport
            put.addColumn(INFO, Bytes.toBytes("HOTEL"), Bytes.toBytes(items[6]));   // hotel
            context.write(rowkey, put);
        }
    }

The GenerateHFileMain class

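    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.HTable;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.HFileOutputFormat2;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class GenerateHFileMain {
        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {
            final String INPUT_PATH = "hdfs://master:9000/INFO/Input";
            final String OUTPUT_PATH = "hdfs://master:9000/HFILE/Output";
            Configuration conf = HBaseConfiguration.create();
            // new HTable(conf, name) is the HBase 1.x client API used throughout this post
            HTable table = new HTable(conf, "TRAVEL");
            Job job = Job.getInstance(conf);
            // Package the program beforehand and distribute the jar to the cluster
            job.getConfiguration().set("mapred.jar",
                    "/home/hadoop/TravelProject/out/artifacts/Travel/Travel.jar");
            job.setJarByClass(GenerateHFileMain.class);
            job.setMapperClass(GenerateHFile.class);
            job.setMapOutputKeyClass(ImmutableBytesWritable.class);
            job.setMapOutputValueClass(Put.class);
            job.setOutputFormatClass(HFileOutputFormat2.class);
            // Configures the sort reducer and the partitioning from the table's Regions
            HFileOutputFormat2.configureIncrementalLoad(job, table, table.getRegionLocator());
            FileInputFormat.addInputPath(job, new Path(INPUT_PATH));
            FileOutputFormat.setOutputPath(job, new Path(OUTPUT_PATH));
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }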

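Notes

1. The Mapper's output key must be an ImmutableBytesWritable containing the row key, and the output value must be a KeyValue or a Put. Use Put when the imported data has several columns and KeyValue when it has only one.

2. The value passed to job.setMapOutputValueClass determines the reducer that job.setReducerClass would receive. The reducer's main job here is to sort the data: Put.class corresponds to PutSortReducer and KeyValue.class to KeyValueSortReducer. HFileOutputFormat2.configureIncrementalLoad makes this choice based on the map output value class.

3. Pre-splitting the table when it is created, combined with MapReduce's parallelism, speeds up HFile generation. If the table has been pre-split into Regions, set the number of reducers equal to the number of Regions.

4. With multiple column families, context.write has to be called multiple times.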
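Notes 1 and 2 come down to this: every record is keyed by its row key, and the keys must arrive in sorted order before they are written to an HFile. The sketch below reproduces that on plain Java collections. It reuses the mapper's row key rule (fields 1, 2 and 3 concatenated) and sorts the keys as unsigned bytes in lexicographic order, which is how HBase orders rows. `RowKeySortSketch` and the two sample records are made up for illustration; the real sorting is done by the sort reducer, not by code like this.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

final class RowKeySortSketch {
    // Same row key rule as the mapper: fields 1, 2 and 3 of the tab-separated line.
    static String rowKey(String line) {
        String[] items = line.split("\t");
        return items[1] + items[2] + items[3];
    }

    // Lexicographic comparison on unsigned bytes.
    static final Comparator<byte[]> BYTE_ORDER = (a, b) -> {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int diff = (a[i] & 0xff) - (b[i] & 0xff);
            if (diff != 0) {
                return diff;
            }
        }
        return a.length - b.length; // a shorter key that is a prefix sorts first
    };

    static List<String> sortedRowKeys(List<String> lines) {
        List<byte[]> keys = new ArrayList<>();
        for (String line : lines) {
            keys.add(rowKey(line).getBytes(StandardCharsets.UTF_8));
        }
        keys.sort(BYTE_ORDER);
        List<String> sorted = new ArrayList<>();
        for (byte[] k : keys) {
            sorted.add(new String(k, StandardCharsets.UTF_8));
        }
        return sorted;
    }

    public static void main(String[] args) {
        // Hypothetical records in the column order the mapper expects.
        List<String> lines = Arrays.asList(
                "http://example.com/1\tShanghai\tBeijing\t20160601\t500\tplane\thotelA",
                "http://example.com/2\tBeijing\tShanghai\t20160602\t450\ttrain\thotelB");
        System.out.println(sortedRowKeys(lines));
    }
}
```

For the two sample records the Beijing-to-Shanghai key sorts before the Shanghai-to-Beijing key, regardless of the order in which the lines were read.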

2. Load the HFiles with BulkLoad

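    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.client.HTable;
    import org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles;

    public class LoadIncrementalHFileToHBase {
        public static void main(String[] args) throws Exception {
            Configuration conf = HBaseConfiguration.create();
            LoadIncrementalHFiles loader = new LoadIncrementalHFiles(conf);
            // Must match OUTPUT_PATH of GenerateHFileMain exactly (HDFS paths are case-sensitive)
            try (HTable table = new HTable(conf, "TRAVEL")) {
                loader.doBulkLoad(new Path("hdfs://master:9000/HFILE/Output"), table);
            }
        }
    }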

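Because BulkLoad bypasses the write-to-WAL, write-to-MemStore and flush-to-disk steps, operations that rely on the WAL, such as replication, cannot be used for bulk-loaded data.

Advantages:

1. The import does not consume Region resources

2. Massive amounts of data can be imported quickly

3. It saves memory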