Convolutional Neural Networks (Part 4): Implementing ResNet in PyTorch

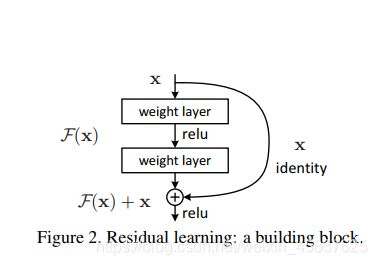

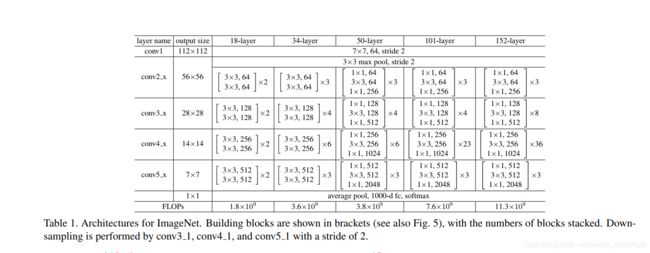

1. Main ResNet structure

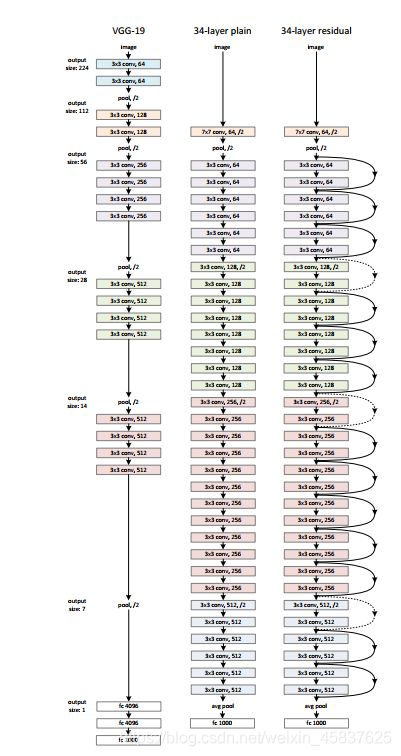
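The core idea behind this structure is residual learning. A stack of layers does not fit a mapping H(x) directly. Instead it learns the residual F(x) = H(x) - x, and a shortcut adds x back, so the block outputs ReLU(F(x) + x).

The pure-Python sketch below illustrates that formula on plain lists, which stand in for tensors. `relu` and `residual_block` are hypothetical names, not PyTorch functions. The sketch shows why an identity mapping is easy for a residual block: if the branch outputs zero, a non-negative input passes through unchanged.

```python
def relu(v):
    # Element-wise ReLU on a plain list (stand-in for a tensor)
    return [max(0.0, t) for t in v]


def residual_block(x, residual_fn):
    """y = ReLU(F(x) + x): the shortcut adds the block input onto the branch output."""
    fx = residual_fn(x)
    return relu([a + b for a, b in zip(fx, x)])


x = [0.0, 1.5, 3.0]  # non-negative, like the output of a preceding ReLU

# If the learned branch outputs zero, the block passes x through unchanged
print(residual_block(x, lambda v: [0.0] * len(v)))  # [0.0, 1.5, 3.0]

# Otherwise the branch only has to model the difference H(x) - x
print(residual_block(x, lambda v: [0.5] * len(v)))  # [0.5, 2.0, 3.5]
```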

2. Comparing VGG with ResNet-34

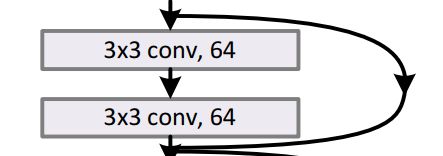

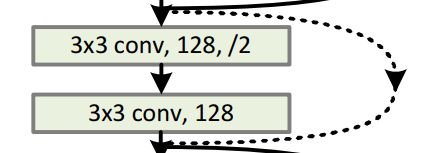

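Note the difference between the solid and the dashed shortcut lines:

2.1 Solid line: the block's input and output have the same shape. No downsampling is needed, and the input is added directly to the output.

2.2 Dashed line: the block halves the height and width (stride 2) and increases the number of channels. The shortcut must therefore pass through a 1x1 convolution with stride 2 (the `downsample` branch in the code below) before the addition.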
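Whether a shortcut can be added directly depends only on shapes. This is a minimal sketch, assuming the standard output-size formula for a convolution or pooling layer with dilation 1: floor((H + 2p - k) / s) + 1. `conv_out` is a hypothetical helper.

- With stride 1 and padding 1, a 3x3 convolution keeps the size, so the identity shortcut fits as is.
- When the main branch uses stride 2, a 1x1 convolution with stride 2 on the shortcut produces the same height and width. Its output channels can be set to match the main branch (e.g. 64 to 128).

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of Conv2d / MaxPool2d with dilation=1 and floor rounding."""
    return (size + 2 * padding - kernel) // stride + 1


# Identity shortcut: 3x3 conv, stride 1, padding 1 keeps 56x56, so x can be added as is
print(conv_out(56, kernel=3, stride=1, padding=1))  # 56

# Projection shortcut: the main branch halves the size with a 3x3, stride-2 conv ...
main = conv_out(56, kernel=3, stride=2, padding=1)
# ... so the shortcut uses a 1x1, stride-2 conv (no padding) to reach the same size
shortcut = conv_out(56, kernel=1, stride=2, padding=0)
print(main, shortcut)  # 28 28

# The two always agree, for even and odd input sizes alike
assert all(conv_out(h, 3, 2, 1) == conv_out(h, 1, 2, 0) for h in range(1, 513))
```

Both expressions reduce to (H - 1) // 2 + 1, which is why the 1x1 stride-2 projection lines up with the strided 3x3 branch even for odd feature-map sizes such as 7x7.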

3. ResNet configurations (layer parameters)

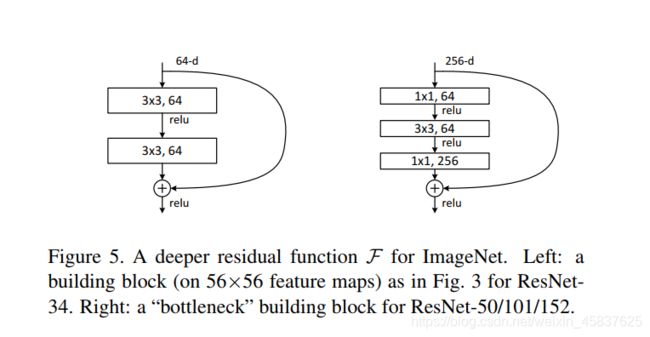
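The per-stage block counts in the table can be captured in a small config. The sketch below assumes the counts from the original ResNet paper, which run from [2, 2, 2, 2] for ResNet-18 to [3, 8, 36, 3] for ResNet-152. The code in this post uses [3, 4, 6, 3] and [3, 4, 23, 3]. `RESNET_CONFIGS` and `weighted_depth` are hypothetical names.

The depth in each model's name counts the weighted layers:

- the 7x7 stem convolution,
- every convolution inside the residual blocks,
- the final fully connected layer.

Pooling layers and 1x1 projection shortcuts are not counted.

```python
# depth -> (block type, convolutions per block, blocks per stage for layer1..layer4)
RESNET_CONFIGS = {
    18: ("BasicBlock", 2, [2, 2, 2, 2]),
    34: ("BasicBlock", 2, [3, 4, 6, 3]),
    50: ("Bottleneck", 3, [3, 4, 6, 3]),
    101: ("Bottleneck", 3, [3, 4, 23, 3]),
    152: ("Bottleneck", 3, [3, 8, 36, 3]),
}


def weighted_depth(convs_per_block, blocks_num):
    # 1 stem conv + all convs inside the residual blocks + 1 fully connected layer
    return 1 + convs_per_block * sum(blocks_num) + 1


for depth, (block, convs, blocks) in RESNET_CONFIGS.items():
    print(depth, block, blocks, weighted_depth(convs, blocks))
```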

4. Representative residual blocks
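ResNet uses two kinds of residual block:

- the basic block: two 3x3 convolutions, used by ResNet-18/34;
- the bottleneck block: a 1x1 convolution to reduce channels, a 3x3 convolution, then a 1x1 convolution to restore them, used by ResNet-50/101/152.

A rough sketch of why the bottleneck exists follows. It counts only convolution weights: BatchNorm is ignored, and all convolutions are bias-free as in the code below. `conv_weights` is a hypothetical helper. A bottleneck on a 256-channel input costs about as much as a basic block on a 64-channel input. A basic block at 256 channels would cost roughly 17 times more.

```python
def conv_weights(c_in, c_out, k):
    # Number of weights in a bias-free k x k convolution
    return c_in * c_out * k * k


# Basic block on a 64-channel input: 3x3 conv 64 -> 64, then 3x3 conv 64 -> 64
basic = conv_weights(64, 64, 3) + conv_weights(64, 64, 3)

# Bottleneck on a 256-channel input: 1x1 squeeze 256 -> 64, 3x3 64 -> 64, 1x1 expand 64 -> 256
bottleneck = conv_weights(256, 64, 1) + conv_weights(64, 64, 3) + conv_weights(64, 256, 1)

# What a basic block would cost at 256 channels
basic_256 = 2 * conv_weights(256, 256, 3)

print(basic, bottleneck, basic_256)  # 73728 69632 1179648
```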

5. Code implementation

5.1 First, define the basic block (BasicBlock) used by ResNet-18/34

```python
import torch.nn as nn


# Used by ResNet-18 and ResNet-34
class BasicBlock(nn.Module):
    # Both 3x3 convolutions have the same number of output channels,
    # so the block's output width is out_channel * 1
    expansion = 1

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        # Conv(3x3, 64): stride is 2 in the first block of layer2-4, otherwise 1
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.relu = nn.ReLU()
        # Conv(3x3, 64), stride=1
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)
        # Shortcut branch: None (identity) or a 1x1 conv + BN projection
        self.downsample = downsample

    def forward(self, x):
        identity = x
        # Project the shortcut if the shapes would not otherwise match
        if self.downsample is not None:
            identity = self.downsample(x)

        # First convolution
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        # Second convolution (no ReLU before the addition)
        out = self.conv2(out)
        out = self.bn2(out)

        # Add the shortcut, then apply the activation
        out += identity
        out = self.relu(out)
        return out
```

5.2 The bottleneck block (Bottleneck) used by ResNet-50/101/152

```python
import torch.nn as nn


# Used by ResNet-50, ResNet-101 and ResNet-152
class Bottleneck(nn.Module):
    # The third convolution has four times as many channels as the first (e.g. 64 -> 256);
    # defined as a class attribute so ResNet can use it when wiring the stages
    expansion = 4

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        # Conv(1x1, 64), stride=1: squeeze channels
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=1, stride=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        # Conv(3x3, 64): stride=2 in the first block of layer2-4, stride=1 otherwise
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, bias=False, padding=1)
        self.bn2 = nn.BatchNorm2d(out_channel)
        # Conv(1x1, 256), stride=1: expand channels by a factor of `expansion`
        self.conv3 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel * self.expansion,
                               kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channel * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        # Shortcut branch: None (identity) or a 1x1 conv + BN projection
        self.downsample = downsample

    def forward(self, x):
        identity = x

        # First convolution (1x1)
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        # Second convolution (3x3)
        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        # Third convolution (1x1), no ReLU before the addition
        out = self.conv3(out)
        out = self.bn3(out)

        # Project the shortcut if needed
        if self.downsample is not None:
            identity = self.downsample(x)

        # Add the shortcut, then apply the activation
        out += identity
        out = self.relu(out)
        return out
```
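Bottleneck outputs `out_channel * 4` channels. As a result, the first block of layer1 already changes the channel count (64 in, 256 out) even though its stride is 1. Unlike ResNet-34, it therefore needs a projection shortcut.

The hypothetical helpers below apply the same condition that `_make_layer` uses in section 6. They are pure Python, and `needs_projection` and `stage_plan` are illustrative names only. They list, for every block of a stage:

- input channels,
- output channels,
- stride,
- whether a projection is built.

```python
def needs_projection(in_channel, channel, expansion, stride):
    # Same test as _make_layer: project when the stride or the channel count changes
    return stride != 1 or in_channel != channel * expansion


def stage_plan(in_channel, channel, expansion, block_num, stride):
    """(in_channels, out_channels, stride, projection?) for each block of one stage."""
    plan = []
    for i in range(block_num):
        s = stride if i == 0 else 1  # only the first block of a stage is strided
        out_channels = channel * expansion
        plan.append((in_channel, out_channels, s, needs_projection(in_channel, channel, expansion, s)))
        in_channel = out_channels  # later blocks see the widened input
    return plan


# layer1 of ResNet-34 (BasicBlock, expansion=1) vs. ResNet-50 (Bottleneck, expansion=4)
print(stage_plan(64, 64, expansion=1, block_num=3, stride=1))
# [(64, 64, 1, False), (64, 64, 1, False), (64, 64, 1, False)]
print(stage_plan(64, 64, expansion=4, block_num=3, stride=1))
# [(64, 256, 1, True), (256, 256, 1, False), (256, 256, 1, False)]
```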

6. Full model code:

```python
import torch
import torch.nn as nn


# Used by ResNet-18 and ResNet-34
class BasicBlock(nn.Module):
    # Both 3x3 convolutions have the same number of output channels,
    # so the block's output width is out_channel * 1
    expansion = 1

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        # Conv(3x3, 64): stride is set by the caller, because it is 2 in the
        # first residual block of layer2-4 and 1 everywhere else
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        self.relu = nn.ReLU()
        # Conv(3x3, 64), stride=1
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channel)
        # Shortcut branch: None (identity) or a 1x1 conv + BN projection
        self.downsample = downsample

    def forward(self, x):
        identity = x
        # Project the shortcut if the shapes would not otherwise match
        if self.downsample is not None:
            identity = self.downsample(x)

        # First convolution
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        # Second convolution (no ReLU before the addition)
        out = self.conv2(out)
        out = self.bn2(out)

        # Add the shortcut, then apply the activation
        out += identity
        out = self.relu(out)
        return out
```

```python
# Used by ResNet-50, ResNet-101 and ResNet-152
class Bottleneck(nn.Module):
    # The third convolution has four times as many channels as the first (e.g. 64 -> 256);
    # defined as a class attribute so ResNet can use it when wiring the stages
    expansion = 4

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        # Conv(1x1, 64), stride=1: squeeze channels
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=1, stride=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channel)
        # Conv(3x3, 64): stride=2 in the first block of layer2-4, stride=1 otherwise
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, bias=False, padding=1)
        self.bn2 = nn.BatchNorm2d(out_channel)
        # Conv(1x1, 256), stride=1: expand channels by a factor of `expansion`
        self.conv3 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel * self.expansion,
                               kernel_size=1, stride=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channel * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        # Shortcut branch: None (identity) or a 1x1 conv + BN projection
        self.downsample = downsample

    def forward(self, x):
        identity = x

        # First convolution (1x1)
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        # Second convolution (3x3)
        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        # Third convolution (1x1), no ReLU before the addition
        out = self.conv3(out)
        out = self.bn3(out)

        # Project the shortcut if needed
        if self.downsample is not None:
            identity = self.downsample(x)

        # Add the shortcut, then apply the activation
        out += identity
        out = self.relu(out)
        return out
```

```python
class ResNet(nn.Module):
    # block:       BasicBlock (ResNet-18/34) or Bottleneck (ResNet-50/101/152)
    # blocks_num:  blocks per stage, e.g. [3, 4, 6, 3] (ResNet-34/50) or [3, 4, 23, 3] (ResNet-101)
    # num_classes: size of the classifier output, 1000 for ImageNet
    def __init__(self, block, blocks_num, num_classes=1000, include_top=True):
        super(ResNet, self).__init__()
        self.include_top = include_top  # whether to build the pooling + fully connected head
        # Number of channels entering layer1
        self.in_channel = 64

        # Stem: conv (7x7, 64), stride 2
        self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,
                               padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(self.in_channel)
        self.relu = nn.ReLU(inplace=True)
        # Max pool (3x3), stride 2
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

        # Four stages; for ResNet-50 these are
        # (Bottleneck, 64, 3), (Bottleneck, 128, 4), (Bottleneck, 256, 6), (Bottleneck, 512, 3)
        self.layer1 = self._make_layer(block, 64, blocks_num[0])
        self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)
        self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)
        self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)

        if self.include_top:
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # output size = (1, 1)
            self.fc = nn.Linear(512 * block.expansion, num_classes)

        # He (Kaiming) initialization for every convolution
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

    def _make_layer(self, block, channel, block_num, stride=1):
        # No projection shortcut by default
        downsample = None
        # The shortcut needs a projection when the block changes the spatial size
        # (stride != 1) or the channel count (in_channel != channel * expansion).
        # Both stride and channel are passed in by the caller. This only happens in the
        # first block of a stage, and it makes the shortcut and the main branch the same
        # shape (channels, height and width) so that they can be added.
        if stride != 1 or self.in_channel != channel * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.in_channel, channel * block.expansion,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(channel * block.expansion))

        layers = []
        # First block of the stage: it carries the stride (1 for layer1, 2 for layer2-4)
        # and the optional projection shortcut; the other blocks have neither
        layers.append(block(self.in_channel, channel, downsample=downsample, stride=stride))
        # Every later block receives channel * expansion input channels
        # (for Bottleneck: 256, 512, 1024, 2048)
        self.in_channel = channel * block.expansion

        # Remaining block_num - 1 blocks, built one at a time.
        # block() takes (in_channel, channel): in layer1 of ResNet-50 this is block(256, 64),
        # where 256 comes from the first block above and 64 is the channel argument of
        # self._make_layer(block, 64, blocks_num[0]), so the arguments must line up.
        for _ in range(1, block_num):
            layers.append(block(self.in_channel, channel))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        # Without the head, the layer4 feature map is returned
        if self.include_top:
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)

        return x
```
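For a 224x224 input, the stem and the strided first block of each stage shrink the feature map step by step. The hypothetical `trace_shapes` helper below follows `ResNet.forward` in pure Python and records (stage, channels, height = width) after each step. It assumes a square input and the standard output-size formula for convolution and pooling with dilation 1.

The sketch models each stage by its single 3x3, padding-1 convolution carrying the stage stride, because the 1x1 convolutions use stride 1 and do not change the size. This holds both for BasicBlock, where the stride sits on `conv1`, and for Bottleneck, where it sits on `conv2`.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of Conv2d / MaxPool2d (dilation=1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(size=224, expansion=1):
    """(stage, channels, height = width) after each step of ResNet.forward for a square input."""
    shapes = [("input", 3, size)]
    size = conv_out(size, kernel=7, stride=2, padding=3)
    shapes.append(("conv1", 64, size))
    size = conv_out(size, kernel=3, stride=2, padding=1)
    shapes.append(("maxpool", 64, size))
    # Only the first block of each stage can change the size (3x3 conv, padding 1, stage stride)
    for name, channel, stride in (("layer1", 64, 1), ("layer2", 128, 2),
                                  ("layer3", 256, 2), ("layer4", 512, 2)):
        size = conv_out(size, kernel=3, stride=stride, padding=1)
        shapes.append((name, channel * expansion, size))
    return shapes


for step in trace_shapes(224, expansion=4):  # Bottleneck models (ResNet-50/101)
    print(step)
# prints one tuple per line:
# ('input', 3, 224), ('conv1', 64, 112), ('maxpool', 64, 56), ('layer1', 256, 56),
# ('layer2', 512, 28), ('layer3', 1024, 14), ('layer4', 2048, 7)
```

After layer4, `AdaptiveAvgPool2d((1, 1))` reduces the 7x7 map to 1x1, so `fc` receives `512 * expansion` features. Because the pooling is adaptive, a 256x256 input, which ends at 8x8 after layer4, still produces the same number of features.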

```python
def resnet34(num_classes=1000, include_top=True):
    return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)


def resnet101(num_classes=1000, include_top=True):
    return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)
```
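A quick way to check that the model is wired correctly is to count its trainable parameters. The pure-Python sketch below re-derives the count from the architecture alone, mirroring the `_make_layer` logic.

- `conv_params`, `bn_params`, `block_params` and `resnet_params` are hypothetical helpers, not PyTorch functions.
- The `kind` strings stand in for the two block classes.
- It assumes `include_top=True` and the listing exactly as written: bias-free convolutions, BatchNorm with a learnable weight and bias, and a final `nn.Linear` with bias.

```python
def conv_params(c_in, c_out, k):
    return c_in * c_out * k * k  # every Conv2d in the listing has bias=False


def bn_params(c):
    return 2 * c  # BatchNorm2d weight + bias; running mean/var are buffers, not parameters


def block_params(kind, in_channel, channel, stride):
    """Parameters of one BasicBlock ("basic") or Bottleneck ("bottleneck"), projection included."""
    expansion = 1 if kind == "basic" else 4
    out_channels = channel * expansion
    if kind == "basic":
        total = (conv_params(in_channel, channel, 3) + bn_params(channel)
                 + conv_params(channel, channel, 3) + bn_params(channel))
    else:
        total = (conv_params(in_channel, channel, 1) + bn_params(channel)
                 + conv_params(channel, channel, 3) + bn_params(channel)
                 + conv_params(channel, out_channels, 1) + bn_params(out_channels))
    if stride != 1 or in_channel != out_channels:  # same test as _make_layer
        total += conv_params(in_channel, out_channels, 1) + bn_params(out_channels)
    return total


def resnet_params(kind, blocks_num, num_classes=1000):
    """Trainable parameters of ResNet(block, blocks_num, num_classes) with include_top=True."""
    expansion = 1 if kind == "basic" else 4
    total = conv_params(3, 64, 7) + bn_params(64)  # stem: conv1 + bn1
    in_channel = 64
    for channel, block_num, stride in zip((64, 128, 256, 512), blocks_num, (1, 2, 2, 2)):
        for i in range(block_num):
            total += block_params(kind, in_channel, channel, stride if i == 0 else 1)
            in_channel = channel * expansion
    return total + in_channel * num_classes + num_classes  # fc weight + bias


print(resnet_params("basic", [3, 4, 6, 3]))        # 21797672 (resnet34)
print(resnet_params("bottleneck", [3, 4, 6, 3]))   # 25557032 (ResNet-50)
print(resnet_params("bottleneck", [3, 4, 23, 3]))  # 44549160 (resnet101)
```

With PyTorch installed, `sum(p.numel() for p in resnet34().parameters())` on the model above should give the same 21,797,672. A mismatch usually points to a wrong stride, channel count or projection condition in `_make_layer`.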