mmdetection训练自己的标注数据, 以faster RCNN ,yolo为例子

为什么选择了?mmdetection?

因为看到了一篇语义分割的论文,是用mmdetection写的,之前看到一个caffe的,环境装了很久,就是没成,遂换了mmdetection。

1.0 mmdetection环境配置

我就不讲这部分了

2.0 数据标注与准备

mmdetection采用的是coco数据格式,也支持voc,但是需要动手修改,因为团队是用的voc格式,于是决定修改成voc格式

现在想一想,我把voc用脚本转换成coco就可以很方便使用了,我还该了一两天来弄这个voc的数据集的适配

1、数据集生成工具

具体数据集构建可以看:

https://www.cnblogs.com/pprp/p/10863496.html#数据集构建

https://blog.csdn.net/weicao1990/article/details/93484603

有一个库有一些脚本进行检查和生成:

https://github.com/pprp/voc2007_for_yolo_torch

2、到这里,你的数据集就生成好了

├── Datasets

│ │ ├── VOC2007

│ │ │ ├── Annotations

│ │ │ ├── JPEGImages

│ │ │ ├── ImageSets

│ │ │ │ ├── Main

│ │ │ │ │ ├── test.txt

│ │ │ │ │ ├── trainval.txt

|—— val.txt

修改配置

一共是五个文件要修改,去适配自己的voc2007格式的数据集

如下图:

文件夹1:voc.py 修改类别classes

文件夹2: voc0712.py 修改数据集的位置,指明數據集的類型,路徑,以及各個訓練集、測試集文件路徑即可:

例如:

dataset_type = 'VOCDataset'

data_root = 'Datasets/'

...

ann_file=[data_root + 'VOC2007/ImageSets/Main/trainval.txt',]

img_prefix=[data_root + 'VOC2007/', ]

不理解我的意思的可以看:https://www.twblogs.net/a/5d10c615bd9eee1e5c8201d1 配置数据集的部分

文件夹3: faster_rcnn_r50_caffe_fpn_mstrain_1x_voc0712.py 我本次采用的是faster rcnn作为例子。我是把faster_rcnn_r50_caffe_fpn_mstrain_1x_coco.py这个自带的文件,复制了一份改名字为faster_rcnn_r50_caffe_fpn_mstrain_1x_voc0712.py

修改支持的多尺度照片:

img_scale=[(1333, 640), (1333, 672), (1333, 704), (1333, 736),

(1333, 768), (1280, 720), (600, 600)]

其中(1280,720),(600,600)就是我的数据集的大小

文件夹4: faster_rcnn_r50_fpn_1x_voc0712.py 这个也是由faster_rcnn_r50_fpn_1x_coco.py复制而来,修改里面的内容:

原来:

_base_ = [

'../_base_/models/faster_rcnn_r50_fpn.py',

'../_base_/datasets/coco_detection.py',

'../_base_/schedules/schedule_1x.py', '../_base_/default_runtime.py'

]

现在:

_base_ = [

'../_base_/models/faster_rcnn_r50_fpn_voc0712.py',

'../_base_/datasets/voc0712.py',

'../_base_/schedules/schedule_1x.py', '../_base_/default_runtime.py'

]

文件5 : faster_rcnn_r50_fpn_voc0712.py

修改网络参数,主要是num_classes

文件夹6 :

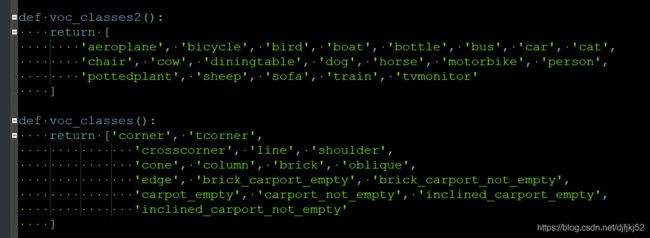

其中 .\mmdetection\mmdet\core\evaluation\class_names.py

可以改变的voc_classes

训练

# Check Pytorch installation

import torch, torchvision

print(torch.__version__, torch.cuda.is_available())

# Check MMDetection installation

import mmdet

print(mmdet.__version__)

# Check mmcv installation

from mmcv.ops import get_compiling_cuda_version, get_compiler_version

print(get_compiling_cuda_version())

print(get_compiler_version())

import mmcv

import matplotlib.pyplot as plt

import copy

import os.path as osp

import mmcv

import numpy as np

from mmdet.datasets.builder import DATASETS

from mmdet.datasets.custom import CustomDataset

from mmcv import Config

cfg = Config.fromfile('./configs/faster_rcnn/faster_rcnn_r50_caffe_fpn_mstrain_1x_voc0712.py')

from mmdet.apis import set_random_seed

# Modify dataset type and path

cfg.dataset_type = 'VOCDataset'

cfg.data_root = 'Datasets/'

cfg.model.roi_head.bbox_head.num_classes = 16

#cfg.total_epochs = 2

# We can still use the pre-trained Mask RCNN model though we do not need to

# use the mask branch

cfg.load_from = 'checkpoints/mask_rcnn_r50_caffe_fpn_mstrain-poly_3x_coco_bbox_mAP-0.408__segm_mAP-0.37_20200504_163245-42aa3d00.pth'

# Set up working dir to save files and logs.

cfg.work_dir = './tutorial_exps'

# The original learning rate (LR) is set for 8-GPU training.

# We divide it by 8 since we only use one GPU.

cfg.optimizer.lr = 0.02 / 8

cfg.lr_config.warmup = None

cfg.log_config.interval = 10

# Change the evaluation metric since we use customized dataset.

cfg.evaluation.metric = 'mAP'

# We can set the evaluation interval to reduce the evaluation times

cfg.evaluation.interval = 12

# We can set the checkpoint saving interval to reduce the storage cost

cfg.checkpoint_config.interval = 12

# Set seed thus the results are more reproducible

cfg.seed = 0

set_random_seed(0, deterministic=False)

cfg.gpu_ids = range(1)

# We can initialize the logger for training and have a look

# at the final config used for training

print(f'Config:\n{cfg.pretty_text}')

from mmdet.datasets import build_dataset

from mmdet.models import build_detector

from mmdet.apis import train_detector

# Build dataset

datasets = [build_dataset(cfg.data.train)]

# Build the detector

model = build_detector(

cfg.model, train_cfg=cfg.train_cfg, test_cfg=cfg.test_cfg)

# Add an attribute for visualization convenience

model.CLASSES = datasets[0].CLASSES

# Create work_dir

mmcv.mkdir_or_exist(osp.abspath(cfg.work_dir))

train_detector(model, datasets, cfg, distributed=False, validate=True)

检验

from mmdet.apis import inference_detector, init_detector, show_result_pyplot

img = mmcv.imread('Datasets/VOC2007/JPEGImages/0001.jpg')

model.cfg = cfg

result = inference_detector(model, img)

#show_result_pyplot(model, img, result)

show_result_pyplot(model, img, result, score_thr=0.5)

参考

https://pprp.github.io/2019/11/13/mmdetection%E4%BD%BF%E7%94%A8%E6%8C%87%E5%8D%97/

https://zhuanlan.zhihu.com/p/42980766

https://www.twblogs.net/a/5d10c615bd9eee1e5c8201d1