本文分为两部分,先写一个入门的教程,然后再给出自己添加maxout与NIN的layer的方法

(一)

其实在Github上已经有答案了(https://github.com/BVLC/caffe/issues/684)

Here's roughly the process I follow.

- Add a class declaration for your layer to the appropriate one of

common_layers.hpp, data_layers.hpp,loss_layers.hpp, neuron_layers.hpp, or vision_layers.hpp. Include an inline implementation oftype and the *Blobs() methods to specify blob number requirements. Omit the *_gpu declarations if you'll only be implementing CPU code.

- Implement your layer in

layers/your_layer.cpp.

-

SetUp for initialization: reading parameters, allocating buffers, etc.

-

Forward_cpu for the function your layer computes

-

Backward_cpu for its gradient

- (Optional) Implement the GPU versions

Forward_gpu and Backward_gpu in layers/your_layer.cu.

- Add your layer to

proto/caffe.proto, updating the next available ID. Also declare parameters, if needed, in this file.

- Make your layer createable by adding it to

layer_factory.cpp.

- Write tests in

test/test_your_layer.cpp. Use test/test_gradient_check_util.hpp to check that your Forward and Backward implementations are in numerical agreement.

上面是一个大致的流程,我就直接翻译过来吧,因为我自己琢磨出来的步骤跟这个是一样的。在这里,我们就添加一个Wtf_Layer,然后作用跟Convolution_Layer一模一样。注意这里的命名方式,Wtf第一个字母大写,剩下的小写,算是一个命名规范吧,强迫症表示很舒服。

1. 首先确定要添加的layer的类型,是common_layer 还是 data_layer 还是loss_layer, neuron_layer, vision_layer ,这里的Wtf_Layer肯定是属vision_layer了,所以打开vision_layers.hpp 然后复制convolution_layer的相关代码,把类名还有构造函数的名字改为WtfLayer,如果没有用到GPU运算,那么把里面的带GPU的函数都删掉

2. 将Wtf_layer.cpp 添加到src\caffe\layers文件夹中,代码内容复制convolution_layer.cpp 把对应的类名修改(可以搜一下conv关键字,然后改为Wtf)

3. 假如有gpu的代码就添加响应的Wtf_layer.cu (这里不添加了)

4. 修改proto/caffe.proto文件,找到LayerType,添加WTF,并更新ID(新的ID应该是34)。假如说Wtf_Layer有参数,比如Convolution肯定是有参数的,那么添加WtfParameter类

5. 在layer_factory.cpp中添加响应的代码,就是一堆if ... else的那片代码

6. 这个可以不做,但是为了结果还是做一个,就是写一个测试文件,检查前向后向传播的数据是否正确。gradient_check的原理可以参考UFLDL教程的对应章节

之后我会更新我自己写的maxout_layer的demo,在这立一个flag以鞭策自己完成吧╮(╯▽╰)╭

(二) 如何添加maxout_layer

表示被bengio的maxout给搞郁闷了,自己摆出一个公式巴拉巴拉说了一堆,结果用到卷积层的maxout却给的另一种方案,吐槽无力,不过后来又想了下应该是bengio没表述清楚的问题。

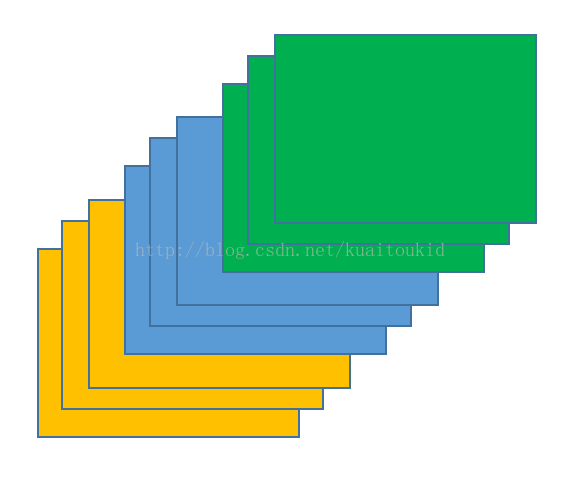

我的maxout的算法思路是这样的,首先要确定一个group_size变量,表示最大值是在group_size这样一个规模的集合下挑选出来的,简而言之就是给定group_size个数,取最大。确定好group_size变量,然后让卷积层的output_num变为原来的group_size倍,这样输出的featuremap的个数就变为原来的group_size倍,然后以group_size为一组划分这些featuremap,每组里面挑出响应最大的点构成一个新的featuremap,这样就得到了maxout层的输出。

要是还不明白我就拿上面的图来说一下,上面一共9张图,相当于卷积层输出9张featuremap,我们每3个为一组,那么maxout层输出9/3=3张featuremap,对于每组featuremaps,比如我们挑出绿色的三张featuremaps,每张大小为w*h,那么声明一个新的output_featuremap大小为w*h,遍历output_featuremap的每个点,要赋的数值为三张绿色featuremap对应点的最大的那个,也就是三个数里面选最大的,这样就输出了一张output_featuremap,剩下的组类似操作。

我觉得到这应该明白maxout的原理跟算法了吧= =,下面就直接贴代码了

新建一个maxout_layer.cpp放到src/caffe/layer文件夹下

- #include

-

- #include

-

-

-

- #include "caffe/filler.hpp"

-

- #include "caffe/layer.hpp"

-

- #include "caffe/util/im2col.hpp"

-

- #include "caffe/util/math_functions.hpp"

-

- #include "caffe/vision_layers.hpp"

-

-

-

- namespace caffe {

-

-

-

- template <typename Dtype>

-

- void MaxoutLayer::SetUp(const vector*>& bottom,

-

- vector*>* top) {

-

- Layer::SetUp(bottom, top);

-

- printf("===============================================================has go into setup !==============================================\n");

-

- MaxoutParameter maxout_param = this->layer_param_.maxout_param();

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

- num_output_ = this->layer_param_.maxout_param().num_output();

-

- CHECK_GT(num_output_, 0) << "output number cannot be zero.";

-

-

-

-

-

- num_ = bottom[0]->num();

-

- channels_ = bottom[0]->channels();

-

- height_ = bottom[0]->height();

-

- width_ = bottom[0]->width();

-

-

-

-

-

-

-

- for (int bottom_id = 1; bottom_id < bottom.size(); ++bottom_id) {

-

- CHECK_EQ(num_, bottom[bottom_id]->num()) << "Inputs must have same num.";

-

- CHECK_EQ(channels_, bottom[bottom_id]->channels())

-

- << "Inputs must have same channels.";

-

- CHECK_EQ(height_, bottom[bottom_id]->height())

-

- << "Inputs must have same height.";

-

- CHECK_EQ(width_, bottom[bottom_id]->width())

-

- << "Inputs must have same width.";

-

- }

-

-

-

-

-

- CHECK_EQ(channels_ % num_output_, 0)

-

- << "Number of channel should be multiples of output number.";

-

-

-

- group_size_ = channels_ / num_output_;

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

-

- (*top)[0]->Reshape(num_, num_output_, height_, width_);

- max_idx_.Reshape(num_, num_output_, height_, width_);

-

-

-

- }

-

-

-

-

-

- template <typename Dtype>

-

- Dtype MaxoutLayer::Forward_cpu(const vector*>& bottom,

-

- vector*>* top) {

-

-

-

- int featureSize = height_ * width_;

-

- Dtype* mask = NULL;

-

- mask = max_idx_.mutable_cpu_data();

-

-

-

-

-

- const int top_count = (*top)[0]->count();

-

- caffe_set(top_count, Dtype(0), mask);

-

-

-

-

-

-

-

-

- for (int i = 0; i < bottom.size(); ++i) {

-

- const Dtype* bottom_data = bottom[i]->cpu_data();

-

- Dtype* top_data = (*top)[i]->mutable_cpu_data();

-

-

-

- for (int n = 0; n < num_; n ++) {

-

-

-

- for (int o = 0; o < num_output_; o ++) {

-

- for (int g = 0; g < group_size_; g ++) {

-

- if (g == 0) {

-

- for (int h = 0; h < height_; h ++) {

-

- for (int w = 0; w < width_; w ++) {

-

- int index = w + h * width_;

-

- top_data[index] = bottom_data[index];

-

- mask[index] = index;

-

- }

-

- }

-

- }

-

- else {

-

- for (int h = 0; h < height_; h ++) {

-

- for (int w = 0; w < width_; w ++) {

-

- int index0 = w + h * width_;

-

- int index1 = index0 + g * featureSize;

-

- if (top_data[index0] < bottom_data[index1]) {

-

- top_data[index0] = bottom_data[index1];

-

- mask[index0] = index1;

-

- }

-

- }

-

- }

-

- }

-

- }

-

- bottom_data += featureSize * group_size_;

-

- top_data += featureSize;

-

- mask += featureSize;

-

- }

-

- }

-

- }

-

-

-

- return Dtype(0.);

-

- }

-

-

-

- template <typename Dtype>

-

- void MaxoutLayer::Backward_cpu(const vector*>& top,

-

- const vector<bool>& propagate_down, vector*>* bottom) {

-

- if (!propagate_down[0]) {

-

- return;

-

- }

-

- const Dtype* top_diff = top[0]->cpu_diff();

-

- Dtype* bottom_diff = (*bottom)[0]->mutable_cpu_diff();

-

- caffe_set((*bottom)[0]->count(), Dtype(0), bottom_diff);

-

- const Dtype* mask = max_idx_.mutable_cpu_data();

-

- int featureSize = height_ * width_;

-

-

- for (int i = 0; i < top.size(); i ++) {

-

- const Dtype* top_diff = top[i]->cpu_diff();

-

- Dtype* bottom_diff = (*bottom)[i]->mutable_cpu_diff();

-

-

-

- for (int n = 0; n < num_; n ++) {

-

-

-

- for (int o = 0; o < num_output_; o ++) {

-

- for (int h = 0; h < height_; h ++) {

-

- for (int w = 0; w < width_; w ++) {

-

- int index = w + h * width_;

-

- int bottom_index = mask[index];

-

- bottom_diff[bottom_index] += top_diff[index];

-

- }

-

- }

-

- bottom_diff += featureSize * group_size_;

-

- top_diff += featureSize;

-

- mask += featureSize;

-

- }

-

- }

-

- }

-

- }

-

-

-

-

-

-

-

-

-

-

-

- INSTANTIATE_CLASS(MaxoutLayer);

-

-

-

- }

里面的乱码是中文,到了linux里面就乱码了,不影响,还一个printf是测试用的(要被笑话用printf了= =)

vision_layers.hpp 里面添加下面的代码

-

-

- template <typename Dtype>

- class MaxoutLayer : public Layer {

- public:

- explicit MaxoutLayer(const LayerParameter& param)

- : Layer(param) {}

- virtual void SetUp(const vector*>& bottom,

- vector*>* top);

-

- virtual inline LayerParameter_LayerType type() const {

- return LayerParameter_LayerType_MAXOUT;

- }

-

- protected:

- virtual Dtype Forward_cpu(const vector*>& bottom,

- vector*>* top);

-

-

- virtual void Backward_cpu(const vector*>& top,

- const vector<bool>& propagate_down, vector*>* bottom);

-

-

-

- int num_output_;

- int num_;

- int channels_;

- int height_;

- int width_;

- int group_size_;

- Blob max_idx_;

-

- };

剩下的是layer_factory.cpp 的改动,不说明了,然后是proto文件的改动

- message MaxoutParameter {

- optional uint32 num_output = 1;

- }

额,当然还有proto文件的其他改动也不说明了,还有test文件,我没写,因为我自己跑了下demo,没啥问题,所以代码可以说是正确的。

不过要说明的是,目前的代码不能接在全连接层后面,是我里面有几句代码写的有问题,之后我会改动一下,问题不大。

然后就是NIN的实现了,表示自己写的渣一样的代码啊,效率目测很低。哦对了,这些都是CPU算的,GPU不大会,还没打算写。

NIN_layer 的实现

我之前一直以为Github上的network in network 是有问题的,事实证明,我最后也写成了Github上面的样子= =所以大家自行搜索caffe+network in network吧……不过得下载,所以我就把网络格式的代码直接贴出来(cifar10数据库的网络结构)

![]()