python opencv 特征匹配

Brute-Force匹配器很简单,它取第一个集合里一个特征的描述子并用第二个集合里所有其他的特征和他通过一些距离计算进行匹配。最近的返回。

对于BF匹配器,首先我们得用cv2.BFMatcher()创建BF匹配器对象.它取两个可选参数,第一个是normType。它指定要使用的距离量度。默认是cv2.NORM_L2。对于SIFT,SURF很好。(还有cv2.NORM_L1)。对于二进制字符串的描述子,比如ORB,BRIEF,BRISK等,应该用cv2.NORM_HAMMING。使用Hamming距离度量,如果ORB使用VTA_K == 3或者4,应该用cv2.NORM_HAMMING2

我们使用SIFT描述子来匹配特征,所以让我们先加载图像,找到描述子。

import numpy as np

import cv2

from matplotlib import pyplot as plt

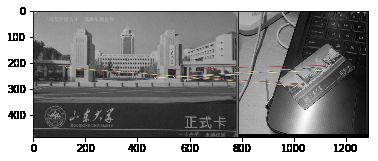

img1 = cv2.imread('xiaoyuanka.jpg',0)# queryImage

img2 = cv2.imread('xiaoyuanka_sence.jpg',0) # trainImage

# Initiate SIFT detector

orb = cv2.ORB_create()

# find the keypoints and descriptors with SIFT

kp1, des1 = orb.detectAndCompute(img1,None)

kp2, des2 = orb.detectAndCompute(img2,None)

接着我们用距离度量cv2.NORM_HAMMING创建一个BFMatcher对象,crossCheck设为真。然后我们用Matcher.match()方法来获得两个图像里最匹配的。我们按他们距离升序排列,这样最匹配的(距离最小)在最前面。然后我们画出最开始的10个匹配(为了好看,你可以增加)

遇到的问题 img3 = cv2.drawMatches(img1,kp1,img2,kp2,matches[:10], None,flags=2) 有none

matches = bf.match(des1,des2) 大小好像有要求

# create BFMatcher object

bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)

# bf = cv.BFMatcher_create(cv2.NORM_HAMMING, crossCheck=True)

# Match descriptors.

matches = bf.match(des1,des2)

# Sort them in the order of their distance.

matches = sorted(matches, key = lambda x:x.distance)

# Draw first 10 matches.

img3 = cv2.drawMatches(img1,kp1,img2,kp2,matches[:10], None,flags=2)

plt.imshow(img3),plt.show()

cv2.imshow('drawMatches',img3)

cv2.waitKey(0)

cv2.destroyAllWindows()

这次,我们使用BFMatcher.knnMatch()来获得k个最匹配的。在这个例子里,我们设置k=2这样我们可以应用比率检测。

import numpy as np

import cv2

from matplotlib import pyplot as plt

img1 = cv2.imread('xiaoyuanka.jpg',0)# queryImage

img2 = cv2.imread('xiaoyuanka_sence.jpg',0) # trainImage

# Initiate SIFT detector

sift = cv2.xfeatures2d.SIFT_create()

# find the keypoints and descriptors with SIFT

kp1, des1 = sift.detectAndCompute(img1,None)

kp2, des2 = sift.detectAndCompute(img2,None)

# BFMatcher with default params

bf = cv2.BFMatcher()

matches = bf.knnMatch(des1,des2, k=2)

# Apply ratio test

good = []

for m,n in matches:

if m.distance < 0.75*n.distance:

good.append([m])

# cv2.drawMatchesKnn expects list of lists as matches.

img3 = cv2.drawMatchesKnn(img1,kp1,img2,kp2,good,None,flags=2)

plt.imshow(img3),plt.show()

cv2.imshow('drawMatches',img3)

cv2.waitKey(0)

cv2.destroyAllWindows()

基于FLANN的匹配器

FLANN是快速估计最近邻的库。它包含了一些为大数据集内搜索快速近邻和高维特征的优化算法。它在大数据集的时候比BFMatcher更快。

import numpy as np

import cv2

from matplotlib import pyplot as plt

img1 = cv2.imread('xiaoyuanka.jpg',0)# queryImage

img2 = cv2.imread('xiaoyuanka_sence.jpg',0) # trainImage

# Initiate SIFT detector

sift = cv2.xfeatures2d.SIFT_create()

# find the keypoints and descriptors with SIFT

kp1, des1 = sift.detectAndCompute(img1,None)

kp2, des2 = sift.detectAndCompute(img2,None)

# FLANN parameters

FLANN_INDEX_KDTREE = 0

index_params = dict(algorithm = FLANN_INDEX_KDTREE, trees = 5)

search_params = dict(checks=50)# or pass empty dictionary

flann = cv2.FlannBasedMatcher(index_params,search_params)

matches = flann.knnMatch(des1,des2,k=2)

# Need to draw only good matches, so create a mask

matchesMask = [[0,0] for i in range(len(matches))]

# ratio test as per Lowe's paper

for i,(m,n) in enumerate(matches):

if m.distance < 0.7*n.distance:

matchesMask[i]=[1,0]

draw_params = dict(matchColor = (0,255,0),

singlePointColor = (255,0,0),

matchesMask = matchesMask,

flags = 0)

img3 = cv2.drawMatchesKnn(img1,kp1,img2,kp2,matches,None,**draw_params)

plt.imshow(img3),plt.show()

cv2.imshow('drawMatches',img3)

cv2.waitKey(0)

cv2.destroyAllWindows()