OpenCV - Feature Matching (Python implementation)

Brute-Force matching

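Brute-force matching is simple: take the descriptor of one keypoint in the first image, compute its distance to the descriptor of every keypoint in the second image, and return the closest one.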
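As a rough illustration of that idea (not OpenCV's actual implementation), the search can be sketched in plain Python. The function name `brute_force_match` and the toy 2-D "descriptors" are assumptions made for this example; real descriptors have many more dimensions.

```python
import math

def brute_force_match(query, train):
    """For each query descriptor, return (query_idx, train_idx, distance)
    of the nearest train descriptor under Euclidean distance."""
    matches = []
    for qi, q in enumerate(query):
        # Test this query descriptor against every train descriptor.
        best_ti, best_dist = min(
            ((ti, math.dist(q, t)) for ti, t in enumerate(train)),
            key=lambda pair: pair[1],
        )
        matches.append((qi, best_ti, best_dist))
    return matches

# Toy descriptors, purely illustrative.
query = [(0.0, 0.0), (5.0, 5.0)]
train = [(4.0, 4.0), (1.0, 0.0), (9.0, 9.0)]
result = brute_force_match(query, train)
```

The cost grows with the product of the two descriptor counts, which is why the exhaustive search becomes slow on large sets.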

To use the BF matcher, first create a BFMatcher object with cv2.BFMatcher(). It takes two optional parameters.

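- normType: the distance measure to use. The default is cv2.NORM_L2, which suits SIFT, SURF and similar descriptors (cv2.NORM_L1 is also available). For binary-string descriptors such as ORB, BRIEF and BRISK, use cv2.NORM_HAMMING, which measures Hamming distance. If ORB is created with WTA_K set to 3 or 4, use cv2.NORM_HAMMING2 instead.

- crossCheck: False by default. When set to True the matching condition is stricter: a pair is returned only if the i-th descriptor in set A has the j-th descriptor in set B as its nearest neighbour, and the j-th descriptor in B also has the i-th descriptor in A as its nearest neighbour. In other words, the two features must match each other.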
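To make the two parameters concrete, here is a minimal pure-Python sketch of Hamming distance on binary descriptors together with a mutual nearest-neighbour (cross-check) filter. The helper names `hamming`, `nearest` and `cross_check_match` are hypothetical, and the one-byte descriptors are toy data; this only imitates the behaviour described above.

```python
def hamming(a: bytes, b: bytes) -> int:
    """Number of differing bits between two equal-length byte strings."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))

def nearest(desc, candidates):
    """Index of the candidate closest to desc under Hamming distance."""
    return min(range(len(candidates)), key=lambda j: hamming(desc, candidates[j]))

def cross_check_match(set_a, set_b):
    """Keep (i, j) only when j is i's nearest in B and i is j's nearest in A."""
    pairs = []
    for i, desc in enumerate(set_a):
        j = nearest(desc, set_b)
        if nearest(set_b[j], set_a) == i:
            pairs.append((i, j))
    return pairs

# One-byte toy descriptors (ORB descriptors are 32 bytes by default).
set_a = [bytes([0b00000000]), bytes([0b11110000]), bytes([0b11111111])]
set_b = [bytes([0b00000001]), bytes([0b11111110])]
pairs = cross_check_match(set_a, set_b)
```

Here the second descriptor of `set_a` picks the second descriptor of `set_b`, but that one prefers the third descriptor of `set_a`, so the pair is dropped.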

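The two important methods are BFMatcher.match() and BFMatcher.knnMatch(). The first returns the best match for each descriptor; the second returns the k best matches, where k is chosen by the caller.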
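The difference in result shape between the two methods can be sketched without OpenCV. The functions `knn_match` and `match` below are illustrative stand-ins, not the library methods, and they return plain (train_idx, distance) tuples rather than DMatch objects.

```python
import heapq
import math

def knn_match(query, train, k):
    """For each query descriptor, return its k nearest train descriptors
    as (train_idx, distance) tuples, closest first."""
    results = []
    for q in query:
        scored = [(math.dist(q, t), ti) for ti, t in enumerate(train)]
        nearest = heapq.nsmallest(k, scored)
        results.append([(ti, dist) for dist, ti in nearest])
    return results

def match(query, train):
    """Single best match per query descriptor: the k = 1 case, flattened."""
    return [neighbours[0] for neighbours in knn_match(query, train, k=1)]

query = [(0.0, 0.0)]
train = [(3.0, 4.0), (0.0, 1.0), (6.0, 8.0)]
two_best = knn_match(query, train, k=2)  # one inner list per query descriptor
best = match(query, train)               # one entry per query descriptor
```

Note the nesting: the k-best variant yields a list of lists, which is also the shape cv2.drawMatchesKnn expects.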

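Use cv2.drawMatches() to draw the matches: it places the two images side by side and draws lines between the matched keypoints. If BFMatcher.knnMatch() was used, cv2.drawMatchesKnn() draws lines from each keypoint to its k best matches. To draw only some of them, pass a mask to the function.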

import numpy as np

import cv2

import matplotlib.pyplot as plt

img1 = cv2.imread('image.jpg') # queryImage

ROI = img1[250:350,150:300] # trainImage

img2=cv2.resize(ROI,None,fx=2,fy=2,interpolation=cv2.INTER_CUBIC)

# Initiate ORB detector

orb = cv2.ORB_create()

# find the keypoints and descriptors with ORB

kp1, des1 = orb.detectAndCompute(img1,None)

kp2, des2 = orb.detectAndCompute(img2,None)

# create BFMatcher object

bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)

# Match descriptors.

matches = bf.match(des1,des2)

# Sort them in the order of their distance.

matches = sorted(matches, key = lambda x:x.distance)

# Draw first 10 matches.

img3 = cv2.drawMatches(img1,kp1,img2,kp2,matches[:10],None, flags=2)

cv2.imshow("show",img3)

cv2.waitKey()

cv2.destroyAllWindows()

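The DMatch object

The line matches = bf.match(des1,des2) returns a list of DMatch objects. A DMatch object has the following attributes:

- DMatch.distance - distance between the two descriptors. The lower, the better.

- DMatch.trainIdx - index of the descriptor in the train descriptors

- DMatch.queryIdx - index of the descriptor in the query descriptors

- DMatch.imgIdx - index of the train image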
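A typical use of these attributes is to look up the pixel coordinates of each matched pair. The sketch below runs without OpenCV by using stand-ins: the `Match` dataclass only mirrors the four attribute names listed above, and the keypoints imitate just the `.pt` attribute of cv2.KeyPoint. With real results you would pass the list returned by bf.match() and the keypoint lists from detectAndCompute() instead.

```python
from dataclasses import dataclass
from types import SimpleNamespace

@dataclass
class Match:
    """Hypothetical stand-in mirroring the DMatch attribute names."""
    queryIdx: int
    trainIdx: int
    imgIdx: int
    distance: float

def matched_points(matches, kp_query, kp_train):
    """Return [(query_pt, train_pt), ...] ordered from best to worst match."""
    ordered = sorted(matches, key=lambda m: m.distance)
    return [(kp_query[m.queryIdx].pt, kp_train[m.trainIdx].pt) for m in ordered]

# Stand-in keypoints: only the .pt (x, y) attribute is imitated.
kp1 = [SimpleNamespace(pt=(10.0, 20.0)), SimpleNamespace(pt=(30.0, 40.0))]
kp2 = [SimpleNamespace(pt=(11.0, 21.0)), SimpleNamespace(pt=(31.0, 41.0))]
matches = [
    Match(queryIdx=0, trainIdx=0, imgIdx=0, distance=42.0),
    Match(queryIdx=1, trainIdx=1, imgIdx=0, distance=7.0),
]
pairs = matched_points(matches, kp1, kp2)
```

queryIdx indexes into the first keypoint list and trainIdx into the second, so mixing them up silently pairs the wrong points.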

Brute-force matching with SIFT descriptors and the ratio test

import numpy as np

import cv2

img1 = cv2.imread('image.jpg') # queryImage

ROI = img1[250:350,150:300] # trainImage

img2=cv2.resize(ROI,None,fx=2,fy=2,interpolation=cv2.INTER_CUBIC)

# Initiate SIFT detector

sift = cv2.SIFT_create()  # OpenCV >= 4.4; older opencv-contrib builds use cv2.xfeatures2d.SIFT_create()

# find the keypoints and descriptors with SIFT

kp1, des1 = sift.detectAndCompute(img1,None)

kp2, des2 = sift.detectAndCompute(img2,None)

# BFMatcher with default params

bf = cv2.BFMatcher()

matches = bf.knnMatch(des1,des2, k=2)

# Apply ratio test

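# Ratio test: for a descriptor A, take its nearest neighbour B and its second-nearest
# neighbour C. The match A-B is accepted only when distance(A, B) / distance(A, C) is
# below a threshold (0.75 here). Matches are assumed to be one-to-one, and the ideal
# distance of a true match is 0, so a true match should be far closer than the runner-up.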

good = []

for m, n in matches:
    if m.distance < 0.75 * n.distance:
        good.append([m])

# cv2.drawMatchesKnn expects a list of lists as matches.

img3 = cv2.drawMatchesKnn(img1,kp1,img2,kp2,good,None,flags=2)

cv2.imshow("show",img3)

cv2.waitKey()

cv2.destroyAllWindows()

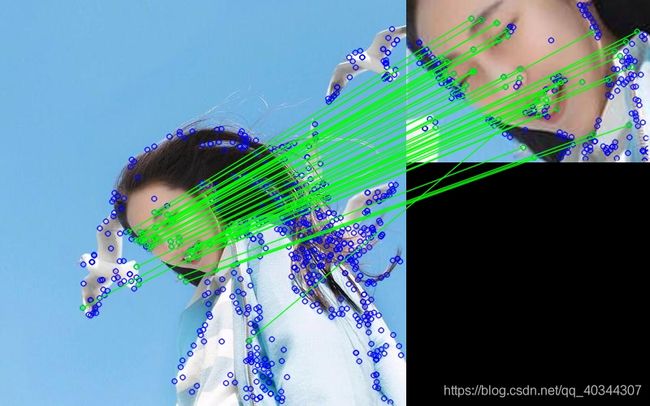
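The ratio test used in the example above can be isolated into a small function that works on distances alone. This is a sketch under assumptions: `ratio_test` is a hypothetical helper, and each input entry holds the distances of the nearest and second-nearest neighbours of one query descriptor.

```python
def ratio_test(knn_distances, ratio=0.75):
    """Return indices of queries whose best distance is strictly below
    ratio * second-best distance."""
    keep = []
    for i, pair in enumerate(knn_distances):
        if len(pair) < 2:
            continue  # fewer than two neighbours: the test cannot be applied
        if pair[0] < ratio * pair[1]:
            keep.append(i)
    return keep

# (best, second-best) distances per query descriptor, illustrative values.
distances = [(10.0, 100.0), (50.0, 55.0), (30.0, 40.0), (29.0, 40.0), (20.0,)]
kept = ratio_test(distances)
```

A lower ratio keeps fewer but more distinctive matches; the two examples in this post use 0.75 and 0.7.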

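FLANN matcher

FLANN stands for Fast Library for Approximate Nearest Neighbors. It is a collection of algorithms optimized for fast nearest-neighbour search in large datasets and high-dimensional features. On large datasets it runs faster than BFMatcher.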

import numpy as np

import cv2

img1 = cv2.imread('image.jpg') # queryImage

ROI = img1[250:350,150:300] # trainImage

img2=cv2.resize(ROI,None,fx=2,fy=2,interpolation=cv2.INTER_CUBIC)

# Initiate SIFT detector

sift = cv2.SIFT_create()  # OpenCV >= 4.4; older opencv-contrib builds use cv2.xfeatures2d.SIFT_create()

# find the keypoints and descriptors with SIFT

kp1, des1 = sift.detectAndCompute(img1,None)

kp2, des2 = sift.detectAndCompute(img2,None)

# FLANN parameters

FLANN_INDEX_KDTREE = 1

index_params = dict(algorithm = FLANN_INDEX_KDTREE, trees = 5)

search_params = dict(checks=50) # or pass empty dictionary

flann = cv2.FlannBasedMatcher(index_params,search_params)

matches = flann.knnMatch(des1,des2,k=2)

# Need to draw only good matches, so create a mask

matchesMask = [[0,0] for i in range(len(matches))]

# ratio test as per Lowe's paper

for i, (m, n) in enumerate(matches):
    if m.distance < 0.7 * n.distance:
        matchesMask[i] = [1, 0]  # draw only the best of the two neighbours

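draw_params = dict(matchColor=(0, 255, 0),        # accepted matches in green
                   singlePointColor=(255, 0, 0),  # unmatched keypoints in blue (BGR)
                   matchesMask=matchesMask,       # draw only pairs whose mask entry is 1
                   flags=0)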

img3 = cv2.drawMatchesKnn(img1,kp1,img2,kp2,matches,None,**draw_params)

cv2.imshow("show",img3)

cv2.waitKey()

cv2.destroyAllWindows()
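The KD-tree index above fits float descriptors such as SIFT. For binary descriptors such as ORB, the OpenCV tutorials suggest an LSH index instead. The helper `flann_index_params` below is hypothetical and only packages the two parameter sets; the LSH values are the tutorial's starting point, not tuned settings.

```python
FLANN_INDEX_KDTREE = 1  # same constant as in the example above
FLANN_INDEX_LSH = 6     # value used in the OpenCV tutorials for the LSH index

def flann_index_params(binary_descriptors):
    """Hypothetical helper: choose FLANN index parameters by descriptor type."""
    if binary_descriptors:
        # Locality-sensitive hashing, suited to Hamming-distance descriptors.
        return dict(algorithm=FLANN_INDEX_LSH,
                    table_number=6,
                    key_size=12,
                    multi_probe_level=1)
    # Randomized KD-trees, suited to float descriptors.
    return dict(algorithm=FLANN_INDEX_KDTREE, trees=5)

sift_params = flann_index_params(binary_descriptors=False)
orb_params = flann_index_params(binary_descriptors=True)
```

Either dictionary would be passed as the first argument of cv2.FlannBasedMatcher. With an LSH index some queries may come back with fewer than k neighbours, so it is safer to check the length of each result before unpacking it in a ratio-test loop.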