Integrating Elasticsearch with Hive

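Elasticsearch is an open-source, distributed, RESTful search engine built on Lucene. It is designed for cloud computing and provides real-time search; it is stable, reliable, fast, and easy to install and use.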

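Hive is a data warehouse built on HDFS that lets users query big data stored in HDFS with a SQL-like language (HiveQL). Combining Elasticsearch with Hive makes it possible to access data held in HDFS in real time.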

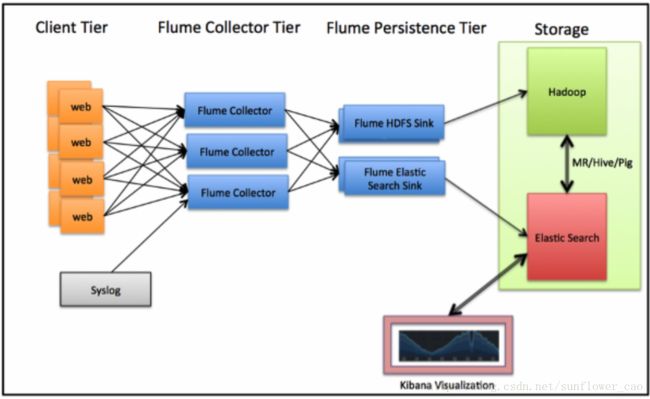

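The figure above shows logs flowing through the Flume Collector to a Sink and then into HDFS and Elasticsearch. Trends such as the current number of users or the request count can then be charted in real time through the Elasticsearch API, giving a visual view of the data.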

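Integration requires two tables in Hive: a source data table, and a second table that behaves like a view defined over the source table but is not itself where the data is stored. Costin Leau, the author, describes it this way on the mailing list (http://elasticsearch-users.115913.n3.nabble.com/Elasticsearch-Hadoop-td4047293.html):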

There is no duplication per-se in HDFS. Hive tables are just 'views' of data - one sits unindexed, in raw format in HDFS, the other one is indexed and analyzed in Elasticsearch. You can't combine the two since they are completely different things - one is a file-system, the other one is a search and analytics engine.

The Maven coordinates of the connector are groupId org.elasticsearch, artifactId elasticsearch-hadoop, version 2.0.1.

The elasticsearch-hadoop project is hosted on GitHub: https://github.com/elasticsearch/elasticsearch-hadoop#readme

At the time of writing the latest version is 2.0.1, which supports all current Hadoop distributions.

Once you have the jar, copy it into Hive's lib directory and start the Hive command line as follows:

bin/hive -hiveconf hive.aux.jars.path=/home/hadoop/hive/lib/elasticsearch-hadoop-2.0.1.jar

Create the view table:

CREATE EXTERNAL TABLE user (id INT, name STRING)

STORED BY 'org.elasticsearch.hadoop.hive.ESStorageHandler'

TBLPROPERTIES('es.resource' = 'radiott/artiststt','es.index.auto.create' = 'true');

In es.resource, the two parts of radiott/artiststt are the index name and the index type, respectively. Elasticsearch uses them when accessing the data.
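To make the index/type split concrete, here is a minimal sketch in plain Java. `EsResource` is a hypothetical helper written for this post, not a class from elasticsearch-hadoop; it only illustrates how a value such as radiott/artiststt breaks down into the two names.

```java
// Sketch only: EsResource is a hypothetical helper, not part of elasticsearch-hadoop.
final class EsResource {
    final String index;
    final String type; // null when the resource names an index only

    private EsResource(String index, String type) {
        this.index = index;
        this.type = type;
    }

    // Splits "index/type" at the first slash; a value without a slash has no type.
    static EsResource parse(String resource) {
        String trimmed = resource.trim();
        int slash = trimmed.indexOf('/');
        if (slash < 0) {
            return new EsResource(trimmed, null);
        }
        return new EsResource(trimmed.substring(0, slash), trimmed.substring(slash + 1));
    }

    public static void main(String[] args) {
        EsResource r = EsResource.parse("radiott/artiststt");
        // prints: index=radiott, type=artiststt
        System.out.println("index=" + r.index + ", type=" + r.type);
    }
}
```

The same two names are what the Java client later passes to prepareSearch(...) and setTypes(...).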

Then create the source data table:

CREATE TABLE user_source (id INT, name STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

Sample data:

1,medcl

2,lcdem

3,tom

4,jack

LOAD DATA LOCAL INPATH '/home/hadoop/files1.txt' OVERWRITE INTO TABLE user_source;

hive> select * from user_source;

OK

1 medcl

2 lcdem

3 tom

4 jack

Time taken: 3.4 seconds, Fetched: 4 row(s)
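The ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' clause is what turns a line such as 2,lcdem into the columns id and name. The sketch below mimics that split in plain Java for illustration. `DelimitedRow` is a hypothetical class, and it is stricter than Hive: it rejects a malformed line, whereas Hive would typically return NULL for a field it cannot parse.

```java
// Sketch only: DelimitedRow is a hypothetical class that imitates the (id INT, name STRING) layout.
final class DelimitedRow {
    final int id;
    final String name;

    private DelimitedRow(int id, String name) {
        this.id = id;
        this.name = name;
    }

    // Splits one text line on ',' and converts the first field to an int.
    static DelimitedRow parse(String line) {
        String[] fields = line.split(",", -1);
        if (fields.length != 2) {
            throw new IllegalArgumentException("expected 2 fields but got " + fields.length);
        }
        return new DelimitedRow(Integer.parseInt(fields[0].trim()), fields[1]);
    }

    public static void main(String[] args) {
        String[] lines = {"1,medcl", "2,lcdem", "3,tom", "4,jack"};
        for (String line : lines) {
            DelimitedRow row = DelimitedRow.parse(line);
            System.out.println(row.id + " " + row.name);
        }
    }
}
```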

Load the data into the user table:

INSERT OVERWRITE TABLE user SELECT s.id, s.name FROM user_source s;

hive> INSERT OVERWRITE TABLE user SELECT s.id, s.name FROM user_source s;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1412756024135_0007, Tracking URL = N/A

Kill Command = /home/hadoop/hadoop/bin/hadoop job -kill job_1412756024135_0007

Hadoop job information for Stage-0: number of mappers: 1; number of reducers: 0

2014-10-08 17:44:04,121 Stage-0 map = 0%, reduce = 0%

2014-10-08 17:45:04,360 Stage-0 map = 0%, reduce = 0%, Cumulative CPU 1.21 sec

2014-10-08 17:45:05,505 Stage-0 map = 0%, reduce = 0%, Cumulative CPU 1.21 sec

2014-10-08 17:45:06,707 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.29 sec

2014-10-08 17:45:07,728 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.29 sec

2014-10-08 17:45:08,757 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.29 sec

2014-10-08 17:45:09,778 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.29 sec

2014-10-08 17:45:10,800 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.29 sec

2014-10-08 17:45:11,915 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.29 sec

2014-10-08 17:45:12,969 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

2014-10-08 17:45:14,231 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

2014-10-08 17:45:15,258 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

2014-10-08 17:45:16,300 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

2014-10-08 17:45:17,326 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

2014-10-08 17:45:18,352 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

2014-10-08 17:45:19,374 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

2014-10-08 17:45:20,396 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

2014-10-08 17:45:21,423 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

2014-10-08 17:45:22,447 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

2014-10-08 17:45:23,475 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.42 sec

MapReduce Total cumulative CPU time: 1 seconds 420 msec

Ended Job = job_1412756024135_0007

MapReduce Jobs Launched:

Job 0: Map: 1 Cumulative CPU: 1.42 sec HDFS Read: 253 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 420 msec

OK

Time taken: 113.778 seconds
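Each row written by this INSERT ends up in the index as a JSON document whose fields are named after the Hive columns, which is the shape of the _source entries in the search response further down. The sketch below shows that row-to-document mapping in plain Java. `RowToJson` is a hypothetical helper for illustration only; it is not how elasticsearch-hadoop serializes rows internally.

```java
// Sketch only: RowToJson is a hypothetical helper, not the connector's serializer.
final class RowToJson {
    // Escapes backslashes and double quotes only; control characters are not handled.
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    // Builds a document with one field per column of the (id, name) table.
    static String toJson(int id, String name) {
        return "{\"id\":" + id + ",\"name\":" + quote(name) + "}";
    }

    public static void main(String[] args) {
        // prints: {"id":2,"name":"lcdem"}
        System.out.println(toJson(2, "lcdem"));
    }
}
```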

The radiott index now appears under the Elasticsearch data directory:

hadoop@caozw:~/elasticsearch-1.3.3/data/elasticsearch/nodes/0/indices$ ls

index1demo indexdemo radiotest radiott

The data can now be accessed with the following Java program:

package com.cn.bhh.example.analysis.elasticsearch.hive;

import com.cn.bhh.example.analysis.elasticsearch.local.DataFactory;

import com.cn.bhh.example.analysis.elasticsearch.local.ElasticSearchHandler;

import com.cn.bhh.example.analysis.elasticsearch.local.Medicine;

import org.elasticsearch.action.search.SearchRequestBuilder;

import org.elasticsearch.action.search.SearchResponse;

import org.elasticsearch.action.search.SearchType;

import org.elasticsearch.client.Client;

import org.elasticsearch.client.transport.TransportClient;

import org.elasticsearch.common.transport.InetSocketTransportAddress;

import org.elasticsearch.hadoop.hive.EsStorageHandler;

import org.elasticsearch.index.query.BoolQueryBuilder;

import org.elasticsearch.index.query.QueryBuilder;

import org.elasticsearch.index.query.QueryBuilders;

import org.elasticsearch.index.query.QueryStringQueryBuilder;

import org.elasticsearch.search.SearchHit;

import org.elasticsearch.search.SearchHits;

import java.util.ArrayList;

import java.util.List;

/**

* Created by caozw on 10/8/14.

*/

public class Test {

private Client client;

public Test(){

// Use the local machine as the node

this("127.0.0.1");

}

public Test(String ipAddress){

// Cluster connection timeout setting

/*

Settings settings = ImmutableSettings.settingsBuilder().put("client.transport.ping_timeout", "10s").build();

client = new TransportClient(settings);

*/

client = new TransportClient().addTransportAddress(new InetSocketTransportAddress(ipAddress, 9300));

}

public List<Medicine> searcher(QueryBuilder queryBuilder, String indexname, String type){

SearchRequestBuilder builder = client.prepareSearch(indexname).setTypes(type).setSearchType(SearchType.DEFAULT).setFrom(0).setSize(100);

builder.setQuery(queryBuilder);

SearchResponse response = builder.execute().actionGet();

System.out.println(" " + response);

//System.out.println(response.getHits().getTotalHits());

List<Medicine> list = new ArrayList<Medicine>();

SearchHits hits = response.getHits();

SearchHit[] searchHists = hits.getHits();

if(searchHists.length>0){

for(SearchHit hit:searchHists){

Integer id = (Integer)hit.getSource().get("id");

String name = (String) hit.getSource().get("name");

//String function = (String) hit.getSource().get("funciton");

String function = "";

list.add(new Medicine(id, name, function));

}

}

return list;

}

public static void main(String[] args) {

Test esHandler = new Test();

//List jsondata = DataFactory.getInitJsonData();

//List jsondata = DataFactory.getInitJsonData();

String indexname = "radiott";

String type = "artiststt";

//esHandler.createIndexResponse(indexname, type, jsondata);

// Query conditions

/*QueryBuilder queryBuilder = QueryBuilders.fuzzyQuery("name", "银花 感冒 颗粒");*/

BoolQueryBuilder qb = QueryBuilders.boolQuery().must(new QueryStringQueryBuilder("lcdem").field("name"));

//.should(new QueryStringQueryBuilder("解表").field("function"));

/*QueryBuilder queryBuilder = QueryBuilders.boolQuery()

.must(QueryBuilders.termQuery("id", 1));*/

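List<Medicine> result = esHandler.searcher(qb, indexname, type);

// Assumption: the body of this loop was truncated in the source. This version assumes

// Medicine exposes getId() and getName(), and prints in the format seen in the output.

for(int i=0; i<result.size(); i++){

Medicine medicine = result.get(i);

System.out.println("(" + medicine.getId() + ")姓名:" + medicine.getName());

}

}

}

Output: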

/home/hadoop/jdk1.7.0_67/bin/java -Didea.launcher.port=7533 -Didea.launcher.bin.path=/home/hadoop/idea-IU-135.909/bin -Dfile.encoding=UTF-8 -classpath [JDK, Hadoop, Hive, HBase and other dependency jars omitted]:/home/hadoop/apache-maven-3.1.1/repo/org/elasticsearch/elasticsearch-hadoop/2.0.1/elasticsearch-hadoop-2.0.1.jar:/home/hadoop/apache-maven-3.1.1/repo/org/elasticsearch/elasticsearch/1.3.3/elasticsearch-1.3.3.jar:[Lucene 4.9.1 jars omitted]:/home/hadoop/idea-IU-135.909/lib/idea_rt.jar com.intellij.rt.execution.application.AppMain com.cn.bhh.example.analysis.elasticsearch.hive.Test

14/10/08 18:02:24 INFO elasticsearch.plugins: [Termagaira] loaded [], sites []

{

"took" : 90,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"failed" : 0

},

"hits" : {

"total" : 2,

"max_score" : 1.4054651,

"hits" : [ {

"_index" : "radiott",

"_type" : "artiststt",

"_id" : "Zc0L0HXxQ2m69Oif0hAwGQ",

"_score" : 1.4054651,

"_source":{"id":2,"name":"lcdem"}

}, {

"_index" : "radiott",

"_type" : "artiststt",

"_id" : "5bZnD4BRTjmdmCPmVM6cBw",

"_score" : 1.0,

"_source":{"id":2,"name":"lcdem"}

} ]

}

}

(2)姓名:lcdem

(2)姓名:lcdem

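A second approach is to define a Hive external table over the existing index and filter it with es.query:

CREATE EXTERNAL TABLE artiststt (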

id BIGINT,

name STRING)

STORED BY 'org.elasticsearch.hadoop.hive.EsStorageHandler'

TBLPROPERTIES('es.resource' = 'radiott/artiststt', 'es.query' = '?q=me*');

hive> select * from estest;

OK

1 medcl

Time taken: 0.585 seconds, Fetched: 1 row(s)
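The es.query value '?q=me*' uses the same URI query syntax that Elasticsearch accepts on its _search endpoint. As a rough sketch, the Java below builds such a search URL for the index and type used here. `UriSearch` is a hypothetical helper, and the host localhost and HTTP port 9200 are assumptions (9200 is the Elasticsearch default); the code only builds the string and does not contact a cluster.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

// Sketch only: UriSearch is a hypothetical helper; host and port are assumptions.
final class UriSearch {
    // Builds http://host:port/index/type/_search?q=<url-encoded query>.
    static String searchUrl(String host, int port, String index, String type, String query) {
        try {
            return "http://" + host + ":" + port + "/" + index + "/" + type
                    + "/_search?q=" + URLEncoder.encode(query, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is always available on a JVM, so this should not happen.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // prints: http://localhost:9200/radiott/artiststt/_search?q=me*
        System.out.println(searchUrl("localhost", 9200, "radiott", "artiststt", "me*"));
    }
}
```

Opening the printed URL in a browser or with curl against a running node should return the same kind of JSON response as the Java client above.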

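By contrast, querying the first table (user) with HiveQL fails. Its id column is declared INT, but the value comes back from Elasticsearch as a long, hence the cast error below. Declaring the column BIGINT, as artiststt1 does further down, avoids it: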

hive> select * from user;

OK

Failed with exception java.io.IOException:java.lang.ClassCastException: org.apache.hadoop.io.LongWritable cannot be cast to org.apache.hadoop.io.IntWritable

Time taken: 0.472 seconds
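The exception suggests that the id values arrive as longs while the Hive column expects ints. The same kind of failure can be reproduced in plain Java with boxed numbers, as sketched below. This is an analogy using Integer and Long rather than Hadoop's IntWritable and LongWritable, and `CastDemo` is a hypothetical class.

```java
// Sketch only: an analogy with boxed Java numbers, not Hadoop Writable types.
final class CastDemo {
    // Unsafe: assumes the boxed value is exactly an Integer.
    static int unsafeToInt(Object value) {
        return (Integer) value;
    }

    // Safer: accepts any Number (Integer, Long, ...) and narrows explicitly.
    static int safeToInt(Object value) {
        return ((Number) value).intValue();
    }

    public static void main(String[] args) {
        Object fromEs = Long.valueOf(2L); // stands in for an id that arrives as a long
        System.out.println(safeToInt(fromEs));
        try {
            unsafeToInt(fromEs);
        } catch (ClassCastException e) {
            System.out.println("cast failed: " + e.getMessage());
        }
    }
}
```

The same caution applies to the (Integer) cast on the id field in the Java client above, which would fail in the same way if a value were ever returned as a Long.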

hive> CREATE EXTERNAL TABLE artiststt1 (

> id BIGINT,

> name STRING)

> STORED BY 'org.elasticsearch.hadoop.hive.EsStorageHandler'

> TBLPROPERTIES('es.resource' = 'radiott1/artiststt1', 'es.query' = '?q=*');

OK

Time taken: 0.986 seconds

hive> INSERT OVERWRITE TABLE artiststt1 SELECT s.id, s.name FROM user_source s;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1412756024135_0010, Tracking URL = http://caozw:8088/proxy/application_1412756024135_0010/

Kill Command = /home/hadoop/hadoop/bin/hadoop job -kill job_1412756024135_0010

Hadoop job information for Stage-0: number of mappers: 1; number of reducers: 0

2014-10-08 18:07:21,587 Stage-0 map = 0%, reduce = 0%

2014-10-08 18:07:48,337 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.45 sec

2014-10-08 18:07:49,579 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.45 sec

2014-10-08 18:07:50,605 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.45 sec

2014-10-08 18:07:54,561 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.45 sec

2014-10-08 18:07:55,580 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.45 sec

2014-10-08 18:07:56,600 Stage-0 map = 100%, reduce = 0%, Cumulative CPU 1.45 sec

MapReduce Total cumulative CPU time: 1 seconds 450 msec

Ended Job = job_1412756024135_0010

MapReduce Jobs Launched:

Job 0: Map: 1 Cumulative CPU: 1.45 sec HDFS Read: 253 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 450 msec

OK

Time taken: 58.285 seconds

hive> select * from artiststt1;

OK

1 medcl

3 tom

2 lcdem

4 jack

Time taken: 0.609 seconds, Fetched: 4 row(s)