Data Download

Download the data

!wget https://tianchi-competition.oss-cn-hangzhou.aliyuncs.com/531810/train_set.csv.zip

!wget https://tianchi-competition.oss-cn-hangzhou.aliyuncs.com/531810/test_a.csv.zip

!wget https://tianchi-competition.oss-cn-hangzhou.aliyuncs.com/531810/test_a_sample_submit.csv

Unzip the data. There are three files in total: the training data (train_set.csv), the test data (test_a.csv), and the sample submission file (test_a_sample_submit.csv).

!mkdir -p /content/drive/My\ Drive/competitions/NLPNews

!unzip /content/test_a.csv.zip -d /content/drive/My\ Drive/competitions/NLPNews/test

!unzip /content/train_set.csv.zip -d /content/drive/My\ Drive/competitions/NLPNews/train

!mv /content/test_a_sample_submit.csv /content/drive/My\ Drive/competitions/NLPNews/submit.csv

!mv /content/drive/My\ Drive/competitions/NLPNews/test/test_a.csv /content/drive/My\ Drive/competitions/NLPNews/test.csv

!mv /content/drive/My\ Drive/competitions/NLPNews/train/train_set.csv /content/drive/My\ Drive/competitions/NLPNews/train.csv

Read the data

import pandas as pd

import os

from collections import Counter

import matplotlib.pyplot as plt

%matplotlib inline

root_dir = '/content/drive/My Drive/competitions/NLPNews'

train_df = pd.read_csv(root_dir+'/train.csv', sep='\t')

train_df['word_cnt'] = train_df['text'].apply(lambda x: len(x.split(' ')))

train_df.head(10)

| | label | text | word_cnt |
|---|---|---|---|
| 0 | 2 | 2967 6758 339 2021 1854 3731 4109 3792 4149 15... | 1057 |
| 1 | 11 | 4464 486 6352 5619 2465 4802 1452 3137 5778 54... | 486 |
| 2 | 3 | 7346 4068 5074 3747 5681 6093 1777 2226 7354 6... | 764 |
| 3 | 2 | 7159 948 4866 2109 5520 2490 211 3956 5520 549... | 1570 |
| 4 | 3 | 3646 3055 3055 2490 4659 6065 3370 5814 2465 5... | 307 |
| 5 | 9 | 3819 4525 1129 6725 6485 2109 3800 5264 1006 4... | 1050 |
| 6 | 3 | 307 4780 6811 1580 7539 5886 5486 3433 6644 58... | 267 |
| 7 | 10 | 26 4270 1866 5977 3523 3764 4464 3659 4853 517... | 876 |
| 8 | 12 | 2708 2218 5915 4559 886 1241 4819 314 4261 166... | 314 |
| 9 | 3 | 3654 531 1348 29 4553 6722 1474 5099 7541 307 ... | 1086 |

Inspect the data

train_df['word_cnt'] = train_df['word_cnt'].apply(int)

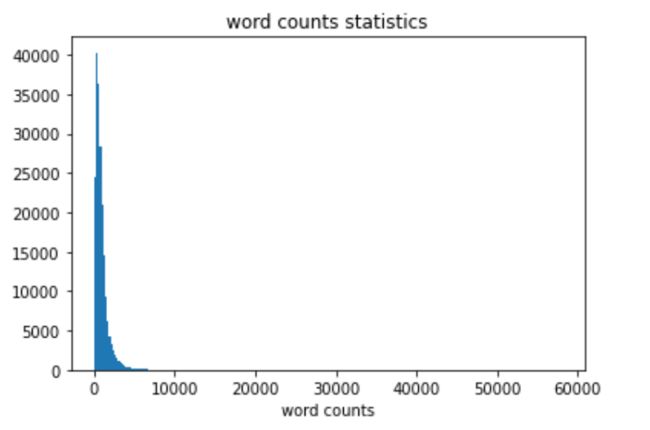

train_df['word_cnt'].describe()

count 200000.000000

mean 907.207110

std 996.029036

min 2.000000

25% 374.000000

50% 676.000000

75% 1131.000000

max 57921.000000

Name: word_cnt, dtype: float64

As the summary shows, the number of words per news article varies widely: the shortest article has only 2 words, while the longest has 57921.
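With a distribution this skewed, quartiles and the share of articles below a given length can be more informative than the maximum. The following is a minimal sketch using only the standard library. The `lengths` values and the `cap` threshold are made up for illustration and are not taken from the dataset.

```python
from statistics import quantiles

# Hypothetical word counts for a handful of articles (illustrative only).
lengths = [2, 120, 374, 500, 676, 800, 1131, 1500, 3000, 57921]

# quantiles(..., n=4) returns the three quartile cut points.
q1, median, q3 = quantiles(lengths, n=4, method="inclusive")

# Share of articles at or below a candidate length threshold (assumed value).
cap = 2000
covered = sum(1 for n in lengths if n <= cap) / len(lengths)

print(q1, median, q3, covered)
```

On the real data, the same quantities could be read from `train_df['word_cnt']` instead of the toy list.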

plt.hist(train_df['word_cnt'], bins=255)

plt.title('word counts statistics')

plt.xlabel('word counts')

plt.show()

plt.bar(range(1, 15), train_df['label'].value_counts().values)

plt.title('label counts statistic')

# plt.xticks(range(1, 15), labels=labels)

plt.xlabel('label')

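plt.show()

# English glosses, in order: technology, stocks, sports, entertainment, politics, society, education, finance, home, games, real estate, fashion, lottery, horoscope
labels = ['科技', '股票', '体育', '娱乐', '时政', '社会', '教育', '财经', '家居', '游戏', '房产', '时尚', '彩票', '星座']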

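# value_counts() returns counts sorted in descending order, so this pairing assumes the labels list follows that same order
for label, cnt in zip(labels, train_df['label'].value_counts()):
    print(label, cnt)

科技 38918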

股票 36945

体育 31425

娱乐 22133

时政 15016

社会 12232

教育 9985

财经 8841

家居 7847

游戏 5878

房产 4920

时尚 3131

彩票 1821

星座 908
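The counts above are far from uniform. The short sketch below turns them into class shares and an imbalance ratio. The numbers are copied from the printed output, in the order shown; the variable names are illustrative only.

```python
# Per-class counts copied from the printed output above, in the order shown.
counts = [38918, 36945, 31425, 22133, 15016, 12232, 9985,
          8841, 7847, 5878, 4920, 3131, 1821, 908]

total = sum(counts)
shares = [c / total for c in counts]

# Ratio between the largest and the smallest class.
imbalance_ratio = max(counts) / min(counts)

print(total)
print(round(shares[0], 4), round(shares[-1], 4))
print(round(imbalance_ratio, 1))
```

With these counts, the largest class is roughly 43 times the size of the smallest.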

s = ' '.join(list(train_df['text']))

counter = Counter(s.split(' '))

counter = sorted(counter.items(), key=lambda x: x[1], reverse=True)

print('the most frequent word: ', counter[0])

print('the least frequent word: ', counter[-1])

- Assuming that characters 3750, 900, and 648 are sentence punctuation marks, how many sentences does each news article contain on average?

import re

# The \b anchors keep the pattern from matching inside longer tokens such as 13750
train_df['sentence_cnt'] = train_df['text'].apply(lambda x: len(re.split(r'\b(?:3750|900|648)\b', x)))

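# Average number of sentences per article
print(train_df['sentence_cnt'].mean())

pd.concat([train_df.head(5), train_df.tail(5)], axis=0)

- Find the most frequent character in each news category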

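for i in train_df['label'].unique():
    # Concatenate all texts of this label and count every token
    s = ' '.join(list(train_df.loc[train_df['label'] == i, 'text']))
    counter = Counter(s.split(' '))
    # most_common(1) returns a list holding the single most frequent (token, count) pair
    most = counter.most_common(1)[0]
    print(i, most)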
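Both exercises above can be checked on toy data before running them on the full training set. The sketch below is one possible approach using only the standard library. It counts sentences by comparing whole tokens rather than substrings, then finds the most frequent token per label. The `rows` data and the helper names `count_sentences` and `most_common_per_label` are hypothetical. Treating 3750, 900 and 648 as punctuation is the assumption stated in the exercise. Excluding those tokens from the per-label count is an optional choice that the code above does not make.

```python
from collections import Counter, defaultdict
from statistics import mean

# Punctuation tokens, as assumed in the exercise text.
PUNCT = {"3750", "900", "648"}

def count_sentences(text):
    """Count non-empty runs of tokens separated by punctuation tokens."""
    sentences, current = 0, 0
    for token in text.split():
        if token in PUNCT:
            if current:
                sentences += 1
            current = 0
        else:
            current += 1
    # A trailing run without closing punctuation still counts as a sentence.
    return sentences + (1 if current else 0)

def most_common_per_label(rows, exclude=PUNCT):
    """Return {label: (token, count)}, ignoring the excluded tokens."""
    counters = defaultdict(Counter)
    for label, text in rows:
        counters[label].update(t for t in text.split() if t not in exclude)
    return {label: c.most_common(1)[0] for label, c in counters.items()}

# Toy (label, text) pairs, made up for illustration.
rows = [
    (0, "12 34 3750 12 56 900"),
    (0, "12 13750 648 78"),
    (1, "99 99 900 99 12 3750 5 648"),
]

avg_sentences = mean(count_sentences(text) for _, text in rows)
top_tokens = most_common_per_label(rows)
print(avg_sentences, top_tokens)
```

On the real data, `rows` could be built with `zip(train_df['label'], train_df['text'])`.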