From the TJU summer deep learning training camp: this is a piece of face recognition code that uses image augmentation.

import os, shutil

base_dir = './tjudataset'

train_dir = os.path.join(base_dir,'train')

validation_dir = os.path.join(base_dir,'validation')

test_dir = os.path.join(base_dir,'test')

from keras import layers

from keras import models

from keras import optimizers

from keras.preprocessing.image import ImageDataGenerator

model = models.Sequential()

model.add(layers.Conv2D(64, (2, 2), activation='relu',
                        input_shape=(210, 210, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (2, 2), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(256, (2, 2), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(512, (2, 2), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dropout(0.3))

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dropout(0.2))

model.add(layers.Dense(61, activation='softmax'))

model.compile(loss='categorical_crossentropy',
              optimizer=optimizers.RMSprop(lr=1e-4),
              metrics=['acc'])

model.summary()

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 209, 209, 64)      832
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 104, 104, 64)      0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 103, 103, 128)     32896
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 51, 51, 128)       0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 50, 50, 256)       131328
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 25, 25, 256)       0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 24, 24, 512)       524800
_________________________________________________________________
max_pooling2d_4 (MaxPooling2 (None, 12, 12, 512)       0
_________________________________________________________________
flatten_1 (Flatten)          (None, 73728)             0
_________________________________________________________________
dropout_1 (Dropout)          (None, 73728)             0
_________________________________________________________________
dense_1 (Dense)              (None, 512)               37749248
_________________________________________________________________
dropout_2 (Dropout)          (None, 512)               0
_________________________________________________________________
dense_2 (Dense)              (None, 61)                31293
=================================================================
Total params: 38,470,397
Trainable params: 38,470,397
Non-trainable params: 0
_________________________________________________________________
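The shapes and parameter counts in the summary can be checked by hand. A stride-1 convolution without padding shrinks each side by `kernel - 1`, a 2x2 max pool halves it (rounding down), and a layer's parameter count is its weights plus one bias per output unit. The following is a minimal sketch of that arithmetic in plain Python, with no Keras involved; the helper names (`conv2d_valid`, `max_pool`, `dense_params`, `count_model_params`) are made up for illustration.

```python
def conv2d_valid(side, channels_in, filters, kernel=2):
    """Return (output side, parameter count) for a stride-1, unpadded convolution."""
    out_side = side - kernel + 1
    params = kernel * kernel * channels_in * filters + filters  # weights + biases
    return out_side, params


def max_pool(side, pool=2):
    """Output side of a non-overlapping max pool (floor division)."""
    return side // pool


def dense_params(units_in, units_out):
    """Parameter count of a fully connected layer (weights + biases)."""
    return units_in * units_out + units_out


def count_model_params(input_side=210, input_channels=3,
                       filters=(64, 128, 256, 512), hidden=512, classes=61):
    """Walk the conv/pool stack, then the two dense layers; Dropout adds no parameters."""
    side, channels, total = input_side, input_channels, 0
    for f in filters:
        side, params = conv2d_valid(side, channels, f)
        total += params
        side = max_pool(side)
        channels = f
    flat = side * side * channels
    total += dense_params(flat, hidden)
    total += dense_params(hidden, classes)
    return flat, total


flat_units, total_params = count_model_params()
print(flat_units, total_params)  # 73728 38470397
```

Almost all of the 38,470,397 parameters sit in the first dense layer (37,749,248), because the flattened feature map has 73,728 values.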

train_datagen = ImageDataGenerator(
    rescale=1./255,
    rotation_range=10,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest')

validation_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    train_dir,
    target_size=(210, 210),
    batch_size=61,
    class_mode='categorical')

validation_generator = validation_datagen.flow_from_directory(
    validation_dir,
    target_size=(210, 210),
    batch_size=61,
    class_mode='categorical')

Found 549 images belonging to 61 classes.

Found 61 images belonging to 61 classes.
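With 549 training images and a batch size of 61, one pass over the training set takes exactly 549 / 61 = 9 batches, and the 61 validation images fit in a single batch. A minimal sketch of that arithmetic follows; `steps_for` is a hypothetical helper, not part of Keras.

```python
import math


def steps_for(num_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


print(steps_for(549, 61))  # 9
print(steps_for(61, 61))   # 1
```

These are the values passed as `steps_per_epoch=9` and `validation_steps=1` in the training loop.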

from keras.callbacks import ModelCheckpoint

from matplotlib import pyplot as plt

import numpy as np

checkpointer = ModelCheckpoint(filepath='TJUFACE.augmentation.model.weights.best.hdf5', verbose=1,
                               save_best_only=True)

before = 0

test_datagen = ImageDataGenerator(rescale=1./255)

test_generator = test_datagen.flow_from_directory(
    test_dir,
    target_size=(210, 210),
    batch_size=61,
    class_mode='categorical')

xx = []

yy = []

# Train one epoch at a time so the test accuracy can be recorded after every epoch.
# fit_generator / evaluate_generator belong to the older Keras API used in this post.
for i in range(200):
    xx += [i]
    print('The ',i+1,'times:')
    history = model.fit_generator(
        train_generator,
        steps_per_epoch=9,
        epochs=1,
        validation_data=validation_generator,
        validation_steps=1,
        callbacks=[checkpointer],
        verbose=1)
    test_loss, test_acc = model.evaluate_generator(test_generator, steps=1)
    yy += [test_acc]
    # Report the epoch whenever the test accuracy reaches a new high.
    if test_acc > before:
        print('-------------------------------------------------------------------------')
        print('epochs = ',i+1)
        print('Test_acc:', test_acc)
        print('-------------------------------------------------------------------------')
        before = test_acc

print()
print('The highest test_acc :',before)

Found 61 images belonging to 61 classes.

The 1 times:

Epoch 1/1

9/9 [==============================] - 14s 2s/step - loss: 4.1443 - acc: 0.0109 - val_loss: 4.0953 - val_acc: 0.0984

Epoch 00001: val_loss improved from inf to 4.09529, saving model to TJUFACE.augmentation.model.weights.best.hdf5

-------------------------------------------------------------------------

epochs = 1

Test_acc: 0.09836065769195557

-------------------------------------------------------------------------

The 2 times:

Epoch 1/1

9/9 [==============================] - 11s 1s/step - loss: 4.1036 - acc: 0.0328 - val_loss: 4.0612 - val_acc: 0.0656

Epoch 00001: val_loss improved from 4.09529 to 4.06118, saving model to TJUFACE.augmentation.model.weights.best.hdf5

The 3 times:

Epoch 1/1

9/9 [==============================] - 11s 1s/step - loss: 4.0701 - acc: 0.0510 - val_loss: 3.9763 - val_acc: 0.0656

……

……

……

Epoch 00001: val_loss did not improve from 0.33823

The 199 times:

Epoch 1/1

9/9 [==============================] - 11s 1s/step - loss: 0.4557 - acc: 0.8743 - val_loss: 0.8803 - val_acc: 0.8361

Epoch 00001: val_loss did not improve from 0.33823

The 200 times:

Epoch 1/1

9/9 [==============================] - 11s 1s/step - loss: 0.5764 - acc: 0.8452 - val_loss: 0.4710 - val_acc: 0.8852

Epoch 00001: val_loss did not improve from 0.33823

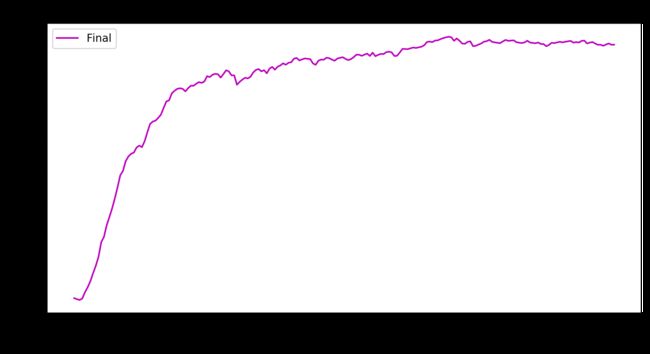

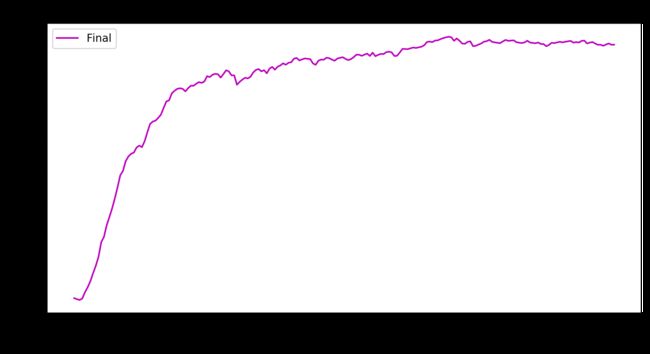

The highest test_acc : 0.9508196711540222

plt.figure(figsize=(10,5),dpi=200)

plt.title('Test_acc')

plt.xlabel('Epochs')

plt.xticks(np.arange(0,205,10))

plt.ylabel('Test_acc')

plt.yticks(np.arange(0,1,0.1))

def smooth_curve(points, factor=0.8):
    smoothed_points = []
    for point in points:
        if smoothed_points:
            previous = smoothed_points[-1]
            smoothed_points.append(previous * factor + point * (1 - factor))
        else:
            smoothed_points.append(point)
    return smoothed_points

plt.plot(xx,smooth_curve(yy),label='Final',color='m',marker=',',linestyle='-')

plt.legend()

plt.show()
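`smooth_curve` is an exponential moving average: each plotted point is `factor` times the previous smoothed value plus `1 - factor` times the new raw value, so with `factor=0.8` a single noisy epoch moves the curve by only a fifth of its jump. The sketch below repeats the function so it runs on its own; the input values are made up for illustration and are not from the training run.

```python
def smooth_curve(points, factor=0.8):
    """Exponential moving average; the first point is kept unchanged."""
    smoothed = []
    for point in points:
        if smoothed:
            smoothed.append(smoothed[-1] * factor + point * (1 - factor))
        else:
            smoothed.append(point)
    return smoothed


raw = [0.0, 1.0, 1.0]  # made-up accuracies: a sudden jump from 0 to 1
for value in smooth_curve(raw):
    print(round(value, 2))  # prints 0.0, then 0.2, then 0.36
```

The smoothed curve lags behind the raw one, so the plotted line understates sharp gains early in training and stays below the raw peak.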

model.load_weights('TJUFACE.augmentation.model.weights.best.hdf5')

test_datagen = ImageDataGenerator(rescale=1./255)

test_generator = test_datagen.flow_from_directory(
    test_dir,
    target_size=(210, 210),
    batch_size=61,
    class_mode='categorical')

test_loss, test_acc = model.evaluate_generator(test_generator, steps=1)

print('test acc:', test_acc)

Found 61 images belonging to 61 classes.

test acc: 0.9016393423080444
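The reloaded checkpoint scores 0.9016 on the test set, below the 0.9508 peak printed during training. The log suggests why: `ModelCheckpoint` kept the weights with the lowest `val_loss` (0.33823), while `before` tracked the highest test accuracy, and the two criteria need not pick the same epoch. Below is a minimal sketch of that mismatch; the per-epoch numbers and the two helper functions are made up for illustration and are not taken from the run.

```python
def best_epoch_by_val_loss(val_losses):
    """Index of the epoch a val_loss-monitoring checkpoint would keep."""
    return min(range(len(val_losses)), key=lambda i: val_losses[i])


def best_epoch_by_test_acc(test_accs):
    """Index of the epoch with the highest test accuracy."""
    return max(range(len(test_accs)), key=lambda i: test_accs[i])


# Made-up numbers for illustration only.
val_losses = [0.90, 0.34, 0.47, 0.52]
test_accs = [0.80, 0.90, 0.95, 0.89]

saved = best_epoch_by_val_loss(val_losses)
peak = best_epoch_by_test_acc(test_accs)
print(saved, test_accs[saved])  # 1 0.9
print(peak, test_accs[peak])    # 2 0.95
```

Selecting an epoch by its test accuracy would use the test set for model selection and make the reported score optimistic, so the figure from the validation-selected checkpoint is arguably the more trustworthy of the two.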