Before we start:

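To use MHI (Motion History Image) in OpenCV 3.0+, you first have to install the opencv_contrib extension modules. For installation steps, see: Installing OpenCV 3.1.0 on 64-bit Ubuntu 14.04 (including the opencv_contrib module)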
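
Before going further, it can help to confirm that your OpenCV build really includes the motion-template functions. The snippet below is a minimal sketch: it assumes that a build with opencv_contrib exposes them under `cv2.motempl` (which is how the modified motempl.py calls them). `has_motempl` is a hypothetical helper name, not part of OpenCV.

```python
import importlib


def has_motempl():
    """Return True if the installed cv2 exposes the motempl functions.

    Assumption: OpenCV 3.x builds with opencv_contrib provide cv2.motempl;
    builds without contrib lack that attribute. If cv2 itself cannot be
    imported, the answer is simply False.
    """
    try:
        cv2 = importlib.import_module("cv2")
    except ImportError:
        return False
    motempl = getattr(cv2, "motempl", None)
    # Check one of the functions motempl.py needs, not just the namespace.
    return motempl is not None and hasattr(motempl, "updateMotionHistory")


if __name__ == "__main__":
    print("cv2.motempl available:", has_motempl())
```

If this prints `False` on a 3.x install, the contrib modules were most likely not built into that cv2.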

In OpenCV 2.4.11, samples/python2/motempl.py is an example that uses MHI, but to run it under OpenCV 3.1.0 it needs a few small changes:

1. Copy OpenCV2.4.11/samples/python2/motempl.py into the OpenCV3.1.0/samples/python directory.

The OpenCV 3.1.0 samples no longer include motempl.py. Note: motempl.py depends on two other files, common.py and video.py; the versions shipped in 3.1.0/samples work as-is.

2. In motempl.py, change lines 47, 48, 49 and 75 to:

```python
cv2.motempl.updateMotionHistory(motion_mask, motion_history, timestamp, MHI_DURATION)  # line 47
mg_mask, mg_orient = cv2.motempl.calcMotionGradient(motion_history, MAX_TIME_DELTA, MIN_TIME_DELTA, apertureSize=5)  # line 48
seg_mask, seg_bounds = cv2.motempl.segmentMotion(motion_history, timestamp, MAX_TIME_DELTA)  # line 49
angle = cv2.motempl.calcGlobalOrientation(orient_roi, mask_roi, mhi_roi, timestamp, MHI_DURATION)  # line 75
```

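In other words, the only change is the `motempl.` prefix: in 3.0+ these functions were moved into the `cv2.motempl` namespace, which is provided by opencv_contrib.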
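
If the same script has to run on both 2.4.x and 3.x, one option is to look the functions up once instead of hard-coding the prefix. This is a minimal sketch, assuming (as the change above implies) that 2.4.x exposes the functions directly on `cv2` and 3.x with opencv_contrib exposes them on `cv2.motempl`; `motempl_api` is a hypothetical helper, not an OpenCV function.

```python
def motempl_api(cv2_module):
    """Return the namespace that holds the MHI functions.

    Assumption: OpenCV 3.x with opencv_contrib provides cv2.motempl,
    while OpenCV 2.4.x has updateMotionHistory and friends directly on cv2.
    """
    return getattr(cv2_module, "motempl", cv2_module)


# Usage inside motempl.py (sketch):
#   import cv2
#   mt = motempl_api(cv2)
#   mt.updateMotionHistory(motion_mask, motion_history, timestamp, MHI_DURATION)
#   mg_mask, mg_orient = mt.calcMotionGradient(motion_history, MAX_TIME_DELTA,
#                                              MIN_TIME_DELTA, apertureSize=5)
```

The lookup falls back to the module itself, so the call sites stay identical on both versions.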

Below is the full code of the three files common.py, video.py and motempl.py.

common.py

```python
#!/usr/bin/env python

'''
This module contains some common routines used by other samples.
'''

# Python 2/3 compatibility
from __future__ import print_function
import sys
PY3 = sys.version_info[0] == 3

if PY3:
    from functools import reduce

import numpy as np
import cv2

# built-in modules
import os
import itertools as it
from contextlib import contextmanager

image_extensions = ['.bmp', '.jpg', '.jpeg', '.png', '.tif', '.tiff', '.pbm', '.pgm', '.ppm']

class Bunch(object):
    def __init__(self, **kw):
        self.__dict__.update(kw)
    def __str__(self):
        return str(self.__dict__)

def splitfn(fn):
    path, fn = os.path.split(fn)
    name, ext = os.path.splitext(fn)
    return path, name, ext

def anorm2(a):
    return (a*a).sum(-1)
def anorm(a):
    return np.sqrt( anorm2(a) )

def homotrans(H, x, y):
    xs = H[0, 0]*x + H[0, 1]*y + H[0, 2]
    ys = H[1, 0]*x + H[1, 1]*y + H[1, 2]
    s  = H[2, 0]*x + H[2, 1]*y + H[2, 2]
    return xs/s, ys/s

def to_rect(a):
    a = np.ravel(a)
    if len(a) == 2:
        a = (0, 0, a[0], a[1])
    return np.array(a, np.float64).reshape(2, 2)

def rect2rect_mtx(src, dst):
    src, dst = to_rect(src), to_rect(dst)
    cx, cy = (dst[1] - dst[0]) / (src[1] - src[0])
    tx, ty = dst[0] - src[0] * (cx, cy)
    M = np.float64([[ cx,  0, tx],
                    [  0, cy, ty],
                    [  0,  0,  1]])
    return M


def lookat(eye, target, up = (0, 0, 1)):
    fwd = np.asarray(target, np.float64) - eye
    fwd /= anorm(fwd)
    right = np.cross(fwd, up)
    right /= anorm(right)
    down = np.cross(fwd, right)
    R = np.float64([right, down, fwd])
    tvec = -np.dot(R, eye)
    return R, tvec

def mtx2rvec(R):
    w, u, vt = cv2.SVDecomp(R - np.eye(3))
    p = vt[0] + u[:,0]*w[0]    # same as np.dot(R, vt[0])
    c = np.dot(vt[0], p)
    s = np.dot(vt[1], p)
    axis = np.cross(vt[0], vt[1])
    return axis * np.arctan2(s, c)

def draw_str(dst, target, s):
    x, y = target
    cv2.putText(dst, s, (x+1, y+1), cv2.FONT_HERSHEY_PLAIN, 1.0, (0, 0, 0), thickness = 2, lineType=cv2.LINE_AA)
    cv2.putText(dst, s, (x, y), cv2.FONT_HERSHEY_PLAIN, 1.0, (255, 255, 255), lineType=cv2.LINE_AA)

class Sketcher:
    def __init__(self, windowname, dests, colors_func):
        self.prev_pt = None
        self.windowname = windowname
        self.dests = dests
        self.colors_func = colors_func
        self.dirty = False
        self.show()
        cv2.setMouseCallback(self.windowname, self.on_mouse)

    def show(self):
        cv2.imshow(self.windowname, self.dests[0])

    def on_mouse(self, event, x, y, flags, param):
        pt = (x, y)
        if event == cv2.EVENT_LBUTTONDOWN:
            self.prev_pt = pt
        elif event == cv2.EVENT_LBUTTONUP:
            self.prev_pt = None

        if self.prev_pt and flags & cv2.EVENT_FLAG_LBUTTON:
            for dst, color in zip(self.dests, self.colors_func()):
                cv2.line(dst, self.prev_pt, pt, color, 5)
            self.dirty = True
            self.prev_pt = pt
            self.show()


# palette data from matplotlib/_cm.py
_jet_data =   {'red':   ((0., 0, 0), (0.35, 0, 0), (0.66, 1, 1), (0.89,1, 1),
                         (1, 0.5, 0.5)),
               'green': ((0., 0, 0), (0.125,0, 0), (0.375,1, 1), (0.64,1, 1),
                         (0.91,0,0), (1, 0, 0)),
               'blue':  ((0., 0.5, 0.5), (0.11, 1, 1), (0.34, 1, 1), (0.65,0, 0),
                         (1, 0, 0))}

cmap_data = { 'jet' : _jet_data }

def make_cmap(name, n=256):
    data = cmap_data[name]
    xs = np.linspace(0.0, 1.0, n)
    channels = []
    eps = 1e-6
    for ch_name in ['blue', 'green', 'red']:
        ch_data = data[ch_name]
        xp, yp = [], []
        for x, y1, y2 in ch_data:
            xp += [x, x+eps]
            yp += [y1, y2]
        ch = np.interp(xs, xp, yp)
        channels.append(ch)
    return np.uint8(np.array(channels).T*255)

def nothing(*arg, **kw):
    pass

def clock():
    return cv2.getTickCount() / cv2.getTickFrequency()

@contextmanager
def Timer(msg):
    print(msg, '...',)
    start = clock()
    try:
        yield
    finally:
        print("%.2f ms" % ((clock()-start)*1000))

class StatValue:
    def __init__(self, smooth_coef = 0.5):
        self.value = None
        self.smooth_coef = smooth_coef
    def update(self, v):
        if self.value is None:
            self.value = v
        else:
            c = self.smooth_coef
            self.value = c * self.value + (1.0-c) * v

class RectSelector:
    def __init__(self, win, callback):
        self.win = win
        self.callback = callback
        cv2.setMouseCallback(win, self.onmouse)
        self.drag_start = None
        self.drag_rect = None
    def onmouse(self, event, x, y, flags, param):
        x, y = np.int16([x, y]) # BUG
        if event == cv2.EVENT_LBUTTONDOWN:
            self.drag_start = (x, y)
        if self.drag_start:
            if flags & cv2.EVENT_FLAG_LBUTTON:
                xo, yo = self.drag_start
                x0, y0 = np.minimum([xo, yo], [x, y])
                x1, y1 = np.maximum([xo, yo], [x, y])
                self.drag_rect = None
                if x1-x0 > 0 and y1-y0 > 0:
                    self.drag_rect = (x0, y0, x1, y1)
            else:
                rect = self.drag_rect
                self.drag_start = None
                self.drag_rect = None
                if rect:
                    self.callback(rect)
    def draw(self, vis):
        if not self.drag_rect:
            return False
        x0, y0, x1, y1 = self.drag_rect
        cv2.rectangle(vis, (x0, y0), (x1, y1), (0, 255, 0), 2)
        return True
    @property
    def dragging(self):
        return self.drag_rect is not None


def grouper(n, iterable, fillvalue=None):
    '''grouper(3, 'ABCDEFG', 'x') --> ABC DEF Gxx'''
    args = [iter(iterable)] * n
    if PY3:
        output = it.zip_longest(fillvalue=fillvalue, *args)
    else:
        output = it.izip_longest(fillvalue=fillvalue, *args)
    return output

def mosaic(w, imgs):
    '''Make a grid from images.

    w    -- number of grid columns
    imgs -- images (must have same size and format)
    '''
    imgs = iter(imgs)
    if PY3:
        img0 = next(imgs)
    else:
        img0 = imgs.next()
    pad = np.zeros_like(img0)
    imgs = it.chain([img0], imgs)
    rows = grouper(w, imgs, pad)
    return np.vstack(map(np.hstack, rows))

def getsize(img):
    h, w = img.shape[:2]
    return w, h

def mdot(*args):
    return reduce(np.dot, args)

def draw_keypoints(vis, keypoints, color = (0, 255, 255)):
    for kp in keypoints:
        x, y = kp.pt
        cv2.circle(vis, (int(x), int(y)), 2, color)
```

video.py

```python
#!/usr/bin/env python

'''
Video capture sample.

Sample shows how VideoCapture class can be used to acquire video
frames from a camera or a movie file. Also the sample provides
an example of procedural video generation by an object, mimicking
the VideoCapture interface (see Chess class).

'create_capture' is a convenience function for capture creation,
falling back to procedural video in case of error.

Usage:
    video.py [--shotdir <shot path>] [source0] [source1] ...

    sourceN is an
     - integer number for camera capture
     - name of video file
     - synth:<params> for procedural video

Synth examples:
    synth:bg=../data/lena.jpg:noise=0.1
    synth:class=chess:bg=../data/lena.jpg:noise=0.1:size=640x480

Keys:
    ESC    - exit
    SPACE  - save current frame to <shot path> directory

'''

# Python 2/3 compatibility
from __future__ import print_function

import numpy as np
from numpy import pi, sin, cos

import cv2

# built-in modules
# (the 3.1.0 sample also did "from time import clock"; it is unused here
#  and time.clock no longer exists in Python 3.8+, so it is left out)

# local modules
import common

class VideoSynthBase(object):
    def __init__(self, size=None, noise=0.0, bg = None, **params):
        self.bg = None
        self.frame_size = (640, 480)
        if bg is not None:
            self.bg = cv2.imread(bg, 1)
            h, w = self.bg.shape[:2]
            self.frame_size = (w, h)

        if size is not None:
            w, h = map(int, size.split('x'))
            self.frame_size = (w, h)
            self.bg = cv2.resize(self.bg, self.frame_size)

        self.noise = float(noise)

    def render(self, dst):
        pass

    def read(self, dst=None):
        w, h = self.frame_size

        if self.bg is None:
            buf = np.zeros((h, w, 3), np.uint8)
        else:
            buf = self.bg.copy()

        self.render(buf)

        if self.noise > 0.0:
            noise = np.zeros((h, w, 3), np.int8)
            cv2.randn(noise, np.zeros(3), np.ones(3)*255*self.noise)
            buf = cv2.add(buf, noise, dtype=cv2.CV_8UC3)
        return True, buf

    def isOpened(self):
        return True

class Chess(VideoSynthBase):
    def __init__(self, **kw):
        super(Chess, self).__init__(**kw)

        w, h = self.frame_size

        self.grid_size = sx, sy = 10, 7
        white_quads = []
        black_quads = []
        for i, j in np.ndindex(sy, sx):
            q = [[j, i, 0], [j+1, i, 0], [j+1, i+1, 0], [j, i+1, 0]]
            [white_quads, black_quads][(i + j) % 2].append(q)
        self.white_quads = np.float32(white_quads)
        self.black_quads = np.float32(black_quads)

        fx = 0.9
        self.K = np.float64([[fx*w, 0, 0.5*(w-1)],
                             [0, fx*w, 0.5*(h-1)],
                             [0.0, 0.0, 1.0]])

        self.dist_coef = np.float64([-0.2, 0.1, 0, 0])
        self.t = 0

    def draw_quads(self, img, quads, color = (0, 255, 0)):
        img_quads = cv2.projectPoints(quads.reshape(-1, 3), self.rvec, self.tvec, self.K, self.dist_coef) [0]
        img_quads.shape = quads.shape[:2] + (2,)
        for q in img_quads:
            cv2.fillConvexPoly(img, np.int32(q*4), color, cv2.LINE_AA, shift=2)

    def render(self, dst):
        t = self.t
        self.t += 1.0/30.0

        sx, sy = self.grid_size
        center = np.array([0.5*sx, 0.5*sy, 0.0])
        phi = pi/3 + sin(t*3)*pi/8
        c, s = cos(phi), sin(phi)
        ofs = np.array([sin(1.2*t), cos(1.8*t), 0]) * sx * 0.2
        eye_pos = center + np.array([cos(t)*c, sin(t)*c, s]) * 15.0 + ofs
        target_pos = center + ofs

        R, self.tvec = common.lookat(eye_pos, target_pos)
        self.rvec = common.mtx2rvec(R)

        self.draw_quads(dst, self.white_quads, (245, 245, 245))
        self.draw_quads(dst, self.black_quads, (10, 10, 10))


classes = dict(chess=Chess)

presets = dict(
    empty = 'synth:',
    lena = 'synth:bg=../data/lena.jpg:noise=0.1',
    chess = 'synth:class=chess:bg=../data/lena.jpg:noise=0.1:size=640x480'
)


def create_capture(source = 0, fallback = presets['chess']):
    '''source: <int> or '<int>|<filename>|synth [:<param_name>=<value> [:...]]'
    '''
    source = str(source).strip()
    chunks = source.split(':')
    # handle drive letter ('c:', ...)
    if len(chunks) > 1 and len(chunks[0]) == 1 and chunks[0].isalpha():
        chunks[1] = chunks[0] + ':' + chunks[1]
        del chunks[0]

    source = chunks[0]
    try: source = int(source)
    except ValueError: pass
    params = dict( s.split('=') for s in chunks[1:] )

    cap = None
    if source == 'synth':
        Class = classes.get(params.get('class', None), VideoSynthBase)
        try: cap = Class(**params)
        except: pass
    else:
        cap = cv2.VideoCapture(source)
        if 'size' in params:
            w, h = map(int, params['size'].split('x'))
            cap.set(cv2.CAP_PROP_FRAME_WIDTH, w)
            cap.set(cv2.CAP_PROP_FRAME_HEIGHT, h)
    if cap is None or not cap.isOpened():
        print('Warning: unable to open video source: ', source)
        if fallback is not None:
            return create_capture(fallback, None)
    return cap

if __name__ == '__main__':
    import sys
    import getopt

    print(__doc__)

    args, sources = getopt.getopt(sys.argv[1:], '', 'shotdir=')
    args = dict(args)
    shotdir = args.get('--shotdir', '.')
    if len(sources) == 0:
        sources = [ 0 ]

    caps = list(map(create_capture, sources))
    shot_idx = 0
    while True:
        imgs = []
        for i, cap in enumerate(caps):
            ret, img = cap.read()
            imgs.append(img)
            cv2.imshow('capture %d' % i, img)
        ch = 0xFF & cv2.waitKey(1)
        if ch == 27:
            break
        if ch == ord(' '):
            for i, img in enumerate(imgs):
                fn = '%s/shot_%d_%03d.bmp' % (shotdir, i, shot_idx)
                cv2.imwrite(fn, img)
                print(fn, 'saved')
            shot_idx += 1
    cv2.destroyAllWindows()
```

motempl.py

```python
#!/usr/bin/env python

import numpy as np
import cv2
import video
from common import nothing, clock, draw_str

MHI_DURATION = 0.5
DEFAULT_THRESHOLD = 32
MAX_TIME_DELTA = 0.25
MIN_TIME_DELTA = 0.05


def draw_motion_comp(vis, rect, angle, color):
    # rect is (x, y, w, h); it is unpacked in the body because tuple
    # parameters in a def signature are only valid in Python 2
    x, y, w, h = rect
    cv2.rectangle(vis, (x, y), (x + w, y + h), (0, 255, 0))
    # integer division keeps the circle center/radius ints on Python 3 too
    r = min(w // 2, h // 2)
    cx, cy = x + w // 2, y + h // 2
    angle = angle * np.pi / 180
    cv2.circle(vis, (cx, cy), r, color, 3)
    cv2.line(vis, (cx, cy), (int(cx + np.cos(angle) * r), int(cy + np.sin(angle) * r)), color, 3)


if __name__ == '__main__':
    import sys

    try:
        video_src = sys.argv[1]
    except IndexError:
        video_src = 0

    cv2.namedWindow('motempl')
    visuals = ['input', 'frame_diff', 'motion_hist', 'grad_orient']
    cv2.createTrackbar('visual', 'motempl', 2, len(visuals) - 1, nothing)
    cv2.createTrackbar('threshold', 'motempl', DEFAULT_THRESHOLD, 255, nothing)

    # fallback image: 3.1.0 keeps lena.jpg in samples/data (the 2.4.11 file used ../cpp/lena.jpg)
    cam = video.create_capture(video_src, fallback='synth:class=chess:bg=../data/lena.jpg:noise=0.01')
    ret, frame = cam.read()
    h, w = frame.shape[:2]
    prev_frame = frame.copy()
    motion_history = np.zeros((h, w), np.float32)
    hsv = np.zeros((h, w, 3), np.uint8)
    hsv[:, :, 1] = 255
    while True:
        ret, frame = cam.read()
        frame_diff = cv2.absdiff(frame, prev_frame)
        gray_diff = cv2.cvtColor(frame_diff, cv2.COLOR_BGR2GRAY)
        thrs = cv2.getTrackbarPos('threshold', 'motempl')
        ret, motion_mask = cv2.threshold(gray_diff, thrs, 1, cv2.THRESH_BINARY)
        timestamp = clock()
        # changed for 3.x: motempl. prefix
        cv2.motempl.updateMotionHistory(motion_mask, motion_history, timestamp, MHI_DURATION)
        mg_mask, mg_orient = cv2.motempl.calcMotionGradient(motion_history, MAX_TIME_DELTA, MIN_TIME_DELTA, apertureSize=5)
        seg_mask, seg_bounds = cv2.motempl.segmentMotion(motion_history, timestamp, MAX_TIME_DELTA)

        visual_name = visuals[cv2.getTrackbarPos('visual', 'motempl')]
        if visual_name == 'input':
            vis = frame.copy()
        elif visual_name == 'frame_diff':
            vis = frame_diff.copy()
        elif visual_name == 'motion_hist':
            vis = np.uint8(np.clip((motion_history - (timestamp - MHI_DURATION)) / MHI_DURATION, 0, 1) * 255)
            vis = cv2.cvtColor(vis, cv2.COLOR_GRAY2BGR)
        elif visual_name == 'grad_orient':
            hsv[:, :, 0] = mg_orient / 2
            hsv[:, :, 2] = mg_mask * 255
            vis = cv2.cvtColor(hsv, cv2.COLOR_HSV2BGR)

        for i, rect in enumerate([(0, 0, w, h)] + list(seg_bounds)):
            x, y, rw, rh = rect
            area = rw * rh
            if area < 64 ** 2:
                continue
            silh_roi = motion_mask[y:y + rh, x:x + rw]
            orient_roi = mg_orient[y:y + rh, x:x + rw]
            mask_roi = mg_mask[y:y + rh, x:x + rw]
            mhi_roi = motion_history[y:y + rh, x:x + rw]
            if cv2.norm(silh_roi, cv2.NORM_L1) < area * 0.05:
                continue
            # changed for 3.x: motempl. prefix
            angle = cv2.motempl.calcGlobalOrientation(orient_roi, mask_roi, mhi_roi, timestamp, MHI_DURATION)
            color = ((255, 0, 0), (0, 0, 255))[i == 0]
            draw_motion_comp(vis, rect, angle, color)

        draw_str(vis, (20, 20), visual_name)
        cv2.imshow('motempl', vis)

        prev_frame = frame.copy()
        if 0xFF & cv2.waitKey(5) == 27:
            break
    cv2.destroyAllWindows()
```
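
To see what `updateMotionHistory` actually stores in `motion_history`, here is a NumPy sketch of the update rule given in the OpenCV documentation: pixels in the silhouette get the current timestamp, pixels whose value is older than `timestamp - duration` are reset to 0, and all other pixels keep their value. It only illustrates the idea (the sample calls the library function, not this code); `update_mhi` and `mhi_to_gray` are hypothetical names, and the second one just repeats the scaling motempl.py uses for its `motion_hist` view.

```python
import numpy as np


def update_mhi(silhouette, mhi, timestamp, duration):
    """Sketch of the documented updateMotionHistory rule (in place).

    moving pixels          -> timestamp
    old, non-moving pixels -> 0  (value < timestamp - duration)
    everything else        -> unchanged
    """
    moving = silhouette != 0
    stale = ~moving & (mhi < timestamp - duration)
    mhi[moving] = timestamp
    mhi[stale] = 0
    return mhi


def mhi_to_gray(mhi, timestamp, duration):
    """Scale an MHI to uint8 as motempl.py does for 'motion_hist':
    the newest motion maps to 255, motion older than `duration` to 0."""
    return np.uint8(np.clip((mhi - (timestamp - duration)) / duration, 0, 1) * 255)


if __name__ == '__main__':
    mhi = np.zeros((1, 3), np.float32)
    update_mhi(np.array([[1, 0, 0]], np.uint8), mhi, 1.0, 0.5)
    update_mhi(np.array([[0, 1, 0]], np.uint8), mhi, 1.2, 0.5)
    print(mhi, mhi_to_gray(mhi, 1.2, 0.5))
```

Because recent motion carries a larger timestamp, the MHI is brighter where something moved most recently, which is what the gradient and segmentation steps in motempl.py build on.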

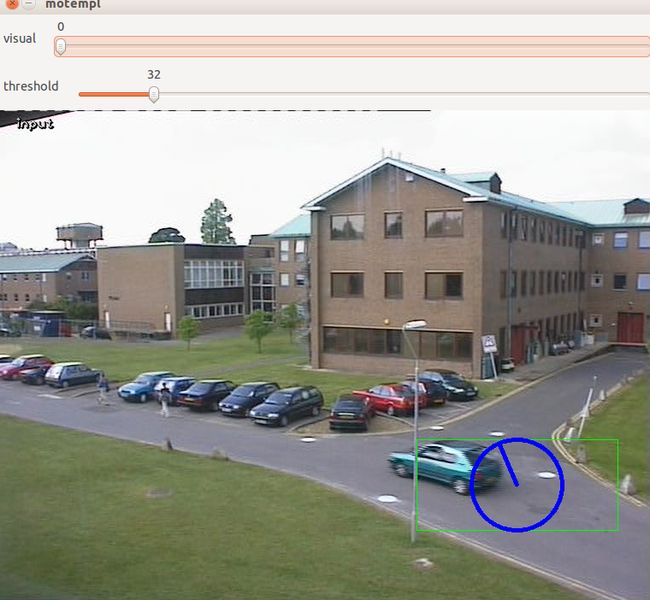

3. Running the sample:

```shell
python motempl.py camera2.mov  # without the video file argument, the camera is opened instead
```