An Introduction to Using Scrapyd

Scrapyd GitHub: https://github.com/scrapy/scrapyd

Documentation: https://scrapyd.readthedocs.io/en/stable/

What is Scrapyd?

Scrapyd is a service for running Scrapy spiders.

It allows you to deploy your Scrapy projects and control their spiders using an HTTP JSON API.

Installation

pip install scrapyd # server side

pip install scrapyd-client # client side

Edit scrapy.cfg

[deploy:onefine]

# url is required; without it scrapyd-deploy fails with KeyError: 'url'

url = http://localhost:6800/

project = data_acquisition
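
The KeyError: 'url' mentioned above can be caught before deploying by checking every deploy target in scrapy.cfg. The following is a minimal sketch, not part of scrapyd-client: the helper names and the sample configuration (including the deliberately incomplete target named broken) are assumptions made for illustration.

```python
from configparser import ConfigParser

# Hypothetical scrapy.cfg content; the "broken" target deliberately lacks a url.
SAMPLE_CFG = """
[settings]
default = data_acquisition.settings

[deploy:onefine]
url = http://localhost:6800/
project = data_acquisition

[deploy:broken]
project = data_acquisition
"""


def deploy_targets(cfg_text):
    """Return {target_name: options} for every [deploy:<name>] section."""
    parser = ConfigParser()
    parser.read_string(cfg_text)
    targets = {}
    for section in parser.sections():
        if section.startswith("deploy:"):
            name = section.split(":", 1)[1]
            targets[name] = dict(parser.items(section))
    return targets


def targets_missing_url(cfg_text):
    """Return the sorted names of deploy targets that have no url option."""
    targets = deploy_targets(cfg_text)
    return sorted(name for name, opts in targets.items() if "url" not in opts)


print(targets_missing_url(SAMPLE_CFG))
```

To check a real project, the text of its scrapy.cfg could be read from disk and passed to targets_missing_url in place of SAMPLE_CFG.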

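Running scrapyd-deploy

On Linux/macOS

scrapyd-deploy

On Windows

In C:\Users\xxx\Envs\Py3_spider\Scripts, create a file named scrapyd-deploy.bat, so that the scrapyd-deploy script in that folder can be invoked as a command.

Then add the following content: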

@echo off

"C:\Users\xxx\Envs\Py3_spider\Scripts\python.exe" "C:\Users\xxx\Envs\Py3_spider\Scripts\scrapyd-deploy" %1 %2 %3 %4 %5 %6 %7 %8 %9

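Save the file. scrapyd-deploy can then be run, but only from inside the Scrapy project directory (the one that contains scrapy.cfg). The session below shows the error seen without the .bat file, the error seen outside a project directory, and a successful run: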

(Py3_spider) Soufan_crawl\scrapyd> scrapyd-deploy

'scrapyd-deploy' is not recognized as an internal or external command, operable program or batch file.

(Py3_spider) Soufan_crawl\scrapyd> scrapyd-deploy

Error: no Scrapy project found in this location

(Py3_spider) Soufan_crawl\data_acquisition> scrapyd-deploy -l

onefine http://localhost:6800/

(Py3_spider) Soufan_crawl\data_acquisition>

Deployment

Before deploying, check that scrapy list runs without errors:

(Py3_spider) D:\PythonProject\DjangoProject\Soufan_crawl\data_acquisition>scrapy list

Traceback (most recent call last):

File "c:\users\xxx\appdata\local\programs\python\python37\Lib\runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "c:\users\xxx\appdata\local\programs\python\python37\Lib\runpy.py", line 85, in _run_code

exec(code, run_globals)

File "C:\Users\xxx\Envs\Py3_spider\Scripts\scrapy.exe\__main__.py", line 9, in <module>

File "c:\users\xxx\envs\py3_spider\lib\site-packages\scrapy\cmdline.py", line 149, in execute

cmd.crawler_process = CrawlerProcess(settings)

File "c:\users\xxx\envs\py3_spider\lib\site-packages\scrapy\crawler.py", line 251, in __init__

super(CrawlerProcess, self).__init__(settings)

File "c:\users\xxx\envs\py3_spider\lib\site-packages\scrapy\crawler.py", line 137, in __init__

self.spider_loader = _get_spider_loader(settings)

File "c:\users\xxx\envs\py3_spider\lib\site-packages\scrapy\crawler.py", line 338, in _get_spider_loader

return loader_cls.from_settings(settings.frozencopy())

File "c:\users\xxx\envs\py3_spider\lib\site-packages\scrapy\spiderloader.py", line 61, in from_settings

return cls(settings)

File "c:\users\xxx\envs\py3_spider\lib\site-packages\scrapy\spiderloader.py", line 25, in __init__

self._load_all_spiders()

File "c:\users\xxx\envs\py3_spider\lib\site-packages\scrapy\spiderloader.py", line 47, in _load_all_spiders

for module in walk_modules(name):

File "c:\users\xxx\envs\py3_spider\lib\site-packages\scrapy\utils\misc.py", line 71, in walk_modules

submod = import_module(fullpath)

File "c:\users\xxx\envs\py3_spider\lib\importlib\__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

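File "<frozen importlib._bootstrap>", line 1006, in _gcd_import

File "<frozen importlib._bootstrap>", line 983, in _find_and_load

File "<frozen importlib._bootstrap>", line 967, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 677, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 728, in exec_module

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed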

File "D:\PythonProject\DjangoProject\Soufan_crawl\data_acquisition\data_acquisition\spiders\com_58.py", line 8, in <module>

from items import Com58FanLoder, Com58VillageLoder

ModuleNotFoundError: No module named 'items'

(Py3_spider) D:\PythonProject\DjangoProject\Soufan_crawl\data_acquisition>

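Solution

The spider imports items as a top-level module, so the package directory has to be on sys.path. Add the following to settings.py: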

import os

import sys

BASE_DIR = os.path.dirname(os.path.abspath(os.path.dirname(__file__)))

sys.path.insert(0, os.path.join(BASE_DIR, 'data_acquisition'))
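
To see which directory the two lines above put on sys.path, the same computation can be replayed on a sample path. This is only a sketch: the /srv/... location is a hypothetical stand-in for the real project, and posixpath is used instead of os.path so that the result is the same on any operating system.

```python
import posixpath


def paths_added_by_settings(settings_file):
    """Mirror the BASE_DIR logic from settings.py for a given settings file path."""
    package_dir = posixpath.dirname(settings_file)                 # .../data_acquisition/data_acquisition
    base_dir = posixpath.dirname(posixpath.abspath(package_dir))   # project root that holds scrapy.cfg
    return base_dir, posixpath.join(base_dir, "data_acquisition")


# Hypothetical location of settings.py in a default Scrapy project layout.
base_dir, extra_path = paths_added_by_settings(
    "/srv/Soufan_crawl/data_acquisition/data_acquisition/settings.py"
)
print(base_dir)
print(extra_path)
```

The second value is the inner package directory, which is why from items import ... resolves afterwards. An alternative that avoids changing sys.path, assuming the default Scrapy project layout, is to import through the package name, for example from data_acquisition.items import Com58FanLoder.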

Then retry:

(Py3_spider) Soufan_crawl\data_acquisition> scrapy list

add_task

com_58

com_anjuke

com_ganji

com_leju

example

(Py3_spider) Soufan_crawl\data_acquisition>

Once that works, start deploying:

scrapyd-deploy onefine -p data_acquisition

Here onefine (the deploy target) and data_acquisition (the project) must match the entries in scrapy.cfg; -p is short for --project.

[deploy:onefine]

url = http://localhost:6800/

project = data_acquisition

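Server side

With its default configuration, scrapyd creates its eggs, logs and dbs directories in the directory it is started from, so it is started here from a dedicated scrapyd folder: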

C:\Users\xxx> workon Py3_spider

(Py3_spider) C:\Users\xxx> D:

(Py3_spider) D:\> cd PythonProject\DjangoProject\Soufan_crawl\data_acquisition

(Py3_spider) D:\PythonProject\DjangoProject\Soufan_crawl\data_acquisition> mkdir scrapyd

(Py3_spider) D:\PythonProject\DjangoProject\Soufan_crawl\data_acquisition> cd scrapyd

(Py3_spider) Soufan_crawl\data_acquisition\scrapyd> scrapyd

Client side

# Upload the Scrapy project

data_acquisition> workon Py3_spider

(Py3_spider) data_acquisition> scrapyd-deploy onefine -p data_acquisition

Packing version 1554381226

Deploying to project "data_acquisition" in http://localhost:6800/addversion.json

Server response (200):

{"node_name": "ONE-FINE", "status": "ok", "project": "data_acquisition", "version": "1554381226", "spiders": 1}

# A new folder containing the packaged project now appears under data_acquisition\scrapyd\eggs

# Get the status of scrapyd

(Py3_spider) data_acquisition> curl http://localhost:6800/daemonstatus.json

{"node_name": "ONE-FINE", "status": "ok", "pending": 0, "running": 0, "finished": 0}
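
The daemonstatus.json response above is plain JSON, so a script can decide whether the server is idle before scheduling more work. A minimal sketch follows; is_idle is a hypothetical helper name and the sample text is the response shown above.

```python
import json

# Response text copied from the daemonstatus.json call above.
DAEMONSTATUS_SAMPLE = (
    '{"node_name": "ONE-FINE", "status": "ok", '
    '"pending": 0, "running": 0, "finished": 0}'
)


def is_idle(response_text):
    """Return True if scrapyd reports status ok with no pending or running jobs."""
    status = json.loads(response_text)
    if status.get("status") != "ok":
        raise RuntimeError(f"scrapyd reported a problem: {status}")
    return status["pending"] == 0 and status["running"] == 0


print(is_idle(DAEMONSTATUS_SAMPLE))
```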

# Run a specific spider of a project

(Py3_spider) data_acquisition> curl http://localhost:6800/schedule.json -d project=data_acquisition -d spider=com_58

{"node_name": "ONE-FINE", "status": "ok", "jobid": "7d4efe4a56e311e99c261cb72c9b2772"}
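
The same schedule.json call can be prepared from Python with only the standard library. The sketch below builds the POST request without sending it; build_schedule_request is a hypothetical helper, and any extra keyword arguments are assumed to be intended as spider arguments.

```python
from urllib.parse import urlencode, urljoin
from urllib.request import Request


def build_schedule_request(base_url, project, spider, **spider_args):
    """Build (but do not send) a POST request for scrapyd's schedule.json."""
    params = {"project": project, "spider": spider, **spider_args}
    body = urlencode(params).encode("utf-8")
    # Supplying data makes urllib issue a POST, like curl -d.
    return Request(urljoin(base_url, "schedule.json"), data=body)


schedule_request = build_schedule_request(
    "http://localhost:6800/", "data_acquisition", "com_58"
)
print(schedule_request.get_method(), schedule_request.full_url)
print(schedule_request.data)
```

With scrapyd running, the request could be sent with urllib.request.urlopen(schedule_request) and the JSON reply read from the returned response object.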

# Delete a Scrapy project

(Py3_spider) data_acquisition> curl http://localhost:6800/delproject.json -d project=data_acquisition

{"node_name": "ONE-FINE", "status": "ok"}

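# The data_acquisition\scrapyd\eggs directory is now empty

# Cancel a spider run (job takes the jobid returned by schedule.json, not the spider name)

(Py3_spider) data_acquisition> curl http://localhost:6800/cancel.json -d project=data_acquisition -d job=7d4efe4a56e311e99c261cb72c9b2772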

...
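
cancel.json identifies the run by its job id, which is the jobid value returned by schedule.json. The sketch below takes that id from the schedule response shown earlier and builds the cancel request without sending it; build_cancel_request is a hypothetical helper name.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Response text copied from the schedule.json call shown earlier.
SCHEDULE_RESPONSE = (
    '{"node_name": "ONE-FINE", "status": "ok", '
    '"jobid": "7d4efe4a56e311e99c261cb72c9b2772"}'
)


def build_cancel_request(base_url, project, schedule_response_text):
    """Build (but do not send) a POST request for cancel.json from a schedule reply."""
    jobid = json.loads(schedule_response_text)["jobid"]
    body = urlencode({"project": project, "job": jobid}).encode("utf-8")
    return Request(base_url.rstrip("/") + "/cancel.json", data=body)


cancel_request = build_cancel_request(
    "http://localhost:6800/", "data_acquisition", SCHEDULE_RESPONSE
)
print(cancel_request.full_url)
print(cancel_request.data)
```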

# List the deployed projects

(Py3_spider) data_acquisition> curl http://localhost:6800/listprojects.json

{"node_name": "ONE-FINE", "status": "ok", "projects": ["data_acquisition", "default"]}

# List the versions of a project

(Py3_spider) data_acquisition> curl http://localhost:6800/listversions.json?project=data_acquisition

{"node_name": "ONE-FINE", "status": "ok", "versions": ["1554388528"]}
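
The version strings look like Unix timestamps, which is what scrapyd-deploy uses when no explicit version is given. Under that assumption (it does not hold if a version was set by hand), a version can be turned into a readable upload time; version_to_utc is a hypothetical helper name.

```python
from datetime import datetime, timezone


def version_to_utc(version):
    """Interpret a timestamp-style version string as a UTC datetime."""
    return datetime.fromtimestamp(int(version), tz=timezone.utc)


# Version string taken from the listversions.json response above.
uploaded_at = version_to_utc("1554388528")
print(uploaded_at.isoformat())
```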

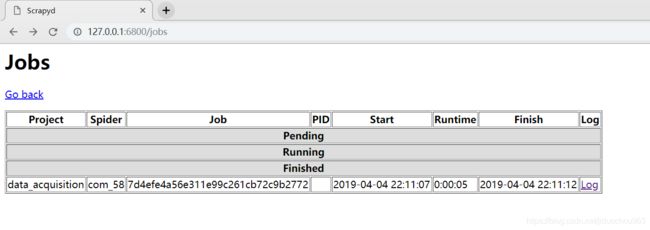

# List the jobs of a project

(Py3_spider) data_acquisition> curl http://localhost:6800/listjobs.json?project=data_acquisition

{"node_name": "ONE-FINE", "status": "ok", "pending": [], "running": [], "finished": [{"id": "7d4efe4a56e311e99c261cb72c9b2772", "spider": "com_58", "start_time": "2019-04-04 22:11:07.367313", "end_time": "2019-04-04 22:11:12.778198"}, {"id": "70518d0056e511e9b1641cb72c9b2772", "spider": "com_58", "start_time": "2019-04-04 22:25:04.578779", "end_time": "2019-04-04 22:25:09.149797"}, {"id": "6f898aac56e611e9bafb1cb72c9b2772", "spider": "com_58", "start_time": "2019-04-04 22:32:12.736132", "end_time": "2019-04-04 22:32:17.326493"}, {"id": "bc5d354256e611e99a501cb72c9b2772", "spider": "com_58", "start_time": "2019-04-04 22:34:21.611161", "end_time": "2019-04-04 22:34:26.387410"}, {"id": "ec6d9ba456e611e98d031cb72c9b2772", "spider": "com_58", "start_time": "2019-04-04 22:35:42.288252", "end_time": "2019-04-04 22:35:47.833061"}]}
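
The listjobs.json output is hard to read as one line. A short script can summarise it, for example by computing how long each finished job ran. This is a sketch only: finished_durations is a hypothetical helper, the sample holds the first two finished entries from the response above, and the timestamp format is assumed to be the one shown there.

```python
import json
from datetime import datetime

# First two "finished" entries copied from the listjobs.json response above.
LISTJOBS_SAMPLE = """
{"node_name": "ONE-FINE", "status": "ok", "pending": [], "running": [],
 "finished": [
  {"id": "7d4efe4a56e311e99c261cb72c9b2772", "spider": "com_58",
   "start_time": "2019-04-04 22:11:07.367313", "end_time": "2019-04-04 22:11:12.778198"},
  {"id": "70518d0056e511e9b1641cb72c9b2772", "spider": "com_58",
   "start_time": "2019-04-04 22:25:04.578779", "end_time": "2019-04-04 22:25:09.149797"}
 ]}
"""

TIME_FORMAT = "%Y-%m-%d %H:%M:%S.%f"


def finished_durations(response_text):
    """Return (job id, spider, seconds run) for every finished job."""
    rows = []
    for job in json.loads(response_text)["finished"]:
        start = datetime.strptime(job["start_time"], TIME_FORMAT)
        end = datetime.strptime(job["end_time"], TIME_FORMAT)
        rows.append((job["id"], job["spider"], (end - start).total_seconds()))
    return rows


durations = finished_durations(LISTJOBS_SAMPLE)
for job_id, spider, seconds in durations:
    print(f"{spider} {job_id} ran for {seconds:.2f} s")
```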

(Py3_spider) D:\PythonProject\DjangoProject\Soufan_crawl\data_acquisition>

Example:

> workon Py3_spider

(Py3_spider) data_acquisition> scrapy list

com_58

com_anjuke

com_ganji

com_leju

(Py3_spider) data_acquisition> scrapyd-deploy soufan_crawl -p soufan_data_acquisition

Packing version 1555723830

Traceback (most recent call last):

File "C:\Users\ONEFINE\Envs\Py3_spider\Scripts\scrapyd-deploy", line 292, in <module>

main()

File "C:\Users\ONEFINE\Envs\Py3_spider\Scripts\scrapyd-deploy", line 101, in main

exitcode, tmpdir = _build_egg_and_deploy_target(target, version, opts)

File "C:\Users\ONEFINE\Envs\Py3_spider\Scripts\scrapyd-deploy", line 122, in _build_egg_and_deploy_target

if not _upload_egg(target, egg, project, version):

File "C:\Users\ONEFINE\Envs\Py3_spider\Scripts\scrapyd-deploy", line 206, in _upload_egg

url = _url(target, 'addversion.json')

File "C:\Users\ONEFINE\Envs\Py3_spider\Scripts\scrapyd-deploy", line 172, in _url

return urljoin(target['url'], action)

KeyError: 'url'

# Note: the fix is to edit scrapy.cfg so that the deploy target has a url:

# [deploy:soufan_crawl] (the deploy target is named soufan_crawl)

# url = http://localhost:6800/ (uncomment this line)

(Py3_spider) data_acquisition> scrapyd-deploy soufan_crawl -p soufan_data_acquisition

Packing version 1555724021

Deploying to project "soufan_data_acquisition" in http://localhost:6800/addversion.json

Server response (200):

{"node_name": "ONE-FINE", "status": "ok", "project": "soufan_data_acquisition", "version": "1555724021", "spiders": 4}
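
One quick sanity check after deploying is to compare the spiders count in the server response with the number of names printed by scrapy list. The sketch below does this on the outputs shown above; deploy_matches_local is a hypothetical helper name.

```python
import json

# Output of "scrapy list" and of scrapyd-deploy, copied from the session above.
SCRAPY_LIST_OUTPUT = "com_58\ncom_anjuke\ncom_ganji\ncom_leju\n"
DEPLOY_RESPONSE = (
    '{"node_name": "ONE-FINE", "status": "ok", '
    '"project": "soufan_data_acquisition", "version": "1555724021", "spiders": 4}'
)


def deploy_matches_local(scrapy_list_output, deploy_response_text):
    """Return True if the deploy succeeded and reports as many spiders as scrapy list."""
    local_spiders = [line.strip() for line in scrapy_list_output.splitlines() if line.strip()]
    response = json.loads(deploy_response_text)
    return response.get("status") == "ok" and response.get("spiders") == len(local_spiders)


print(deploy_matches_local(SCRAPY_LIST_OUTPUT, DEPLOY_RESPONSE))
```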

(Py3_spider) data_acquisition> curl http://localhost:6800/schedule.json -d project=soufan_data_acquisition -d spider=com_58

{"node_name": "ONE-FINE", "status": "ok", "jobid": "f138ea6e630c11e9aac61cb72c9b2772"}

# Delete a project together with all of its uploaded versions

(Py3_spider) data_acquisition> curl http://localhost:6800/delproject.json -d project=soufan_data_acquisition

{"node_name": "ONE-FINE", "status": "ok"}