【Computer Vision】Camera Models and Augmented Reality

I. Principles

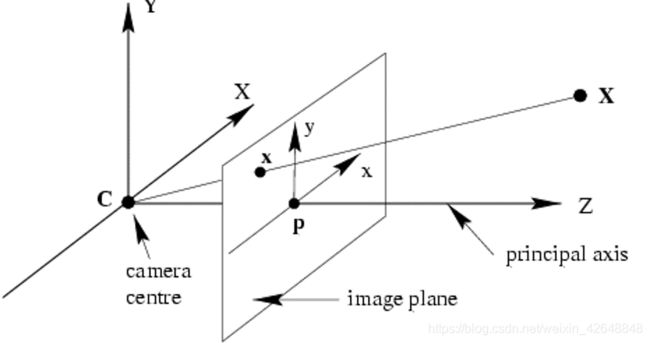

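1. The pinhole camera model

In the pinhole camera model, every light ray passes through a single point, the camera center C, before it is projected onto the image plane (see the figure above).

1.1 The camera matrix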

$$P = K\,[\,R \mid t\,]$$

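Here R is a rotation matrix describing the camera's orientation, t is a 3D translation vector that fixes the position of the camera center (the center itself is $C = -R^{T}t$), and the intrinsic calibration matrix K describes the projection properties of the camera. K is commonly written as

$$K = \begin{bmatrix} f_x & s & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}$$

where $f_x$ and $f_y$ are the focal lengths in pixels, $s$ is the skew (usually 0), and $(c_x, c_y)$ is the principal point (optical center).

Related topics are the projection of 3D points, the factorization of the camera matrix, and the computation of the camera center.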
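The three operations just listed can be sketched in plain NumPy. This is not PCV's `camera.Camera` class; `project`, `rq`, `factor` and `camera_center` are hypothetical helpers written for illustration. The RQ decomposition is assembled from `numpy.linalg.qr` by reversing rows, and the sketch assumes the left 3×3 block of P is invertible and that P has not been multiplied by a negative scale.

```python
import numpy as np

def project(P, X):
    """Project homogeneous 3D points X (4 x n) with camera P (3 x 4): x = P X."""
    x = P @ X
    return x / x[2]  # normalize so the last coordinate is 1

def rq(M):
    """RQ decomposition M = K @ R (K upper triangular, R orthogonal) via QR."""
    J = np.flipud(np.eye(M.shape[0]))  # exchange matrix, reverses row order
    Q, U = np.linalg.qr((J @ M).T)     # (J M)^T = Q U
    return J @ U.T @ J, J @ Q.T        # M = (J U^T J)(J Q^T)

def factor(P):
    """Factor P = K [R | t], with K normalized so that K[2, 2] = 1."""
    K, R = rq(P[:, :3])
    T = np.diag(np.sign(np.diag(K)))   # flip signs so diag(K) is positive
    K, R = K @ T, T @ R                # T is its own inverse
    t = np.linalg.solve(K, P[:, 3])    # t = K^-1 p4 (overall scale cancels)
    return K / K[2, 2], R, t

def camera_center(P):
    """Camera center C = -R^T t."""
    _, R, t = factor(P)
    return -R.T @ t
```

Since P is only defined up to scale, `factor` computes t before rescaling K, so the scale cancels out of t.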

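2. Camera calibration

Calibration means computing the camera's intrinsic parameters, that is, the matrix K. A common approach is to photograph a flat checkerboard pattern from several viewpoints and estimate the parameters from those images.

The focal lengths and the optical center are measured in pixels, so changing the image resolution changes their values. The following function stores the measured values for the camera and rescales them to the requested image size: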

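```python
from numpy import diag

def my_calibration(sz):
    """Return K for an image of size sz = (rows, cols).

    The focal lengths 2555 and 2586 were measured at 2592 x 1936 pixels and
    are rescaled to the requested resolution; the principal point is
    assumed to lie at the image center.
    """
    row, col = sz
    fx = 2555*col/2592
    fy = 2586*row/1936
    K = diag([fx, fy, 1])
    K[0, 2] = 0.5*col
    K[1, 2] = 0.5*row
    return K
```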
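Because K is expressed in pixels, a calibration done at one resolution must be rescaled for another. As a minimal sketch, assume K was measured at the full 2592×1936 resolution with the principal point at the image center (the values implied by `my_calibration`). Then rescaling amounts to multiplying the first row of K by the horizontal scale factor and the second row by the vertical one. `scale_intrinsics` and `K_full` are hypothetical names.

```python
import numpy as np

def scale_intrinsics(K, old_size, new_size):
    """Rescale a pixel-unit K from old_size to new_size, both (rows, cols)."""
    sy = new_size[0] / old_size[0]  # vertical scale factor
    sx = new_size[1] / old_size[1]  # horizontal scale factor
    # Row 0 of K holds x-quantities (fx, skew, cx), row 1 y-quantities (fy, cy)
    return np.diag([sx, sy, 1.0]) @ K

# Assumed full-resolution calibration (principal point at the image center)
K_full = np.array([[2555.0, 0.0, 1296.0],
                   [0.0, 2586.0, 968.0],
                   [0.0, 0.0, 1.0]])
K_small = scale_intrinsics(K_full, (1936, 2592), (747, 1000))
print(K_small)
```

With these assumptions, `K_small` matches `my_calibration((747, 1000))`.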

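3. Pose estimation from planes and markers

If the image contains a planar marker and the camera has been calibrated, the camera pose (rotation and translation) can be computed from the homography between the marker and the image. The marker can be any flat object.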
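Why a homography is enough: if the marker lies in the world plane Z = 0, projecting a point (X, Y, 0, 1) only involves the first two columns of R, so H ∝ K [r1 r2 t]. Multiplying by K⁻¹ therefore gives r1, r2 and t up to a common scale, and r3 = r1 × r2. The sketch below is a hypothetical `pose_from_homography`, not the author's code. It assumes H maps marker-plane coordinates (in world units) to pixels. Two choices are my own assumptions: the scale is fixed by the mean length of the first two columns, and the sign is chosen so the marker lies in front of the camera.

```python
import numpy as np

def pose_from_homography(H, K):
    """Recover R, t from a plane-to-image homography H ~ K [r1 r2 t]."""
    A = np.linalg.solve(K, H)  # A = lambda * [r1 r2 t]
    # r1 and r2 must have unit length; average them to fix the scale
    A = A / (0.5 * (np.linalg.norm(A[:, 0]) + np.linalg.norm(A[:, 1])))
    if A[2, 2] < 0:  # keep the plane in front of the camera (t_z > 0)
        A = -A
    r1, r2, t = A[:, 0], A[:, 1], A[:, 2]
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    U, _, Vt = np.linalg.svd(R)  # snap to the nearest orthogonal matrix
    return U @ Vt, t
```

On noisy data, the SVD step matters. Without it, the R estimated from noisy r1 and r2 is generally not exactly orthogonal.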

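4. Augmented reality

1. Definition

Augmented reality (AR) is the collective term for the operations that place objects and related information on top of image data. The classic example is placing a 3D computer graphics model so that it looks like part of the scene.

2. Toolkits

We use PyGame and OpenGL (PyOpenGL), both of which must be installed separately. PyGame provides the window and event handling, while OpenGL provides the functions for setting up the camera projection.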

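3. Procedure

1. Given a calibrated camera matrix K, `set_projection_from_camera(K)` converts the camera parameters into an OpenGL projection matrix.

2. To place virtual objects in an image, first add that image as the background (drawn as a textured quad behind the objects).

3. Put everything together: set the projection from K and the model-view matrix from the estimated camera pose.

4. Load the model and draw it.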
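Step 1 can be checked numerically. In the teapot code in Section III, `set_projection_from_camera` derives a vertical field of view and an aspect ratio from K and passes them to `gluPerspective`. The sketch below rebuilds the matrix that `gluPerspective` is documented to produce, using plain NumPy so it runs without an OpenGL context. `perspective_from_K` is a hypothetical name. Like the original function, it assumes the principal point lies at the image center, because `gluPerspective` cannot express an off-center principal point.

```python
import math
import numpy as np

def perspective_from_K(K, width, height, near=0.1, far=100.0):
    """Return (4x4 gluPerspective matrix, fovy in degrees, aspect) for K."""
    fx, fy = K[0, 0], K[1, 1]
    fovy = 2 * math.atan(0.5 * height / fy) * 180 / math.pi
    aspect = (width * fy) / (height * fx)
    f = 1.0 / math.tan(math.radians(fovy) / 2)  # cotangent of half the fovy
    M = np.array([
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ])
    return M, fovy, aspect

K = np.array([[985.0, 0, 500], [0, 997.0, 373.5], [0, 0, 1]])
M, fovy, aspect = perspective_from_K(K, 1000, 747)
print(M[0, 0], M[1, 1])  # 2*fx/width and 2*fy/height
```

The two scale entries reduce to 2·fx/width and 2·fy/height. These are the focal lengths of K expressed in OpenGL's normalized device coordinates.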

II. Examples

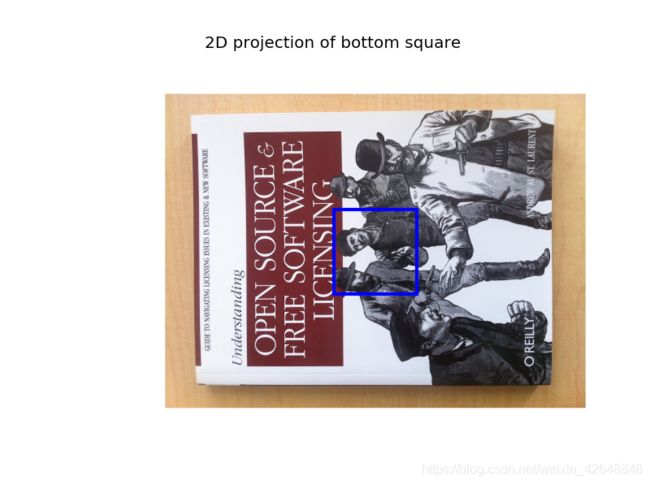

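1. Pose estimation from a planar marker (cube example)

Extract SIFT features from both images, estimate the homography robustly with RANSAC, and then project a cube with the resulting camera to check that the estimate is correct.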
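The estimation step can be sketched without PCV. Below is a from-scratch NumPy illustration of the same idea: a normalized DLT fit for the homography inside a basic RANSAC loop. It is not the implementation behind PCV's `homography.H_from_ransac`. The function names, the 1000 iterations and the 3-pixel inlier threshold are assumptions chosen for the example.

```python
import numpy as np

def normalize_points(p):
    """Hartley normalization: zero mean, mean distance sqrt(2) from the origin."""
    p = p / p[2]
    m = p[:2].mean(axis=1)
    d = np.linalg.norm(p[:2] - m[:, None], axis=0).mean()
    s = np.sqrt(2) / d
    T = np.array([[s, 0, -s * m[0]], [0, s, -s * m[1]], [0, 0, 1]])
    return T @ p, T

def H_from_points(fp, tp):
    """DLT estimate of H with tp ~ H fp (3 x n homogeneous, n >= 4)."""
    fp_n, T1 = normalize_points(fp)
    tp_n, T2 = normalize_points(tp)
    rows = []
    for (x, y, w), (u, v, s) in zip(fp_n.T, tp_n.T):
        rows.append([-s*x, -s*y, -s*w, 0, 0, 0, u*x, u*y, u*w])
        rows.append([0, 0, 0, -s*x, -s*y, -s*w, v*x, v*y, v*w])
    _, _, Vt = np.linalg.svd(np.array(rows))  # null vector = last row of Vt
    H = np.linalg.inv(T2) @ Vt[-1].reshape(3, 3) @ T1  # undo normalization
    return H / H[2, 2]

def H_from_ransac(fp, tp, n_iter=1000, threshold=3.0, seed=0):
    """Fit H to random 4-point samples and keep the largest consensus set."""
    rng = np.random.default_rng(seed)
    n = fp.shape[1]
    target = tp[:2] / tp[2]
    best = np.zeros(n, dtype=bool)
    for _ in range(n_iter):
        sample = rng.choice(n, 4, replace=False)
        H = H_from_points(fp[:, sample], tp[:, sample])
        proj = H @ fp
        err = np.linalg.norm(proj[:2] / proj[2] - target, axis=0)
        inliers = err < threshold  # reprojection error in pixels
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 4:
        raise ValueError("no homography with at least 4 inliers found")
    # refit on all inliers of the best model
    return H_from_points(fp[:, best], tp[:, best]), np.flatnonzero(best)
```

In the example's pipeline, `fp` and `tp` would hold the homogeneous coordinates of the matched SIFT keypoints in the two images.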

2. Augmented reality (teapot example)

III. Code

1. Cube

```python
"""
This is the augmented reality and pose estimation cube example from Section 4.3.
"""
from pylab import *
from numpy import linalg
from PIL import Image

# If you have PCV installed, these imports should work
# (PCV's sift module calls the external VLFeat "sift" executable)
from PCV.geometry import homography, camera
from PCV.localdescriptors import sift


def cube_points(c, wid):
    """ Creates a list of points for plotting
    a cube with plot. (the first 5 points are
    the bottom square, some sides repeated). """
    p = []
    # bottom
    p.append([c[0]-wid, c[1]-wid, c[2]-wid])
    p.append([c[0]-wid, c[1]+wid, c[2]-wid])
    p.append([c[0]+wid, c[1]+wid, c[2]-wid])
    p.append([c[0]+wid, c[1]-wid, c[2]-wid])
    p.append([c[0]-wid, c[1]-wid, c[2]-wid])  # same as first to close plot
    # top
    p.append([c[0]-wid, c[1]-wid, c[2]+wid])
    p.append([c[0]-wid, c[1]+wid, c[2]+wid])
    p.append([c[0]+wid, c[1]+wid, c[2]+wid])
    p.append([c[0]+wid, c[1]-wid, c[2]+wid])
    p.append([c[0]-wid, c[1]-wid, c[2]+wid])  # same as first to close plot
    # vertical sides
    p.append([c[0]-wid, c[1]-wid, c[2]+wid])
    p.append([c[0]-wid, c[1]+wid, c[2]+wid])
    p.append([c[0]-wid, c[1]+wid, c[2]-wid])
    p.append([c[0]+wid, c[1]+wid, c[2]-wid])
    p.append([c[0]+wid, c[1]+wid, c[2]+wid])
    p.append([c[0]+wid, c[1]-wid, c[2]+wid])
    p.append([c[0]+wid, c[1]-wid, c[2]-wid])
    return array(p).T


def my_calibration(sz):
    """
    Calibration function for the camera (iPhone4) used in this example.
    """
    row, col = sz
    fx = 2555*col/2592
    fy = 2586*row/1936
    K = diag([fx, fy, 1])
    K[0, 2] = 0.5*col
    K[1, 2] = 0.5*row
    return K


# compute features
sift.process_image('book_frontal.JPG', 'im0.sift')
l0, d0 = sift.read_features_from_file('im0.sift')
sift.process_image('book_perspective.bmp', 'im1.sift')
l1, d1 = sift.read_features_from_file('im1.sift')

# match features and estimate homography
matches = sift.match_twosided(d0, d1)
ndx = matches.nonzero()[0]
fp = homography.make_homog(l0[ndx, :2].T)
ndx2 = [int(matches[i]) for i in ndx]
tp = homography.make_homog(l1[ndx2, :2].T)
model = homography.RansacModel()
H, inliers = homography.H_from_ransac(fp, tp, model)

# camera calibration
K = my_calibration((747, 1000))

# 3D points at plane z=0 with sides of length 0.2
box = cube_points([0, 0, 0.1], 0.1)

# project bottom square in first image
cam1 = camera.Camera(hstack((K, dot(K, array([[0], [0], [-1]])))))
# first points are the bottom square
box_cam1 = cam1.project(homography.make_homog(box[:, :5]))

# use H to transfer points to the second image
box_trans = homography.normalize(dot(H, box_cam1))

# compute second camera matrix from cam1 and H
cam2 = camera.Camera(dot(H, cam1.P))
A = dot(linalg.inv(K), cam2.P[:, :3])
# rebuild the third column as the cross product of the first two (r3 = r1 x r2)
A = array([A[:, 0], A[:, 1], cross(A[:, 0], A[:, 1])]).T
cam2.P[:, :3] = dot(K, A)

# project with the second camera
box_cam2 = cam2.project(homography.make_homog(box))

# plotting
im0 = array(Image.open('book_frontal.JPG'))
im1 = array(Image.open('book_perspective.bmp'))

figure()
imshow(im0)
plot(box_cam1[0, :], box_cam1[1, :], linewidth=3)
title('2D projection of bottom square')
axis('off')

figure()
imshow(im1)
plot(box_trans[0, :], box_trans[1, :], linewidth=3)
title('2D projection transferred with H')
axis('off')

figure()
imshow(im1)
plot(box_cam2[0, :], box_cam2[1, :], linewidth=3)
title('3D points projected in second image')
axis('off')

show()
```

2. Teapot

```python
import math
import pickle
import sys

import numpy as np
from pylab import *
from OpenGL.GL import *
from OpenGL.GLU import *
from OpenGL.GLUT import *
import pygame, pygame.image
from pygame.locals import *

from PCV.geometry import homography, camera
from PCV.localdescriptors import sift


def cube_points(c, wid):
    """ Creates a list of points for plotting
    a cube with plot. (the first 5 points are
    the bottom square, some sides repeated). """
    p = []
    # bottom
    p.append([c[0]-wid, c[1]-wid, c[2]-wid])
    p.append([c[0]-wid, c[1]+wid, c[2]-wid])
    p.append([c[0]+wid, c[1]+wid, c[2]-wid])
    p.append([c[0]+wid, c[1]-wid, c[2]-wid])
    p.append([c[0]-wid, c[1]-wid, c[2]-wid])  # same as first to close plot
    # top
    p.append([c[0]-wid, c[1]-wid, c[2]+wid])
    p.append([c[0]-wid, c[1]+wid, c[2]+wid])
    p.append([c[0]+wid, c[1]+wid, c[2]+wid])
    p.append([c[0]+wid, c[1]-wid, c[2]+wid])
    p.append([c[0]-wid, c[1]-wid, c[2]+wid])  # same as first to close plot
    # vertical sides
    p.append([c[0]-wid, c[1]-wid, c[2]+wid])
    p.append([c[0]-wid, c[1]+wid, c[2]+wid])
    p.append([c[0]-wid, c[1]+wid, c[2]-wid])
    p.append([c[0]+wid, c[1]+wid, c[2]-wid])
    p.append([c[0]+wid, c[1]+wid, c[2]+wid])
    p.append([c[0]+wid, c[1]-wid, c[2]+wid])
    p.append([c[0]+wid, c[1]-wid, c[2]-wid])
    return array(p).T


def my_calibration(sz):
    row, col = sz
    fx = 2555*col/2592
    fy = 2586*row/1936
    K = diag([fx, fy, 1])
    K[0, 2] = 0.5*col
    K[1, 2] = 0.5*row
    return K


def set_projection_from_camera(K):
    """Set the OpenGL projection matrix from the calibration matrix K."""
    glMatrixMode(GL_PROJECTION)
    glLoadIdentity()
    fx = K[0, 0]
    fy = K[1, 1]
    fovy = 2*math.atan(0.5*height/fy)*180/math.pi
    aspect = (width*fy)/(height*fx)
    # near and far clipping planes
    near = 0.1
    far = 100.0
    gluPerspective(fovy, aspect, near, far)
    glViewport(0, 0, width, height)


def set_modelview_from_camera(Rt):
    """Set the OpenGL model-view matrix from the camera pose Rt = [R | t]."""
    glMatrixMode(GL_MODELVIEW)
    glLoadIdentity()
    # rotate the teapot 90 degrees around the x-axis so that the z-axis is up
    Rx = np.array([[1, 0, 0], [0, 0, -1], [0, 1, 0]])
    # replace R with the nearest rotation (svd returns U, S, V^T)
    R = Rt[:, :3]
    U, S, V = np.linalg.svd(R)
    R = np.dot(U, V)
    R[0, :] = -R[0, :]  # change the sign of the x-axis
    t = Rt[:, 3]
    # 4x4 model-view matrix
    M = np.eye(4)
    M[:3, :3] = np.dot(R, Rx)
    M[:3, 3] = t
    # OpenGL expects column-major order, so transpose before flattening
    M = M.T
    m = M.flatten()
    glLoadMatrixf(m)


def draw_background(imname):
    """Draw the background image as a textured quad filling the window."""
    bg_image = pygame.image.load(imname).convert()
    bg_data = pygame.image.tostring(bg_image, "RGBX", 1)
    glMatrixMode(GL_MODELVIEW)
    glLoadIdentity()
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT)
    # upload the image into a new texture
    glEnable(GL_TEXTURE_2D)
    tex = glGenTextures(1)
    glBindTexture(GL_TEXTURE_2D, tex)
    glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, width, height, 0, GL_RGBA, GL_UNSIGNED_BYTE, bg_data)
    glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST)
    glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST)
    # a quad filling the whole window
    glBegin(GL_QUADS)
    glTexCoord2f(0.0, 0.0); glVertex3f(-1.0, -1.0, -1.0)
    glTexCoord2f(1.0, 0.0); glVertex3f( 1.0, -1.0, -1.0)
    glTexCoord2f(1.0, 1.0); glVertex3f( 1.0,  1.0, -1.0)
    glTexCoord2f(0.0, 1.0); glVertex3f(-1.0,  1.0, -1.0)
    glEnd()
    # delete the texture we generated (not a hard-coded texture name)
    glDeleteTextures([tex])


def draw_teapot(size):
    """Draw a red teapot at the origin."""
    glEnable(GL_LIGHTING)
    glEnable(GL_LIGHT0)
    glEnable(GL_DEPTH_TEST)
    glClear(GL_DEPTH_BUFFER_BIT)
    # material: red diffuse color with a soft highlight
    glMaterialfv(GL_FRONT, GL_AMBIENT, [0, 0, 0, 0])
    glMaterialfv(GL_FRONT, GL_DIFFUSE, [0.5, 0.0, 0.0, 0.0])
    glMaterialfv(GL_FRONT, GL_SPECULAR, [0.7, 0.6, 0.6, 0.0])
    glMaterialf(GL_FRONT, GL_SHININESS, 0.25*128.0)
    glutSolidTeapot(size)


width, height = 1000, 747


def setup():
    """Set up the window and the pygame environment."""
    pygame.init()
    glutInit(sys.argv)  # freeglut requires glutInit before glutSolidTeapot
    pygame.display.set_mode((width, height), OPENGL | DOUBLEBUF)
    pygame.display.set_caption("OpenGL AR demo")


# compute features
sift.process_image('book_frontal.JPG', 'im0.sift')
l0, d0 = sift.read_features_from_file('im0.sift')
sift.process_image('book_perspective.JPG', 'im1.sift')
l1, d1 = sift.read_features_from_file('im1.sift')

# match features and estimate homography
matches = sift.match_twosided(d0, d1)
ndx = matches.nonzero()[0]
fp = homography.make_homog(l0[ndx, :2].T)
ndx2 = [int(matches[i]) for i in ndx]
tp = homography.make_homog(l1[ndx2, :2].T)
model = homography.RansacModel()
H, inliers = homography.H_from_ransac(fp, tp, model)

# camera calibration and first camera
K = my_calibration((747, 1000))
cam1 = camera.Camera(hstack((K, dot(K, array([[0], [0], [-1]])))))
box = cube_points([0, 0, 0.1], 0.1)
box_cam1 = cam1.project(homography.make_homog(box[:, :5]))
box_trans = homography.normalize(dot(H, box_cam1))

# second camera from cam1 and H
cam2 = camera.Camera(dot(H, cam1.P))
A = dot(linalg.inv(K), cam2.P[:, :3])
A = array([A[:, 0], A[:, 1], cross(A[:, 0], A[:, 1])]).T
cam2.P[:, :3] = dot(K, A)

# pose of the second camera: [R | t] = K^-1 P
Rt = dot(linalg.inv(K), cam2.P)

setup()
draw_background("book_perspective.bmp")
set_projection_from_camera(K)
set_modelview_from_camera(Rt)
draw_teapot(0.05)
pygame.display.flip()

while True:
    for event in pygame.event.get():
        if event.type == pygame.QUIT:
            sys.exit()
```