pytorch自然语言处理基础模型之七:Seq2Seq

1、模型原理

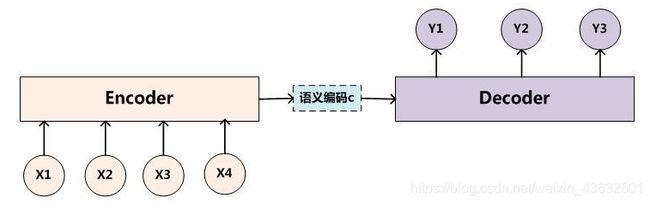

所谓Seq2Seq(Sequence to Sequence), 就是一种能够根据给定的序列,通过特定的方法生成另一个序列的方法。最基础的Seq2Seq模型包含了三个部分,即Encoder、Decoder以及连接两者的中间状态向量C,Encoder通过学习输入,将其编码成一个固定大小的状态向量c,继而将c传给Decoder,Decoder再通过对状态向量c的学习来进行输出。结构如图所示:

这里面的输入有三个:Encoder的输入, Encoder生产的隐藏状态(语义编码c),Decoder的输入(上图画的不是很准确,Decoder部分也应该包含输入)。

2、代码实现

本文所用的数据为简单的六个词组,如下所示:

['man', 'women'], ['black', 'white'], ['king', 'queen'], ['girl', 'boy'], ['up', 'down'], ['high', 'low']

我们假设这是一个翻译模型,输入每个词组的第一个单词,经过Seq2Seq网络的训练后,模型可以给出该单词的“翻译”,例如当输入“man”时,我们希望模型给出的结果是“women”。

1. 导入需要的库,设置数据类型

import numpy as np

import torch

import torch.nn as nn

from torch.autograd import Variable

dtype = torch.FloatTensor

2. 创建数据和字典

seq_data = [['man', 'women'], ['black', 'white'], ['king', 'queen'], ['girl', 'boy'], ['up', 'down'], ['high', 'low']]

// S: 单词的开始标志

// E: 单词的结束标志

// P: 单词长度少于时间步长时用P进行填充

char_arr = [c for c in 'SEPabcdefghijklmnopqrstuvwxyz']

num_dict = {n:i for i, n in enumerate(char_arr)}

3. 设置网络参数

n_step = 5

n_hidden = 128

n_class = len(num_dict)

batch_size = len(seq_data)

Seq2Seq的网络设计好之后的输入序列和输出序列长度是不可变的。因此我们设置时间步长为5,当一个单词的字符长度不够5时,比如“man”,我们就用"P"将长度补齐,

4. 创建batch

def make_batch(seq_data):

input_batch, output_batch, target_batch = [], [], []

for seq in seq_data:

for i in range(2):

//长度不够5的单词用'P'补齐

seq[i] = seq[i] + 'P' * (n_step-len(seq[i]))

input = [num_dict[n] for n in seq[0]]

output = [num_dict[n] for n in ('S' + seq[1])]

target = [num_dict[n] for n in (seq[1] + 'E')]

// input_batch : [batch_size, n_step, n_class]

// output_batch : [batch_size, n_step+1 (becase of 'S' ), n_class]

// target_batch : [batch_size, n_step+1 (becase of 'E')]

input_batch.append(np.eye(n_class)[input])

output_batch.append(np.eye(n_class)[output])

target_batch.append(target)

// make tensor

return Variable(torch.Tensor(input_batch)), Variable(torch.Tensor(output_batch)), Variable(torch.LongTensor(target_batch))

input_batch, output_batch, target_batch = make_batch(seq_data)

5. 创建网络

class Seq2Seq(nn.Module):

def __init__(self):

super(Seq2Seq, self).__init__()

self.enc_cell = nn.RNN(input_size=n_class, hidden_size=n_hidden, dropout=0.5)

self.dec_cell = nn.RNN(input_size=n_class, hidden_size=n_hidden, dropout=0.5)

self.fc = nn.Linear(n_hidden, n_class)

def forward(self, enc_input, enc_hidden, dec_input):

// enc_input: [n_step, batch_size, n_class]

enc_input = enc_input.transpose(0, 1)

// dec_input: [n_step+1, batch_size, n_class]

dec_input = dec_input.transpose(0, 1)

// enc_states : [num_layers(=1) * num_directions(=1), batch_size, n_hidden]

_, enc_states = self.enc_cell(enc_input, enc_hidden)

// outputs : [max_len+1(=6), batch_size, num_directions(=1) * n_hidden(=128)]

outputs, _ = self.dec_cell(dec_input, enc_states)

// model : [max_len+1(=6), batch_size, n_class]

model = self.fc(outputs)

return model

6. 训练模型

model = Seq2Seq()

loss_fn = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

for epoch in range(5000):

// 初始化encoder的隐藏层状态: [num_layers * num_directions, batch_size, n_hidden]

hidden = Variable(torch.zeros(1, batch_size, n_hidden))

optimizer.zero_grad()

// input_batch : [batch_size, n_step, n_class]

// output_batch : [batch_size, n_step+1 (becase of 'S' ), n_class]

// target_batch : [batch_size, n_step+1 (becase of 'E')]

output = model(input_batch, hidden, output_batch)

// output : [max_len+1, batch_size, num_directions(=1) * n_hidden]

output = output.transpose(0, 1)

loss = 0

for i in range(0, len(target_batch)):

// output[i] : [max_len+1, num_directions(=1) * n_hidden]

// target_batch[i] : max_len+1

loss += loss_fn(output[i], target_batch[i])

if (epoch + 1) % 1000 == 0:

print('Epoch:', '%04d' % (epoch + 1), 'cost =', '{:.6f}'.format(loss))

loss.backward()

optimizer.step()

训练结果如下:

Epoch: 1000 cost = 0.003524

Epoch: 2000 cost = 0.000967

Epoch: 3000 cost = 0.000412

Epoch: 4000 cost = 0.000207

Epoch: 5000 cost = 0.000112

7. 查看训练结果

//预测

def translate(word):

// 预测时假设decoder的输入全为"P"

input_batch, output_batch, _ = make_batch([[word, 'P' * len(word)]])

// 初始化隐藏层状态 [num_layers * num_directions, batch_size, n_hidden]

hidden = Variable(torch.zeros(1, 1, n_hidden))

// output : [max_len+1(=6), batch_size(=1), n_class]

output = model(input_batch, hidden, output_batch)

// troch.max()[1] : 返回最大值的每个索引, data只返回variable中的数据部分

predict = output.data.max(2)[1]

decoded = [char_arr[i] for i in predict]

// index():包含子字符串返回开始的索引值

end = decoded.index('E')

translated = ''.join(decoded[:end])

return translated.replace('P', '')

print('man ->', translate('man'))

print('mans ->', translate('mans'))

print('king ->', translate('king'))

print('black ->', translate('black'))

print('upp ->', translate('upp'))

预测结果如下:

man -> women

mans -> women

king -> queen

black -> white

upp -> down

可以看到"man"和"mans"的翻译结果都是women,将"up"改为"upp"后,仍能翻译出"down"。

参考链接

https://blog.csdn.net/qq_32241189/article/details/81591456

https://www.jianshu.com/p/b2b95f945a98

https://github.com/graykode/nlp-tutorial