pytorch迁移学习实现猫狗二分类

作为深度学习的小白,最近在学习陈云大佬的《深度学习框架,pytorch入门与实践》一书,刚刚看完pytorch基础知识,苦于命令大多无法记住,特此想通过此项目加深对pytorch的理解。相关模块的用法会记录在本分类栏里面。侵删!

参考:Pytorch实现猫狗大战(二)

实战pytorch与计算机视觉,唐进民

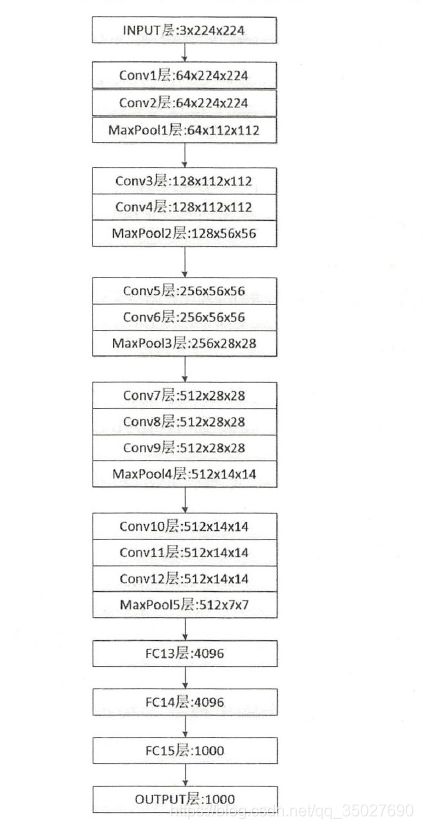

vgg16简介

VGGNet是由牛津大学的视觉几何组(Visual Geometry Group)提出。在该模型中统一了卷积中使用的参数,卷积核为3x3,卷积核步长为1,padding统一为1,池化为2x2的最大池化等等。同时增加了卷积神经网络模型架构的深度,分别为vgg16和vgg19。下面是vgg16的模型。

卷积层计算公式:

W o u t p u t = W i n p u t − W f i l t e r + 2 P S + 1 {W_{output}} = \frac{{{W_{input}} - {W_{filter}} + 2P}}{S} + 1 Woutput=SWinput−Wfilter+2P+1

池化层计算公式:

W o u t p u t = W i n p u t − W f i l t e r S + 1 {W_{output}} = \frac{{{W_{input}} - {W_{filter}}}}{S} + 1 Woutput=SWinput−Wfilter+1

大概计算一下:输入为3x224x224,经过Conv1,卷积核为3x3,步长为1,padding为1。同时用了64个卷积核,经过上述公式可得输出为64x224x224。

猫狗二分类

模型由两部分组成:特征和分类器。特征部分由一堆卷积层组成,整体传入分类器中,分类器输出为1000,无法解决我们的问题,意味着我们要改写分类器,但是特征检测完全没有问题,我们可以把预训练的网络作为很好的特征检测器,可以用作我们自己写的分类器的输入。

注意:

-

我们最终完成的是分两类任务,所以需要将原模型的全连接层进行改写,只需要输出2类。

-

网络灵活的搭建包括提取特征部分parma.requires_grad = False,分类器部分最后parma.requires_grad = True

我采用的是cpu,速度慢,所以只采取了100多张图片进行训练,文件结构如下:

训练代码如下:

import torch

import torchvision

from torchvision import datasets, transforms, models

import os

import numpy as np

import matplotlib.pyplot as plt

from torch.autograd import Variable

import time

path = "data1"

transform = transforms.Compose([transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])])

data_image = {x: datasets.ImageFolder(root=os.path.join(path, x), ##imageFOLDER 返回的是一个list,这里的写法是字典的形式

transform=transform)

for x in ["train", "val"]}

data_loader_image = {x: torch.utils.data.DataLoader(dataset=data_image[x],

batch_size=4,

shuffle=True)

for x in ["train", "val"]}

# 检查电脑GPU资源

use_gpu = torch.cuda.is_available()

print(use_gpu) # 查看用没用GPU,用了打印True,没用打印False

classes = data_image["train"].classes # 按文件夹名字分类

classes_index = data_image["train"].class_to_idx # 文件夹类名所对应的链值

print(classes) # 打印类别

print(classes_index)

# 打印训练集,验证集大小

print("train data set:", len(data_image["train"]))

print("val data set:", len(data_image["val"]))

X_train,y_train = next(iter(data_loader_image["train"]))

mean = [0.5, 0.5, 0.5]

std = [0.5, 0.5, 0.5]

img = torchvision.utils.make_grid(X_train)

img = img.numpy().transpose((1,2,0))

img = img*std + mean

print([classes[i] for i in y_train])

plt.imshow(img)

plt.show()

# 选择模型

model = models.vgg16(pretrained=True) # 我们选择预训练好的模型vgg19

print(model) # 查看模型结构

for parma in model.parameters():

parma.requires_grad = False # 不进行梯度更新

# 改变模型的全连接层,因为原模型是输出1000个类,本项目只需要输出2类

model.classifier = torch.nn.Sequential(torch.nn.Linear(25088, 4096),

torch.nn.ReLU(),

torch.nn.Dropout(p=0.5),

torch.nn.Linear(4096, 4096),

torch.nn.ReLU(),

torch.nn.Dropout(p=0.5),

torch.nn.Linear(4096, 2))

for index, parma in enumerate(model.classifier.parameters()):

if index == 6:

parma.requires_grad = True

if use_gpu:

model = model.cuda()

print(parma)

# 定义代价函数

cost = torch.nn.CrossEntropyLoss()

# 定义优化器

optimizer = torch.optim.Adam(model.classifier.parameters())

# 再次查看模型结构

print(model)

### 开始训练模型

n_epochs = 1

for epoch in range(n_epochs):

since = time.time()

print("Epoch{}/{}".format(epoch, n_epochs))

print("-" * 10)

for param in ["train", "val"]:

if param == "train":

model.train = True

else:

model.train = False

running_loss = 0.0

running_correct = 0

batch = 0

for data in data_loader_image[param]:

batch += 1

X, y = data

if use_gpu:

X, y = Variable(X.cuda()), Variable(y.cuda())

else:

X, y = Variable(X), Variable(y)

optimizer.zero_grad()

y_pred = model(X)

_, pred = torch.max(y_pred.data, 1)

loss = cost(y_pred, y)

if param == "train":

loss.backward()

optimizer.step()

running_loss += loss.item()

# running_loss += loss.data[0]

running_correct += torch.sum(pred == y.data)

if batch % 5 == 0 and param == "train":

print("Batch {}, Train Loss:{:.4f}, Train ACC:{:.4f}".format(

batch, running_loss / (4 * batch), 100 * running_correct / (4 * batch)))

epoch_loss = running_loss / len(data_image[param])

epoch_correct = 100 * running_correct / len(data_image[param])

print("{} Loss:{:.4f}, Correct:{:.4f}".format(param, epoch_loss, epoch_correct))

now_time = time.time() - since

print("Training time is:{:.0f}m {:.0f}s".format(now_time // 60, now_time % 60))

torch.save(model, 'model.pth')

###输出结果

#Batch 55, Train Loss:1.8566, Train ACC:78.0000

#train Loss:1.7800, Correct:78.0000

#val Loss:0.4586, Correct:92.0000

#Training time is:2m 6s

将模型保存下来,备测试使用。

测试代码:

import os

import torch

import torchvision

from torchvision import datasets, transforms, models

import numpy as np

import matplotlib.pyplot as plt

from torch.autograd import Variable

import time

model = torch.load('model.pth')

path = "data1"

transform = transforms.Compose([transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])])

data_test_img = datasets.ImageFolder(root="data1/val/", transform = transform)

data_loader_test_img = torch.utils.data.DataLoader(dataset=data_test_img,

batch_size = 16,shuffle=True) #载入测试数据集,并随机打乱

classes = data_test_img.classes ##class

image, label = next(iter(data_loader_test_img))

images = Variable(image)

y_pred = model(images)

_,pred = torch.max(y_pred.data, 1)

print(pred)

img = torchvision.utils.make_grid(image)

img = img.numpy().transpose(1,2,0)

mean = [0.5, 0.5, 0.5]

std = [0.5, 0.5, 0.5]

img = img * std + mean

print("Pred Label:", [classes[i] for i in pred])

plt.imshow(img)

plt.show()

测试结果:

tensor([1, 1, 0, 1, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 1, 0])

Pred Label: [‘dog’, ‘dog’, ‘cat’, ‘dog’, ‘cat’, ‘dog’, ‘cat’, ‘cat’, ‘cat’, ‘dog’, ‘cat’, ‘cat’, ‘cat’, ‘dog’, ‘dog’, ‘cat’]