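Setting Up a Basic Hadoop Environment

Background

Three hosts: cynric-111, cynric-112, and cynric-113

Each machine has one 80 GB SSD as the root disk (vda)

Each machine has one 100 GB SSD as a data disk (vdb)

Each machine has one 2 TB SATA disk as a data disk (vdc)

I. Steps

1. Passwordless login

Edit the /etc/hosts file on all three machines as follows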

192.168.xx.111 cynric-111

192.168.xx.112 cynric-112

192.168.xx.113 cynric-113

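Run the ssh-keygen -t rsa command on each machine

cd to the /root/.ssh directory (it may not be root, depending on which user you use)

Append the contents of id_rsa.pub from all three machines to a single file named authorized_keys and place it in /root/.ssh on every machine. Here is how I did it

Log in to cynric-111 first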

cd /root/.ssh

cat id_rsa.pub >> authorized_keys

scp authorized_keys root@cynric-112:/root/.ssh/

ssh root@cynric-112

cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

scp /root/.ssh/authorized_keys root@cynric-113:/root/.ssh/

ssh root@cynric-113

cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

scp /root/.ssh/authorized_keys root@cynric-111:/root/.ssh/

scp /root/.ssh/authorized_keys root@cynric-112:/root/.ssh/

With that, passwordless login between the three machines is configured. Oh, and remember to turn off the firewall

systemctl stop firewalld # stops the firewall

systemctl disable firewalld # keeps the firewall from starting at boot
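A quick way to confirm the key setup is to attempt a non-interactive login to each host. The sketch below is only illustrative: `check_ssh` and the `DRY_RUN` switch are names I made up for this example, not part of any tool. It relies on `ssh -o BatchMode=yes`, which makes ssh fail instead of prompting for a password.

```shell
#!/bin/sh
# Hosts taken from the /etc/hosts entries above.
HOSTS="cynric-111 cynric-112 cynric-113"

# check_ssh is a hypothetical helper. With DRY_RUN=1 (the default here) it
# only prints the command it would run; set DRY_RUN=0 to really connect.
check_ssh() {
  host="$1"
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "ssh -o BatchMode=yes -o ConnectTimeout=5 root@$host hostname"
  else
    # BatchMode=yes disables password prompts, so a missing key is an error.
    ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$host" hostname
  fi
}

for h in $HOSTS; do
  check_ssh "$h" || echo "passwordless login to $h failed" >&2
done
```

Running it with DRY_RUN=0 on each of the three machines should print the three hostnames without any password prompt.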

2. Mount the disks

mkdir /data1 # mount point for the SSD

mkdir /data2 # mount point for the SATA disk

mkfs.xfs /dev/vdb

mkfs.xfs -f /dev/vdc

mount /dev/vdb /data1

mount /dev/vdc /data2

You can use the lsblk command to check how the disks are mounted
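Mounts made with the mount command do not survive a reboot. One common way to make them permanent is one /etc/fstab entry per disk. The sketch below only prints candidate lines; `fstab_line` is a hypothetical helper, and the `defaults 0 0` options are an assumption you may want to adjust.

```shell
#!/bin/sh
# fstab_line is a hypothetical helper: device, mount point, filesystem type.
# The "defaults 0 0" fields are an assumed, generic choice.
fstab_line() {
  printf '%s %s %s defaults 0 0\n' "$1" "$2" "$3"
}

# Print the two entries; review them before adding them to /etc/fstab by hand.
fstab_line /dev/vdb /data1 xfs
fstab_line /dev/vdc /data2 xfs
```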

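3. Base platform setup

I need to install the following components (the versions are all fairly new, so who knows how many pitfalls there will be!):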

| Component | Version |
|---|---|
| java | 1.8 |
| scala | 2.11.8 |
| zookeeper | 3.5.6 |
| hadoop | 2.7.7 |
| hbase | 1.4.11 |
| kafka | 2.3.1 |
| spark2 | 2.4.4 |

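First install the JDK. I used jdk-8u211, installed from the tar.gz package. My JDK lives under /opt, so I need to add the following two lines to /etc/profile to set the environment variables.

export JAVA_HOME=/opt/jdk

export PATH=$PATH:$JAVA_HOME/bin

Finally, don't forget to run source /etc/profile to activate the variables you just changed, then use java -version to confirm that the Java environment is set up correctly.

For scala I used 2.11.8 (apart from 2.4.2, Spark is built against Scala 2.11; if you need 2.12 you can build it yourself)

As with the JDK, add it to the environment variables and use scala -version to confirm the installation

Installing zookeeper

zookeeper is a distributed coordination service for applications and plays an important role in the hadoop ecosystem. Both hbase and kafka, which are deployed later, depend on zookeeper, so it gets installed first.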

cd /opt

wget https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/stable/apache-zookeeper-3.5.6-bin.tar.gz

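First, download zookeeper and use scp to distribute it to all machines. Extract it with tar zxvf and use mv to rename the directory to zookeeper (I like to drop the version number from directory names)

Edit the zookeeper configuration file so that it runs in cluster mode.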

cd /opt/zookeeper/conf

cat zoo_sample.cfg > zoo.cfg

Edit zoo.cfg so that it reads as follows

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data1/zookeeper

dataLogDir=/data2/log/zookeeper

clientPort=2181

maxClientCnxns=60

server.1=cynric-111:2888:3888

server.2=cynric-112:2888:3888

server.3=cynric-113:2888:3888

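Also create a file named myid in the /data1/zookeeper/ directory on each of the three machines. Its content is the x from the matching server.x line in the configuration file above. Without this file the service will not start (it is in the fine print on the official site, and really easy to miss if you don't read carefully)!

Here /data1/zookeeper/myid contains 1 on cynric-111, 2 on cynric-112, and 3 on cynric-113.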
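Since the myid value has to agree with zoo.cfg, it can be derived from the server.N lines instead of being typed by hand. This is a minimal sketch; `myid_for` is a hypothetical helper, and the demo runs against a throwaway copy of the server lines rather than the real file.

```shell
#!/bin/sh
# myid_for is a hypothetical helper: $1 = path to zoo.cfg, $2 = hostname.
# It prints N for the line "server.N=<hostname>:...".
myid_for() {
  sed -n "s/^server\.\([0-9][0-9]*\)=$2:.*/\1/p" "$1"
}

# Demo against a temporary copy of the server lines shown above.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
server.1=cynric-111:2888:3888
server.2=cynric-112:2888:3888
server.3=cynric-113:2888:3888
EOF

myid_for "$cfg" cynric-112   # prints 2

# On a real node (paths as used in this article):
#   mkdir -p /data1/zookeeper
#   myid_for /opt/zookeeper/conf/zoo.cfg "$(hostname)" > /data1/zookeeper/myid
```

The lookup assumes the hostname in zoo.cfg is exactly what `hostname` returns on that machine.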

For details on the zookeeper configuration file, see the page below.

https://zookeeper.apache.org/doc/r3.5.6/zookeeperAdmin.html#sc_maintenance

And with that, zookeeper is done

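Installing hadoop (hdfs/yarn)

Next we install hadoop. As before: extract, rename, and distribute to all machines.

Start by looking at the /opt/hadoop/etc/hadoop/hadoop-env.sh script. The comments tell us that JAVA_HOME is the only setting that is required; all the others are optional. The only other thing I changed is the log directory.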

export JAVA_HOME=/opt/jdk/

# Location of the hadoop log files

export HADOOP_LOG_DIR=/data2/log/hadoop

# Directory for the PID files of running hadoop processes

export HADOOP_PID_DIR=${HADOOP_PID_DIR}

export HADOOP_SECURE_DN_PID_DIR=${HADOOP_PID_DIR}

# Location of the hadoop configuration directory

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/etc/hadoop"}

# Add to the classpath

for f in $HADOOP_HOME/contrib/capacity-scheduler/*.jar; do

if [ "$HADOOP_CLASSPATH" ]; then

export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$f

else

export HADOOP_CLASSPATH=$f

fi

done

# Maximum heap size (MB)

#export HADOOP_HEAPSIZE=

#export HADOOP_NAMENODE_INIT_HEAPSIZE=""

# Extra runtime options for hadoop

export HADOOP_OPTS="$HADOOP_OPTS -Djava.net.preferIPv4Stack=true"

# Extra runtime options for the namenode

export HADOOP_NAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_NAMENODE_OPTS"

# Extra runtime options for the datanode

export HADOOP_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS $HADOOP_DATANODE_OPTS"

# Extra runtime options for the secondary namenode

export HADOOP_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=${HADOOP_SECURITY_LOGGER:-INFO,RFAS} -Dhdfs.audit.logger=${HDFS_AUDIT_LOGGER:-INFO,NullAppender} $HADOOP_SECONDARYNAMENODE_OPTS"

# Extra runtime options for the resource manager

#export YARN_RESOURCEMANAGER_OPTS=""

# Extra runtime options for the node manager

#export YARN_NODEMANAGER_OPTS=""

# Extra runtime options for the web app proxy

#export YARN_PROXYSERVER_OPTS=""

# Extra runtime options for the history server

#export HADOOP_JOB_HISTORYSERVER_OPTS=""

#

export HADOOP_NFS3_OPTS="$HADOOP_NFS3_OPTS"

export HADOOP_PORTMAP_OPTS="-Xmx512m $HADOOP_PORTMAP_OPTS"

# Client options

export HADOOP_CLIENT_OPTS="-Xmx512m $HADOOP_CLIENT_OPTS"

# On secure datanodes, user to run the datanode as after dropping privileges.

# This **MUST** be uncommented to enable secure HDFS if using privileged ports

# to provide authentication of data transfer protocol. This **MUST NOT** be

# defined if SASL is configured for authentication of data transfer protocol

# using non-privileged ports.

export HADOOP_SECURE_DN_USER=${HADOOP_SECURE_DN_USER}

#

export HADOOP_SECURE_DN_LOG_DIR=${HADOOP_LOG_DIR}/${HADOOP_HDFS_USER}

export HADOOP_IDENT_STRING=$USER

Next, configure the hadoop daemons. These settings live mainly in four files: $HADOOP_HOME/etc/hadoop/core-site.xml, $HADOOP_HOME/etc/hadoop/hdfs-site.xml, $HADOOP_HOME/etc/hadoop/yarn-site.xml, and $HADOOP_HOME/etc/hadoop/mapred-site.xml.

core-site.xml needs the following two parameters

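| Setting | Value | Description |
|---|---|---|
| fs.defaultFS | hdfs://host:port/ (for example hdfs://cynric-111:9000, or in an HA setup the nameservice, hdfs://catherine) | NameNode URI |
| io.file.buffer.size | 131072 | Hadoop's file I/O all goes through its I/O library code, so in many cases io.file.buffer.size determines the buffer size. For both disk and network operations a larger buffer gives higher throughput, at the cost of more memory use and higher latency. Set it to a multiple of the system page size, in bytes. The default is 4 KB; here it is set to 128 KB. |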
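As a rough illustration, the two parameters could be written out as follows. This is a sketch, not my actual file: it assumes the HA nameservice `catherine` as the `fs.defaultFS` value, and `CONF_DIR` is a variable I introduce so the script writes to a scratch directory by default (on a node it would be /opt/hadoop/etc/hadoop).

```shell
#!/bin/sh
# CONF_DIR is an assumed variable; it defaults to a scratch directory so the
# sketch is safe to run anywhere.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://catherine</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>
EOF

echo "wrote $CONF_DIR/core-site.xml"
```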

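Here we need a highly available hdfs. For details see: https://hadoop.apache.org/docs/r2.7.7/hadoop-project-dist/hadoop-hdfs/HDFSHighAvailabilityWithQJM.html

This is where we start assigning roles. cynric-111 and cynric-112 are the NameNodes; cynric-111, cynric-112, and cynric-113 are the JournalNodes. The documentation says the JournalNode daemon is fairly lightweight, but there must be at least three of them (note that JournalNodes should be deployed on an odd number of machines; with N JournalNodes the system tolerates the failure of up to (N-1)/2 of them)

hdfs-site.xml needs the following settings:

namenode-related settings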

| Setting | Value | Description |
|---|---|---|
| dfs.namenode.name.dir | file:///data1/hadoop/namenode (this has to be on the local filesystem) | Path on the local filesystem where the NameNode stores the namespace and transactions logs persistently. If this is a comma-delimited list of directories then the name table is replicated in all of the directories, for redundancy. |
| dfs.hosts / dfs.hosts.exclude | List of permitted/excluded DataNodes. | If necessary, use these files to control the list of allowable datanodes. |
| dfs.blocksize | 268435456 | HDFS block size, 256 MB |
| dfs.namenode.handler.count | 100 | The NameNode has a pool of worker threads that handle remote procedure calls from clients and from the cluster daemons. More handlers means a bigger pool for serving concurrent heartbeats from the DataNodes and concurrent metadata operations from clients. A common rule of thumb is to set it to 20 times the natural logarithm of the cluster size, that is 20 ln N, where N is the number of nodes. |
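The 20 ln N rule of thumb is easy to evaluate with awk, whose `log` function is the natural logarithm. `handler_count` is a hypothetical helper name, and truncating to an integer is my own choice here.

```shell
#!/bin/sh
# handler_count is a hypothetical helper: prints 20 * ln(N), truncated to an
# integer, for a cluster of N nodes.
handler_count() {
  awk -v n="$1" 'BEGIN { printf "%d\n", 20 * log(n) }'
}

handler_count 3     # prints 21
handler_count 100   # prints 92
```

For the three machines here the rule gives about 21, so the value of 100 in the table is well above what the rule suggests.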

datanode-related settings

| Setting | Value | Description |
|---|---|---|
| dfs.datanode.data.dir | file:///data2/hadoop/datanode | Comma separated list of paths on the local filesystem of a DataNode where it should store its blocks. If this is a comma-delimited list of directories, then data will be stored in all named directories, typically on different devices. |

High availability settings

| Setting | Value | Description |
|---|---|---|
| dfs.nameservices | catherine | Comma-separated list of nameservices (here we call ours catherine) |
| dfs.ha.namenodes.[nameservice ID] (here dfs.ha.namenodes.catherine) | namenode12,namenode13 | Unique identifiers of the two namenodes within the nameservice, comma-separated. The [nameservice ID] part must match the value of dfs.nameservices. Currently at most two namenodes are supported per nameservice. |
| dfs.namenode.rpc-address.[nameservice ID].[name node ID] (here dfs.namenode.rpc-address.catherine.namenode12 and dfs.namenode.rpc-address.catherine.namenode13) | cynric-111:8020 / cynric-112:8020 | RPC address of each namenode |
| dfs.namenode.http-address.[nameservice ID].[name node ID] (here dfs.namenode.http-address.catherine.namenode12 and dfs.namenode.http-address.catherine.namenode13) | cynric-111:50070 / cynric-112:50070 | HTTP address of each namenode |
| dfs.namenode.shared.edits.dir | qjournal://cynric-111:8485;cynric-112:8485;cynric-113:8485/catherine | The active namenode writes filesystem changes to this URI, and the standby namenode reads them from it |
| dfs.client.failover.proxy.provider.[nameservice ID] (here dfs.client.failover.proxy.provider.catherine) | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider | The Java class used to communicate with the namenode |
| dfs.journalnode.edits.dir | /path/to/journal/node/local/data | Where the JournalNode daemon stores its local state |
| dfs.ha.automatic-failover.enabled | true | Automatic failover: when one namenode fails, the other one automatically becomes the active namenode |
| ha.zookeeper.quorum | cynric-111:2181,cynric-112:2181,cynric-113:2181 | The active/standby switch is implemented through zookeeper |

Once all of the above is configured, you are set
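Put together, the tables above translate into an hdfs-site.xml roughly like the one this sketch generates. `prop`, `NS`, and `CONF_DIR` are names I introduce for the example, and the JournalNode path is still the placeholder from the table, not a real directory. The official HA guide places ha.zookeeper.quorum in core-site.xml; I follow the table above and keep it here.

```shell
#!/bin/sh
# CONF_DIR defaults to a scratch directory; on a node it would be
# /opt/hadoop/etc/hadoop.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"
NS=catherine

# prop is a hypothetical helper that prints one <property> element.
# Values must not contain XML special characters such as & or <.
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

{
  echo '<?xml version="1.0" encoding="UTF-8"?>'
  echo '<configuration>'
  prop dfs.namenode.name.dir file:///data1/hadoop/namenode
  prop dfs.datanode.data.dir file:///data2/hadoop/datanode
  prop dfs.blocksize 268435456
  prop dfs.namenode.handler.count 100
  prop dfs.nameservices "$NS"
  prop "dfs.ha.namenodes.$NS" namenode12,namenode13
  prop "dfs.namenode.rpc-address.$NS.namenode12" cynric-111:8020
  prop "dfs.namenode.rpc-address.$NS.namenode13" cynric-112:8020
  prop "dfs.namenode.http-address.$NS.namenode12" cynric-111:50070
  prop "dfs.namenode.http-address.$NS.namenode13" cynric-112:50070
  prop dfs.namenode.shared.edits.dir "qjournal://cynric-111:8485;cynric-112:8485;cynric-113:8485/$NS"
  prop "dfs.client.failover.proxy.provider.$NS" org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider
  # Placeholder path from the table; replace it with a real local directory.
  prop dfs.journalnode.edits.dir /path/to/journal/node/local/data
  prop dfs.ha.automatic-failover.enabled true
  prop ha.zookeeper.quorum cynric-111:2181,cynric-112:2181,cynric-113:2181
  echo '</configuration>'
} > "$CONF_DIR/hdfs-site.xml"

echo "wrote $CONF_DIR/hdfs-site.xml"
```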

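Then start the three journal nodes by running /opt/hadoop/sbin/hadoop-daemon.sh start journalnode on each of the three machines

The command prints its startup message, and running jps should also show the JournalNode process

Then format hdfs by running the following on cynric-111 or cynric-112 (note that the journalnodes must be started before formatting)

hdfs namenode -format

On the other namenode, the one where format was not run, sync the metadata with

hdfs namenode -bootstrapStandby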

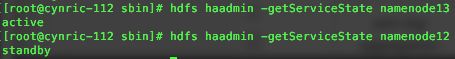

Use hdfs haadmin -getServiceState namenode12 to check the state of the namenode

Then run hdfs zkfc -formatZK on cynric-111 or cynric-112

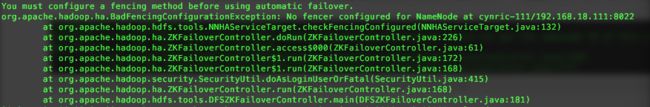

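If you hit errors at this step, check that your configuration files are correct. I got lazy and copied a configuration file from our test environment, forgot to change a few entries, and that is what caused my errors

Once every setting is filled in correctly, log in with the zookeeper client zkCli.sh and you will see that a hadoop-ha node has been created under the root

Then start zkfc: ./hadoop-daemon.sh start zkfc

There was a pitfall here that cost me a long time: zkfc failed to start, with no message at all! Only after reading the log did I find out that the following settings were missing, missing, missing!!!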

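| Setting | Value | Description |
|---|---|---|
| dfs.ha.fencing.methods | sshfence and shell(/bin/true), one method per line | Fencing methods used to fence off the previously active namenode during a failover |
| dfs.ha.fencing.ssh.private-key-files | /root/.ssh/id_rsa | SSH private key used by sshfence |
| dfs.ha.fencing.ssh.connect-timeout | 30000 | SSH connect timeout for sshfence, in milliseconds |

If zkfc fails to start, the nameservice node in zookeeper (/hadoop-ha/catherine) stays empty, and if you start the two namenodes in that situation you will find that both of them are in standby state!!!!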
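In XML form the two fencing methods go into a single value, separated by a line break. The sketch below writes the three properties to a scratch file so they can be pasted inside the `<configuration>` element of hdfs-site.xml; `OUT` is a variable I introduce for the example.

```shell
#!/bin/sh
# OUT is an assumed variable naming the scratch file for the fragment.
OUT="${OUT:-$(mktemp)}"

cat > "$OUT" <<'EOF'
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>sshfence
shell(/bin/true)</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/root/.ssh/id_rsa</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.connect-timeout</name>
    <value>30000</value>
  </property>
EOF

echo "fencing properties written to $OUT"
```

The methods are tried in order, so shell(/bin/true) acts as a fallback that always succeeds when sshfence cannot reach the other machine.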

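Then start the daemons: sbin/hadoop-daemon.sh start namenode and sbin/hadoop-daemon.sh start datanode

That completes the highly available hdfs with automatic failover! Try shutting down the active namenode and see whether the switch happens as expected!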
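Because the order matters, here is the whole startup sequence again as a printable checklist. `step` is a hypothetical helper that only prints where to run each command; it does not execute anything. Picking cynric-111 as the machine that gets formatted is an arbitrary choice for the example. One caveat that is my own reading rather than something from the steps above: bootstrapStandby fetches metadata from the other namenode, so if it cannot connect, you may need to start the freshly formatted namenode before running it.

```shell
#!/bin/sh
# step is a hypothetical helper: it only prints "[where] command".
step() {
  echo "[$1] $2"
}

SBIN=/opt/hadoop/sbin

step "all three machines" "$SBIN/hadoop-daemon.sh start journalnode"
step "cynric-111" "hdfs namenode -format"
step "cynric-112" "hdfs namenode -bootstrapStandby"
step "cynric-111" "hdfs zkfc -formatZK"
step "cynric-111 and cynric-112" "$SBIN/hadoop-daemon.sh start zkfc"
step "cynric-111 and cynric-112" "$SBIN/hadoop-daemon.sh start namenode"
step "all three machines" "$SBIN/hadoop-daemon.sh start datanode"
```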

| Machine | Roles |
|---|---|
| cynric-111 | nn,jn,dn,quorum |
| cynric-112 | nn,jn,dn,quorum |
| cynric-113 | jn,dn,quorum |

Next we need to install a highly available yarn

yarn-site.xml needs the following settings

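| Setting | Value | Description |
|---|---|---|
| yarn.acl.enable | true / false | Whether to enable ACLs (Access Control Lists). Disabled by default. |
| yarn.admin.acl | user1,user2,user3 group1,group2,group3 (there must be a space between the users and the groups) | Users and groups with admin ACL rights. The default is *, which means anyone can administer the Resource Manager and manage (for example kill) submitted applications. |
| yarn.log-aggregation-enable | false | Whether to enable log aggregation. Disabled by default. |

Some information on ACLs can be found at https://www.ibm.com/support/knowledgecenter/en/SSPT3X_4.2.5/com.ibm.swg.im.infosphere.biginsights.admin.doc/doc/ACL_Management_YARN.html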

Access Control List Management for YARN

ACLs in YARN are handled by the Capacity Scheduler. These ACLs are meant to control user access to queues, and are configured through the Capacity Scheduler. This allows administrators to specify which users or groups will be allowed to access the specific queue. Values are modified in the YARN config file. You can use the Yarn Queue Manager view out-of-box for configuring queues and ACLs through a user interface.

yarn.acl.enable must be set to true if ACLs are to be enabled.

yarn.admin.acl is an ACL meant to set which users will be the admins for the cluster. This uses a comma separated list of users and groups, for example comma-separated-users comma-separated-groups.

Then each capacity scheduler value must be set: yarn.scheduler.capacity.root.<queue-path>.acl_submit_applications, this can also be set to "*" (asterisk) which allows all users and groups access, or a " " (space character) to block all users and groups from access.

Administrator ACLs are configured using the yarn.scheduler.capacity.root.<queue-path>.acl_administer_queue properties, which allows administrators the freedom to monitor and control applications that go through the queues.

Capacity Scheduler has a pre-defined queue called 'root', and all queues in the system are children of this 'root' queue. Users can define child queues in the capacity-scheduler.xml file using the yarn.scheduler.capacity.root.queues parameter.

Besides editing yarn-site.xml, users can edit the capacity-scheduler.xml file to further define the parameters that they need. This includes yarn.scheduler.capacity.<queue-path>.capacity, the parameter that, in conjunction with yarn.nodemanager.resource.memory-mb, further gives users control over memory allocation by setting percentages for cluster resources. Another parameter is yarn.scheduler.capacity.<queue-path>.state, which sets the current state of the queue, whether it should be RUNNING or STOPPED, which is determined by the user.

Once all required parameters have been set in capacity-scheduler.xml, the command yarn rmadmin -refreshQueues must be run. To check to see if the queues have been configured properly, you can run the hadoop queue -list command.

resource manager-related settings

| Setting | Value | Description |
|---|---|---|
| yarn.resourcemanager.address | ResourceManager host:port for clients to submit jobs. | host:port If set, overrides the hostname set in yarn.resourcemanager.hostname. |
| yarn.resourcemanager.scheduler.address | ResourceManager host:port for ApplicationMasters to talk to Scheduler to obtain resources. | host:port If set, overrides the hostname set in yarn.resourcemanager.hostname. |
| yarn.resourcemanager.resource-tracker.address | ResourceManager host:port for NodeManagers. | host:port If set, overrides the hostname set in yarn.resourcemanager.hostname. |
| yarn.resourcemanager.admin.address | ResourceManager host:port for administrative commands. | host:port If set, overrides the hostname set in yarn.resourcemanager.hostname. |
| yarn.resourcemanager.webapp.address | ResourceManager web-ui host:port. | host:port If set, overrides the hostname set in yarn.resourcemanager.hostname. |
| yarn.resourcemanager.hostname | ResourceManager host. | host Single hostname that can be set in place of setting all yarn.resourcemanager*address resources. Results in default ports for ResourceManager components. |
| yarn.resourcemanager.scheduler.class | ResourceManager Scheduler class. | CapacityScheduler (recommended), FairScheduler (also recommended), or FifoScheduler |
| yarn.scheduler.minimum-allocation-mb | Minimum limit of memory to allocate to each container request at the Resource Manager. | In MBs |
| yarn.scheduler.maximum-allocation-mb | Maximum limit of memory to allocate to each container request at the Resource Manager. | In MBs |
| yarn.resourcemanager.nodes.include-path / yarn.resourcemanager.nodes.exclude-path | List of permitted/excluded NodeManagers. | If necessary, use these files to control the list of allowable NodeManagers. |

node manager-related settings

| Setting | Value | Description |
|---|---|---|
| yarn.nodemanager.resource.memory-mb | Resource i.e. available physical memory, in MB, for given NodeManager | Defines total available resources on the NodeManager to be made available to running containers |
| yarn.nodemanager.vmem-pmem-ratio | Maximum ratio by which virtual memory usage of tasks may exceed physical memory | The virtual memory usage of each task may exceed its physical memory limit by this ratio. The total amount of virtual memory used by tasks on the NodeManager may exceed its physical memory usage by this ratio. |
| yarn.nodemanager.local-dirs | Comma-separated list of paths on the local filesystem where intermediate data is written. | Multiple paths help spread disk i/o. |
| yarn.nodemanager.log-dirs | Comma-separated list of paths on the local filesystem where logs are written. | Multiple paths help spread disk i/o. |
| yarn.nodemanager.log.retain-seconds | 10800 | Default time (in seconds) to retain log files on the NodeManager. Only applicable if log-aggregation is disabled. |
| yarn.nodemanager.remote-app-log-dir | /logs | HDFS directory where the application logs are moved on application completion. Need to set appropriate permissions. Only applicable if log-aggregation is enabled. |
| yarn.nodemanager.remote-app-log-dir-suffix | logs | Suffix appended to the remote log dir. Logs will be aggregated to ${yarn.nodemanager.remote-app-log-dir}/${user}/${thisParam}. Only applicable if log-aggregation is enabled. |
| yarn.nodemanager.aux-services | mapreduce_shuffle | Shuffle service that needs to be set for Map Reduce applications. |

There are simply too many high availability settings, enough to make my head spin, so I did not copy them all over. You can look up yarn-default.xml on the official site to see what each setting does.

Here is my own configuration file

<configuration>

<property>

<name>yarn.acl.enable</name>

<value>true</value>

<description>Whether to enable ACLs (Access Control Lists). Disabled by default.</description>

</property>

<property>

<name>yarn.admin.acl</name>

<value>*</value>

<description>Users and groups with admin ACL rights</description>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

<description>Whether to enable log aggregation</description>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>2592000</value>

<description>How long to keep logs (seconds)</description>

</property>

<property>

<name>yarn.log.server.url</name>

<value>http://cynric-113:19888/jobhistory/logs</value>

<description>Address of the log server</description>

</property>

<property>

<name>yarn.nodemanager.address</name>

<value>0.0.0.0:8041</value>

<description>The address of the container manager in the NM</description>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle,spark_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.spark_shuffle.class</name>

<value>org.apache.spark.network.yarn.YarnShuffleService</value>

</property>

<property>

<name>spark.shuffle.service.port</name>

<value>7337</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.bind-host</name>

<value>0.0.0.0</value>

</property>

<property>

<name>yarn.nodemanager.disk-health-checker.min-free-space-per-disk-mb</name>

<value>1000</value>

</property>

<property>

<name>yarn.nodemanager.health-checker.interval-ms</name>

<value>135000</value>

</property>

<property>

<name>yarn.nodemanager.health-checker.script.timeout-ms</name>

<value>60000</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/data2/hadoop/yarn/local</value>

<description></description>

</property>

<property>

<name>yarn.nodemanager.log-aggregation.compression-type</name>

<value>gz</value>

</property>

<property>

<name>yarn.nodemanager.log-aggregation.debug-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.log-aggregation.num-log-files-per-app</name>

<value>30</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/data2/log/hadoop/yarn</value>

</property>

<property>

<name>yarn.nodemanager.log.retain-seconds</name>

<value>604800</value>

</property>

<property>

<name>yarn.nodemanager.recovery.dir</name>

<value>/data2/hadoop/yarn/yarn-nm-recovery</value>

</property>

<property>

<name>yarn.nodemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/data2/log/hadoop/yarn/app-logs</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>16</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>20480</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>5</value>

</property>

<property>

<name>yarn.timeline-service.handler-thread-count</name>

<value>20</value>

</property>

<property>

<name>yarn.timeline-service.generic-application-history.max-applications</name>

<value>3000</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.monitor.enable</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.bind-host</name>

<value>0.0.0.0</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>cynthia</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm12,rm13</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm12</name>

<value>cynric-113</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm13</name>

<value>cynric-112</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

</property>

<property>

<name>yarn.resourcemanager.max-completed-applications</name>

<value>3000</value>

</property>

<property>

<name>yarn.timeline-service.generic-application-history.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.system-metrics-publisher.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm12</name>

<value>${yarn.resourcemanager.hostname.rm12}:8088</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm12</name>

<value>${yarn.resourcemanager.hostname.rm12}:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm12</name>

<value>${yarn.resourcemanager.hostname.rm12}:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm12</name>

<value>${yarn.resourcemanager.hostname.rm12}:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm12</name>

<value>${yarn.resourcemanager.hostname.rm12}:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.rm12</name>

<value>${yarn.resourcemanager.hostname.rm12}:8090</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm13</name>

<value>${yarn.resourcemanager.hostname.rm13}:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.rm13</name>

<value>${yarn.resourcemanager.hostname.rm13}:8090</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm13</name>

<value>${yarn.resourcemanager.hostname.rm13}:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm13</name>

<value>${yarn.resourcemanager.hostname.rm13}:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm13</name>

<value>${yarn.resourcemanager.hostname.rm13}:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm13</name>

<value>${yarn.resourcemanager.hostname.rm13}:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.delegation-token-auth-filter.enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>cynric-111:2181,cynric-112:2181,cynric-113:2181</value>

</property>

<property>

<name>yarn.timeline-service.enabled</name>

<value>true</value>

</property>

<property>

<name></name>

<value></value>

<description></description>

</property>

<property>

<name>yarn.timeline-service.leveldb-timeline-store.path</name>

<value>/data2/hadoop/yarn/timeline</value>

</property>

<property>

<name>yarn.timeline-service.leveldb-timeline-store.start-time-read-cache-size</name>

<value>3000</value>

</property>

<property>

<name>yarn.timeline-service.leveldb-timeline-store.start-time-write-cache-size</name>

<value>3000</value>

</property>

<property>

<name>yarn.timeline-service.ttl-ms</name>

<value>2678400000</value>

</property>

<property>

<name>yarn.timeline-service.webapp.address</name>

<value>cynric-113:8188</value>

</property>

<property>

<name>yarn.timeline-service.webapp.https.address</name>

<value>cynric-113:8190</value>

<description></description>

</property>

</configuration>

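A few words on the pitfalls I ran into here. First, remember to mkdir the paths you write into the configuration file by hand; it seems they are not created automatically (I may be imagining this, so I could be wrong)

The second pitfall is that the nodemanager will not start with my configuration file as it stands; it fails with an error.

The reason is that the yarn.nodemanager.aux-services.spark_shuffle.class setting needs a class from the spark distribution. Copy the jar over from the downloaded spark, or add it to the classpath that yarn starts with. I simply copied it:

cp /opt/spark/yarn/spark-2.4.4-yarn-shuffle.jar /opt/hadoop/share/hadoop/yarn/lib/

Then run sbin/yarn-daemon.sh start nodemanager again

That completes the highly available yarn configuration

Oh, right! When starting the nodemanager you may hit a JAVA_HOME not found error; adding an export JAVA_HOME line to /etc/hadoop/yarn-env.sh fixes it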

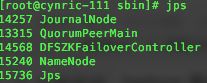

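At this point zookeeper contains the additional entries that resourcemanager high availability and leader election need.

The roles on each machine are now:

| Machine | Roles |
|---|---|
| cynric-111 | nn,jn,dn,quorum,nm |
| cynric-112 | nn,jn,dn,quorum,rm,nm |
| cynric-113 | jn,dn,quorum,rm,nm |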

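Installing hbase

Having learned my lesson, first cd /opt/hbase/conf and edit hbase-env.sh: add JAVA_HOME and HBASE_CLASSPATH. hbase ships with its own zookeeper, but here we want to use the zookeeper we already installed, so we also need export HBASE_MANAGES_ZK=false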
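As a sketch, the lines added to hbase-env.sh might look like this. `HBASE_ENV_DIR` is a variable I introduce so the example writes to a scratch directory by default (on a node it would be /opt/hbase/conf). HBASE_CLASSPATH is deliberately left out because its value is not given above.

```shell
#!/bin/sh
# HBASE_ENV_DIR is an assumed variable; it defaults to a scratch directory.
HBASE_ENV_DIR="${HBASE_ENV_DIR:-$(mktemp -d)}"

# Append the settings to hbase-env.sh.
cat >> "$HBASE_ENV_DIR/hbase-env.sh" <<'EOF'
export JAVA_HOME=/opt/jdk
# Use the external zookeeper ensemble instead of the one bundled with hbase.
export HBASE_MANAGES_ZK=false
EOF

echo "updated $HBASE_ENV_DIR/hbase-env.sh"
```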

Edit hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://catherine/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>cynric-111,cynric-112,cynric-113</value>

</property>

</configuration>

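Edit the conf/regionservers file and add the hostnames of the three machines

In the conf/ directory add a backup-masters file containing the hostname of the backup hmaster

That's hbase configured! Time to start it, happily! We naively assumed that following the official site would be enough, but in reality... our hdfs is highly available, so how is hbase supposed to resolve the nameservice? Sure enough, the regionserver would not start. Ha, a look at the log confirmed exactly that. After some googling, copying hdfs-site.xml and core-site.xml into hbase/conf/ and starting again fixed it

That completes the hbase installation.

By this point I was feeling thoroughly (un)well... there is just so much configuration.

cdh is looking really good right now.

I no longer feel like installing spark and kafka... just kidding, I will cover them in follow-up articles. Right now work has come in and I need to test opentsdb performance, so the rest will be filled in later. Installing a vanilla hadoop environment this way taught me a lot, including things I had not really understood before. I reread many of the configuration files and learned what they do, which will also help with tuning the platform later.

Finally, with programming you have to get your hands dirty. Reading 100 articles really is no match for deploying a setup yourself, because other people will never tell you about every pitfall they hit during deployment. That includes this deployment: most of the pitfalls I hit are written up in this article, but a few were probably fixed on the spot and never recorded. Pitfalls are nothing to be afraid of; the logs will usually get you through them.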