R2CNN: Rotational Region CNN for Orientation Robust Scene Text Detection

R

2CNN: Rotational Region CNN for Orientation Robust Scene Text Detection

Yingying Jiang, Xiangyu Zhu, Xiaobing Wang, Shuli Yang, Wei Li, Hua Wang, Pei Fu and Zhenbo Luo

Samsung R&D Institute China - Beijing

rotational [ro'teʃənl]:adj. 转动的,回转的,轮流的

Samsung R&D Institute China-Beijing,SRC-B

Samsung:三星

geometry [dʒɪ'ɒmɪtrɪ]:n. 几何学,几何结构

geometric [,dʒɪə'metrɪk]:adj. 几何学的,几何学图形的

reason ['riːz(ə)n]:n. 理由,理性,动机 vi. 推论,劝说 vt. 说服,推论,辩论

monocular [mə'nɒkjʊlə]:adj. 单眼的,单眼用的

localization [,ləʊkəlaɪ'zeɪʃən]:n. 定位,局限,地方化

Computer Science,CS:计算机科学

Computer Vision,CV:计算机视觉

moderation [mɒdə'reɪʃ(ə)n]:n. 适度,节制,温和,缓和

preprint ['priːprɪnt]:v. 预印 n. 预印本

arXiv (archive - the X represents the Greek letter chi [χ]) is a repository of electronic preprints approved for posting after moderation, but not full peer review.

Abstract

In this paper, we propose a novel method called Rotational Region CNN (R2CNN) for detecting arbitrary-oriented texts in natural scene images. The framework is based on Faster R-CNN [1] architecture. First, we use the Region Proposal Network (RPN) to generate axis-aligned bounding boxes that enclose the texts with different orientations. Second, for each axis-aligned text box proposed by RPN, we extract its pooled features with different pooled sizes and the concatenated features are used to simultaneously predict the text/non-text score, axis-aligned box and inclined minimum area box. At last, we use an inclined non-maximum suppression to get the detection results. Our approach achieves competitive results on text detection benchmarks: ICDAR 2015 and ICDAR 2013.

在本文中,我们提出了一种称为 Rotational Region CNN (R2CNN) 的新方法,用于检测自然场景图像中任意方向的文本。该框架基于 Faster R-CNN [1] 架构。首先,我们使用 Region Proposal Network (RPN) 生成轴对齐的边界框,这些边界框包含具有不同方向的文本。其次,对于 RPN 提出的每个轴对齐文本框,我们提取其具有不同池化尺寸的池化特征,并且连接的特征用于同时预测文本/非文本分数、轴对齐框和倾斜最小区域框。最后,我们使用倾斜的非极大值抑制来获得检测结果。我们的方法在文本检测基准上取得了有竞争力的成果:ICDAR 2015 和 ICDAR 2013。

concatenate [kən'kætɪneɪt]:v. 连接,连结,使连锁 adj. 连接的,连结的,连锁的

incline [ɪn'klaɪn]:v. 倾向于,(使) 倾向于,赞同 (某人或某事),有...倾向,(使) 倾斜,低头 n. 斜坡,斜面

对于每个 axis-aligned text box,平行地使用了不同 pooled sizes (7 × \times × 7, 11 × \times × 3, 3 × \times × 11) 的ROIPooling,然后将 ROIPooling 的结果 concatenate 在一起进行后续处理。

1. Introduction

Texts in natural scenes (e.g., street nameplates, store names, good names) play an important role in our daily life. They carry essential information about the environment. After understanding scene texts, they can be used in many areas, such as text-based retrieval, translation, etc. There are usually two key steps to understand scene texts: text detection and text recognition. This paper focuses on scene text detection. Scene text detection is challenging because scene texts have different sizes, width-height aspect ratios, font styles, lighting, perspective distortion, orientation, etc. As the orientation information is useful for scene text recognition and other tasks, scene text detection is different from common object detection tasks that the text orientation should be also be predicted in addition to the axis-aligned bounding box information.

自然场景中的文字 (例如,街道铭牌、商店名称、good names) 在我们的日常生活中发挥着重要作用。它们包含有关环境的基本信息。在理解了场景文本之后,它们可以用于许多领域,例如基于文本的检索、翻译等。通常有两个关键步骤来理解场景文本:文本检测和文本识别。本文重点介绍场景文本检测。场景文本检测具有挑战性,因为场景文本具有不同的大小、width-height aspect ratios、字体样式、光照、透视失真、方向等。由于方向信息对于场景文本识别和其他任务很有用,场景文本检测不同于普通物体检测任务,除了轴对齐的边界框信息之外,还应该预测文本的方向。

nameplate ['neɪmpleɪt]:n. 铭牌,标示牌

goods [ɡʊdz]:n. 商品,动产,合意的人,真本领

font [fɒnt]:n. 字体,字形,泉,洗礼盘,圣水器

distortion [dɪ'stɔːʃ(ə)n]:n. 变形,失真,扭曲,曲解

retrieval [rɪ'triːvl]:n. 检索,恢复,取回,拯救

While most previous text detection methods are designed for detecting horizontal or near-horizontal texts [2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14], some methods try to address the arbitrary-oriented text detection problem [15, 16, 17, 18, 19, 20, 31, 32, 33, 34]. Recently, arbitraryoriented scene text detection is a hot research area, which can be seen from the frequent result updates in ICDAR2015 Robust Reading competition in incidental scene text detection [21]. While traditional text detection methods are based on sliding-window or Connected Components (CCs) [2, 3, 4, 6, 10, 13, 17, 18, 19, 20], deep learning based methods have been widely studied recently [7, 8, 9, 12, 15, 16, 31, 32, 33, 34].

虽然大多数以前的文本检测方法都是为检测水平或接近水平文本而设计的 [2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14],但有些方法试图解决任意方向的文本检测问题 [15, 16, 17, 18, 19, 20, 31, 32, 33, 34]。最近,任意方向的场景文本检测是一个热门的研究领域,从 ICDAR2015 Robust Reading competition 附带场景文本检测中频繁结果更新中可以看出 [21]。传统的文本检测方法基于滑动窗口或 Connected Components (CCs) [2, 3, 4, 6, 10, 13, 17, 18, 19, 20],而基于深度学习的方法最近已被广泛研究 [7, 8, 9, 12, 15, 16, 31, 32, 33, 34]。

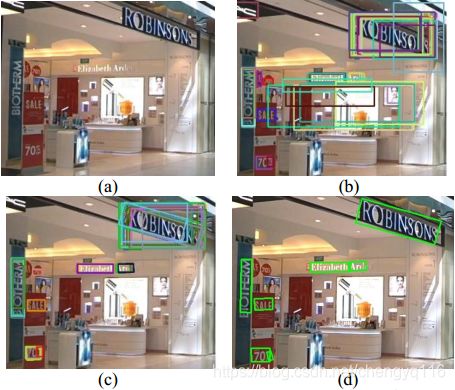

This paper presents a Rotational Region CNN (R2CNN) for detecting arbitrary-oriented scene texts. It is based on Faster R-CNN architecture [1]. Figure 1 shows the procedure of the proposed method. Figure 1 (a) is the original input image. We first use the RPN to propose axis-aligned bounding boxes that enclose the texts (Figure 1 (b)). Then we classify the proposals, refine the axis-aligned boxes and predict the inclined minimum area boxes with pooled features of different pooled sizes (Figure 1 (c)). At last, inclined non-maximum suppression is used to post-process the detection candidates to get the final detection results (Figure 1(d)). Our method yields an F-measure of 82.54% on ICDAR 2015 incidental text detection benchmark and 87.73% on ICDAR 2013 focused text detection benchmark.

本文提出了一种用于检测任意方向场景文本的 Rotational Region CNN (R2CNN)。它基于 Faster R-CNN 架构 [1]。 图 1 显示了所提出方法的过程。图 1 (a) 是原始输入图像。我们首先使用 RPN 来提出包含文本的轴对齐边界框 (图 1 (b))。然后我们对候选区域进行分类,细化轴对齐框并预测倾斜的最小区域框,其中包含不同池化尺寸的池化特征 (图 1 (c))。最后,使用倾斜的非极大值抑制来对检测候选区域进行后处理以获得最终的检测结果 (图 1 (d))。我们的方法在 ICDAR 2015 附带文本检测基准上产生了 82.54% 的 F-measure 值,在 ICDAR 2013 focused text detection benchmark 上达到了 87.73%。

retrieval [rɪ'triːvl]:n. 检索,恢复,取回,拯救

incidental [ɪnsɪ'dent(ə)l]:adj. 附带的,偶然的,容易发生的 n. 附带事件,偶然事件,杂项

Fig. 1. The procedure of the proposed method R2CNN. (a) Original input image; (b) text regions (axis-aligned bounding boxes) generated by RPN; (c) predicted axis-aligned boxes and inclined minimum area boxes (each inclined box is associated with an axis-aligned box, and the associated box pair is indicated by the same color); (d) detection result after inclined non-maximum suppression.

The contributions of this paper are as follows:

-

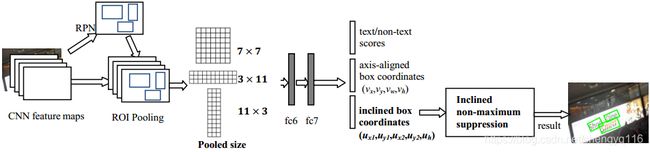

We introduce a novel framework for detecting scene texts of arbitrary orientations (Figure 2). It is based on Faster R-CNN [1]. The RPN is used for proposing text regions and the Fast R-CNN model [23] is modified to do text region classification, refinement and inclined box prediction.

我们介绍了一种用于检测任意方向的场景文本的新颖框架 (图 2)。它基于 Faster R-CNN [1]。RPN 用于提出文本区域,Fast R-CNN 模型 [23] 被修改为进行文本区域分类、细化和倾斜框预测。 -

The arbitrary-oriented text detection problem is formulated as a multi-task problem. The core of the approach is predicting text scores, axis-aligned boxes and inclined minimum area boxes for each proposal generated by the RPN.

面向任意的文本检测问题被构造为多任务问题。该方法的核心是预测 RPN 生成的每个候选区域的文本分数、轴对齐框和倾斜最小区域框。 -

To make the most of text characteristics, we do several ROI Poolings with different pooled sizes (7 × \times × 7, 11 × \times × 3, 3 × \times × 11) for each RPN proposal. The concatenated features are then used for further detection.

为了充分利用文本特征,我们为每个 RPN 候选区域生成几个具有不同池化尺寸 (7 × \times × 7, 11 × \times × 3, 3 × \times × 11) 的 ROIPoolings。然后将连接的特征用于进一步检测 (将池化的结果组合起来用于回归)。 -

Our modification of Faster R-CNN also include adding a smaller anchor for detecting small scene texts and using inclined non-maximum suppression to post-process the detection candidates to get the final result.

我们对 Faster R-CNN 的修改还包括添加较小的锚框以检测小的场景文本,并使用倾斜的非极大值抑制来对检测候选区域进行后处理以获得最终结果。

与 Faster RCNN 相比,回归的结果由 2 部分变成了 3 部分。

Fig.2. The network architecture of Rotational Region CNN (R2CNN). The RPN is used for proposing axis-aligned bounding boxes that enclose the arbitrary-oriented texts. For each box generated by RPN, three ROIPoolings with different pooled sizes are performed and the pooled features are concatenated for predicting the text scores, axis-aligned box ( v x , v y , v w , v h ) (v_{x}, v_{y}, v_{w}, v_{h}) (vx,vy,vw,vh) and inclined minimum area box ( u x 1 , u y 1 , u x 2 , u y 2 , u h ) (u_{x1}, u_{y1}, u_{x2}, u_{y2}, u_{h}) (ux1,uy1,ux2,uy2,uh). Then an inclined non-maximum suppression is conducted on the inclined boxes to get the final result.

2. Related Work

The traditional scene text detection methods consist of sliding-window based methods and Connected Components (CCs) based methods [2, 3, 4, 6, 10, 13, 17, 18, 19, 20]. The sliding-window based methods move a multi-scale window through an image densely and then classify the candidates as character or non-character to detect character candidates. The CCs based approaches generate character candidates based on CCs. In particular, the Maximally Stable Extremal Regions (MSER) based methods used to achieve good performances in ICDAR 2015 [21] and ICDAR 2013 [22] competitions. These traditional methods adopt a bottom-up strategy and often needs several steps to detect texts (e.g., character detection, text line construction and text line classification).

传统的场景文本检测方法包括基于滑动窗口的方法和基于 Connected Components (CCs) 的方法 [2, 3, 4, 6, 10, 13, 17, 18, 19, 20]。基于滑动窗口的方法将多尺寸窗口密集地移动通过图像,然后将候选区域分类为字符或非字符以检测字符候选区域。基于 CC 的方法基于 CC 生成字符候选区域。特别是,在 ICDAR 2015 [21] 和 ICDAR 2013 [22] 比赛中,基于 Maximally Stable Extremal Regions (MSER) 的方法取得了良好的表现。这些传统方法采用自下而上的策略,并且经常需要几个步骤来检测文本 (例如,字符检测、文本行构建和文本行分类)。

The general object detection is a hot research area recently. Deep learning based techniques have advanced object detection substantially. One kind of object detection methods rely on region proposal, such as R-CNN [24], SPPnet [25], Fast R-CNN [23], Faster R-CNN [1], and R-FCN [26]. Another family of object detectors do not rely on region proposal and directly estimate object candidates, such as SSD [27] and YOLO [28]. Our method is based on the Faster R-CNN architecture. In Faster R-CNN, a Region Proposal Network (RPN) is proposed to generate high-quality object proposals directly from the convolutional feature maps. The proposals generated by RPN is then refined and classified with the Fast R-CNN model [23]. As scene texts have orientations and are different from general objects, the general object detection methods cannot be used directly in scene text detection.

通用物体检测技术是近年来一个热门的研究领域。基于深度学习的技术大大提高了物体检测能力。一种目标检测方法依赖于候选区域,如 R-CNN [24],SPPnet [25],Fast R-CNN [23],Faster R-CNN [1] 和 R-FCN [26]。另一个物体检测器家族不依赖候选区域直接估计候选物体,如 SSD [27] 和 YOLO [28]。我们的方法基于 Faster R-CNN 架构。在 Faster R-CNN 中,提出了一个 Region Proposal Network (RPN),用于直接从卷积特征图中生成高质量的候选区域。RPN 生成的候选区域随后被改进并用 Fast R-CNN 模型进行分类 [23]。由于场景文本具有方向性,与一般物体不同,所以一般物体检测方法不能直接用于场景文本检测。

substantially [səb'stænʃ(ə)lɪ]:adv. 实质上,大体上,充分地

Deep learning based scene text detection methods [7, 8, 9, 12, 15, 16, 31, 32, 33, 34] have been studied and achieve better performance than traditional methods. TextBoxes is an end-to-end fast scene text detector with a single deep neural network [8]. DeepText generates word region proposals via Inception-RPN and then scores and refines each word proposal using the text detection network [7]. Fully-Convolutional Regression Network (FCRN) utilizes synthetic images to train the model for scene text detection [12]. However, these methods are designed to generate axis-aligned detection boxes and do not address the text orientation problem. Connectionist Text Proposal Network (CTPN) detects vertical boxes with fixed width, uses BLSTM to catch the sequential information and then links the vertical boxes to get final detection boxes [9]. It is good at detecting horizontal texts but not fit for high inclined texts. The method based on Fully Convolutional Network (FCN) is designed to detect multi-oriented scene texts [16]. Three steps are needed in this method: text block detection by text block FCN, multi-oriented text line candidate generation based on MSER and text line candidates classification. Rotation Region Proposal Network (RRPN) is also proposed to detect arbitrary-oriented scene text [15]. It is based on Faster R-CNN [1]. The RPN is modified to generate inclined proposals with text orientation angle information and the following classification and regression are based on the inclined proposals. SegLink [31] is proposed to detect oriented texts by detecting segments and links. It works well on text lines with arbitrary lengths. EAST [32] is designed to yield fast and accurate text detection in natural scenes. DMPNet [33] is designed to detect text with tighter quadrangle. Deep direct regression [34] is proposed for multi-oriented scene text detection.

已经研究了基于深度学习的场景文本检测方法 [7, 8, 9, 12, 15, 16, 31, 32, 33, 34] 并且实现了比传统方法更好的性能。 TextBoxes 是一个端到端的快速场景文本检测器,具有单个深度神经网络 [8]。DeepText 通过 Inception-RPN 生成单词候选区域,然后使用文本检测网络对每个单词候选区域进行评分和优化 [7]。Fully-Convolutional Regression Network (FCRN) 利用合成图像训练模型进行场景文本检测 [12]。但是,这些方法旨在生成轴对齐的检测框,而不是解决文本方向问题。Connectionist Text Proposal Network (CTPN) 检测具有固定宽度的垂直框,使用 BLSTM 捕获顺序信息,然后链接垂直框以获得最终检测框 [9]。它擅长检测水平文本但不适合高倾斜文本。基于 Fully Convolutional Network (FCN) 的方法被设计用于检测多向场景文本 [16]。该方法需要三个步骤:text block FCN 的文本块检测,基于 MSER 的多方向文本行候选区域生成和文本行候选分类。还提出了 Rotation Region Proposal Network (RRPN) 来检测任意方向的场景文本 [15]。它基于 Faster R-CNN [1]。修改 RPN 以生成具有文本方向角信息的倾斜候选区域,并且基于倾斜候选区域进行以下分类和回归。提出 SegLink [31] 通过检测段和链接来检测有向文本。它适用于任意长度的文本行。EAST [32] 旨在在自然场景中产生快速准确的文本检测。DMPNet [33] 旨在检测具有更严格四边形的文本。针对面向多方的场景文本检测,提出了 Deep direct regression [34]。

quadrangle ['kwɒdræŋg(ə)l]:n. 四边形,方院

synthetic [sɪn'θetɪk]:adj. 综合的,合成的,人造的 n. 合成物

refine [rɪ'faɪn]:vt. 精炼,提纯,改善,使...文雅

connectionist [kə'nekʃənist]:n. 联结主义

sequential [sɪ'kwenʃ(ə)l]:adj. 连续的,相继的,有顺序的

Our goal is to detect arbitrary-oriented scene texts. Similar to RRPN [15], our network is also based on Faster R-CNN [1], but we utilize a different strategy other than generating inclined proposals. We think the RPN is qualified to generate text candidates and we predict the orientation information based on the text candidates proposed by RPN.

我们的目标是检测任意的方向场景文本。与 RRPN [15] 类似,我们的网络也基于 Faster R-CNN [1],但除了生成倾斜候选区域之外,我们采用不同的策略。我们认为 RPN 有资格生成文本候选区域,我们根据 RPN 提出的候选文本预测方向信息。

We think the RPN is qualified to generate text candidates and we predict the orientation information based on the text candidates proposed by RPN.

3. Proposed Approach

In this section, we introduce our approach to detect arbitrary-oriented scene texts. Figure 2 shows the architecture of the proposed Rotational Region CNN (R2CNN). We first present how we formalize the arbitrary-oriented text detection problem and then introduce the details of R2CNN. After that, we describe our training objectives.

在本节中,我们将介绍检测任意方向的场景文本的方法。图 2 显示了所提出的 Rotational Region CNN (R2CNN) 的架构。我们首先介绍如何 formalize 任意方向的文本检测问题,然后介绍 R2CNN 的细节。之后,我们描述了我们的训练目标。

formalize ['fɔːm(ə)laɪz]:vt. 使形式化,使正式,拘泥礼仪 vi. 拘泥于形式

3.1. Problem definition

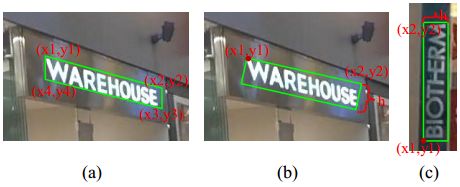

In ICDAR 2015 competition [21], the ground truth of incidental scene text detection is represented by four points in clockwise (x1, y1, x2, y2, x3, y3, x4, y4) as shown in Figure 3 (a). The label is at word level. The four points form a quadrangle, which is probably not a rectangle. Although scene texts can be more closely enclosed by irregular quadrangles due to perspective distortion, they can be roughly enclosed by inclined rectangles with orientation (Figure 3 (b)). As we think an inclined rectangle is able to cover most of the text area, we approximate the arbitrary-oriented scene text detection task as detecting an inclined minimum area rectangle in our approach. In the rest of the paper, when we mention the bounding box, it refers to a rectangular box.

在 ICDAR 2015 竞赛 [21] 中,附带场景文本检测的 ground truth 由顺时针 (x1, y1, x2, y2, x3, y3, x4, y4) 中的四个点表示,如 Figure 3 (a) 所示。标签是单词级别的。四个点形成一个四边形,可能不是一个矩形。虽然由于透视变形,场景文本可以被不规则的四边形更紧密地包围,但是它们可以被具有方向的倾斜矩形粗略地包围 (Figure 3 (b))。 我们认为倾斜矩形能够覆盖大部分文本区域,因此我们方法中我们将任意方向的场景文本检测任务近似为检测倾斜最小区域矩形。在本文的其余部分,当我们提到边界框时,它指的是一个矩形框。

quadrangle ['kwɒdræŋg(ə)l]:n. 四边形,方院

clockwise ['klɒkwaɪz]:adv. 顺时针方向地 adj. 顺时针方向的

irregular [ɪ'regjʊlə]:adj. 不整齐的,不规则的,不正常的,非正规的,不按时的,便秘的 n. 不规则物,不合规格的产品,非正规军人员

roughly ['rʌflɪ]:adv. 粗糙地,概略地

rectangular [rek'tæŋgjʊlə]:adj. 矩形的,成直角的

Although the straightforward method to represent a inclined rectangle is using an angle to represent its orientation, we don’t adopt this strategy because the angle target is not stable in some special points. For example, a rectangle with rotation angle 900 is very similar to the same rectangle with rotation angle -900, but their angles are quite different. This makes the network hard to learn to detect vertical texts. Instead of using an angle to represent the orientation information, we use the coordinates of the first two points in clockwise and the height of the bounding box to represent an inclined rectangle (x1, y1, x2, y2, h). We suppose the first point always means the point at the left-top corner of the scene text. Figure 3 (b) and Figure 3 (c) show two examples. (x1, y1) is the coordinates of the first point (the solid red point), (x2, y2) is the coordinates of the second point in clockwise, and h is the height of the inclined minimum area rectangle.

尽管表示倾斜矩形的直接方法是使用角度来表示其方向,但我们不采用此策略,因为角度目标在某些特殊点上不稳定。例如,旋转角度为 900 的矩形与旋转角度为 -900 的相同矩形非常相似,但它们的角度非常不同。这使得网络难以学习检测垂直文本。我们不使用角度来表示方向信息,而是使用顺时针前两个点的坐标和边界框的高度来表示倾斜的矩形 (x1, y1, x2, y2, h)。我们假设第一个点总是表示场景文本左上角的点。 图 3 (b) 和图 3 (c) 显示了两个例子。(x1, y1) 是第一点 (实心红点) 的坐标,(x2, y2) 是顺时针方向上第二点的坐标,h 是倾斜最小区域矩形的高度。

本文将 bounding box (x1, y1, x2, y2, x3, y3, x4, y4) 的表示设计为使用顺时针的前两个顶点和高度 (x1, y1, x2, y2, h)。角度描述任意方向不稳定,使用 (x1, y1, x2, y2, h) 表示。

正负 90 度,在不严格区分头尾的情况下可看作相同的角度,但是数值计算上却相差很大很难区分,只有理解了文本内容才能作区分,这样会给网络带来歧义。因而使用顺时针方向的两个点 [(x1, y1), (x2, y2)] 与一个框的高度 h 来表示回归的结果。

We suppose the first point always means the point at the left-top corner of the scene text. (x1, y1, x2, y2, h) 不是表示斜矩形唯一的方式,只要能够描述斜矩形都是可以的,不同的表示方式可能会影响网络的学习。

旋转角度 θ \theta θ 是水平轴 (x 轴) 逆时针旋转,与碰到的矩形的第一条边的夹角。这个边的边长是 width,另一条边边长是 height。在这里 width 与 height 不是按照长短来定义的。在 OpenCV 中,坐标系原点在左上角,相对于 x 轴,逆时针旋转角度为负,顺时针旋转角度为正。这里, θ ∈ ( − 9 0 0 , 0 0 ] \theta \in (-90^{0}, 0^{0}] θ∈(−900,00]。

Fig.3. Detection targets of arbitrary-oriented scene text detection. a) ICDAR 2015 labels the incidental scene texts in the form of four points in clockwise; b) the inclined minimum area rectangle is adopted as the detection targets in our approach; c) another example of the inclined rectangle.

如图 3 所示,任意方向场景文本检测的检测目标。a) ICDAR 2015 以顺时针方向四点的形式标记附带场景文本,b) 采用倾斜最小面积矩形作为检测目标,c) 倾斜矩形的另一个例子。

3.2. Rotational Region CNN (R2CNN)

Overview. We adopt the popular two-stage object detection strategy that consists of region proposal and region classification. Rotational Region CNN (R2CNN) is based on Faster R-CNN [1]. Figure 2 shows the architecture of R2CNN. The RPN is first used to generate text region proposals, which are axis-aligned bounding boxes that enclose the arbitrary-oriented texts (Figure 1 (b)). And then for each proposal, several ROIPoolings with different pooled sizes (7 × \times × 7, 11 × \times × 3, 3 × \times × 11) are performed on the convolutional feature maps and the pooled features are concatenated for further classification and regression. With concatenated features and fully connected layers, we predict text/non-text scores, axis-aligned boxes and inclined minimum area boxes (Figure 1 (c)). After that, the inclined boxes are post-processed by inclined non-maximum suppression to get the detection results (Figure 1 (d)).

Overview. 我们采用流行的两阶段目标检测策略,包括候选区域和区域分类。Rotational Region CNN (R2CNN) 基于 Faster R-CNN [1]。图 2 显示了 R2CNN 的体系结构。RPN 首先用于生成文本候选区域,这些候选区域是包含任意方向文本的轴对齐边界框 (图 1 (b))。然后对于每个候选区域,在卷积特征图上执行具有不同池化大小 (7 × \times × 7, 11 × \times × 3, 3 × \times × 11) 的若干 ROIPoolings,并且将池化的特征连接以进行进一步分类和回归。通过连接的特征和全连接的层,我们可以预测文本/非文本分数,轴对齐框和倾斜最小区域框 (图 1 (c))。之后,通过倾斜的非极大值抑制对倾斜的框进行后处理,以获得检测结果 (图 1 (d))。

RPN for proposing axis-aligned boxes. We use the RPN to generate axis-aligned bounding boxes that enclose the arbitrary-oriented texts. This is reasonable because the text in the axis-aligned box belongs to one of the following situations: a) the text is in the horizontal direction; b) the text is in the vertical direction; c) the text is in the diagonal direction of the axis-aligned box. As shown in Figure 1 (b), the RPN is competent for generating text regions in the form of axis-aligned boxes for arbitrary-oriented texts.

RPN for proposing axis-aligned boxes. 我们使用 RPN 生成轴对齐的边界框,其中包含任意方向的文本。这是合理的,因为轴对齐框中的文本属于以下情况之一:a) 文本在水平方向,b) 文本是垂直方向,c) 文本位于轴对齐框的对角线方向。如图 1 (b) 所示,RPN 有能力以任意方向文本的轴对齐框的形式生成文本区域。

Compared to general objects, there are more small scene texts. We support this by utilizing a smaller anchor scale in RPN. While the original anchor scales are (8, 16, 32) in Faster R-CNN [1], we investigate two strategies: a) changing an anchor scale to a smaller size and using (4, 8, 16); b) adding a new anchor scale and utilizing (4, 8, 16, 32). Our experiments confirm that the adoption of the smaller anchor is helpful for scene text detection.

与一般物体相比,有更多的小场景文本。我们通过在 RPN 中使用较小的 anchor 比例来支持这一点。虽然原始的 anchor 尺寸是 (8, 16, 32) 在 Faster R-CNN [1] 中,我们研究了两种策略:a) 将 anchor 尺寸改变为更小的尺寸并使用 (4, 8, 16),b) 添加新的 anchor 尺寸并利用 (4, 8, 16, 32)。我们的实验证实,采用较小的 anchor 有助于场景文本检测。

使用 anchor 尺度 (4, 8, 16, 32),提升对于文本检测的性能。

propose [prə'pəʊz]:vt. 建议,打算,计划,求婚 vi. 建议,求婚,打算

diagonal [daɪ'æg(ə)n(ə)l]:adj. 斜的,对角线的,斜纹的 n. 对角线,斜线

competent ['kɒmpɪt(ə)nt]:adj. 胜任的,有能力的,能干的,足够的

We keep other settings of RPN the same as Faster R-CNN [1], including the anchor aspect ratios, the definition of positive samples and negative samples, and etc.

我们保持 RPN 的其他设置与 Faster R-CNN [1] 相同,包括 anchor 长宽比、正样本和负样本的定义等。

ROIPoolings of different pooled sizes. The Faster R-CNN framework does ROIPooling on the feature map with pooled size 7 × \times × 7 for each RPN proposal. As the widths of some texts are much larger than their heights, we try to use three ROIPoolings with different sizes to catch more text characteristics. The pooled features are concatenated for further detection. Specifically, we add two pooled sizes: 11 × \times × 3 and 3 × \times × 11. The pooled size 3 × \times × 11 is supposed to catch more horizontal features and help the detection of the horizontal text whose width is much larger than its height. The pooled size 11 × \times × 3 is supposed to catch more vertical features and be useful for vertical text detection that the height is much larger than the width.

ROIPoolings of different pooled sizes. Faster R-CNN 框架在特征图上进行 ROIPooling,每个 RPN 候选区域的池化尺寸为 7 × \times × 7。由于某些文本的宽度远大于它们的高度,我们尝试使用三个不同尺寸的 ROIPoolings 来捕获更多文本特征。池化的特征被连接以进一步检测。具体来说,我们添加两个池化尺寸:11 × \times × 3 and 3 × \times × 11。池化尺寸 3 × \times × 11 应该捕获更多水平特征并帮助检测水平文本,它的宽度远大于高度。池化尺寸 11 × \times × 3 应该能够捕获更多垂直特征,并且对于高度比宽度大得多的垂直文本检测非常有用。

针对文本检测的实际情况,选用三个尺度的池化尺度 (7 × \times × 7, 11 × \times × 3, 3 × \times × 11)。11 × \times × 3 and 3 × \times × 11 分别用于捕获垂直方向和水平方向的特征。

Regression for text/non-text scores, axis-aligned boxes, and inclined minimum area boxes. In our approach, after RPN, we classify the proposal generated by RPN as text or non-text, refine the axis-aligned bounding boxes that contain the arbitrary-oriented texts and predict inclined bounding boxes. Each inclined box is associated with an axis-aligned box (Figure 1 (c) and Figure 4 (a)). Although our detection targets are the inclined bounding boxes, we think adding additional constraints (axis-aligned bounding box) could improve the performance. And our evaluation also confirm the effectiveness of this idea.

Regression for text/non-text scores, axis-aligned boxes, and inclined minimum area boxes. 在我们的方法中,在 RPN 之后,我们将 RPN 生成的候选区域分类为文本或非文本,细化包含任意方向文本的轴对齐边界框并预测倾斜边界框。每个倾斜的边界框与轴对齐的边界框相关联 (Figure 1 (c) and Figure 4 (a))。虽然我们的检测目标是倾斜的边界框,但我们认为添加额外的约束 (轴对齐边界框) 可以提高性能。我们的评估也证实了这一想法的有效性。

保留 axis-aligned bounding box 回归分量,对最后的结果有积极作用,可以提高性能。检测 inclined minimum area box 的同时检测 axis-aligned bounding box 可以改善 inclined minimum area box 的检测效果。

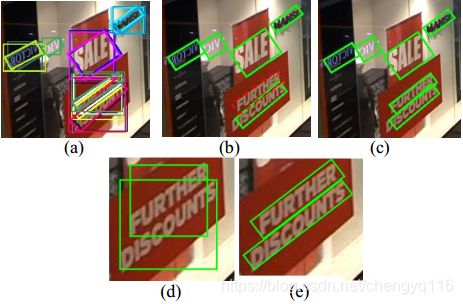

Inclined non-maximum suppression. Non-Maximum Suppression (NMS) is extensively used to post-process detection candidates by current object detection methods. As we estimate both the axis-aligned bounding box and the inclined bounding box, we can either do normal NMS on axis-aligned bounding boxes, or do inclined NMS on inclined bounding boxes. In the inclined NMS, the calculation of the traditional Intersection-over-Union (IoU) is modified to be the IoU between two inclined bounding boxes. The IoU calculation method used in [15] is utilized.

Inclined non-maximum suppression. 非极大值抑制 (NMS) 广泛用于通过当前物体检测方法对检测候选区域进行后处理。当我们估计轴对齐的边界框和倾斜的边界框时,我们可以在轴对齐的边界框上做正常的 NMS,或者在倾斜的边界框上做倾斜的 NMS。在倾斜 NMS 中,传统的 Intersection-over-Union (IoU) 的计算被修改为两个倾斜边界框之间的 IoU。使用 [15] 中使用的 IoU 计算方法。

Figure 4 illustrates the detection results after two kinds of NMS are performed. Figure 4 (a) shows predicted candidate boxes that each axis-aligned bounding box is associated with an inclined bounding box. Figure 4 (b) shows the effects of the normal NMS on axis-aligned boxes and Figure 4 (c) demonstrates the effects of the inclined NMS on inclined boxes. As show in Figure 4 (b), the text in red dashed box is not detected under normal NMS on axis-aligned boxes. Figure 4 (d) and Figure 4 (e) shows the reason why the inclined NMS is better for inclined scene text detection. We can see that for closely adjacent inclined texts, normal NMS may miss some text as the IoU between axis-aligned boxes can be high (Figure 4 (d)), but inclined NMS will not miss the text because the inclined IoU value is low (Figure 4 (e)).

图 4 显示了在执行两种 NMS 之后的检测结果。图 4 (a) 显示了预测的候选框,每个轴对齐的边界框与倾斜的边界框相关联。图 4 (b) 显示了正常 NMS 对轴对齐框的影响,图 4 (c) 显示了倾斜 NMS 对倾斜框的影响。如图 4 (b) 所示,在轴对齐框上的正常 NMS 下未检测到红色虚线框中的文本。图 4 (d) 和图 4 (e) 显示了倾斜 NMS 对于倾斜场景文本检测更好的原因。我们可以看到,对于紧密相邻的倾斜文本,正常的 NMS 可能会遗漏一些文本,因为轴对齐框之间的 IoU 可能很高 (图 4 (d)),但倾斜的 NMS 不会遗漏文本,因为倾斜的 IoU 值很低 (图 4 (e))。

Fig. 4. Inclined non-maximum suppression. (a) The candidate axis-aligned boxes and inclined boxes; (b) the detection results based on normal NMS on axis-aligned boxes (the green boxes are the correct detections, and the red dashed box is the box that is not detected); (c) the detection results based on inclined NMS on inclined boxes; (d) an example of two axis-aligned boxes; (e) an example of two inclined boxes.

图 4. 倾斜的非极大值抑制。(a) 候选轴对齐框和倾斜框,(b) 基于轴对齐框上的正常 NMS 的检测结果 (绿色框是正确的检测,红色虚线框是未检测到的框),(c) 倾斜框上基于倾斜 NMS 的检测结果,(d) 两个轴对齐框的例子,(e) 两个倾斜框的例子。

dash [dæʃ]:n. 短跑,破折号,匆忙,少量 v. 猛冲,急奔,急驰,猛掷

3.3. Training objective (Multi-task loss)

The training loss on RPN is the same as Faster R-CNN [1]. In this section, we only introduce the loss function of R2CNN on each axis-aligned box proposal generated by RPN.

RPN 上的训练损失与 Faster R-CNN 相同 [1]。在本节中,我们仅在 RPN 生成的每个轴对齐框候选区域中引入 R2CNN 的损失函数。

Our loss function defined on each proposal is the summation of the text/non-text classification loss and the box regression loss. The box regression loss consists of two parts: the loss of axis-aligned boxes that enclose the arbitrary-oriented texts and the loss of inclined minimum area boxes. The multi-task loss function on each proposal is defined as:

我们在每个候选区域上定义的损失函数是文本/非文本分类损失和框回归损失的总和。框回归损失由两部分组成:包含任意方向文本的轴对齐框的损失和倾斜最小区域框的损失。每个候选区域的多任务损失函数定义为:

(1) L ( p , t , v , v ∗ , u , u ∗ ) = L c l s ( p , t ) + λ 1 t ∑ i ∈ { x , y , w , h } L r e g ( v i , v i ∗ ) + λ 2 t ∑ i ∈ { x 1 , y 1 , x 2 , y 2 , h } L r e g ( u i , u i ∗ ) \begin{aligned} L(p, t, v, v^{\ast}, u, u^{\ast}) &= L_{cls}(p, t) \\ &+\lambda_{1}t \sum_{i \in \{x, y, w, h\}} L_{reg}(v_{i}, v_{i}^{\ast}) \\ &+\lambda_{2}t \sum_{i \in \{x_{1}, y_{1}, x_{2}, y_{2}, h\}} L_{reg}(u_{i}, u_{i}^{\ast}) \tag{1} \end{aligned} L(p,t,v,v∗,u,u∗)=Lcls(p,t)+λ1ti∈{x,y,w,h}∑Lreg(vi,vi∗)+λ2ti∈{x1,y1,x2,y2,h}∑Lreg(ui,ui∗)(1)

λ 1 \lambda_{1} λ1 and λ 2 \lambda_{2} λ2 are the balancing parameters that control the trade-off between three terms.

λ 1 \lambda_{1} λ1 and λ 2 \lambda_{2} λ2 是控制三部分之间权衡的平衡参数。

They should regress both the axis-aligned boxes and the inclined boxes ( λ 1 = 1 \lambda_{1} = 1 λ1=1 and l a m b d a 2 = 1 lambda_{2} = 1 lambda2=1 in Equation (1)). 使用 λ 1 \lambda_{1} λ1 and λ 2 \lambda_{2} λ2 进行调整加权,同时预测 the axis-aligned boxes and the inclined boxes。

- text or non-text 二分类损失函数。

- the axis-aligned bounding boxes that contain the arbitrary-oriented texts,水平框回归损失函数 L r e g ( v i , v i ∗ ) L_{reg}(v_{i}, v_{i}^{\ast}) Lreg(vi,vi∗)。

- predict inclined bounding boxes,倾斜框回归损失函数 L r e g ( u i , u i ∗ ) L_{reg}(u_{i}, u_{i}^{\ast}) Lreg(ui,ui∗)。

采用目标检测中常用的 smooth L1 损失函数,多任务损失更新参数 (Our loss function defined on each proposal is the summation of the text/non-text classification loss and the box regression loss. The box regression loss consists of two parts: the loss of axis-aligned boxes that enclose the arbitrary-oriented texts and the loss of inclined minimum area boxes.)。多任务学习,使用 3 个损失函数和表示总的损失函数。

objective [əb'dʒektɪv]:n. 目的,目标,(军事行动的) 攻击目标,物镜,宾格 adj. 客观的,客观存在的,(疾病症状) 客观的,他觉的,目标的,宾格的

The box regression only conducts on text. t t t is the indicator of the class label. Text is labeled as 1 ( t = 1 t = 1 t=1), and background is labeled as 0 ( t = 0 t = 0 t=0). The parameter p = ( p 0 , p 1 ) p = (p_{0}, p_{1}) p=(p0,p1) is the probability over text and background classes computed by the softmax function. L c l s ( p , t ) = − l o g p t L_{cls}(p, t) = -log p_{t} Lcls(p,t)=−logpt is the log loss for true class t t t.

框回归仅对文本进行。 t t t 是类标签的索引。文本标记为 1 ( t = 1 t = 1 t=1),背景标记为 0 ( t = 0 t = 0 t=0)。参数 p = ( p 0 , p 1 ) p = (p_{0}, p_{1}) p=(p0,p1) 是由 softmax 函数计算的文本和背景类的概率。 L c l s ( p , t ) = − l o g p t L_{cls}(p, t) = -log p_{t} Lcls(p,t)=−logpt 是 true class t t t 的 log 损失。

conduct ['kɒndʌkt]:v. 组织,实施,进行,指挥 (音乐),带领,引导,举止,表现,传导 (热或电) n. 行为举止,管理 (方式),实施(办法),引导

v = ( v x , v y , v w , v h ) v = (v_{x}, v_{y}, v_{w}, v_{h}) v=(vx,vy,vw,vh) is a tuple of true axis-aligned bounding box regression targets including coordinates of the center point and its width and height, and v ∗ = ( v x ∗ , v y ∗ , v w ∗ , v h ∗ ) v^{\ast} = (v_{x}^{\ast}, v_{y}^{\ast}, v_{w}^{\ast}, v_{h}^{\ast}) v∗=(vx∗,vy∗,vw∗,vh∗) is the predicted tuple for the text label. u = ( u x 1 , u y 1 , u x 2 , u y 2 , u h ) u = (u_{x1}, u_{y1}, u_{x2}, u_{y2}, u_{h}) u=(ux1,uy1,ux2,uy2,uh) is a tuple of true inclined bounding box regression targets including coordinates of first two points of the inclined box and its height, and u ∗ = ( u x 1 ∗ , u y 1 ∗ , u x 2 ∗ , u y 2 ∗ , u h ∗ ) u^{\ast} = (u_{x1}^{\ast}, u_{y1}^{\ast}, u_{x2}^{\ast}, u_{y2}^{\ast}, u_{h}^{\ast}) u∗=(ux1∗,uy1∗,ux2∗,uy2∗,uh∗) is the predicted tuple for the text label. We use the parameterization for v v v and v ∗ v^{\ast} v∗ given in [24], in which v v v and v ∗ v^{\ast} v∗ specify a scale-invariant translation and log-space height/width shift relative to an object proposal. For inclined bounding boxes, the parameterization of ( u x 1 , u y 1 ) (u_{x1}, u_{y1}) (ux1,uy1), ( u x 2 , u y 2 ) (u_{x2}, u_{y2}) (ux2,uy2), ( u x 1 ∗ , u y 1 ∗ ) (u_{x1}^{\ast}, u_{y1}^{\ast}) (ux1∗,uy1∗) and ( u x 1 ∗ , u y 1 ∗ ) (u_{x1}^{\ast}, u_{y1}^{\ast}) (ux1∗,uy1∗) is the same with that of ( v x , v y ) (v_{x}, v_{y}) (vx,vy). And the parameterization of u h u_{h} uh and u h ∗ u_{h}^{\ast} uh∗ is the same with the parameterization of v h v_{h} vh and v h ∗ v_{h}^{\ast} vh∗.

v = ( v x , v y , v w , v h ) v = (v_{x}, v_{y}, v_{w}, v_{h}) v=(vx,vy,vw,vh) 是真正的轴对齐边界框回归目标的元组,包括中心点的坐标及其宽度和高度, v ∗ = ( v x ∗ , v y ∗ , v w ∗ , v h ∗ ) v^{\ast} = (v_{x}^{\ast}, v_{y}^{\ast}, v_{w}^{\ast}, v_{h}^{\ast}) v∗=(vx∗,vy∗,vw∗,vh∗) 是文本标签的预测元组。 u = ( u x 1 , u y 1 , u x 2 , u y 2 , u h ) u = (u_{x1}, u_{y1}, u_{x2}, u_{y2}, u_{h}) u=(ux1,uy1,ux2,uy2,uh) 是真正的倾斜边界框回归目标的元组,包括倾斜框的前两个点的坐标及其高度, u ∗ = ( u x 1 ∗ , u y 1 ∗ , u x 2 ∗ , u y 2 ∗ , u h ∗ ) u^{\ast} = (u_{x1}^{\ast}, u_{y1}^{\ast}, u_{x2}^{\ast}, u_{y2}^{\ast}, u_{h}^{\ast}) u∗=(ux1∗,uy1∗,ux2∗,uy2∗,uh∗) 是文本标签的预测元组。我们在 [24] 中给出的 v v v and v ∗ v^{\ast} v∗ 的参数形式,其中 v v v and v ∗ v^{\ast} v∗ 指定了一个尺度不变的平移和对数空间的高度/宽度相对于物体候选区域的偏移。对于倾斜边界框, ( u x 1 , u y 1 ) (u_{x1}, u_{y1}) (ux1,uy1), ( u x 2 , u y 2 ) (u_{x2}, u_{y2}) (ux2,uy2), ( u x 1 ∗ , u y 1 ∗ ) (u_{x1}^{\ast}, u_{y1}^{\ast}) (ux1∗,uy1∗) and ( u x 1 ∗ , u y 1 ∗ ) (u_{x1}^{\ast}, u_{y1}^{\ast}) (ux1∗,uy1∗) 的参数形式与 ( v x , v y ) (v_{x}, v_{y}) (vx,vy) 相同。 u h u_{h} uh and u h ∗ u_{h}^{\ast} uh∗ 的参数形式与 v h v_{h} vh and v h ∗ v_{h}^{\ast} vh∗ 的参数形式相同。

tuple [tjʊpəl; ˈtʌpəl]:n. 元组,重数

Let ( w , w ∗ ) (w, w^{\ast}) (w,w∗) indicates v i , v i ∗ v_{i}, v_{i}^{\ast} vi,vi∗ or u i , u i ∗ u_{i}, u_{i}^{\ast} ui,ui∗, L r e g ( w , w ∗ ) L_{reg}(w, w^{\ast}) Lreg(w,w∗) is defined as:

设 ( w , w ∗ ) (w, w^{\ast}) (w,w∗) 表示 v i , v i ∗ v_{i}, v_{i}^{\ast} vi,vi∗ or u i , u i ∗ u_{i}, u_{i}^{\ast} ui,ui∗, L r e g ( w , w ∗ ) L_{reg}(w, w^{\ast}) Lreg(w,w∗) 定义为:

(3) smooth L 1 ( x ) = { 0.5 x 2 , if ∣ x ∣ < 1 ∣ x ∣ − 0.5 , otherwise \begin{aligned} \text{smooth}_{L1}(x) = \begin{cases} 0.5x^{2}, &\text{if } |x| < 1 \\ |x| - 0.5, &\text{otherwise} \end{cases} \end{aligned} \tag{3} smoothL1(x)={0.5x2,∣x∣−0.5,if ∣x∣<1otherwise(3)

4. Experiments

4.1. Implementation details

Training Data. Our training dataset includes 1000 incidental scene text images from ICDAR 2015 training dataset [21] and 2000 focused scene text images we collected. The scene texts in images we collected are clear and quite different from the blurry texts in ICDAR 2015. Although our simple experiments show that the additional collected images do not increase the performance on ICDAR2015, we still include them in the training to make our model more robust to different kinds of scene texts. As ICDAR 2015 training dataset contains difficult texts that is hard to detect which are labeled as “###”, we only use those readable text for training. Moreover, we only use those scene texts composed of more than one character for training.

Training Data. 我们的训练数据集包括来自 ICDAR 2015 训练数据集 [21] 的 1000 个附带的场景文本图像和我们收集的 2000 个专门的场景文本图像。我们收集的图像中的场景文本清晰,与 ICDAR 2015 中的模糊文本完全不同。虽然我们的简单实验表明额外收集的图像不会提高 ICDAR 2015 的性能,但我们仍然将它们包含在训练中以使我们的模型对不同类型的场景文本具有更强的鲁棒性。由于 ICDAR 2015 训练数据集包含难以检测的难以识别的标记为 “###” 的文本,因此我们仅使用这些可读文本进行训练。而且,我们只使用由多个字符组成的场景文本进行训练。

blurry ['blɜːrɪ]:adj. 模糊的,污脏的,不清楚的

To support arbitrary-oriented scene text detection, we augment ICDAR 2015 training dataset and our own data by rotating images. We rotate our image at the following angles (-90, -75, -60, -45, -30, -15, 0, 15, 30, 45, 60, 75, 90). Thus, after data augmentation, our training data consists of 39000 images.

为了支持任意方向的场景文本检测,我们通过旋转图像来增加 ICDAR 2015 训练数据集和我们自己的数据。我们以下列角度旋转图像 (-90, -75, -60, -45, -30, -15, 0, 15, 30, 45, 60, 75, 90)。因此,在数据增加之后,我们的训练数据由 39000 个图像组成。

The texts in ICDAR 2015 are labeled at word level with four clockwise point coordinates of quadrangle. As we simplify the problem of incidental text detection as detecting inclined rectangles as introduced in Section 3.1, we generate the ground truth inclined bounding box (rectangular data) from the quadrangle by computing the minimum area rectangle that encloses the quadrangle. We then compute the minimum axis-aligned bounding box that encloses the text as the ground truth axis-aligned box. Similar processing is done to generate ground truth data for images we collected.

ICDAR 2015 中的文本在单词级别标有四个四边形的顺时针点坐标。当我们简化像 3.1 节中介绍的检测倾斜矩形的附带文本检测问题时,我们通过计算包围四边形的最小区域矩形来生成来自四边形的 ground truth 倾斜边界框 (矩形数据)。然后,我们计算最小的轴对齐边界框,该边界框将文本封闭为 ground truth 轴对齐框。进行类似的处理以生成我们收集的图像的 ground truth 数据。

quadrangle ['kwɒdræŋg(ə)l]:n. 四边形,方院

enclose [ɪn'kləʊz; en-]:vt. 围绕,装入,放入封套

Training. Our network is initialized by the pre-trained VGG16 model for ImageNet classification [29]. We use the end-to-end training strategy. All the models are trained 20 × 1 0 4 20 \times 10^{4} 20×104 iterations in all. Learning rates start from 1 0 − 3 10^{-3} 10−3, and are multiplied by 1 10 \frac{1}{10} 101 after 5 × 1 0 4 5 \times 10^{4} 5×104, 10 × 1 0 4 10 \times 10^{4} 10×104 and 15 × 1 0 4 15 \times 10^{4} 15×104 iterations. Weight decays are 0.0005, and momentums are 0.9. All experiments use single scale training. The image’s shortest side is set as 720, while the longest side of an image is set as 1280. We choose this image size because the training and testing images in ICDAR 2015 [21] have the size (width: 1280, height: 720).

Training. 我们的网络由预先训练的用于 ImageNet 分类的 VGG16 模型初始化 [29]。我们使用端到端的训练策略。所有模型都训练了 20 × 1 0 4 20 \times 10^{4} 20×104 次迭代。学习率从 1 0 − 3 10^{-3} 10−3 开始,并在 5 × 1 0 4 5 \times 10^{4} 5×104, 10 × 1 0 4 10 \times 10^{4} 10×104 and 15 × 1 0 4 15 \times 10^{4} 15×104 次迭代之后乘以 1 10 \frac{1}{10} 101。权重衰减为 0.0005,动量为 0.9。All experiments use single scale training. 图像的最短边设置为 720,而图像的最长边设置为 1280。我们选择此图像尺寸,因为 ICDAR 2015 [21] 中的训练和测试图像具有尺寸 (宽度:1280,高度:720)。

4.2. Performance

We evaluate our method on ICDAR 2015 [21] and ICDAR 2013 [22]. The evaluation follows the ICDAR Robust Reading Competition metrics in the form of Precision, Recall and F-measure. The results are obtained by submitting the detection results to the competition website and get the evaluation results online.

我们在 ICDAR 2015 [21] 和 ICDAR 2013 [22] 上评估了我们的方法。评估遵循 ICDAR Robust Reading Competition 指标,Precision, Recall and F-measure。通过将检测结果提交给竞赛网站获得结果,并在线获得评估结果。

A. ICDAR 2015

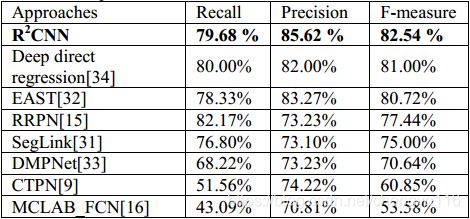

This section introduces our performances on ICDAR 2015 [21]. ICDAR 2015 competition test dataset consists of 500 images containing incidental scene texts with arbitrary orientations. Our method could achieve competitive results of Recall 79.68%, Precision 85.62%, and F-measure 82.54%.

本节介绍我们在 ICDAR 2015 上的表现 [21]。ICDAR 2015 竞赛测试数据集由 500 个图像组成,其中包含具有任意方向的附带场景文本。我们的方法可以达到 Recall 79.68%,Precision 85.62% 和 F-measure 82.54% 的有竞争力结果。

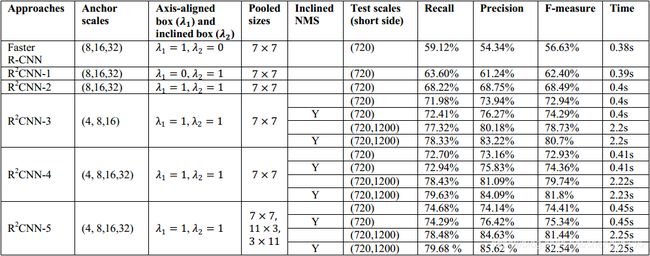

We conduct several experiments to confirm the effectiveness of our design. Table 1 summarizes the results of our models under different settings. We will compare the following models: Faster R-CNN [1], R2CNN-1, R2CNN-2, R2CNN-3, R2CNN-4, and R2CNN-5. We mainly focus on evaluating the influence of the axis-aligned box regression ( λ 1 ) (\lambda_{1}) (λ1) and the inclined box regression ( λ 2 ) (\lambda_{2}) (λ2), the influence of anchor scales and NMS strategy, and the impact of different pooled sizes of ROIPoolings. All these models are trained on the same dataset introduced in the last section.

我们进行了几次实验以确认我们的设计的有效性。表 1 总结了我们的模型在不同设置下的结果。我们将比较以下模型:Faster R-CNN [1]、R2CNN-1、R2CNN-2、R2CNN-3、R2CNN-4 和 R2CNN-5。我们主要关注评估轴对齐框回归 ( λ 1 ) (\lambda_{1}) (λ1) 和倾斜框回归 ( λ 2 ) (\lambda_{2}) (λ2) 的影响,anchor 尺寸和 NMS 策略的影响以及不同的 ROIPoolings 池化尺寸的影响。所有这些模型都是在上一节介绍的相同数据集上进行训练的。

We first perform single-scale testing with all models on ICDAR 2015. The test images keep the original size (width: 1280, height: 720) when performing the testing. We then do multi-scale testing following [30] on R2CNN-3, R2CNN-4, and R2CNN-5. With a trained model, we compute convolutional feature maps on an image pyramid, where the image’s short sides are s ∈ { 720 , 1200 } s \in \{720,1200\} s∈{720,1200}. The results of all our designs are better than the baseline Faster R-CNN.

我们首先在 ICDAR 2015 上对所有模型进行单尺度测试。测试图像在执行测试时保持原始尺寸 (宽度:1280,高度:720)。然后,我们使用 [30] 的策略对 R2CNN-3、R2CNN-4 和 R2CNN-5 进行多尺度测试。通过训练模型,我们计算图像金字塔上的卷积特征图,其中图像的短边是 s ∈ { 720 , 1200 } s \in \{720,1200\} s∈{720,1200}。我们所有设计的结果都比基线 Faster R-CNN 更好。

Axis-aligned box and inclined box. While Faster RCNN only regresses axis-aligned bounding boxes which is implemented by setting λ 1 = 1 \lambda_{1} = 1 λ1=1 and λ 2 = 0 \lambda_{2} = 0 λ2=0 in Equation (1), the detection outputs are axis-aligned boxes. Different from Faster RCNN, R2CNN-1 only regresses inclined boxes ( λ 1 = 0 \lambda_{1} = 0 λ1=0 and λ 2 = 1 \lambda_{2} = 1 λ2=1 in Equation (1)) and this leads to about 6% performance improvement over Faster R-CNN (F-measure: 62.40% vs. 56.63%). The reason is that the outputs of Faster R-CNN are axis-aligned boxes and the orientation information is ignored. R2CNN-2 regresses both the axis-aligned boxes that enclose the texts and the inclined boxes ( λ 1 = 1 \lambda_{1} = 1 λ1=1 and λ 2 = 1 \lambda_{2} = 1 λ2=1 in Equation (1)) and leads to another 6% performance improvement over R2CNN-1 (F-measure: 68.49% vs. 62.40%). This means that learning the additional axis-aligned box could help the detection of the inclined box.

Axis-aligned box and inclined box. 虽然 Faster RCNN 仅回归轴对齐边界框,通过在公式 (1) 中设置 λ 1 = 1 \lambda_{1} = 1 λ1=1 and λ 2 = 0 \lambda_{2} = 0 λ2=0 来实现,检测输出是轴对齐框。与 Faster RCNN 不同,R2CNN-1 仅回归倾斜的方框 ( λ 1 = 0 \lambda_{1} = 0 λ1=0 and λ 2 = 1 \lambda_{2} = 1 λ2=1 in Equation (1)),这导致比 Faster R-CNN 提高约 6% 的性能 (F-measure: 62.40% vs. 56.63%)。原因是 Faster R-CNN 的输出是轴对齐的框,忽略了方向信息。R2CNN-2 对包含文本的轴对齐框和倾斜框进行回归 ( λ 1 = 1 \lambda_{1} = 1 λ1=1 and λ 2 = 1 \lambda_{2} = 1 λ2=1 in Equation (1)) 并导致另外 6% 性能提升超过 R2CNN-1 (F-measure: 68.49% vs. 62.40%)。这意味着学习附加的轴对齐框可以帮助检测倾斜的框。

This means that learning the additional axis-aligned box could help the detection of the inclined box.

Anchor scales. R2CNN-3 and R2CNN-4 are designed to evaluate the influence of anchor scales on scene text detection. They should regress both the axis-aligned boxes and the inclined boxes ( λ 1 = 1 \lambda_{1} = 1 λ1=1 and λ 2 = 1 \lambda_{2} = 1 λ2=1 in Equation (1)). R2CNN-3 utilizes smaller anchor scales (4, 8, 16) compared to the original scales (8, 16, 32). R2CNN-4 adds a smaller anchor scale to the anchor scales and the anchor scales become (4, 8, 16, 32), which would generate 12 anchors in RPN. Results show that under single-scale test R2CNN-3 and R2CNN-4 have similar performance (F-measure: 72.94% vs. 72.93%), but they are both better than R2CNN-2 (F-measure: 68.49 %). This shows that small anchors could improve the scene text detection performance.

Anchor scales. R2CNN-3 和 R2CNN-4 用于评估 anchor 尺寸对场景文本检测的影响。它们应该回归轴对齐的框和倾斜的框 ( λ 1 = 1 \lambda_{1} = 1 λ1=1 and l a m b d a 2 = 1 lambda_{2} = 1 lambda2=1 in Equation (1))。与原始尺度 (8, 16, 32) 相比,R2CNN-3 使用较小的 anchor 尺寸 (4, 8, 16)。R2CNN-4 为 anchor 尺寸增加了较小的 anchor 尺寸,anchor 尺寸变为 (4, 8, 16, 32),这将在 RPN 中产生 12 个 anchor。结果显示,在单一尺度测试中,R2CNN-3 和 R2CNN-4 具有相似的性能 (F-measure: 72.94% vs. 72.93%),但它们都优于 R2CNN-2 (F-measure: 68.49 %)。这表明小 anchor 可以改善场景文本检测性能。

Under multi-scale test, R2CNN-4 is better than R2CNN-3 (F-measure: 79.74% vs. 78.73%). The reason is that scene texts can have more kinds of scales in the image pyramid under multi-scale test and R2CNN-4 with more anchor scales could detect scene texts of various sizes better than R2CNN-3.

在多尺度测试中,R2CNN-4 优于 R2CNN-3 (F-measure: 79.74% vs. 78.73%)。原因是场景文本在多尺度测试下可以在图像金字塔中具有更多种尺度,而具有更多 anchor 尺度的 R2CNN-4 可以比 R2CNN-3 更好地检测各种尺寸的场景文本。

Single pooled size vs. multiple pooled sizes. R2CNN-5 is supposed to evaluate the effect of multiple pooled sizes. As shown in Table 1, with three pooled sizes (7 × \times × 7, 11 × \times × 3, 3 × \times × 11), R2CNN-5 is better than R2CNN-4 with one pooled size (7 × \times × 7) (F-measure: 75.34% vs. 74.36% under single-scale test and inclined NMS, 82.54% vs. 81.8% under multi-scale test and inclined NMS). This confirm that utilizing more features in R2CNN is helpful for scene text detection.

Single pooled size vs. multiple pooled sizes. R2CNN-5 应该评估多个池化尺度的影响。如表 1 所示,具有三个池化尺度 (7 × \times × 7, 11 × \times × 3, 3 × \times × 11) 的 R2CNN-5 优于具有一个池化尺度 (7 × \times × 7) 的 R2CNN-4 (F-measure: 75.34% vs. 74.36% under single-scale test and inclined NMS, 82.54% vs. 81.8% under multi-scale test and inclined NMS)。这证实利用 R2CNN 中的更多特征有助于场景文本检测。

Normal NMS on axis-aligned boxes vs. inclined NMS on inclined boxes. Because we regress both the axis-aligned box and the inclined minimum area box and each axis-aligned box is associated with an inclined box, we compare the performance of normal NMS on axis-aligned boxes and the performance of inclined NMS on inclined boxes. We can see that inclined NMS with R2CNN-3, R2CNN-4 and R2CNN-5 under both single test and multi-scale test consistently perform than their counterparts.

Normal NMS on axis-aligned boxes vs. inclined NMS on inclined boxes. 因为我们回归轴对齐框和倾斜最小区域框并且每个轴对齐框与倾斜框相关联,我们比较轴对齐框上的普通 NMS 的性能和倾斜框上倾斜 NMS 的性能。我们可以看到,在单尺度测试和多尺度测试中,具有 R2CNN-3、R2CNN-4 和 R2CNN-5 的倾斜 NMS 始终比其对应的算法具有更好的性能。

inclined NMS 计算请参考 Arbitrary-Oriented Scene Text Detection via Rotation Proposals。

Table 1. Results of R2CNN under different settings on ICDAR 2015.

Test time. The test times in Table 1 are obtained when doing test on a Tesla K80 GPU. Under single-scale test, our method only increase little detection time compared to the Faster R-CNN baseline.

Test time. 表 1 中的测试时间是在 Tesla K80 GPU 上进行测试时获得的。在单一尺度测试中,与 Faster R-CNN baseline 相比,我们的方法仅增加很少的检测时间。

Comparisons with state-of-the-art. Table 2 shows the comparison of R2CNN with state-of-the-art results on ICDAR 2015 [21]. Here, R2CNN refers to R2CNN-5 with inclined NMS. We can see that our method can get competitive results of Recall 79.68%, Precision 85.62% and F-measure 82.54%.

Comparisons with state-of-the-art. 表 2 显示了在 ICDAR 2015 [21] 上 R2CNN 与最新结果的比较。这里,R2CNN 是指具有倾斜 NMS 的 R2CNN-5。我们可以看到我们的方法可以获得 Recall 79.68%, Precision 85.62% and F-measure 82.54% 的有竞争力的结果。

Table 2. Comparison with state-of-the-art on ICDAR2015.

As our approach can be considered as learning the inclined box based on the axis-aligned box, it can be easily adapted to other architectures, such as SSD [27] and YOLO [28].

由于我们的方法可以被认为是基于轴对齐框学习倾斜框,因此它可以很容易地被其他架构使用,例如 SSD [27] 和 YOLO [28]。

counterpart ['kaʊntəpɑːt]:n. 副本,配对物,极相似的人或物

Figure 5 demonstrates some detection results of our R2CNN on ICDAR 2015. We can see that our method can detect scene texts that have different orientations.

图 5 展示了我们的 R2CNN 在 ICDAR 2015 上的一些检测结果。我们可以看到我们的方法可以检测具有不同方向的场景文本。

Fig.5. Example detection results of our R2CNN on the ICDAR 2015 benchmark. The green boxes are the correct detection results. The red boxes are false positives. The dashed red boxes are false negatives.

B. ICDAR 2013

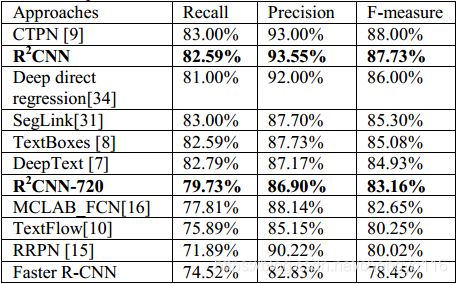

To evaluate our method’s adaptability, we conduct experiments on ICDAR 2013 [22]. ICDAR 2013 test dataset consists of 233 focused scene text images. The texts in the images are horizontal. As we can estimate both the axis-aligned box and the inclined box, we use the axis-aligned box as the output for ICDAR 2013.

为了评估我们方法的适应性,我们在 ICDAR 2013 上进行了实验 [22]。ICDAR 2013 测试数据集由 233 个专门的场景文本图像组成。图像中的文本是水平的。由于我们可以估计轴对齐框和倾斜框,我们使用轴对齐框作为 ICDAR 2013 的输出。

We conduct experiments on Faster R-CNN model and R2CNN-5 model trained in last section for ICDAR 2015. Table 3 shows our results and the state-of-the-art results. Our approach could reach the result of F-measure 87.73%. As the training data we used does not include single characters but single characters should be detected in ICDAR 2013, we think our method could achieve even better results when single characters are used for training our model.

We conduct experiments on Faster R-CNN model and R2CNN-5 model trained in last section for ICDAR 2015. 表 3 显示了我们的结果和最先进的结果。我们的方法可以达到 F-measure 87.73% 的结果。由于我们使用的训练数据不包括单个字符,但在 ICDAR 2013 中应该检测到单个字符,我们认为当使用单个字符训练我们的模型时,我们的方法可以获得更好的结果。

Table 3. Comparison with state-of-the-art on ICDAR2013.

To compare our method with the Faster R-CNN baseline, we also do a single-scale test in which the short side of the image is set to 720 pixels. In Table 3, both Faster R-CNN and R2CNN-720 adopt this testing scale. The result is that R2CNN-720 is much better than the Faster R-CNN baseline (F-measure: 83.16 % vs. 78.45%). This means our design is also useful for horizontal text detection.

为了将我们的方法与 Faster R-CNN baseline 进行比较,我们还进行了单尺度测试,其中图像的短边设置为 720 pixels。在表 3 中,Faster R-CNN and R2CNN-720 均采用该测试尺度。结果是 R2CNN-720 比 Faster R-CNN 基线好得多 (F-measure: 83.16 % vs. 78.45%)。这意味着我们的设计对水平文本检测也很有用。

adaptability [ə,dæptə'bɪlətɪ]:n. 适应性,可变性,适合性

baseline ['beɪs.laɪn];n.基线,底线,垒线,形态基准

horizontal [hɒrɪ'zɒnt(ə)l]:adj. 水平的,地平线的,同一阶层的 n. 水平线,水平面,水平位置

Figure 6 shows some detection results on ICDAR 2013. We can see R2CNN could detect horizontal focused scene texts well. The missed text in the figure is a single character.

图 6 显示了 ICDAR 2013 的一些检测结果。我们可以看到 R2CNN 可以很好地检测水平的场景文本。图中遗漏的文字是一个字符。

Fig.6. Example detection results of our R2CNN on the ICDAR 2013 benchmark. The green bounding boxes are correct detections. The red boxes are false positives. The red dashed boxes are false negatives.

5. Conclusion

In this paper, we introduce a Rotational Region CNN (R2CNN) for detecting scene texts in arbitrary orientations. The framework is based on Faster R-CNN architecture [1]. The RPN is used to generate axis-aligned region proposals. And then several ROIPoolings with different pooled sizes (7 × \times × 7, 11 × \times × 3, 3 × \times × 11) are performed on the proposal and the concatenated pooled features are used for classifying the proposal, estimating both the axis-aligned box and the inclined minimum area box. After that, inclined NMS is performed on the inclined boxes. Evaluation shows that our approach can achieve competitive results on ICDAR2015 and ICDAR2013.

在本文中,我们介绍了一个 Rotational Region CNN (R2CNN),用于检测任意方向的场景文本。该框架基于 Faster R-CNN 架构[1]。RPN 用于生成轴对齐候选区域。然后对候选区域执行几个具有不同池化尺度的 ROIPoolings (7 × \times × 7, 11 × \times × 3, 3 × \times × 11),并使用连接的池化特征对候选区域进行分类,同时估算轴对齐框和倾斜最小区域框。之后,在倾斜的框上进行倾斜的 NMS。评估表明,我们的方法可以在 ICDAR2015 和 ICDAR2013 上获得有竞争力的结果。

网络使用融合的特征去预测三个结果。

The method can be considered as learning the inclined box based on the axis-aligned box and it can be easily adapted to other general object detection frameworks such as SSD [27] and YOLO [28] to detect object with orientations.

该方法可以被认为是基于轴对齐框学习倾斜框,并且可以容易地适应其他一般物体检测框架,例如 SSD [27] 和 YOLO [28],以检测具有方向的物体。

References

[1] Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks

[8] TextBoxes: A Fast Text Detector with a Single Deep Neural Network

[15] Arbitrary-Oriented Scene Text Detection via Rotation Proposals

[21] ICDAR 2015 Competition on Robust Reading

[22] ICDAR 2013 robust reading competition

[23] Fast R-CNN

[30] Deep Residual Learning for Image Recognition

[34] Deep Direct Regression for Multi-Oriented Scene Text Detection

R2CNN: Rotational Region CNN Based on FPN (Tensorflow)

https://github.com/yangxue0827/R2CNN_FPN_Tensorflow

Rotational region detection based on Faster-RCNN

https://github.com/DetectionTeamUCAS/R2CNN_Faster-RCNN_Tensorflow

R2CNN++: Multi-Dimensional Attention Based Rotation Invariant Detector with Robust Anchor Strategy

https://github.com/DetectionTeamUCAS/R2CNN-Plus-Plus_Tensorflow

Skew IoU Computation / Skew Non-Maximum Suppression (Skew-NMS) - Arbitrary-Oriented Scene Text Detection via Rotation Proposals

https://github.com/mjq11302010044/RRPN

https://github.com/mjq11302010044/RRPN_pytorch

https://github.com/DetectionTeamUCAS/RRPN_Faster-RCNN_Tensorflow

WORDBOOK

KEY POINTS

Single Shot Text Detector with Regional Attention

https://github.com/BestSonny/SSTD

TextBoxes: A Fast Text Detector with a Single Deep Neural Network

https://github.com/MhLiao/TextBoxes

Detecting Oriented Text in Natural Images by Linking Segments

https://github.com/bgshih/seglink

EAST: An Efficient and Accurate Scene Text Detector

https://github.com/zxytim/EAST

Detecting Curve Text in the Wild: New Dataset and New Solution

https://github.com/Yuliang-Liu/Curve-Text-Detector

Arbitrary-Oriented Scene Text Detection via Rotation Proposals

https://github.com/mjq11302010044/RRPN

Rotation-sensitive Regression for Oriented Scene Text Detection

https://github.com/MhLiao/RRD

Multi-Oriented Text Detection With Fully Convolutional Networks

https://github.com/stupidZZ/FCN_Text

PixelLink: Detecting Scene Text via Instance Segmentation

https://github.com/ZJULearning/pixel_link

Detecting Text in Natural Image with Connectionist Text Proposal Network

https://github.com/tianzhi0549/CTPN

Geometry-Aware Scene Text Detection with Instance Transformation Network

https://github.com/zlmzju/itn

An End-to-End Trainable Neural Network for Image-Based Sequence Recognition and Its Application to Scene Text Recognition

https://github.com/bgshih/crnn

Synthetic Data for Text Localisation in Natural Images

https://github.com/ankush-me/SynthText

SEE: Towards Semi-Supervised End-to-End Scene Text Recognition

https://github.com/Bartzi/see

Deep TextSpotter: An End-to-End Trainable Scene Text Localization and Recognition Framework

https://github.com/MichalBusta/DeepTextSpotter

Verisimilar Image Synthesis for Accurate Detection and Recognition of Texts in Scenes

https://github.com/fnzhan/Verisimilar-Image-Synthesis-for-Accurate-Detection-and-Recognition-of-Texts-in-Scenes

Convolutional Neural Networks for Direct Text Deblurring

http://www.fit.vutbr.cz/~ihradis/CNN-Deblur/