HMM学习最佳范例笔记

HMM学习最佳范例

http://www.52nlp.cn/hmm-learn-best-practices-four-hidden-markov-models

一 、定义

一个隐马尔科夫模型是一个三元组(pi, A, B)。

pi:初始化概率向量;

A:状态转移矩阵;

B:混淆矩阵;二、应用

a) 评估(Evaluation)

b) 解码( Decoding)

C)学习(Learning)三、前向算法(评估观察序列概率)

3.1穷举思想

3.2递归思想

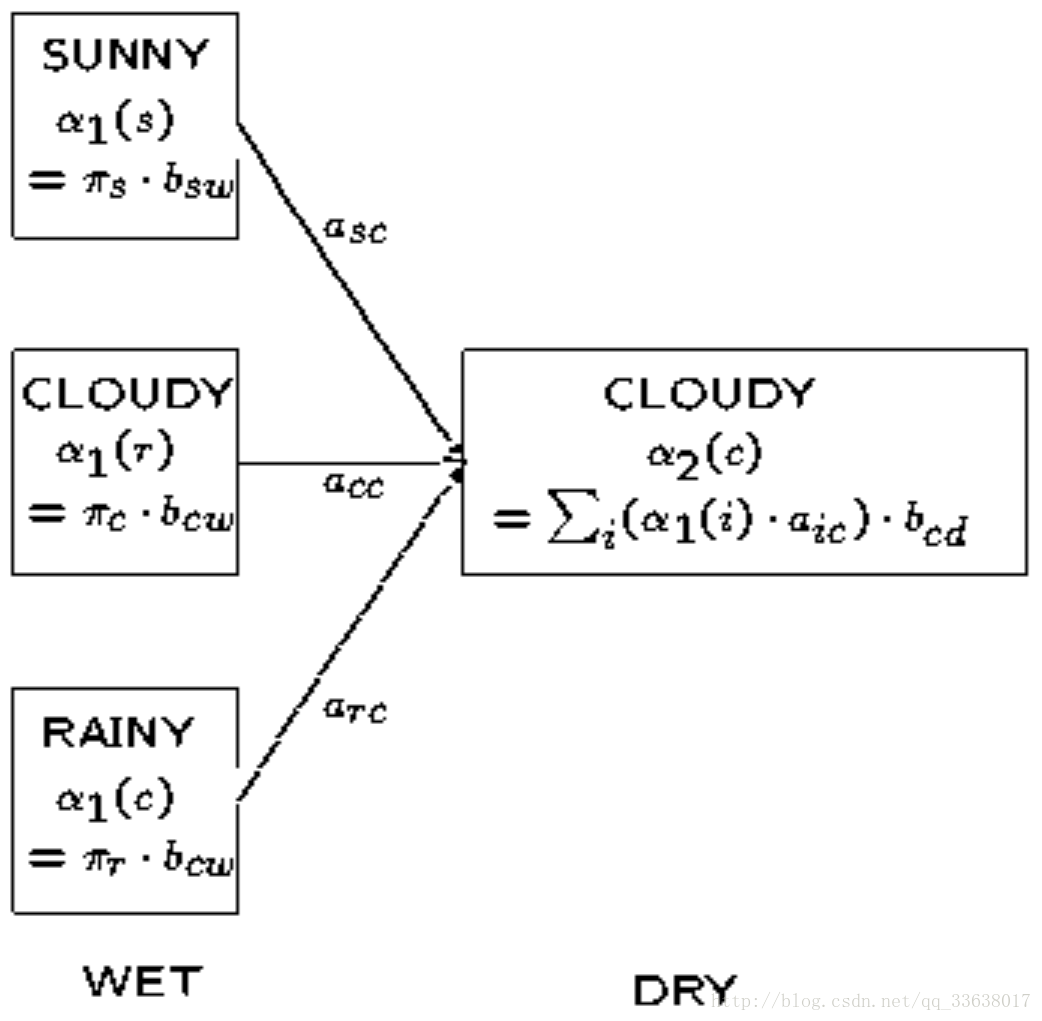

定义t时刻位于状态j的局部概率为at(j)——这个局部概率计算如下:

at(j)= Pr( 观察状态 | 隐藏状态j ) x Pr(t时刻所有指向j状态的路径)

对于这些最终局部概率求和等价于对于网格中所有可能的路径概率求和,也就求出了给定隐马尔科夫模型(HMM)后的观察序列概率已知:

观察序列:k1,k2...

初始:pi,A,B

计算:观察序列概率

步骤:

a)计算t=1时刻所有状态的概率

b)计算t=2,... ,T时,对于每个状态的局部概率

c)计算观察序列的概率:观察序列的概率等于T时刻所有局部概率之和四、维特比算法(解码隐藏状态)

4.1穷举思想

我们可以通过列出所有可能的隐藏状态序列并且计算对于每个组合相应的观察序列的概率来找到最可能的隐藏状态序列。最可能的隐藏状态序列是使下面这个概率最大的组合:

Pr(观察序列|隐藏状态的组合)4.2递归思想

对于网格中的每一个中间及终止状态,都有一个到达该状态的最可能路径。

因而delta(i,t)是t时刻到达状态i的所有序列概率中最大的概率,而局部最佳路径是得到此最大概率的隐藏状态序列。对于每一个可能的i和t值来说,这一类概率(及局部路径)均存在。实现:

a)计算t=1时刻的局部概率

b)计算t>1时刻的局部概率 c)选择最大的概率及其路径五、后向算法

步骤:

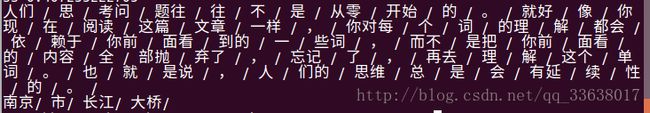

a)初始化,令t=T时刻所有状态的后向变量为1

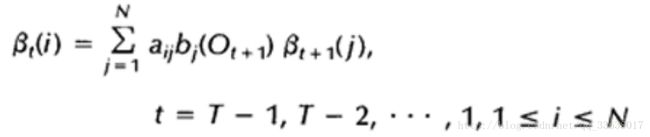

b)递归计算每个时间点,t=T-1,T-2,…,1时的后向变量

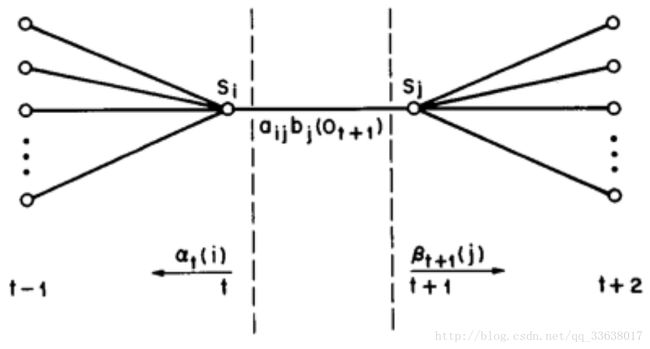

六、前向-后向算法(学习参数pi,A,B)

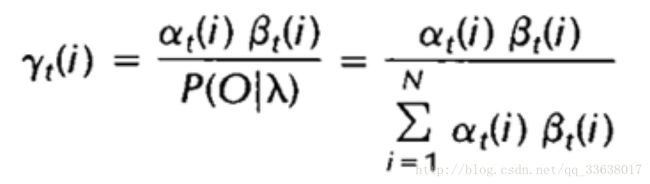

定义t时刻位于隐藏状态Si的概率变量为:

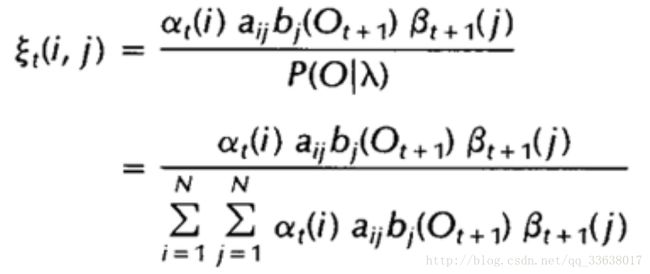

给定观察序列O及隐马尔科夫模型lamda,定义t时刻位于隐藏状态Si及t+1时刻位于隐藏状态Sj的概率变量为:

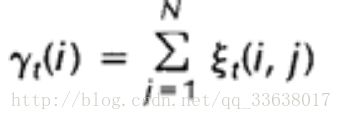

而上述定义的两个变量间也存在着如下关系:

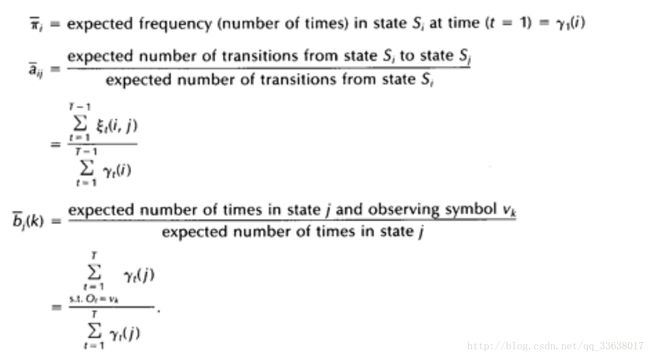

参数学习:

利用上述两个变量及其期望值来重新估计隐马尔科夫模型(HMM)的参数pi,A及B,直至收敛

七、实例一(分词)

转载 http://blog.csdn.net/orlandowww/article/details/52706135

a)模型训练

# -*- encoding: utf-8 -*-

import sys

import os

reload(sys)

sys.setdefaultencoding('utf-8')

# 'trainCorpus.txt_utf8'为人民日报已经人工分词的预料,29万多条句子

A_dic = {}

B_dic = {}

Count_dic = {}

Pi_dic = {}

word_set = set()

state_list = ['B','M','E','S']

line_num = -1

INPUT_DATA = "trainCorpus.txt_utf8"

PROB_START = "trainHMM/prob_start.py" #初始状态概率

PROB_EMIT = "trainHMM/prob_emit.py" #发射概率

PROB_TRANS = "trainHMM/prob_trans.py" #转移概率

def init(): #初始化字典

for state in state_list:

A_dic[state] = {}

for state1 in state_list:

A_dic[state][state1] = 0.0

for state in state_list:

Pi_dic[state] = 0.0

B_dic[state] = {}

Count_dic[state] = 0

def getList(input_str): #输入词语,输出状态

outpout_str = []

if len(input_str) == 1:

outpout_str.append('S')

elif len(input_str) == 2:

outpout_str = ['B','E']

else:

M_num = len(input_str) -2

M_list = ['M'] * M_num

outpout_str.append('B')

outpout_str.extend(M_list) #把M_list中的'M'分别添加进去

outpout_str.append('E')

return outpout_str

def Output(): #输出模型的三个参数:初始概率+转移概率+发射概率

start_fp = file(PROB_START,'w')

emit_fp = file(PROB_EMIT,'w')

trans_fp = file(PROB_TRANS,'w')

print "len(word_set) = %s " % (len(word_set))

for key in Pi_dic: #状态的初始概率

Pi_dic[key] = Pi_dic[key] * 1.0 / line_num

print >>start_fp,Pi_dic

for key in A_dic: #状态转移概率

for key1 in A_dic[key]:

A_dic[key][key1] = A_dic[key][key1] / Count_dic[key]

print >>trans_fp,A_dic

for key in B_dic: #发射概率(状态->词语的条件概率)

for word in B_dic[key]:

B_dic[key][word] = B_dic[key][word] / Count_dic[key]

print >>emit_fp,B_dic

start_fp.close()

emit_fp.close()

trans_fp.close()

def main():

ifp = file(INPUT_DATA)

init()

global word_set #初始是set()

global line_num #初始是-1

for line in ifp:

line_num += 1

if line_num % 10000 == 0:

print line_num

line = line.strip()

if not line:continue

line = line.decode("utf-8","ignore") #设置为ignore,会忽略非法字符

word_list = []

for i in range(len(line)):

if line[i] == " ":continue

word_list.append(line[i])

word_set = word_set | set(word_list) #训练预料库中所有字的集合

lineArr = line.split(" ")

line_state = []

for item in lineArr:

line_state.extend(getList(item)) #一句话对应一行连续的状态

if len(word_list) != len(line_state):

print >> sys.stderr,"[line_num = %d][line = %s]" % (line_num, line.endoce("utf-8",'ignore'))

else:

for i in range(len(line_state)):

if i == 0:

Pi_dic[line_state[0]] += 1 #Pi_dic记录句子第一个字的状态,用于计算初始状态概率

Count_dic[line_state[0]] += 1 #记录每一个状态的出现次数

else:

A_dic[line_state[i-1]][line_state[i]] += 1 #用于计算转移概率

Count_dic[line_state[i]] += 1

if not B_dic[line_state[i]].has_key(word_list[i]):

B_dic[line_state[i]][word_list[i]] = 0.0

else:

B_dic[line_state[i]][word_list[i]] += 1 #用于计算发射概率

Output()

ifp.close()

if __name__ == "__main__":

main()b)分词

# -*- encoding: utf-8 -*-

import sys

import os

reload(sys)

sys.setdefaultencoding('utf-8')

# 'trainCorpus.txt_utf8'为人民日报已经人工分词的预料,29万多条句子

def load_model(f_name):

ifp = file(f_name, 'rb')

return eval(ifp.read()) #eval参数是一个字符串, 可以把这个字符串当成表达式来求值,

prob_start = load_model("trainHMM/prob_start.py")

prob_trans = load_model("trainHMM/prob_trans.py")

prob_emit = load_model("trainHMM/prob_emit.py")

def viterbi(obs, states, start_p, trans_p, emit_p): #维特比算法(一种递归算法)

V = [{}]

path = {}

for y in states: #初始值

V[0][y] = start_p[y] * emit_p[y].get(obs[0],0) #在位置0,以y状态为末尾的状态序列的最大概率

path[y] = [y]

for t in range(1,len(obs)):

V.append({})

newpath = {}

for y in states: #从y0 -> y状态的递归

(prob, state) = max([(V[t-1][y0] * trans_p[y0].get(y,0) * emit_p[y].get(obs[t],0) ,y0) for y0 in states if V[t-1][y0]>0])

V[t][y] =prob

newpath[y] = path[state] + [y]

path = newpath #记录状态序列

(prob, state) = max([(V[len(obs) - 1][y], y) for y in states]) #在最后一个位置,以y状态为末尾的状态序列的最大概率

return (prob, path[state]) #返回概率和状态序列

def cut(sentence):

prob, pos_list = viterbi(sentence,('B','M','E','S'), prob_start, prob_trans, prob_emit)

return (prob,pos_list)

if __name__ == "__main__":

test_str = u"新华网驻东京记者报道"

prob,pos_list = cut(test_str)

print test_str

print pos_list八、实例二(lstm结合维特比分词)

转载自

https://github.com/yongyehuang/Tensorflow-Tutorial/blob/master/Tutorial_6%20-%20Bi-directional%20LSTM%20for%20sequence%20labeling%20(Chinese%20segmentation).ipynb

# -*- encoding: utf-8 -*-

import sys

import os

reload(sys)

sys.setdefaultencoding('utf-8')

import tensorflow as tf

config = tf.ConfigProto()

config.gpu_options.allow_growth = True

sess = tf.Session(config=config)

from tensorflow.contrib import rnn

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import re

from tqdm import tqdm

import time

# 以字符串的形式读入所有数据

with open('data/msr_train.txt', 'rb') as inp:

texts = inp.read().decode('gbk')

sentences = texts.split('\r\n') # 根据换行切分

# 将不规范的内容(如每行的开头)去掉

def clean(s):

if u'“/s' not in s: # 句子中间的引号不应去掉

return s.replace(u' ”/s', '')

elif u'”/s' not in s:

return s.replace(u'“/s ', '')

elif u'‘/s' not in s:

return s.replace(u' ’/s', '')

elif u'’/s' not in s:

return s.replace(u'‘/s ', '')

else:

return s

texts = u''.join(map(clean, sentences)) # 把所有的词拼接起来

print 'Length of texts is %d' % len(texts)

print 'Example of texts: \n', texts[:300]

def get_Xy(sentence):

"""将 sentence 处理成 [word1, w2, ..wn], [tag1, t2, ...tn]"""

words_tags = re.findall('(.)/(.)', sentence)

if words_tags:

words_tags = np.asarray(words_tags)

words = words_tags[:, 0]

tags = words_tags[:, 1]

return words, tags # 所有的字和tag分别存为 data / label

return None

datas = list()

labels = list()

print 'Start creating words and tags data ...'

for sentence in tqdm(iter(sentences)):

result = get_Xy(sentence)

if result:

datas.append(result[0])

labels.append(result[1])

print 'Length of datas is %d' % len(datas)

print 'Example of datas: ', datas[0]

print 'Example of labels:', labels[0]

'''

df_data = pd.DataFrame({'words': datas, 'tags': labels}, index=range(len(datas)))

# 句子长度

df_data['sentence_len'] = df_data['words'].apply(lambda words: len(words))

df_data.head(2)

# 句子长度的分布

import matplotlib.pyplot as plt

df_data['sentence_len'].hist(bins=100)

plt.xlim(0, 100)

plt.xlabel('sentence_length')

plt.ylabel('sentence_num')

plt.title('Distribution of the Length of Sentence')

plt.show()

# 1.用 chain(*lists) 函数把多个list拼接起来

from itertools import chain

all_words = list(chain(*df_data['words'].values))

# 2.统计所有 word

sr_allwords = pd.Series(all_words)

sr_allwords = sr_allwords.value_counts()

set_words = sr_allwords.index

set_ids = range(1, len(set_words)+1) # 注意从1开始,因为我们准备把0作为填充值

tags = [ 'x', 's', 'b', 'm', 'e']

tag_ids = range(len(tags))

# 3. 构建 words 和 tags 都转为数值 id 的映射(使用 Series 比 dict 更加方便)

word2id = pd.Series(set_ids, index=set_words)

id2word = pd.Series(set_words, index=set_ids)

tag2id = pd.Series(tag_ids, index=tags)

id2tag = pd.Series(tags, index=tag_ids)

vocab_size = len(set_words)

print 'vocab_size={}'.format(vocab_size)

max_len = 32

def X_padding(words):

"""把 words 转为 id 形式,并自动补全位 max_len 长度。"""

ids = list(word2id[words])

if len(ids) >= max_len: # 长则弃掉

return ids[:max_len]

ids.extend([0]*(max_len-len(ids))) # 短则补全

return ids

def y_padding(tags):

"""把 tags 转为 id 形式, 并自动补全位 max_len 长度。"""

ids = list(tag2id[tags])

if len(ids) >= max_len: # 长则弃掉

return ids[:max_len]

ids.extend([0]*(max_len-len(ids))) # 短则补全

return ids

df_data['X'] = df_data['words'].apply(X_padding)

df_data['y'] = df_data['tags'].apply(y_padding)

# 最后得到了所有的数据

X = np.asarray(list(df_data['X'].values))

y = np.asarray(list(df_data['y'].values))

print 'X.shape={}, y.shape={}'.format(X.shape, y.shape)

print 'Example of words: ', df_data['words'].values[0]

print 'Example of X: ', X[0]

print 'Example of tags: ', df_data['tags'].values[0]

print 'Example of y: ', y[0]

# 保存数据

import pickle

import os

if not os.path.exists('data/'):

os.makedirs('data/')

with open('data/data.pkl', 'wb') as outp:

pickle.dump(X, outp)

pickle.dump(y, outp)

pickle.dump(word2id, outp)

pickle.dump(id2word, outp)

pickle.dump(tag2id, outp)

pickle.dump(id2tag, outp)

print '** Finished saving the data.'

'''

# 导入数据

import pickle

with open('data/data.pkl', 'rb') as inp:

X = pickle.load(inp)

y = pickle.load(inp)

word2id = pickle.load(inp)

id2word = pickle.load(inp)

tag2id = pickle.load(inp)

id2tag = pickle.load(inp)

'''

# 划分测试集/训练集/验证集

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

X_train, X_valid, y_train, y_valid = train_test_split(X_train, y_train, test_size=0.2, random_state=42)

print 'X_train.shape={}, y_train.shape={}; \nX_valid.shape={}, y_valid.shape={};\nX_test.shape={}, y_test.shape={}'.format(

X_train.shape, y_train.shape, X_valid.shape, y_valid.shape, X_test.shape, y_test.shape)

# ** 3.build the data generator

class BatchGenerator(object):

""" Construct a Data generator. The input X, y should be ndarray or list like type.

Example:

Data_train = BatchGenerator(X=X_train_all, y=y_train_all, shuffle=False)

Data_test = BatchGenerator(X=X_test_all, y=y_test_all, shuffle=False)

X = Data_train.X

y = Data_train.y

or:

X_batch, y_batch = Data_train.next_batch(batch_size)

"""

def __init__(self, X, y, shuffle=False):

if type(X) != np.ndarray:

X = np.asarray(X)

if type(y) != np.ndarray:

y = np.asarray(y)

self._X = X

self._y = y

self._epochs_completed = 0

self._index_in_epoch = 0

self._number_examples = self._X.shape[0]

self._shuffle = shuffle

if self._shuffle:

new_index = np.random.permutation(self._number_examples)

self._X = self._X[new_index]

self._y = self._y[new_index]

@property

def X(self):

return self._X

@property

def y(self):

return self._y

@property

def num_examples(self):

return self._number_examples

@property

def epochs_completed(self):

return self._epochs_completed

def next_batch(self, batch_size):

""" Return the next 'batch_size' examples from this data set."""

start = self._index_in_epoch

self._index_in_epoch += batch_size

if self._index_in_epoch > self._number_examples:

# finished epoch

self._epochs_completed += 1

# Shuffle the data

if self._shuffle:

new_index = np.random.permutation(self._number_examples)

self._X = self._X[new_index]

self._y = self._y[new_index]

start = 0

self._index_in_epoch = batch_size

assert batch_size <= self._number_examples

end = self._index_in_epoch

return self._X[start:end], self._y[start:end]

print 'Creating the data generator ...'

data_train = BatchGenerator(X_train, y_train, shuffle=True)

data_valid = BatchGenerator(X_valid, y_valid, shuffle=False)

data_test = BatchGenerator(X_test, y_test, shuffle=False)

print 'Finished creating the data generator.'

# For Chinese word segmentation.

'''

# ##################### config ######################

decay = 0.85

max_epoch = 5

max_max_epoch = 10

timestep_size = max_len = 32 # 句子长度

vocab_size = 5159 # 样本中不同字的个数+1(padding 0),根据处理数据的时候得到

input_size = embedding_size = 64 # 字向量长度

class_num = 5

hidden_size = 128 # 隐含层节点数

layer_num = 2 # bi-lstm 层数

max_grad_norm = 5.0 # 最大梯度(超过此值的梯度将被裁剪)

lr = tf.placeholder(tf.float32, [])

keep_prob = tf.placeholder(tf.float32, [])

batch_size = tf.placeholder(tf.int32, []) # 注意类型必须为 tf.int32

model_save_path = 'ckpt/bi-lstm.ckpt' # 模型保存位置

with tf.variable_scope('embedding'):

embedding = tf.get_variable("embedding", [vocab_size, embedding_size], dtype=tf.float32)

def weight_variable(shape):

"""Create a weight variable with appropriate initialization."""

initial = tf.truncated_normal(shape, stddev=0.1)

return tf.Variable(initial)

def bias_variable(shape):

"""Create a bias variable with appropriate initialization."""

initial = tf.constant(0.1, shape=shape)

return tf.Variable(initial)

def lstm_cell():

cell = rnn.LSTMCell(hidden_size, reuse=tf.get_variable_scope().reuse)

return rnn.DropoutWrapper(cell, output_keep_prob=keep_prob)

def bi_lstm(X_inputs):

"""build the bi-LSTMs network. Return the y_pred"""

# X_inputs.shape = [batchsize, timestep_size] -> inputs.shape = [batchsize, timestep_size, embedding_size]

inputs = tf.nn.embedding_lookup(embedding, X_inputs)

# ** 1.构建前向后向多层 LSTM

cell_fw = rnn.MultiRNNCell([lstm_cell() for _ in range(layer_num)], state_is_tuple=True)

cell_bw = rnn.MultiRNNCell([lstm_cell() for _ in range(layer_num)], state_is_tuple=True)

# ** 2.初始状态

initial_state_fw = cell_fw.zero_state(batch_size, tf.float32)

initial_state_bw = cell_bw.zero_state(batch_size, tf.float32)

# 下面两部分是等价的

# **************************************************************

# ** 把 inputs 处理成 rnn.static_bidirectional_rnn 的要求形式

# ** 文档说明

# inputs: A length T list of inputs, each a tensor of shape

# [batch_size, input_size], or a nested tuple of such elements.

# *************************************************************

# Unstack to get a list of 'n_steps' tensors of shape (batch_size, n_input)

# inputs.shape = [batchsize, timestep_size, embedding_size] -> timestep_size tensor, each_tensor.shape = [batchsize, embedding_size]

# inputs = tf.unstack(inputs, timestep_size, 1)

# ** 3.bi-lstm 计算(tf封装) 一般采用下面 static_bidirectional_rnn 函数调用。

# 但是为了理解计算的细节,所以把后面的这段代码进行展开自己实现了一遍。

# ***********************************************************

# ***********************************************************

# ** 3. bi-lstm 计算(展开)

with tf.variable_scope('bidirectional_rnn'):

# *** 下面,两个网络是分别计算 output 和 state

# Forward direction

outputs_fw = list()

state_fw = initial_state_fw

with tf.variable_scope('fw'):

for timestep in range(timestep_size):

if timestep > 0:

tf.get_variable_scope().reuse_variables()

(output_fw, state_fw) = cell_fw(inputs[:, timestep, :], state_fw)

outputs_fw.append(output_fw)

# backward direction

outputs_bw = list()

state_bw = initial_state_bw

with tf.variable_scope('bw') as bw_scope:

inputs = tf.reverse(inputs, [1])

for timestep in range(timestep_size):

if timestep > 0:

tf.get_variable_scope().reuse_variables()

(output_bw, state_bw) = cell_bw(inputs[:, timestep, :], state_bw)

outputs_bw.append(output_bw)

# *** 然后把 output_bw 在 timestep 维度进行翻转

# outputs_bw.shape = [timestep_size, batch_size, hidden_size]

outputs_bw = tf.reverse(outputs_bw, [0])

# 把两个oupputs 拼成 [timestep_size, batch_size, hidden_size*2]

output = tf.concat([outputs_fw, outputs_bw], 2)

output = tf.transpose(output, perm=[1,0,2])

output = tf.reshape(output, [-1, hidden_size*2])

# ***********************************************************

return output # [-1, hidden_size*2]

with tf.variable_scope('Inputs'):

X_inputs = tf.placeholder(tf.int32, [None, timestep_size], name='X_input')

y_inputs = tf.placeholder(tf.int32, [None, timestep_size], name='y_input')

bilstm_output = bi_lstm(X_inputs)

with tf.variable_scope('outputs'):

softmax_w = weight_variable([hidden_size * 2, class_num])

softmax_b = bias_variable([class_num])

y_pred = tf.matmul(bilstm_output, softmax_w) + softmax_b

# adding extra statistics to monitor

# y_inputs.shape = [batch_size, timestep_size]

correct_prediction = tf.equal(tf.cast(tf.argmax(y_pred, 1), tf.int32), tf.reshape(y_inputs, [-1]))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

cost = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(labels = tf.reshape(y_inputs, [-1]), logits = y_pred))

# ***** 优化求解 *******

tvars = tf.trainable_variables() # 获取模型的所有参数

grads, _ = tf.clip_by_global_norm(tf.gradients(cost, tvars), max_grad_norm) # 获取损失函数对于每个参数的梯度

optimizer = tf.train.AdamOptimizer(learning_rate=lr) # 优化器

# 梯度下降计算

train_op = optimizer.apply_gradients( zip(grads, tvars),

global_step=tf.contrib.framework.get_or_create_global_step())

print 'Finished creating the bi-lstm model.'

def test_epoch(dataset):

"""Testing or valid."""

_batch_size = 500

fetches = [accuracy, cost]

_y = dataset.y

data_size = _y.shape[0]

batch_num = int(data_size / _batch_size)

start_time = time.time()

_costs = 0.0

_accs = 0.0

for i in xrange(batch_num):

X_batch, y_batch = dataset.next_batch(_batch_size)

feed_dict = {X_inputs:X_batch, y_inputs:y_batch, lr:1e-5, batch_size:_batch_size, keep_prob:1.0}

_acc, _cost = sess.run(fetches, feed_dict)

_accs += _acc

_costs += _cost

mean_acc= _accs / batch_num

mean_cost = _costs / batch_num

return mean_acc, mean_cost

sess.run(tf.global_variables_initializer())

tr_batch_size = 128

max_max_epoch = 6

display_num = 5 # 每个 epoch 显示是个结果

'''

tr_batch_num = int(data_train.y.shape[0] / tr_batch_size) # 每个 epoch 中包含的 batch 数

display_batch = int(tr_batch_num / display_num) # 每训练 display_batch 之后输出一次

saver = tf.train.Saver(max_to_keep=10) # 最多保存的模型数量

for epoch in xrange(max_max_epoch):

_lr = 1e-4

if epoch > max_epoch:

_lr = _lr * ((decay) ** (epoch - max_epoch))

print 'EPOCH %d, lr=%g' % (epoch+1, _lr)

start_time = time.time()

_costs = 0.0

_accs = 0.0

show_accs = 0.0

show_costs = 0.0

for batch in xrange(tr_batch_num):

fetches = [accuracy, cost, train_op]

X_batch, y_batch = data_train.next_batch(tr_batch_size)

feed_dict = {X_inputs:X_batch, y_inputs:y_batch, lr:_lr, batch_size:tr_batch_size, keep_prob:0.5}

_acc, _cost, _ = sess.run(fetches, feed_dict) # the cost is the mean cost of one batch

_accs += _acc

_costs += _cost

show_accs += _acc

show_costs += _cost

if (batch + 1) % display_batch == 0:

valid_acc, valid_cost = test_epoch(data_valid) # valid

print '\ttraining acc=%g, cost=%g; valid acc= %g, cost=%g ' % (show_accs / display_batch,

show_costs / display_batch, valid_acc, valid_cost)

show_accs = 0.0

show_costs = 0.0

mean_acc = _accs / tr_batch_num

mean_cost = _costs / tr_batch_num

if (epoch + 1) % 3 == 0: # 每 3 个 epoch 保存一次模型

save_path = saver.save(sess, model_save_path, global_step=(epoch+1))

print 'the save path is ', save_path

print '\ttraining %d, acc=%g, cost=%g ' % (data_train.y.shape[0], mean_acc, mean_cost)

print 'Epoch training %d, acc=%g, cost=%g, speed=%g s/epoch' % (data_train.y.shape[0], mean_acc, mean_cost, time.time()-start_time)

# testing

print '**TEST RESULT:'

test_acc, test_cost = test_epoch(data_test)

print '**Test %d, acc=%g, cost=%g' % (data_test.y.shape[0], test_acc, test_cost)

'''

# ** 导入模型

saver = tf.train.Saver()

best_model_path = 'ckpt/bi-lstm.ckpt-6'

saver.restore(sess, best_model_path)

# 利用 labels(即状态序列)来统计转移概率

# 因为状态数比较少,这里用 dict={'I_tI_{t+1}':p} 来实现

# A统计状态转移的频数

A = {

'sb':0,

'ss':0,

'be':0,

'bm':0,

'me':0,

'mm':0,

'eb':0,

'es':0

}

# zy 表示转移概率矩阵

zy = dict()

for label in labels:

for t in xrange(len(label) - 1):

key = label[t] + label[t+1]

A[key] += 1.0

zy['sb'] = A['sb'] / (A['sb'] + A['ss'])

zy['ss'] = 1.0 - zy['sb']

zy['be'] = A['be'] / (A['be'] + A['bm'])

zy['bm'] = 1.0 - zy['be']

zy['me'] = A['me'] / (A['me'] + A['mm'])

zy['mm'] = 1.0 - zy['me']

zy['eb'] = A['eb'] / (A['eb'] + A['es'])

zy['es'] = 1.0 - zy['eb']

keys = sorted(zy.keys())

print 'the transition probability: '

for key in keys:

print key, zy[key]

zy = {i:np.log(zy[i]) for i in zy.keys()}

def viterbi(nodes):

"""

维特比译码:除了第一层以外,每一层有4个节点。

计算当前层(第一层不需要计算)四个节点的最短路径:

对于本层的每一个节点,计算出路径来自上一层的各个节点的新的路径长度(概率)。保留最大值(最短路径)。

上一层每个节点的路径保存在 paths 中。计算本层的时候,先用paths_ 暂存,然后把本层的最大路径保存到 paths 中。

paths 采用字典的形式保存(路径:路径长度)。

一直计算到最后一层,得到四条路径,将长度最短(概率值最大的路径返回)

"""

paths = {'b': nodes[0]['b'], 's':nodes[0]['s']} # 第一层,只有两个节点

for layer in xrange(1, len(nodes)): # 后面的每一层

paths_ = paths.copy() # 先保存上一层的路径

# node_now 为本层节点, node_last 为上层节点

paths = {} # 清空 path

for node_now in nodes[layer].keys():

# 对于本层的每个节点,找出最短路径

sub_paths = {}

# 上一层的每个节点到本层节点的连接

for path_last in paths_.keys():

if path_last[-1] + node_now in zy.keys(): # 若转移概率不为 0

sub_paths[path_last + node_now] = paths_[path_last] + nodes[layer][node_now] + zy[path_last[-1] + node_now]

# 最短路径,即概率最大的那个

sr_subpaths = pd.Series(sub_paths)

sr_subpaths = sr_subpaths.sort_values() # 升序排序

node_subpath = sr_subpaths.index[-1] # 最短路径

node_value = sr_subpaths[-1] # 最短路径对应的值

# 把 node_now 的最短路径添加到 paths 中

paths[node_subpath] = node_value

# 所有层求完后,找出最后一层中各个节点的路径最短的路径

sr_paths = pd.Series(paths)

sr_paths = sr_paths.sort_values() # 按照升序排序

return sr_paths.index[-1] # 返回最短路径(概率值最大的路径)

def text2ids(text):

"""把字片段text转为 ids."""

words = list(text)

ids = list(word2id[words])

if len(ids) >= max_len: # 长则弃掉

print u'输出片段超过%d部分无法处理' % (max_len)

return ids[:max_len]

ids.extend([0]*(max_len-len(ids))) # 短则补全

ids = np.asarray(ids).reshape([-1, max_len])

return ids

def simple_cut(text):

"""对一个片段text(标点符号把句子划分为多个片段)进行预测。"""

if text:

text_len = len(text)

X_batch = text2ids(text) # 这里每个 batch 是一个样本

fetches = [y_pred]

feed_dict = {X_inputs:X_batch, lr:1.0, batch_size:1, keep_prob:1.0}

_y_pred = sess.run(fetches, feed_dict)[0][:text_len] # padding填充的部分直接丢弃

words = []

nodes = [dict(zip(['s','b','m','e'], each[1:])) for each in _y_pred]

tags = viterbi(nodes)

for i in range(len(text)):

if tags[i] in ['s', 'b']:

words.append(text[i])

else:

words[-1] += text[i]

return words

else:

return []

def cut_word(sentence):

"""首先将一个sentence根据标点和英文符号/字符串划分成多个片段text,然后对每一个片段分词。"""

not_cuts = re.compile(u'([0-9\da-zA-Z ]+)|[。,、?!.\.\?,!]')

result = []

start = 0

for seg_sign in not_cuts.finditer(sentence):

result.extend(simple_cut(sentence[start:seg_sign.start()]))

result.append(sentence[seg_sign.start():seg_sign.end()])

start = seg_sign.end()

result.extend(simple_cut(sentence[start:]))

return result

# 例

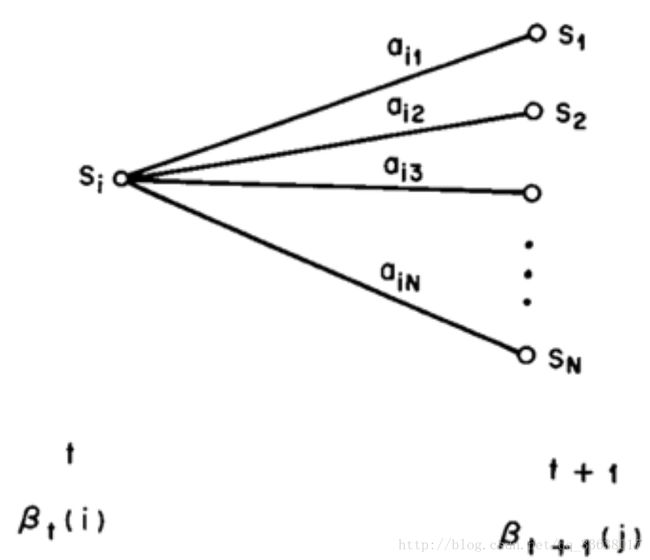

sentence = u'人们思考问题往往不是从零开始的。就好像你现在阅读这篇文章一样,你对每个词的理解都会依赖于你前面看到的一些词,而不是把你前面看的内容全部抛弃了,忘记了,再去理解这个单词。也就是说,人们的思维总是会有延续性的。'

result = cut_word(sentence)

rss = ''

for each in result:

rss = rss + each + ' / '

print rss

# 例

sentence = u'南京市长江大桥'

result = cut_word(sentence)

rss = ''

for each in result:

rss = rss + each + '/ '

print rss