Deep Learning: Understanding and Implementing Residual Convolution and Transposed (Reverse) Residual Convolution

Let's start by implementing residual convolution:

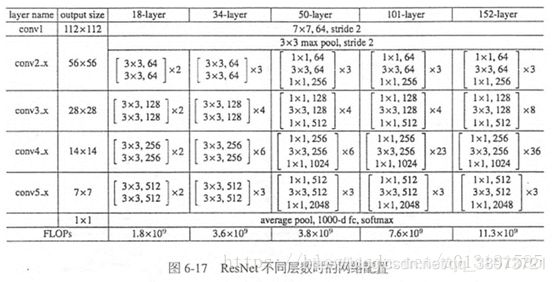

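First, the figure shows five network depths.

They differ slightly from one another: in the 18-layer and 34-layer networks, each block inside a conv group stacks only two convolutions.

In the 50-layer, 101-layer, and 152-layer networks, by contrast, each block stacks three convolutions.

So we begin by defining a SETTING dictionary that captures these differences.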

    RESNET18 = "RESNET18"
    RESNET34 = "RESNET34"
    RESNET50 = "RESNET50"
    RESNET101 = "RESNET101"
    RESNET152 = "RESNET152"

    SETTING = {
        RESNET18: {'bottlencek': False, 'repeats': [2, 2, 2, 2]},
        RESNET34: {'bottlencek': False, 'repeats': [3, 4, 6, 3]},
        RESNET50: {'bottlencek': True, 'repeats': [3, 4, 6, 3]},
        RESNET101: {'bottlencek': True, 'repeats': [3, 4, 23, 3]},
        RESNET152: {'bottlencek': True, 'repeats': [3, 8, 36, 3]}
    }

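Here, `bottlencek` (spelled this way in the code; it stands for "bottleneck") flags whether the blocks are of the bottleneck type. A bottleneck block contains three convolutions, while a non-bottleneck block contains two.

`repeats` gives the number of times the block is repeated in each convolution group.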
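As a quick sanity check on these settings, the sketch below recomputes the nominal depth of each network from `repeats`. It assumes the usual counting convention (each convolution inside a block counts as one layer, plus the first convolution and the final fully connected layer). The helper `count_layers` is a made-up name for this illustration and is not part of the code in this post.

```python
RESNET18 = "RESNET18"
RESNET34 = "RESNET34"
RESNET50 = "RESNET50"
RESNET101 = "RESNET101"
RESNET152 = "RESNET152"

SETTING = {
    RESNET18: {'bottlencek': False, 'repeats': [2, 2, 2, 2]},
    RESNET34: {'bottlencek': False, 'repeats': [3, 4, 6, 3]},
    RESNET50: {'bottlencek': True, 'repeats': [3, 4, 6, 3]},
    RESNET101: {'bottlencek': True, 'repeats': [3, 4, 23, 3]},
    RESNET152: {'bottlencek': True, 'repeats': [3, 8, 36, 3]},
}


def count_layers(name):
    """Nominal depth: convolutions inside the blocks + first conv + final dense layer."""
    setting = SETTING[name]
    convs_per_block = 3 if setting['bottlencek'] else 2
    return convs_per_block * sum(setting['repeats']) + 2


for name in SETTING:
    print(name, count_layers(name))
```

Under that convention, every entry reproduces the number in its own name, which is a cheap way to catch a typo in `repeats`.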

Define a ResNet class and read the SETTING values in its constructor:

    class ResNet:
        def __init__(self, name):
            self.bottlencek = SETTING[name]['bottlencek']
            self.repeats = SETTING[name]['repeats']

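Next we define the main function that carries out the residual convolution. To make it convenient to invoke, we implement it as the `__call__` method.

A brief introduction to `__call__`:

Normally, we call a method of a class like this:

    class A:
        def a(self):
            pass

    a = A()
    a.a()

Here the method has to be called in the form `instance.method()`.

When the class defines `__call__`, the call looks like this instead:

    class B:
        def __call__(self):
            pass

    b = B()
    b()

To run the code inside `__call__`, we simply call the instance itself as if it were a function.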
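To make the difference concrete, here is a small self-contained sketch. The class `Scale` is a hypothetical example chosen only to show the mechanics; it has nothing to do with the ResNet code.

```python
class Scale:
    """Multiplies its input by a fixed factor."""

    def __init__(self, factor):
        self.factor = factor

    def __call__(self, x):
        # Runs whenever the instance is called like a function.
        return x * self.factor


double = Scale(2)
result = double(21)  # equivalent to double.__call__(21)
print(result)
```

The same pattern lets a configured network object be applied to a tensor with `net(image, ...)` rather than through a named method.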

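Here we use residual convolution to process images, and the images we feed in have heights and widths that are multiples of 32.

Why multiples of 32? Here is my own view:

- The residual model applies 5 groups of convolution, and each group halves the image size, for a total reduction factor of $2^5 = 32$.

- Neuroscientists have reportedly found that the human brain also processes (analyzes) only about five levels of convolution.

For these reasons, we need images whose size is a multiple of 32.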
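The halving argument can be checked with a few lines of arithmetic. The sketch below assumes five stride-2 stages with 'same' padding, where the output length is the input length divided by the stride and rounded up. The helper `halved_sizes` is a hypothetical name used only here.

```python
import math


def halved_sizes(size, steps=5, stride=2):
    """Spatial size after each of `steps` stride-2 stages with 'same' padding."""
    sizes = []
    for _ in range(steps):
        size = math.ceil(size / stride)  # 'same' padding: out = ceil(in / stride)
        sizes.append(size)
    return sizes


print(halved_sizes(224))  # a multiple of 32
print(halved_sizes(100))  # not a multiple of 32
```

For 224 the final size is exactly 224 // 32 = 7. For 100 the sizes are rounded up along the way and end at 4 rather than 100 // 32 = 3, so code that derives window sizes from `height // 32` would no longer match the feature map.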

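So the first thing `__call__` has to do is verify that the incoming image has dimensions that are multiples of 32.

We define a `_check` function for this:

    def _check(image):
        shape = image.shape
        # Verify the rank first so that the indexing below is safe.
        assert len(shape) == 4  # [batch, height, width, channels]
        # `.value` is the TF 1.x Dimension API.
        height = shape[1].value
        width = shape[2].value
        assert height % 32 == 0
        assert width % 32 == 0
        return height, width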
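The same validation can be tried without TensorFlow. The sketch below is a framework-free stand-in that takes a plain shape tuple instead of a tensor; `check_shape` and its error messages are assumptions made for illustration, not the post's `_check`.

```python
def check_shape(shape):
    """Return (height, width) of an NHWC shape after validating it."""
    assert len(shape) == 4, "expected [batch, height, width, channels]"
    _, height, width, _ = shape
    assert height is not None and width is not None, "height and width must be known"
    assert height % 32 == 0 and width % 32 == 0, "height and width must be multiples of 32"
    return height, width


print(check_shape((None, 224, 224, 3)))
```

A shape such as `(None, 100, 224, 3)` fails the last assertion, because 100 is not a multiple of 32.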

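With `_check` defined, the next step is to implement the convolution and pooling operations. Residual convolution involves several kinds of convolution, applied many times.

So inside `__call__` we open a variable namespace with `tf.variable_scope()`, which sets the scope the variables live in.

This is what gives us the behavior we want: all images share the same filter variables.

The namespace also needs a name, and each group gets a different one.

That leads to the following definition:

    # _name_id is a module-level counter, e.g. `_name_id = 0`
    if name is None:
        global _name_id
        name = '%d' % _name_id
        _name_id += 1

We then pass the resulting name to the scope to meet the requirement above:

    with tf.variable_scope(name):
        ...  # the layers are built inside this scope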
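The auto-naming idea can also be written without a `global` statement. The sketch below is one possible variant, not the approach used in this post: it keeps the counter in an `itertools.count` object. The names `_name_counter` and `default_scope_name` are hypothetical.

```python
import itertools

_name_counter = itertools.count()  # hypothetical stand-in for the global _name_id


def default_scope_name(name=None, prefix='resnet'):
    """Return `name` if given, otherwise a fresh '<prefix>_<n>' name."""
    if name is None:
        name = '%s_%d' % (prefix, next(_name_counter))
    return name


first = default_scope_name()
second = default_scope_name()
custom = default_scope_name('encoder')
print(first, second, custom)
```

Each call without an explicit name yields a new scope name, while an explicit name is passed through unchanged and does not advance the counter.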

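With the preparation done, we can now implement each step of the residual convolution.

Take ResNet50 as an example (figure link).

From the excerpt of the figure, we can see that every convolution is followed by one BN operation and one activation.

BN is an interesting topic in its own right. I will not go into detail here and plan to cover it in a later post (a hand-written implementation of BN).

For now, it is enough to think of BN as a batch normalization step that standardizes the activations over a batch.

Following these three steps (convolution, BN, activation), we define `_my_conv`:

    def _my_conv(image, filters, kernel_size, strides, padding, name, training, active=True):
        with tf.variable_scope(name):
            image = tf.layers.conv2d(image, filters, kernel_size, strides, padding, name="conv")
            # Normalize per channel (the last axis of the NHWC tensor).
            image = tf.layers.batch_normalization(image, axis=-1, epsilon=1e-6, training=training, name='bn')
            if active:
                image = tf.nn.relu(image)
        return image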
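To give a feel for the BN and activation steps, here is a deliberately simplified sketch in plain Python. It standardizes a batch of scalars for a single channel and then applies ReLU. It leaves out the learned scale and offset and the moving averages that `tf.layers.batch_normalization` maintains, so treat it as an illustration of the idea rather than of the library's behavior. The function names are made up for this example.

```python
import math


def batch_norm(values, epsilon=1e-6):
    """Standardize a batch of scalars: (x - mean) / sqrt(var + epsilon)."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + epsilon) for v in values]


def relu(values):
    """Replace negative entries with zero."""
    return [max(0.0, v) for v in values]


batch = [1.0, 2.0, 3.0, 4.0]
normalized = batch_norm(batch)
activated = relu(normalized)
print(normalized)
print(activated)
```

After normalization the values have a mean of approximately zero and a variance of approximately one, and ReLU then zeroes the entries that fell below the batch mean.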

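Back to the main topic: we return to `__call__`, now that `_check` and `_my_conv` are defined.

At this point we can implement most of the residual convolution pipeline (a partial definition of `__call__`):

    def __call__(self, image, logits: int, training, name=None):
        height, width = _check(image)
        if name is None:
            global _name_id
            name = '%d' % _name_id
            _name_id += 1
        with tf.variable_scope(name):
            # First convolution: 64 filters, stride 2; the kernel is (7, 7) for a 224x224 input.
            image = _my_conv(image, 64, (height//32, width//32), 2, 'same', name='conv', training=training)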

Comparing with the first figure in this post, `__call__` now covers the first convolution stage of the table.

Next comes the max pooling operation. Note that the stride here is 2.

    image = tf.layers.max_pooling2d(image, 2, 2, 'same')

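Right after that, we implement the convolution groups that follow.

The approach is straightforward to implement.

I won't go into much detail here; for more, see my previous post: https://blog.csdn.net/qq_38973721/article/details/107250736

Code: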

    def _repeat(self, x, training):
        filters = 64
        for num_i, num in enumerate(self.repeats):
            for i in range(num):
                x = self._residual(x, num_i, i, filters, training)
            filters *= 2  # each group doubles the filter count
        return x

    def _residual(self, x, num_i, i, filters, training):
        # Downsample in the first block of every group except the first.
        strides = 2 if num_i > 0 and i == 0 else 1
        if self.bottlencek:  # attribute name as set in __init__
            left = _my_conv(x, filters, 1, strides, 'same', name='res_%d_%d_left_myconv1' % (num_i, i), training=training)
            left = _my_conv(left, filters, 3, 1, 'same', name='res_%d_%d_left_myconv2' % (num_i, i), training=training)
            left = _my_conv(left, 4*filters, 1, 1, 'same', name='res_%d_%d_left_myconv3' % (num_i, i), training=training, active=False)
        else:
            left = _my_conv(x, filters, 3, strides, 'same', name='res_%d_%d_left_myconv1' % (num_i, i), training=training)
            left = _my_conv(left, filters, 3, 1, 'same', name='res_%d_%d_left_myconv2' % (num_i, i), training=training)
        if i == 0:
            # Projection shortcut: a 1x1 convolution matches the shape of the left branch.
            if self.bottlencek:
                filters *= 4
            right = _my_conv(x, filters, 1, strides, 'same', name='res_%d_%d_right_myconv' % (num_i, i), training=training, active=False)
        else:
            right = x  # identity shortcut
        return tf.nn.relu(left + right)
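To see what `_repeat` and `_residual` build without running TensorFlow, the following sketch replays the same loop and records the stride and channel counts of every block. It mirrors the logic above under the assumption that the input to the first group has 64 channels. `block_plan` is a hypothetical helper written for this illustration.

```python
def block_plan(repeats, bottleneck):
    """List (group, block, filters, stride, out_channels) in the order the blocks are built."""
    plan = []
    filters = 64
    for group, num in enumerate(repeats):
        for block in range(num):
            # Same rule as in _residual: downsample at the start of groups 1..3.
            stride = 2 if group > 0 and block == 0 else 1
            out_channels = 4 * filters if bottleneck else filters
            plan.append((group, block, filters, stride, out_channels))
        filters *= 2
    return plan


plan = block_plan([3, 4, 6, 3], bottleneck=True)  # the RESNET50 setting
for row in plan:
    print(row)
```

For the RESNET50 setting this lists 16 blocks, three of which use stride 2, and the last block outputs 2048 channels.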

With that, the residual convolution is essentially complete. Below is the full code of the `__call__` function:

    def __call__(self, x, logits: int, training, name=None):
        height, width = _check(x)
        if name is None:
            global _name_id  # module-level counter, e.g. `_name_id = 0`
            name = 'resnet_%d' % _name_id
            _name_id += 1
        with tf.variable_scope(name):
            x = _my_conv(x, 64, (height // 32, width // 32), 2, 'same', name='conv1', training=training)  # [-1, h/2, w/2, 64]
            x = tf.layers.max_pooling2d(x, 2, 2, 'same')  # [-1, h/4, w/4, 64]
            x = self._repeat(x, training)  # [-1, h/32, w/32, 2048] with bottleneck blocks
            x = tf.layers.average_pooling2d(x, (height//32, width//32), 1)  # [-1, 1, 1, 2048]
            x = tf.layers.flatten(x)
            x = tf.layers.dense(x, logits, name='fc')
        return x

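Having walked through the residual convolution, the transposed (reverse) residual convolution is not hard to implement, so here is the code directly: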

    def __call__(self, image, size: int, training, name=None):
        # image: [-1, -1], a batch of feature vectors
        # Assumption: `size` is the side length of the square output image.
        assert size % 32 == 0
        height = width = size
        if name is None:
            global _name_id  # module-level counter, e.g. `_name_id = 0`
            name = 'transpose_resnet_%d' % _name_id
            _name_id += 1
        with tf.variable_scope(name):
            image = tf.layers.dense(image, 2048, name='fc', activation=tf.nn.relu)
            image = tf.reshape(image, [-1, 1, 1, 2048])
            image = tf.layers.conv2d_transpose(image, 2048, (height//32, width//32), 1, name='deconv1', activation=tf.nn.relu)
            image = self._repeats(image, training)
            # image: [-1, 56, 56, 64] for a 224x224 output with bottleneck blocks
            image = tf.layers.conv2d_transpose(image, 64, 3, 2, 'same', name='deconv2', activation=tf.nn.relu)  # [-1, 112, 112, 64]
            image = tf.layers.conv2d_transpose(image, 3, (height//32, width//32), 2, 'same', name='deconv3')  # [-1, 224, 224, 3]
        return image
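The shape comments in this function can be reproduced with simple arithmetic. The sketch below assumes that `size` is the side length of a square output image, and that a transposed convolution produces `in * stride` outputs with 'same' padding and `in * stride + max(kernel - stride, 0)` with 'valid' padding, which to my knowledge is the rule TensorFlow's layers follow. `deconv_length` and `decoder_sizes` are hypothetical helper names.

```python
def deconv_length(length, kernel, stride, padding):
    """Output length of a transposed convolution along one axis."""
    if padding == 'same':
        return length * stride
    # 'valid' padding
    return length * stride + max(kernel - stride, 0)


def decoder_sizes(size, num_groups=4):
    """Spatial size after each upsampling step for a square output of side `size`."""
    kernel = size // 32
    sizes = [deconv_length(1, kernel, 1, 'valid')]  # deconv1: 1x1 -> kernel x kernel
    for _ in range(num_groups - 1):
        # One stride-2 block in every group except group 0 (kernel is irrelevant for 'same').
        sizes.append(deconv_length(sizes[-1], 1, 2, 'same'))
    sizes.append(deconv_length(sizes[-1], 3, 2, 'same'))       # deconv2
    sizes.append(deconv_length(sizes[-1], kernel, 2, 'same'))  # deconv3
    return sizes


print(decoder_sizes(224))
```

For `size = 224` the trace passes through 7, 14, 28, 56, 112, and 224, which matches the comments next to `deconv2` and `deconv3`.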

#----------------------------------------------------------------------

    def _repeats(self, image, training):
        filters = image.shape[-1].value  # 2048
        for num_i, num in zip(range(len(self.repeats)-1, -1, -1), reversed(self.repeats)):
            for i in range(num-1, -1, -1):
                image = self._transpose_residual(image, num_i, i, filters, training)
            filters //= 2
        return image

#----------------------------------------------------------------------

    def _transpose_residual(self, image, num_i, i, filters, training):
        # i counts down, so i == 0 is the last block built in a group; upsample there except in group 0.
        strides = 2 if num_i > 0 and i == 0 else 1
        if self.bottlencek:
            left = _my_deconv(image, filters, 1, 1, 'same', name='res_%d_%d_deconv1'%(num_i, i), training=training)
            filters //= 4
            left = _my_deconv(left, filters, 3, 1, 'same', name='res_%d_%d_deconv2'%(num_i, i), training=training)
            left = _my_deconv(left, filters, 1, strides, 'same', name='res_%d_%d_deconv3'%(num_i, i), training=training, active=False)
        else:
            left = _my_deconv(image, filters, 3, 1, 'same', name='res_%d_%d_left_mydeconv1'%(num_i, i), training=training)
            left = _my_deconv(left, filters, 3, strides, 'same', name='res_%d_%d_left_mydeconv2'%(num_i, i), training=training)
        # Use a projection shortcut whenever the channel count or the spatial size changes.
        if filters != image.shape[-1].value or strides > 1:
            right = _my_deconv(image, filters, 1, strides, 'same', name='res_%d_%d_right_deconv'%(num_i, i), training=training)
        else:
            right = image
        return tf.nn.relu(left+right)
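The channel bookkeeping in `_repeats` and `_transpose_residual` is easy to lose track of, so here is a small sketch that replays it without TensorFlow. It assumes the input to `_repeats` has 2048 channels, as produced by the `fc` and `deconv1` layers, and reports the number of channels each group outputs. `decoder_channels` is a hypothetical helper name.

```python
def decoder_channels(repeats, bottleneck, start=2048):
    """Output channels of each group, listed in the order the groups are built."""
    channels = []
    filters = start
    for _ in reversed(repeats):
        # A bottleneck block ends with filters // 4 channels; a plain block keeps `filters`.
        channels.append(filters // 4 if bottleneck else filters)
        filters //= 2  # same halving as in _repeats
    return channels


print(decoder_channels([3, 4, 6, 3], bottleneck=True))
print(decoder_channels([2, 2, 2, 2], bottleneck=False))
```

With bottleneck blocks the last group ends at 64 channels, matching the `[-1, 56, 56, 64]` comment in `__call__`. Without bottleneck blocks the same 2048-channel start leaves 256 channels after the last group.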

#----------------------------------------------------------------------

    def _my_deconv(image, filters, kernel_size, strides, padding, name, training, active=True):
        with tf.variable_scope(name):
            image = tf.layers.conv2d_transpose(image, filters, kernel_size, strides, padding, name="deconv")
            # Normalize per channel (the last axis of the NHWC tensor).
            image = tf.layers.batch_normalization(image, axis=-1, epsilon=1e-6, training=training, name='bn')
            if active:
                image = tf.nn.relu(image)
        return image