Overview of the YouTube-8M Video Dataset

Reference links:

Competition page (Kaggle): https://www.kaggle.com/c/youtube8m-2018/overview

Official dataset site: https://research.google.com/youtube8m/index.html

Officially released video feature extraction code: https://github.com/google/youtube-8m/tree/master/feature_extractor

Winning team's code: https://github.com/antoine77340/Youtube-8M-WILLOW

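Contents

1. Overview

1.1 Dataset overview

1.2 Requirements

1.3 Large data volume

1.4 Feature storage

2. Label distribution

3. How to download

3.1 Download location

3.2 Related code

3.3 Frame-level features dataset

3.4 Video-level features dataset

4. Overview of existing methods

4.1 Label correlation

4.2 Multi-scale temporal information

4.3 Attention model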

1. Overview

1.1 Dataset overview

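More than 1 billion (10^9) hours of video are watched on YouTube every day.

Google AI built the YouTube-8M dataset, a collection of millions of YouTube videos labeled with a vocabulary of 3,800+ classes.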

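To keep the dataset quality high, several constraints were applied when selecting videos:

- Every video is public and has at least 1000 views

- Every video is between 120 s and 500 s long

- Every video is associated with at least one Knowledge Graph entity

- Adult videos are removed by an automated classifier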

In total there are 3,862 classes and 6.1 million videos, with an average of 3 labels per video.

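1.2 Requirements

The goal of the challenge is learning video representations under budget constraints.

Even with cloud computing available, the model should not be too large, and it should keep time and space complexity low.

The model must not exceed 1 GB.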
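To get a feel for what the 1 GB limit means in terms of parameter count, here is a minimal sketch. It assumes that the model is stored uncompressed, that each parameter takes 4 bytes (float32), and that "1 GB" means 10**9 bytes; the challenge rules may define these differently, and the function names are made up for illustration.

```python
# Assumption: "1 GB" is read as 10**9 bytes; the official rules may differ.
SIZE_LIMIT_BYTES = 10**9


def model_size_bytes(num_params: int, bytes_per_param: int = 4) -> int:
    """Approximate size of a model that stores every parameter uncompressed."""
    return num_params * bytes_per_param


def fits_budget(num_params: int, bytes_per_param: int = 4) -> bool:
    """True if the approximate model size is below the size limit."""
    return model_size_bytes(num_params, bytes_per_param) < SIZE_LIMIT_BYTES


if __name__ == "__main__":
    print(fits_budget(200_000_000))  # 200M float32 parameters, about 0.8 GB
    print(fits_budget(300_000_000))  # 300M float32 parameters, about 1.2 GB
```

Under these assumptions the budget allows roughly 250 million float32 parameters, which is why large ensembles are hard to fit.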

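1.3 Large data volume

The videos in the dataset add up to hundreds of thousands of hours (the original YouTube-8M paper reports about 500,000 hours). Storing the raw video would typically take about 1 PB (1 PB = 1024 TB) of disk space, and processing it would take about 50 CPU-years.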
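As a rough sanity check on the scale, the figures from Section 1.1 (6.1 million videos, each between 120 s and 500 s long) bound the total duration. The sketch below only does that arithmetic; it does not use any real per-video metadata.

```python
NUM_VIDEOS = 6_100_000   # total number of videos quoted in Section 1.1
MIN_SECONDS = 120        # shortest allowed video
MAX_SECONDS = 500        # longest allowed video


def total_hours(num_videos: int, seconds_per_video: float) -> float:
    """Total duration in hours if every video had the given length."""
    return num_videos * seconds_per_video / 3600


lower = total_hours(NUM_VIDEOS, MIN_SECONDS)
upper = total_hours(NUM_VIDEOS, MAX_SECONDS)
print(f"between {lower:,.0f} and {upper:,.0f} hours")
```

So the raw video must total somewhere between roughly 200,000 and 850,000 hours, which makes working from precomputed features the practical option.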

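1.4 Feature storage

In addition, frame-level and video-level features have already been extracted from these videos with an Inception-V3 image annotation model trained on ImageNet.

The features were extracted from 1.9 billion video frames at a temporal resolution of 1 frame per second. PCA dimensionality reduction was then applied so that the final features fit on a single hard disk (less than 1.5 TB).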

2. Label distribution

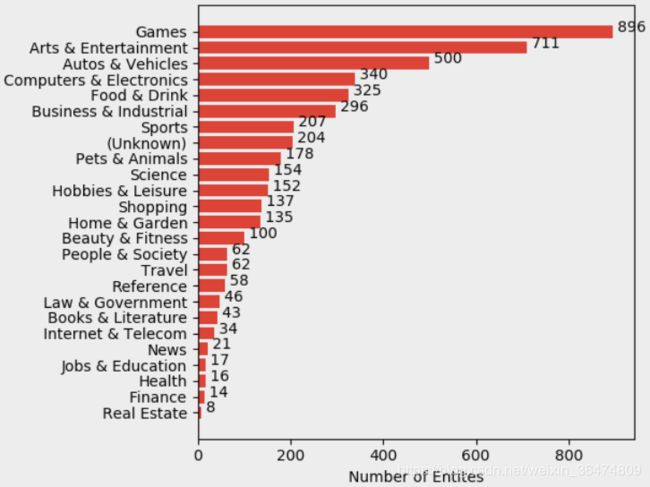

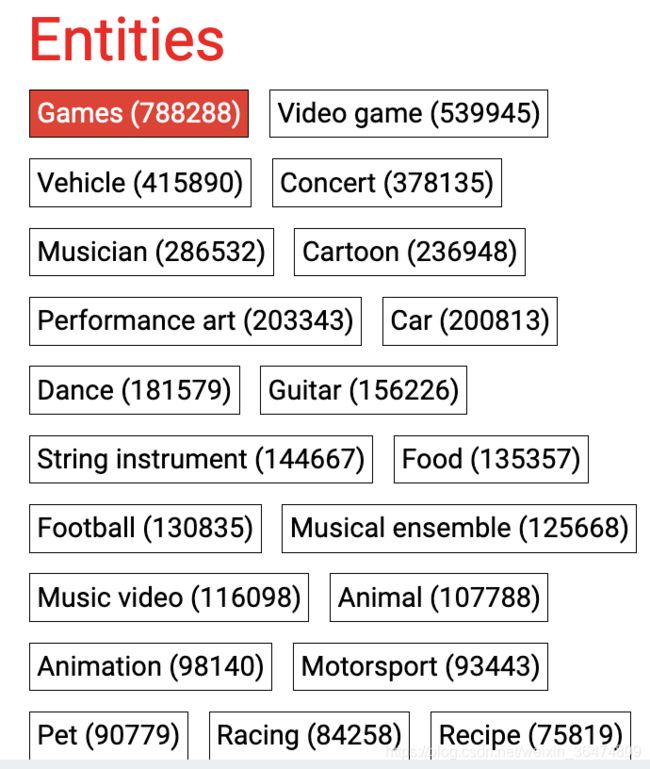

The (multiple) labels per video are Knowledge Graph entities, organized into 24 top-level verticals. Each entity represents a semantic topic that is visually recognizable in video, and the video labels reflect the main topics of each video.

You can download a CSV file (2017 version CSV, deprecated) of our vocabulary. The first field in the file corresponds to each label's index in the dataset files, with the first label corresponding to index 0. The CSV file contains the following columns:

Index,TrainVideoCount,KnowledgeGraphId,Name,WikiUrl,Vertical1,Vertical2,Vertical3,WikiDescription
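A minimal sketch of reading this vocabulary file with Python's standard csv module. Only the header line comes from the description above; the two data rows, their IDs, and the URLs are invented for illustration, and `load_vocabulary` is a hypothetical helper name.

```python
import csv
import io

# Hypothetical sample rows; only the header matches the real file layout.
SAMPLE_CSV = """Index,TrainVideoCount,KnowledgeGraphId,Name,WikiUrl,Vertical1,Vertical2,Vertical3,WikiDescription
0,1000,/m/aaa,Label A,https://example.org/a,Games,,,First made-up label
1,500,/m/bbb,Label B,https://example.org/b,Arts & Entertainment,,,Second made-up label
"""


def load_vocabulary(csv_file):
    """Map each label index to (name, number of training videos)."""
    vocab = {}
    for row in csv.DictReader(csv_file):
        vocab[int(row["Index"])] = (row["Name"], int(row["TrainVideoCount"]))
    return vocab


vocab = load_vocabulary(io.StringIO(SAMPLE_CSV))
print(vocab[0])
```

With the real file, the same function could be called on `open(path, newline="", encoding="utf-8")` instead of the in-memory string.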

The entity frequencies are plotted below in log-log scale, which shows a Zipf-like distribution:

Label distribution
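One way to check whether label counts are "Zipf-like" is to fit a straight line to log(count) against log(rank): a Zipf distribution gives a slope close to -1. The sketch below does this with ordinary least squares on synthetic counts; it is not computed from the real YouTube-8M vocabulary, and `loglog_slope` is a name chosen here for illustration.

```python
import math


def loglog_slope(counts):
    """Least-squares slope of log(count) against log(rank), ranks starting at 1.

    All counts must be positive.
    """
    ranked = sorted(counts, reverse=True)
    xs = [math.log(rank) for rank in range(1, len(ranked) + 1)]
    ys = [math.log(count) for count in ranked]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


# Synthetic counts following count = 1e6 / rank exactly (not real data).
synthetic = [1_000_000 / rank for rank in range(1, 1001)]
slope = loglog_slope(synthetic)
print(round(slope, 6))
```

Applied to the TrainVideoCount column of the vocabulary CSV, a slope in the neighborhood of -1 would be consistent with the straight line seen in the log-log plot.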

3. How to download

3.1 Download location

We offer the YouTube8M dataset for download as TensorFlow Record files. We provide a downloader script that fetches the dataset in shards and stores them in the current directory (output of pwd). It can be restarted if the connection drops, in which case it only downloads shards that haven't been downloaded yet. We also provide html index pages listing all shards, if you'd like to manually download them. There are two versions of the features: frame-level and video-level features. The dataset is made available by Google Inc. under a Creative Commons Attribution 4.0 International (CC BY 4.0) license.
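The restart behavior described above can be sketched as "skip every shard that is already on disk". This is only an illustration of the idea, not the official script's logic: the shard file names are hypothetical, and a real downloader would also need to detect partially downloaded files, which this sketch does not.

```python
import os
import tempfile


def shards_still_needed(shard_names, target_dir):
    """Return the shards that do not yet exist in target_dir."""
    return [name for name in shard_names
            if not os.path.exists(os.path.join(target_dir, name))]


# Hypothetical shard names, used only to exercise the function.
with tempfile.TemporaryDirectory() as tmp:
    shards = ["train0000.tfrecord", "train0001.tfrecord", "train0002.tfrecord"]
    # Pretend the first shard was fetched before the connection dropped.
    open(os.path.join(tmp, shards[0]), "wb").close()
    remaining = shards_still_needed(shards, tmp)

print(remaining)
```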

3.2 Related code

Starter code for the dataset can be found on our GitHub page. In addition to training code, you will also find python scripts for evaluating standard metrics for comparisons between models.

Note that this starter code is tested only against the latest version and minor changes may be required to use on older versions due to data format changes (e.g., video_id vs. id field, feature names and the number of classes for each of the versions, etc.).
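When the same code has to read more than one dataset version, one defensive option for the `video_id` vs. `id` difference is to accept either key. The sketch below uses plain dictionaries as a stand-in for parsed records, and `get_video_id` is a hypothetical helper, not part of the starter code.

```python
def get_video_id(features: dict) -> str:
    """Return the video identifier whether the record uses 'id' or 'video_id'."""
    for key in ("id", "video_id"):
        if key in features:
            return features[key]
    raise KeyError("record has neither 'id' nor 'video_id'")


# Plain dicts standing in for parsed records from two dataset versions.
print(get_video_id({"id": "abcd", "labels": [1, 5]}))
print(get_video_id({"video_id": "wxyz", "labels": [2]}))
```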

GitHub repository: https://github.com/google/youtube-8m

3.3 Frame-level features dataset

To download the frame-level features, you have the following options:

- Manually download all 3844 shards from the frame-level training, frame-level validation, and the frame-level test partitions. You may also find it useful to download a handful of shards (see details below), start developing your code against those shards, and in conjunction kick-off the larger download.

- Use our python download script. This assumes that you have python and curl installed.

To download the frame-level dataset using the download script, navigate your terminal to a directory where you would like to download the data. For example:

mkdir -p ~/data/yt8m/frame; cd ~/data/yt8m/frame

Then download the training and validation data. Note: Make sure you have 1.53TB of free disk space to store the frame-level feature files. Download the entire dataset as follows:

curl data.yt8m.org/download.py | partition=2/frame/train mirror=us python

The above uses the us mirror. If you are located in Europe or Asia, please swap the mirror flag us with eu or asia, respectively.

curl data.yt8m.org/download.py | partition=2/frame/validate mirror=us python

curl data.yt8m.org/download.py | partition=2/frame/test mirror=us python

To download 1/100-th of the training data from the US use:

curl data.yt8m.org/download.py | shard=1,100 partition=2/frame/train mirror=us python
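The commands in this section differ only in the feature level, the split, the mirror, and the optional shard setting, so they can be generated instead of typed by hand. The helper below is a hypothetical convenience that only builds the command strings shown in this post; it does not run them and does not check that the download server is reachable.

```python
def download_command(level, split, mirror="us", shard=None):
    """Build the shell command for one partition (dataset version 2).

    level: "frame" or "video"; split: "train", "validate" or "test";
    mirror: "us", "eu" or "asia"; shard: optional (k, n) pair, e.g. (1, 100).
    """
    if level not in ("frame", "video"):
        raise ValueError(f"unknown level: {level}")
    if split not in ("train", "validate", "test"):
        raise ValueError(f"unknown split: {split}")
    if mirror not in ("us", "eu", "asia"):
        raise ValueError(f"unknown mirror: {mirror}")
    settings = []
    if shard is not None:
        k, n = shard
        settings.append(f"shard={k},{n}")
    settings.append(f"partition=2/{level}/{split}")
    settings.append(f"mirror={mirror}")
    return "curl data.yt8m.org/download.py | " + " ".join(settings) + " python"


for split in ("train", "validate", "test"):
    print(download_command("frame", split, mirror="asia"))
```

Printing the commands first and pasting them into a terminal keeps the download step itself identical to the documented one.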

3.4 Video-level features dataset

The total size of the video-level features is 31 Gigabytes. They are broken into 3844 shards which can be subsampled to reduce the dataset size. Similar to above, we offer two download options:

- Manually download all 3844 shards from the video-level training, video-level validation, and the video-level test partitions. You may also find it useful to download a handful of shards, start developing your code against those shards, and in conjunction kick-off the larger download.

If you are located in Europe or Asia, please replace us in the URL with eu or asia, respectively to speed up the transfer of the files.

- Use our python download script. For example:

mkdir -p ~/data/yt8m/video; cd ~/data/yt8m/video

If you are located in Europe or Asia, please swap the domain prefix us with eu or asia, respectively.

curl data.yt8m.org/download.py | partition=2/video/train mirror=us python

curl data.yt8m.org/download.py | partition=2/video/validate mirror=us python

curl data.yt8m.org/download.py | partition=2/video/test mirror=us python

To download 1/100-th of the training data from the US use:

curl data.yt8m.org/download.py | shard=1,100 partition=2/video/train mirror=us python

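4. Overview of existing methods

These methods are relatively old; they were proposed in 2017.

http://www.sohu.com/a/161900690_473283

A team from the Department of Electronic Engineering at Tsinghua University modeled videos from three angles: label correlation, multi-level information in the video, and a temporal attention model. Their approach finished second among more than 600 participating teams.

Paper: https://arxiv.org/abs/1706.05150

Code: https://github.com/wangheda/youtube-8m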

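4.1 Label correlation

The team proposes a chained neural network structure to model label correlation in multi-label classification. As illustrated in the paper, when the input is a video-level feature, the structure reduces the dimensionality of a single network's predictions, concatenates the reduced result with the video representation, and feeds the combined representation through another network to predict again. The prediction of the last stage is the final classification result, and the predictions of the intermediate stages also contribute to the loss function. The chain can be repeated for several stages. Experiments with video-level features and mixture-of-experts networks show that, with the number of parameters held equal, more chain stages give better classification performance.

The chain structure is not limited to video-level features. With a video representation network such as an LSTM, a CNN, or an attention network, it can also be applied to frame-level features. In experiments with this network, the team found that using unshared weights for the video representation networks at different stages gives better performance.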
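The chain described above can be sketched in a few lines of plain Python for the video-level case. This is a toy forward pass under stated assumptions, not the team's implementation: each stage is a single linear layer with a sigmoid instead of a mixture-of-experts network, the weights are random rather than trained, and `aux_weight`, the layer sizes, and all function names are hypothetical.

```python
import math
import random


def linear(x, weights, bias):
    """y = W x + b, with W stored as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def random_layer(rng, n_out, n_in):
    """Small random weights and zero bias (untrained, for illustration)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def chain_forward(video_repr, classifiers, reducers):
    """Return the label probabilities of every stage; the last entry is the final output."""
    all_preds = []
    extra = []  # reduced predictions of the previous stage (empty for stage 0)
    for i, classifier in enumerate(classifiers):
        # Concatenate the video representation with the reduced predictions.
        preds = [sigmoid(z) for z in linear(video_repr + extra, *classifier)]
        all_preds.append(preds)
        if i < len(reducers):
            # Project this stage's predictions down to a low dimension.
            extra = linear(preds, *reducers[i])
    return all_preds


def chain_loss(all_preds, labels, aux_weight=0.5):
    """Cross-entropy of the final stage plus down-weighted intermediate stages."""
    def bce(preds):
        return -sum(y * math.log(p) + (1 - y) * math.log(1 - p)
                    for p, y in zip(preds, labels)) / len(labels)
    return bce(all_preds[-1]) + aux_weight * sum(bce(p) for p in all_preds[:-1])


rng = random.Random(0)
n_stages, repr_dim, n_labels, reduced_dim = 3, 8, 5, 2
classifiers = [random_layer(rng, n_labels, repr_dim if i == 0 else repr_dim + reduced_dim)
               for i in range(n_stages)]
reducers = [random_layer(rng, reduced_dim, n_labels) for _ in range(n_stages - 1)]
video = [rng.uniform(-1, 1) for _ in range(repr_dim)]
stage_preds = chain_forward(video, classifiers, reducers)
loss = chain_loss(stage_preds, labels=[1, 0, 0, 1, 0])
```

For frame-level input, `video_repr` would instead come from a separate representation network at each stage, matching the observation that unshared weights worked better.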

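4.2 Multi-scale temporal information

Different kinds of semantic information occupy different lengths of time in a video, so modeling at a single time scale can put some classes at a disadvantage. The team therefore uses pooling over time to exploit semantic information at larger time scales. A 1D-CNN extracts features from the frame sequence, temporal pooling shortens the feature sequence, and another 1D-CNN extracts features again. Repeating this yields several feature sequences of different lengths. Each feature sequence is modeled with its own LSTM, and the resulting predictions are merged. In this way the model uses information from several time scales, and it was also the team's best-performing single model.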
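The alternation of convolution and pooling can be sketched as follows. To stay short, this toy version treats each frame as a single number instead of a feature vector, uses a fixed smoothing kernel instead of learned 1D-CNN filters, and stops before the per-scale LSTMs; the kernel, the pooling size, and the function names are all assumptions made for illustration.

```python
def conv1d_same(seq, kernel):
    """1D convolution over time with zero padding; output has the input's length.

    Assumes an odd-length kernel.
    """
    half = len(kernel) // 2
    padded = [0.0] * half + list(seq) + [0.0] * half
    return [sum(k * padded[t + i] for i, k in enumerate(kernel))
            for t in range(len(seq))]


def max_pool(seq, size=2):
    """Non-overlapping max pooling over time; a trailing partial window is dropped."""
    return [max(seq[t:t + size]) for t in range(0, len(seq) - size + 1, size)]


def multiscale_sequences(frames, kernel, n_scales=3):
    """Alternate convolution and pooling, keeping the feature sequence at every scale."""
    scales = []
    current = list(frames)
    for _ in range(n_scales):
        current = conv1d_same(current, kernel)
        scales.append(current)       # this sequence would feed its own LSTM
        current = max_pool(current)  # halve the temporal resolution
    return scales


frames = [float(t) for t in range(16)]  # 16 frames at 1 frame per second
scales = multiscale_sequences(frames, kernel=[0.25, 0.5, 0.25])
print([len(s) for s in scales])
```

Each sequence in `scales` covers the same video at a coarser time resolution, which is what lets a later sequence model see longer-range structure with fewer steps.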

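4.3 Attention model

Another model aggregates the frame-sequence representation with attention pooling. The raw sequence only reflects local information for each frame, whereas the goal is to aggregate information that carries some sequence-level semantics, so attention pooling is applied to the output sequence of an LSTM. Experiments show that this attention pooling improves prediction quality. Using a positional embedding in the attention network improves performance further.

The team also visualized the weights produced by the attention network. The network tends to give higher weights to frames that show complete, clearly visible objects, and lower weights to frames that lack an obvious foreground, are dark, or show captions.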
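Attention pooling itself is a softmax-weighted average over time. The sketch below scores each step by a dot product with a single query vector, which is an assumption made here for brevity; the paper's scoring network may differ, and the LSTM and the positional embedding are left out. In the real model the input sequence would be the LSTM outputs and the query would be learned.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(sequence, query):
    """Weighted average of per-step vectors, with weights from dot-product scores."""
    scores = [sum(q * v for q, v in zip(query, step)) for step in sequence]
    weights = softmax(scores)
    dim = len(sequence[0])
    pooled = [sum(w * step[d] for w, step in zip(weights, sequence))
              for d in range(dim)]
    return pooled, weights


# Three time steps with 2-dimensional vectors (made-up values).
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
pooled_uniform, weights_uniform = attention_pool(sequence, query=[0.0, 0.0])
pooled_focus, weights_focus = attention_pool(sequence, query=[10.0, 0.0])
```

With a zero query all steps score the same and the result is plain average pooling; a query that favors the first dimension shifts almost all weight onto the steps where that dimension is active.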