Configuring Keras with PyCharm on Windows

Contents

1. Download and install PyCharm

2. Install the Python environment

3. Download and configure the Keras library

4. Test the program

1. Download and install PyCharm

Download link: https://www.baidu.com/link?url=d7pDJKNJFN-8vn2JKoBIKUEJIfObFDkbLY16ONHZIPtdG1joUb0-El8nTNVlnV2o&ck=3408.4.55.400.311.169.209.336&shh=www.baidu.com&sht=02049043_62_pg&wd=&eqid=8f2494e00014f991000000035d03821c

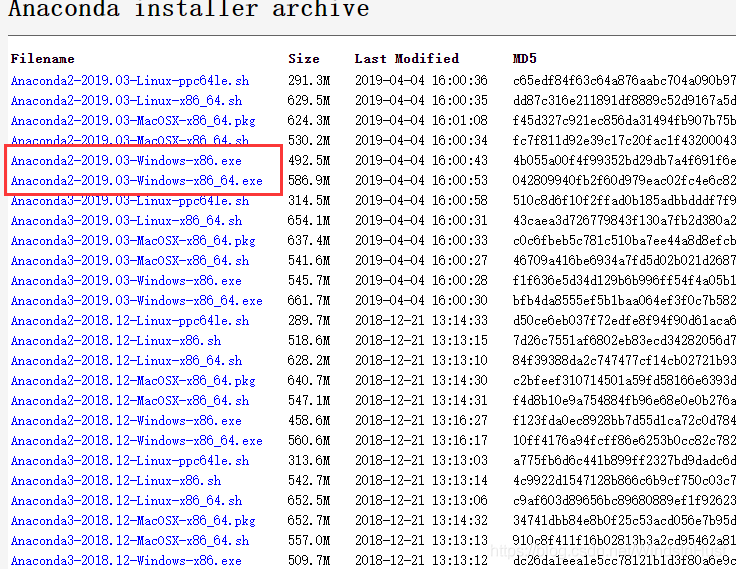

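2. Install the Python environment

Download and install Anaconda from:

https://repo.continuum.io/archive/index.html

During installation, tick the option that configures the environment variables.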
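Once Anaconda is installed, it can help to confirm which interpreter is actually running, since PyCharm may pick up a different Python than the one just installed. The following is a minimal sketch using only the standard library. The function name `describe_interpreter` is hypothetical, and the `conda-meta` folder test is only a heuristic for spotting a conda-managed environment, not an official check.

```python
import os
import platform
import sys


def describe_interpreter():
    """Collect basic facts about the Python interpreter running this script."""
    return {
        # Full path of the python executable in use
        "executable": sys.executable,
        # Version string such as "3.7.3"
        "version": platform.python_version(),
        # Heuristic (assumption): conda environments keep a "conda-meta" folder
        "looks_like_conda": os.path.isdir(os.path.join(sys.prefix, "conda-meta")),
    }


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(key, "=", value)
```

Running this file from PyCharm and from a command prompt should print the same executable path if both are using the Anaconda installation.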

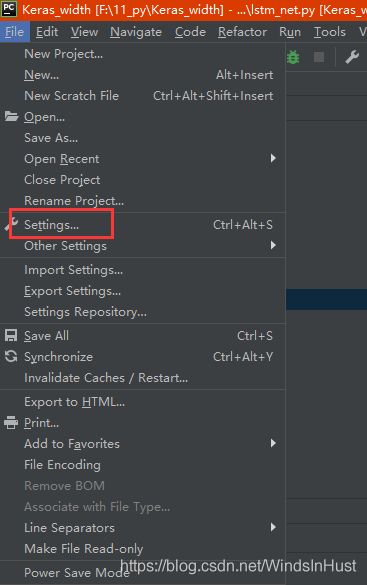

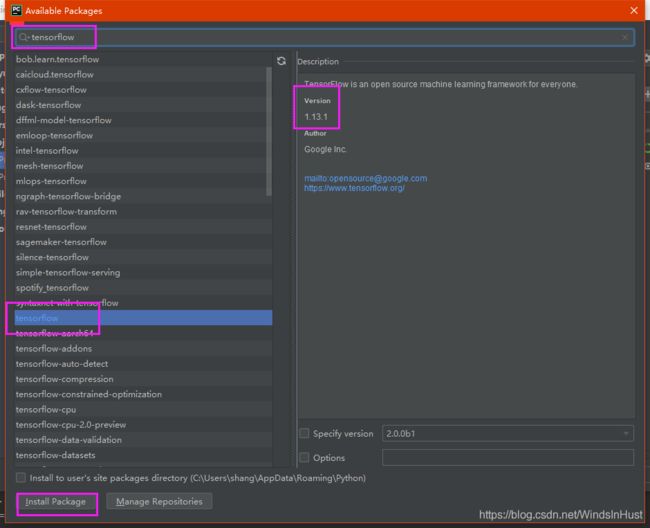

3.下载配置Keras库

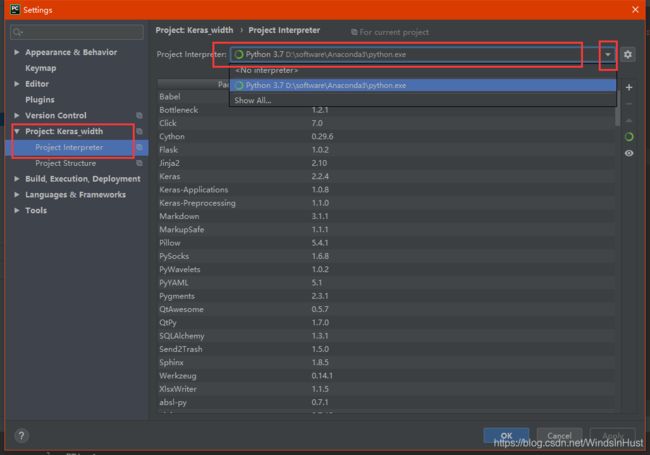

全部安装完成后,打开pycharm软件,设置

安装完成后

同理,安装keras库

配置完成。
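Before running the full test program, a quick way to confirm that the configuration worked is to ask Python whether the packages can be found by the interpreter PyCharm is using. This is a minimal sketch: the helper name `check_packages` is hypothetical, and the package list (`keras`, `tensorflow`, `numpy`) is an assumption based on the imports and the "Using TensorFlow backend" message in the test section.

```python
import importlib.util


def check_packages(names):
    """Return {package name: True/False} depending on whether it can be imported."""
    # find_spec returns None when a top-level module cannot be located
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # Assumed package list; adjust it to whatever was installed in PyCharm
    for name, found in check_packages(["numpy", "tensorflow", "keras"]).items():
        print(name, "OK" if found else "MISSING")
```

Any package reported as MISSING needs to be installed for the interpreter selected in PyCharm's settings.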

4. Test the program

# -*- coding: utf-8 -*-
import numpy as np

np.random.seed(123)  # seed NumPy's random number generator

from keras.models import Sequential
from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPooling2D
from keras.utils import to_categorical
from keras.datasets import mnist


# Load the MNIST dataset (the data is downloaded on the first run)
def load_mnist():
    (X_train, y_train), (X_test, y_test) = mnist.load_data()

    # Preprocess the input data: add a channel axis and scale pixels to [0, 1]
    X_train = X_train.reshape(X_train.shape[0], 28, 28, 1)
    X_test = X_test.reshape(X_test.shape[0], 28, 28, 1)
    X_train = X_train.astype('float32')
    X_test = X_test.astype('float32')
    X_train /= 255
    X_test /= 255

    # One-hot encode the labels (10 digit classes)
    Y_train = to_categorical(y_train, 10)
    Y_test = to_categorical(y_test, 10)

    print(X_train.shape, Y_train.shape)
    print(X_test.shape, Y_test.shape)
    return X_train, Y_train, X_test, Y_test


if __name__ == '__main__':
    X_train, Y_train, X_test, Y_test = load_mnist()

    model = Sequential()

    # Two convolutional layers
    model.add(Conv2D(32, (5, 5), activation='relu', input_shape=(28, 28, 1)))  # first convolution
    model.add(MaxPooling2D(pool_size=(2, 2)))  # first pooling
    # Only the first layer needs input_shape; later layers infer it
    model.add(Conv2D(64, (5, 5), activation='relu'))  # second convolution
    model.add(MaxPooling2D(pool_size=(2, 2)))  # second pooling
    model.add(Dropout(0.25))  # randomly drop 25% of the units during training

    # Two fully connected layers
    model.add(Flatten())  # flatten the feature maps to 1-D for the dense layers
    model.add(Dense(1024, activation='relu'))  # fully connected layer
    model.add(Dropout(0.5))
    model.add(Dense(10, activation='softmax'))

    # Compile the model
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

    # Train the model
    model.fit(X_train, Y_train, batch_size=32, epochs=10, verbose=1)

    # Evaluate the model; returns [loss, accuracy] on the test set
    score = model.evaluate(X_test, Y_test, verbose=0)
    print(score)
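To see how the image shrinks as it passes through the network above, the layer sizes can be traced by hand. The sketch below assumes Keras defaults: unpadded ("valid") convolutions with stride 1, and 2x2 pooling whose stride equals the pool size. The helper names `window_out` and `trace_sizes` are hypothetical and exist only for this illustration.

```python
def window_out(size, window, stride):
    """Output width when a window slides over `size` pixels without padding."""
    return (size - window) // stride + 1


def trace_sizes(size=28):
    """Return the spatial width after each conv/pool layer of the test network."""
    sizes = []
    size = window_out(size, 5, 1)  # Conv2D(32, (5, 5))
    sizes.append(size)
    size = window_out(size, 2, 2)  # MaxPooling2D((2, 2))
    sizes.append(size)
    size = window_out(size, 5, 1)  # Conv2D(64, (5, 5))
    sizes.append(size)
    size = window_out(size, 2, 2)  # MaxPooling2D((2, 2))
    sizes.append(size)
    return sizes


sizes = trace_sizes()
flattened = sizes[-1] * sizes[-1] * 64  # 64 feature maps after the second conv
print(sizes, flattened)
```

Under these assumptions the widths go 28 → 24 → 12 → 8 → 4, so the input to the second convolution is 12x12 with 32 channels, and `Flatten()` hands 4 * 4 * 64 = 1024 values to the first dense layer.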

Output

Using TensorFlow backend.

Downloading data from https://s3.amazonaws.com/img-datasets/mnist.npz

8192/11490434 [..............................] - ETA: 15:57

24576/11490434 [..............................] - ETA: 10:43

40960/11490434 [..............................] - ETA: 8:05

57344/11490434 [..............................] - ETA: 6:50

73728/11490434 [..............................] - ETA: 6:08

106496/11490434 [..............................] - ETA: 4:52

122880/11490434 [..............................] - ETA: 4:46

……

59616/60000 [============================>.] - ETA: 0s - loss: 0.0179 - acc: 0.9948

59680/60000 [============================>.] - ETA: 0s - loss: 0.0180 - acc: 0.9948

59744/60000 [============================>.] - ETA: 0s - loss: 0.0180 - acc: 0.9948

59808/60000 [============================>.] - ETA: 0s - loss: 0.0180 - acc: 0.9948

59872/60000 [============================>.] - ETA: 0s - loss: 0.0180 - acc: 0.9948

59936/60000 [============================>.] - ETA: 0s - loss: 0.0180 - acc: 0.9948

60000/60000 [==============================] - 74s 1ms/step - loss: 0.0181 - acc: 0.9948

[0.03161891764163324, 0.9914]

Process finished with exit code 0

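This very simple network for recognizing the handwritten-digit dataset reaches about 99% accuracy (0.9914 on the test set). Congratulations: with that, you have now learned deep learning.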
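The two numbers printed before "Process finished" are what `model.evaluate` returns when the model is compiled with `metrics=['accuracy']`: the test loss followed by the test accuracy. As a small illustration, the sketch below turns them into a count of misclassified images. The helper name `summarize` is hypothetical, and the figure of 10000 images is the size of the standard MNIST test split.

```python
# Values copied from the run shown above
score = [0.03161891764163324, 0.9914]
TEST_IMAGES = 10000  # size of the standard MNIST test split


def summarize(score, n_images):
    """Split [loss, accuracy] and estimate how many test images were wrong."""
    loss, accuracy = score
    wrong = round((1.0 - accuracy) * n_images)
    return loss, accuracy, wrong


loss, accuracy, wrong = summarize(score, TEST_IMAGES)
print("loss=%.4f accuracy=%.2f%% misclassified=%d" % (loss, accuracy * 100, wrong))
```

For this run that works out to 86 misclassified digits out of 10000.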