Scraping Bilibili Video Danmaku (Bullet Comments) and Drawing Word Clouds

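```python
# Press Shift+Enter to run this cell and make Jupyter Notebook use the full browser width
from IPython.display import display, HTML

display(HTML("<style>.container { width:100% !important; }</style>"))
```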

1. Web scraping

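① url: the link to fetch (here, the danmaku API endpoint)

② Send the request the way a browser would, and receive the response

③ Parse the page content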
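As an offline illustration of step ③, here is a minimal sketch that parses danmaku with the standard library's XML parser instead of a regular expression. It assumes the response is XML in which every comment is a `<d p="...">text</d>` element; the sample document and its `p` values are made up, and `parse_danmaku` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET


def parse_danmaku(xml_text):
    """Return the text of every <d> element in a danmaku XML document."""
    root = ET.fromstring(xml_text)
    # iter("d") walks the whole tree and yields each <d> element in document order
    return [d.text or "" for d in root.iter("d")]


# Hypothetical sample shaped like a danmaku XML response
sample = """<i>
  <d p="1.5,1,25,16777215">前方高能！</d>
  <d p="3.2,1,25,16777215">哈哈哈</d>
</i>"""

print(parse_danmaku(sample))
```

A parser keeps each comment whole, whatever characters it contains, at the cost of failing outright if the response is not well-formed XML.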

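```python
import re

import requests


def BZDM(url, headers):
    # Send the request and fetch the page
    resp = requests.get(url, headers=headers)
    resp.raise_for_status()  # stop early on HTTP errors
    # Force UTF-8 decoding so Chinese text is not garbled
    resp.encoding = "utf-8"
    res = resp.text

    # Each regular expression below was written after inspecting the page source.
    # Any of the three can be used to extract the danmaku.

    # 1) Text of each <d> element: one whole comment per match, punctuation included
    # ex = r'<d p=".*?">(.*?)</d>'

    # 2) Runs of Chinese characters plus common full-width punctuation
    ex = '[\u3002\uff1b\uff0c\uff1a\u201c\u201d\uff08\uff09\u3001\uff1f\u300a\u300b\u4e00-\u9fa5]+'

    # 3) Chinese characters only, no punctuation
    # ex = '[\u4e00-\u9fa5]+'

    # Extract every match from the response text
    ZM = re.findall(ex, res, re.S)
    return ZM
```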
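To see how the three expressions differ, the sketch below runs each of them on a small made-up response fragment. It assumes the tag-based expression is `r'<d p=".*?">(.*?)</d>'` and that comments arrive as `<d p="...">text</d>` elements; the sample comments are hypothetical.

```python
import re

# Hypothetical fragment shaped like a danmaku XML response
res = '<d p="1.5,1,25">前方高能！</d><d p="3.2,1,25">AWSL太好看了</d><d p="4.0,1,25">哈哈，笑死</d>'

patterns = {
    "tag": r'<d p=".*?">(.*?)</d>',  # whole comment text
    # Chinese characters plus the listed full-width punctuation
    "punct": '[\u3002\uff1b\uff0c\uff1a\u201c\u201d\uff08\uff09\u3001\uff1f\u300a\u300b\u4e00-\u9fa5]+',
    "han": '[\u4e00-\u9fa5]+',  # Chinese characters only
}

results = {name: re.findall(ex, res, re.S) for name, ex in patterns.items()}
for name, found in results.items():
    print(name, found)
```

Only the tag-based expression keeps each comment intact. The two character-class expressions drop Latin letters, digits and any punctuation outside their class, so a comment can be truncated or split into several pieces. For example, the full-width `！` (U+FF01) is not in the class of the second expression.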

```python
import re

import requests

# Request headers: make the request look like it comes from a logged-in browser
headers = {
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:79.0) Gecko/20100101 Firefox/79.0",
    # Paste the cookie from your own logged-in browser session here.
    # Never publish a real cookie: it contains session credentials (SESSDATA, bili_jct).
    "cookie": "<your cookie>",
}

# oid is the ID of the video's danmaku pool (its cid); date selects the day of history
url = "https://api.bilibili.com/x/v2/dm/history?type=1&oid=217870191&date=2020-08-03"

ZM = BZDM(url, headers)
```
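The `date` parameter selects a single day, so collecting several days of history means one request per date. A minimal sketch, assuming the same endpoint and query parameters as above; `history_urls` is a hypothetical helper and the date range is only an example:

```python
from datetime import date, timedelta
from urllib.parse import urlencode

BASE = "https://api.bilibili.com/x/v2/dm/history"


def history_urls(oid, start, end):
    """Build one history URL per day from start to end, inclusive."""
    urls = []
    day = start
    while day <= end:
        query = urlencode({"type": 1, "oid": oid, "date": day.isoformat()})
        urls.append(f"{BASE}?{query}")
        day += timedelta(days=1)
    return urls


for u in history_urls(217870191, date(2020, 8, 1), date(2020, 8, 3)):
    print(u)
```

Each URL can then be passed to `BZDM(url, headers)` and the results concatenated, preferably with a short pause between requests.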

④ Storing the data

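```python
# Store the data
import csv  # standard-library module for reading and writing CSV files

# Open the file once and write one danmaku per row.
# "w" overwrites the file, so re-running the cell does not duplicate rows.
with open("B站弹幕.csv", "w", newline="", encoding="utf-8-sig") as f:
    writer = csv.writer(f)
    for i in ZM:
        writer.writerow([i])
```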
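The `utf-8-sig` encoding writes a byte-order mark (BOM) at the start of the file, which helps Excel recognise it as UTF-8. The sketch below writes made-up comments to a temporary file and reads them back. It shows why the file should be read with the same encoding: read as plain `utf-8`, the BOM survives as a stray `'\ufeff'` at the start of the text.

```python
import csv
import os
import tempfile

comments = ["前方高能", "哈哈，笑死", "太好看了"]  # made-up sample data

path = os.path.join(tempfile.mkdtemp(), "danmaku.csv")
with open(path, "w", newline="", encoding="utf-8-sig") as f:
    csv.writer(f).writerows([c] for c in comments)  # one comment per row

with open(path, encoding="utf-8") as f:
    raw = f.read()  # the BOM is kept as '\ufeff'

with open(path, newline="", encoding="utf-8-sig") as f:
    rows = [row[0] for row in csv.reader(f)]  # the BOM is stripped

print(repr(raw[0]), rows)
```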

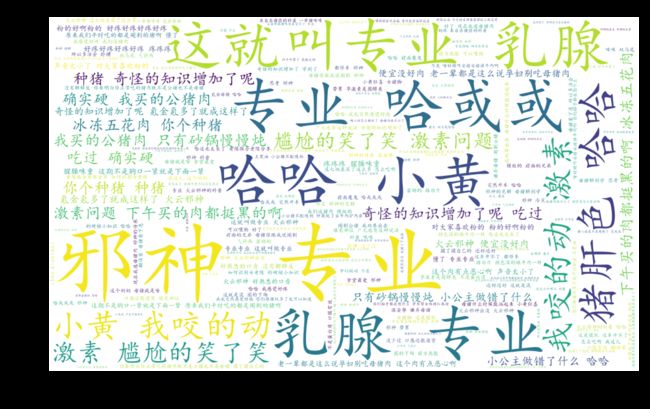

2. Drawing the word cloud

A word cloud highlights the key words in a text: the more often a word appears, the larger it is drawn.
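To make the idea concrete, here is a toy sketch that counts words and maps each count linearly onto a font-size range. The linear mapping is an illustrative assumption, not the exact formula used by `wordcloud` or pyecharts. The default range of 20 to 50 mirrors the `word_size_range` used with pyecharts below, and `font_sizes` is a hypothetical helper.

```python
from collections import Counter


def font_sizes(words, min_size=20, max_size=50):
    """Map each word's frequency linearly onto [min_size, max_size]."""
    counts = Counter(words)
    if not counts:
        return {}
    lo, hi = min(counts.values()), max(counts.values())
    sizes = {}
    for word, n in counts.items():
        # If every count is equal, avoid dividing by zero and use max_size
        frac = 1.0 if hi == lo else (n - lo) / (hi - lo)
        sizes[word] = round(min_size + frac * (max_size - min_size))
    return sizes


words = ["好看", "好看", "好看", "好看", "前方", "高能", "高能"]
print(font_sizes(words))
```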

Reference: jieba, the Chinese word segmentation library.

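```python
import jieba  # Chinese word segmentation library: splits sentences into words
import wordcloud
import pyecharts.charts as pe
import matplotlib.pyplot as plt
import matplotlib.font_manager as fm

plt.rcParams["font.sans-serif"] = ["SimHei"]  # display Chinese labels correctly
plt.rcParams["axes.unicode_minus"] = False  # display minus signs correctly

# The CSV was written as "utf-8-sig"; reading it the same way strips the BOM
with open("B站弹幕.csv", encoding="utf-8-sig") as f:
    txt = f.read()
```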

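```python
def make_wordcloud(text):
    # Remove words you do not want to show
    text1 = text.replace("哈哈哈", "")
    # WordCloud's tokenizer treats an unbroken run of Chinese characters as one word,
    # so segment with jieba first and separate the words with spaces
    text1 = " ".join(jieba.cut(text1))

    # Read an image to use as the word-cloud shape
    # bg = plt.imread("zz.jpg")

    # Generate the word cloud
    wc = wordcloud.WordCloud(  # FFFAE3
        background_color="white",  # white background (the default is black)
        width=1000,  # image width in pixels
        height=600,  # image height in pixels
        # mask=bg,
        margin=1,  # spacing around each word
        max_font_size=150,  # font size of the largest word
        random_state=10,  # fixed random seed, so the layout is reproducible
        font_path="simkai.ttf",  # a Chinese font (KaiTi, ships with Windows); without one, Chinese renders as boxes
    ).generate_from_text(text1)

    # Font for any matplotlib text on the figure
    my_font = fm.FontProperties(fname="simkai.ttf")

    # Take the word colours from the background image
    # bg_color = wordcloud.ImageColorGenerator(bg)

    # Figure resolution (a high dpi gives a sharp but very large image)
    plt.figure(dpi=1000)
    # Show the image
    plt.imshow(wc.recolor())
    # Hide the axes
    plt.axis("off")
    plt.show()
    # Save the word cloud
    # wc.to_file("CC.png")


make_wordcloud(txt)
```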
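`text.replace("哈哈哈", "")` is a blunt filter: it deletes the substring wherever it appears, so "哈哈哈哈" still leaves a "哈" behind. A token-level filter is more predictable. Below is a minimal sketch, assuming the text has already been split into words; a hand-made token list stands in for jieba's output, and `STOPWORDS` and `filter_tokens` are hypothetical names. `wordcloud.WordCloud` also accepts a `stopwords` set for the same purpose.

```python
import re
from collections import Counter

STOPWORDS = {"的", "了", "啊"}  # hypothetical stop-word list
LAUGH = re.compile(r"哈+")  # tokens made only of 哈, whatever their length


def filter_tokens(tokens):
    """Drop blank tokens, stop words and pure-laughter tokens."""
    return [
        t for t in tokens
        if t.strip() and t not in STOPWORDS and not LAUGH.fullmatch(t)
    ]


# The substring approach leaves a stray character behind
print("哈哈哈哈".replace("哈哈哈", ""))  # 哈

tokens = ["哈哈哈哈", "前方", "高能", " ", "的", "好看", "哈哈", "好看", "了"]
kept = filter_tokens(tokens)
print(kept)
print(Counter(kept).most_common(1))
```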

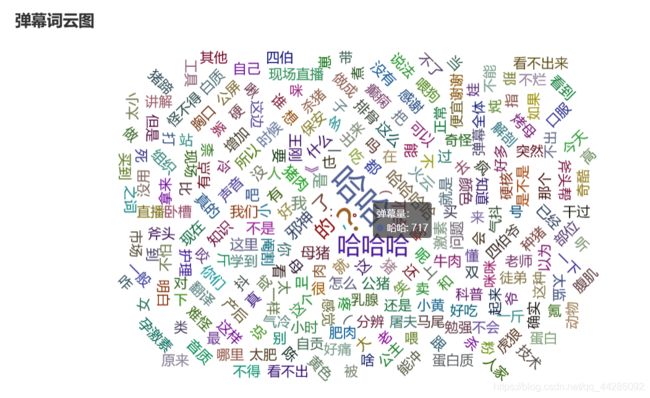

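```python
def data_Pyechart(ZM):
    # jieba.enable_paddle()  # paddle mode only affects jieba.cut(..., use_paddle=True);
    #                        # cut_for_search below does not use it
    Words = []
    for i in ZM:
        # seg_list = jieba.cut(i, cut_all=True)   # full mode
        # seg_list = jieba.cut(i, cut_all=False)  # accurate mode
        seg_list = jieba.cut_for_search(i)  # search-engine mode
        for j in seg_list:
            Words.append(j)

    # Count how often each distinct word occurs
    Words_set = set(Words)
    data = []
    for i in Words_set:
        n = 0
        for j in Words:
            if i == j:
                n += 1
        # Keep only words that appear more than twice
        if n > 2:
            data.append((i, n))
    return data
```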
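The nested loop above compares every distinct word against the whole word list, so its running time grows with (distinct words × total words). `collections.Counter` does the same counting in one pass. This is a minimal sketch of an equivalent counting step that takes an already-segmented word list so it runs without jieba. `count_words` is a hypothetical name, and `min_count=3` matches the original `n > 2` threshold.

```python
from collections import Counter


def count_words(words, min_count=3):
    """Return (word, count) pairs for words occurring at least min_count times,
    most frequent first."""
    counts = Counter(words)
    return [(w, n) for w, n in counts.most_common() if n >= min_count]


words = ["好看", "高能", "好看", "前方", "高能", "好看", "高能", "好看"]
print(count_words(words))
```

Sorting by frequency also makes it easy to keep only the top N words, e.g. `count_words(words)[:100]`, before passing them to `data_pair`.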

```python
def Drow_Pyecharts_WordCloed(ZM, series_name, title):
    import pyecharts.options as opts
    from pyecharts.charts import WordCloud

    data = data_Pyechart(ZM)
    c = (
        WordCloud(init_opts=opts.InitOpts(width="1000px", height="600px"))
        # word_size_range: smallest and largest font size, in pixels
        .add(series_name=series_name, data_pair=data, word_size_range=[20, 50])
        .set_global_opts(
            title_opts=opts.TitleOpts(
                title=title, title_textstyle_opts=opts.TextStyleOpts(font_size=23)
            ),
            tooltip_opts=opts.TooltipOpts(is_show=True),  # show word and count on hover
        )
    )
    # Render the chart inline in Jupyter Notebook
    return c.render_notebook()


Drow_Pyecharts_WordCloed(ZM, "AA", "BB")  # series name, chart title
```
