Learning Python Deep Learning in 3 Days: Day 1 (Heima Programmer course; does anyone want the video materials?)

Application scenarios

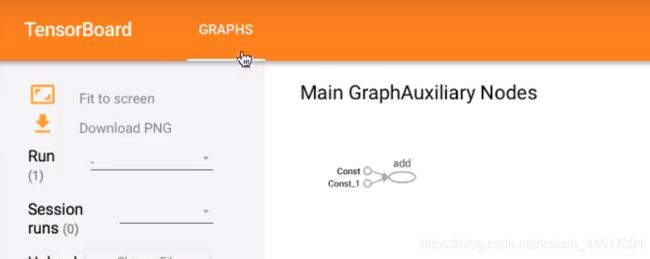

Demonstrating the dataflow graph with an addition in TensorFlow (a session must be opened to get the result):

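```python
# Written for the TensorFlow 1.x API. Under TensorFlow 2.x, use
# `import tensorflow.compat.v1 as tf` followed by `tf.disable_v2_behavior()`.
import tensorflow as tf


def tensorflow_demo():
    # Addition in TensorFlow: this only adds nodes to the graph
    a = tf.constant(2)
    b = tf.constant(3)
    c = a + b
    # Prints a Tensor object, not the value 5
    print("Result of the TensorFlow addition:\n", c)

    # Open a session to actually run the graph
    with tf.Session() as sess:
        c_t = sess.run(c)
        print("c_t:\n", c_t)


if __name__ == '__main__':
    tensorflow_demo()
```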
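To make the build-then-run idea concrete, here is a toy, pure-Python sketch of deferred execution. The classes `Const`, `Add` and `ToySession` are hypothetical stand-ins, not TensorFlow's API: writing `a + b` only records a node, and a value appears only when the session walks the graph.

```python
class Node:
    """A node in a toy dataflow graph; building it computes nothing."""

    def __add__(self, other):
        # Like a + b in TensorFlow 1.x: returns a new graph node, not a number
        return Add(self, other)


class Const(Node):
    def __init__(self, value):
        self.value = value

    def __repr__(self):
        return f"Const({self.value!r})"


class Add(Node):
    def __init__(self, left, right):
        self.left, self.right = left, right

    def __repr__(self):
        return f"Add({self.left!r}, {self.right!r})"


class ToySession:
    """Evaluates a node by recursively walking the graph."""

    def run(self, node):
        if isinstance(node, Const):
            return node.value
        if isinstance(node, Add):
            return self.run(node.left) + self.run(node.right)
        raise TypeError(f"unknown node type: {type(node).__name__}")

    # Allow "with ToySession() as sess:" like tf.Session
    def __enter__(self):
        return self

    def __exit__(self, *exc):
        return False


a = Const(2)
b = Const(3)
c = a + b
print(c)  # Add(Const(2), Const(3)): only the graph, no result yet
with ToySession() as sess:
    print(sess.run(c))  # 5
```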

```python
import tensorflow as tf  # TensorFlow 1.x API


def tensorflow_demo():
    # Addition in TensorFlow
    a = tf.constant(2)
    b = tf.constant(3)
    # c = a + b also works (operator overloading), but tf.add is preferred here
    c = tf.add(a, b)
    print("Result of the TensorFlow addition:\n", c)

    # Inspect the default graph
    # Method 1: call the function
    default_g = tf.get_default_graph()
    print("default_g:\n", default_g)

    # Method 2: read the .graph attribute
    print("Graph of a:\n", a.graph)
    print("Graph of c:\n", c.graph)

    # Open a session
    with tf.Session() as sess:
        c_t = sess.run(c)
        print("c_t:\n", c_t)
        print("Graph of sess:\n", sess.graph)

    return None


if __name__ == '__main__':
    tensorflow_demo()
```

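```python
import tensorflow as tf  # TensorFlow 1.x API


def tensorflow_demo():
    # Create a brand-new graph (tf.get_default_graph() would just return the default graph)
    new_g = tf.Graph()
    # Ops created inside this block are added to new_g instead of the default graph
    with new_g.as_default():
        a_new = tf.constant(20)
        b_new = tf.constant(30)
        c_new = a_new + b_new
        print("c_new:\n", c_new)

    # c_new belongs to new_g, so the default session cannot run it;
    # open a session bound to new_g instead
    with tf.Session(graph=new_g) as new_sess:
        c_new_value = new_sess.run(c_new)
        print("c_new_value:\n", c_new_value)
        print("Graph of new_sess:\n", new_sess.graph)

    return None


if __name__ == '__main__':
    tensorflow_demo()
```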
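The default-graph behaviour can be mimicked with a small stack of graphs. This is a hypothetical pure-Python sketch (`ToyGraph`, `get_default_graph` and `constant` here are illustrative names, not TensorFlow's implementation): every new op registers itself in whichever graph is on top of the stack, and `as_default()` temporarily pushes another graph.

```python
import contextlib


class ToyGraph:
    def __init__(self, name):
        self.name = name
        self.ops = []  # names of the ops registered in this graph

    @contextlib.contextmanager
    def as_default(self):
        # Push this graph so new ops land in it; pop it again on exit
        _graph_stack.append(self)
        try:
            yield self
        finally:
            _graph_stack.pop()

    def __repr__(self):
        return f"<ToyGraph {self.name}>"


# The bottom of the stack plays the role of the global default graph
_graph_stack = [ToyGraph("default")]


def get_default_graph():
    return _graph_stack[-1]


class ToyOp:
    def __init__(self, name):
        self.name = name
        # Each op remembers the graph it was created in (like tensor.graph)
        self.graph = get_default_graph()
        self.graph.ops.append(name)


def constant(name):
    return ToyOp(name)


a = constant("a")
new_g = ToyGraph("new_g")
with new_g.as_default():
    a_new = constant("a_new")

print(a.graph, a_new.graph)  # <ToyGraph default> <ToyGraph new_g>
print(get_default_graph())   # <ToyGraph default> again after the block
```

A session tied to one graph can then only evaluate the ops recorded in that graph, which is why the example above passes `graph=new_g` to `tf.Session`.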

OP

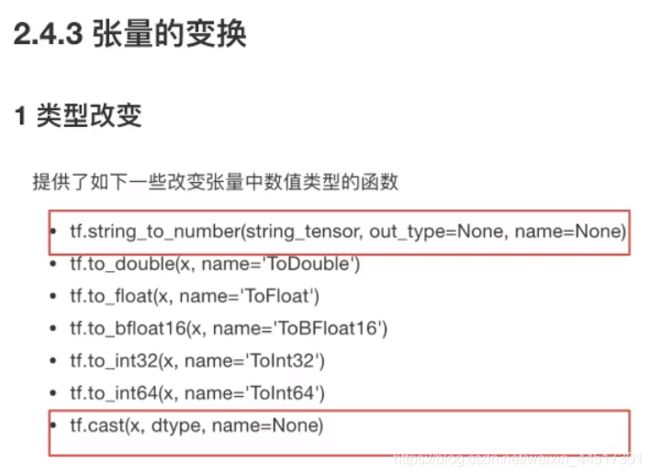
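Every op in a TensorFlow 1.x graph has a unique name, and the tensor it outputs is named `op_name:output_index` (for example, the first `tf.constant` gets the tensor name `Const:0`). When a name is reused, the graph appends a suffix such as `_1`. The sketch below mimics that naming rule for simple cases only; `ToyNamer` and `tensor_name` are hypothetical helpers, not TensorFlow code.

```python
class ToyNamer:
    """Hands out unique op names the way a TF 1.x graph does in simple cases."""

    def __init__(self):
        self._used = {}  # base name -> how many times it has been requested

    def unique_name(self, base):
        count = self._used.get(base, 0)
        self._used[base] = count + 1
        # First use keeps the plain name; later uses get _1, _2, ...
        return base if count == 0 else f"{base}_{count}"


def tensor_name(op_name, output_index=0):
    # A tensor is identified by the op that produced it plus its output slot
    return f"{op_name}:{output_index}"


namer = ToyNamer()
names = [tensor_name(namer.unique_name(n)) for n in ["Const", "Const", "Add", "Const"]]
print(names)  # ['Const:0', 'Const_1:0', 'Add:0', 'Const_2:0']
```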

Tensors

Demo:

Summary of tensor transformations:
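In TensorFlow 1.x the usual tensor transformations concern type and shape: `tf.cast` changes the dtype, `tensor.set_shape()` can only fill in the unknown (`None`) dimensions of a tensor's static shape, and `tf.reshape` returns a new tensor whose total element count must stay the same (one dimension may be `-1` and is then inferred). The stdlib sketch below mimics only the two shape rules; `merge_static_shape` and `reshape_shape` are hypothetical helpers and do not call TensorFlow.

```python
from math import prod


def merge_static_shape(current, new):
    """Mimic tensor.set_shape(): only unknown (None) dims may be filled in."""
    if current is None:  # completely unknown shape: anything is allowed
        return list(new)
    if len(current) != len(new):
        raise ValueError(f"rank mismatch: {current} vs {new}")
    merged = []
    for old_dim, new_dim in zip(current, new):
        if old_dim is not None and new_dim is not None and old_dim != new_dim:
            raise ValueError(f"incompatible shapes: {current} vs {new}")
        merged.append(old_dim if old_dim is not None else new_dim)
    return merged


def reshape_shape(shape, new_shape):
    """Mimic tf.reshape's shape check: same element count, at most one -1."""
    total = prod(shape)
    if new_shape.count(-1) > 1:
        raise ValueError("only one dimension can be -1")
    known = prod(d for d in new_shape if d != -1)
    if -1 in new_shape:
        if known == 0 or total % known != 0:
            raise ValueError(f"cannot reshape {shape} into {new_shape}")
        return [total // known if d == -1 else d for d in new_shape]
    if known != total:
        raise ValueError(f"cannot reshape {shape} into {new_shape}")
    return list(new_shape)


print(merge_static_shape([None, None], [2, 3]))  # [2, 3]
print(reshape_shape([2, 3], [3, 2]))             # [3, 2]
print(reshape_shape([2, 3], [-1]))               # [6]
```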

Code for creating variables, initializing them, and changing the variable scope (namespace):

```python
import os

# Hide TensorFlow's INFO and WARNING log messages (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf  # TensorFlow 1.x API


def variable_demo():
    """
    Variable demo
    :return:
    """
    # Create variables inside the "my_scope" variable scope
    with tf.variable_scope("my_scope"):
        a = tf.Variable(initial_value=50)
        b = tf.Variable(initial_value=40)
    # The add op is created under "your_scope"
    with tf.variable_scope("your_scope"):
        c = tf.add(a, b)
    print("a:\n", a)
    print("b:\n", b)
    print("c:\n", c)

    # Op that initializes all global variables
    init = tf.global_variables_initializer()

    # Open a session
    with tf.Session() as sess:
        # Variables must be initialized before they can be read
        sess.run(init)
        a_value, b_value, c_value = sess.run([a, b, c])
        print("a_value:\n", a_value)
        print("b_value:\n", b_value)
        print("c_value:\n", c_value)

    return None


if __name__ == '__main__':
    variable_demo()
```
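Two rules from this demo can be sketched without TensorFlow: a variable scope prefixes every name created inside it (so `a` above is named `my_scope/Variable:0`, `b` becomes `my_scope/Variable_1:0`, and `c` is `your_scope/Add:0`), and a variable has no value until the initializer has run. The `toy_variable_scope`, `ToyVariable` and `toy_global_variables_initializer` names below are hypothetical; this is a stdlib sketch of those two rules, not TensorFlow's implementation.

```python
import contextlib

_scope_stack = []    # names of the currently open scopes
_all_variables = []  # registry, playing the role of the global-variables collection
_used_names = {}     # full name -> count, used to add _1, _2 suffixes


@contextlib.contextmanager
def toy_variable_scope(name):
    _scope_stack.append(name)
    try:
        yield
    finally:
        _scope_stack.pop()


def _full_name(base):
    prefix = "/".join(_scope_stack + [base])
    count = _used_names.get(prefix, 0)
    _used_names[prefix] = count + 1
    return prefix if count == 0 else f"{prefix}_{count}"


class ToyVariable:
    def __init__(self, initial_value, name="Variable"):
        self.name = _full_name(name) + ":0"
        self._initial_value = initial_value
        self._value = None  # unset until the initializer runs
        _all_variables.append(self)

    def read(self):
        if self._value is None:
            raise RuntimeError(f"uninitialized variable {self.name}")
        return self._value


def toy_global_variables_initializer():
    # Copy every registered variable's initial value into its current value
    for var in _all_variables:
        var._value = var._initial_value


with toy_variable_scope("my_scope"):
    a = ToyVariable(50)
    b = ToyVariable(40)
print(a.name, b.name)  # my_scope/Variable:0 my_scope/Variable_1:0
toy_global_variables_initializer()
print(a.read() + b.read())  # 90
```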

```python
import tensorflow as tf  # TensorFlow 1.x API


def linear_regression():
    """
    Linear regression implemented from scratch
    :return:
    """
    # 1) Prepare data: 100 samples of y = 0.8 * x + 0.7
    # Note: X is random_normal, so every run (including each .eval()) draws a fresh batch
    X = tf.random_normal(shape=[100, 1])
    y_true = tf.matmul(X, [[0.8]]) + 0.7

    # 2) Build the model
    # Model parameters are variables so the optimizer can update them
    weights = tf.Variable(initial_value=tf.random_normal(shape=[1, 1]))
    bias = tf.Variable(initial_value=tf.random_normal(shape=[1, 1]))
    y_predict = tf.matmul(X, weights) + bias

    # 3) Loss function: mean squared error
    error = tf.reduce_mean(tf.square(y_predict - y_true))

    # 4) Minimize the loss with gradient descent
    optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)

    # Explicitly initialize the variables
    init = tf.global_variables_initializer()

    # Open a session
    with tf.Session() as sess:
        # Initialize the variables
        sess.run(init)
        # Parameter values right after initialization
        print("Before training: weight %f, bias %f, loss %f" % (weights.eval(), bias.eval(), error.eval()))

        # Train
        for i in range(100):
            sess.run(optimizer)
            print("After step %d: weight %f, bias %f, loss %f" % (i + 1, weights.eval(), bias.eval(), error.eval()))

    return None


if __name__ == '__main__':
    linear_regression()
```
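What each `sess.run(optimizer)` does can be written out by hand. For the mean squared error L = mean((w*x + b - y)^2), the gradients are dL/dw = 2 * mean((w*x + b - y) * x) and dL/db = 2 * mean(w*x + b - y), and gradient descent subtracts `learning_rate` times each gradient. The stdlib sketch below applies these updates to the same kind of data (y = 0.8x + 0.7, 100 samples). Unlike the TensorFlow version, it draws the data once and uses `learning_rate=0.1` with 500 steps; those values are assumptions chosen so the sketch visibly converges. With the course's 0.01, each step shrinks the weight error by only roughly 2%, so 100 steps still leave a noticeable gap.

```python
import random


def linear_regression_gd(steps=500, learning_rate=0.1, n=100, seed=0):
    """Fit y = w * x + b by plain gradient descent on the mean squared error."""
    rng = random.Random(seed)
    # 1) Data: y = 0.8 * x + 0.7 with x ~ N(0, 1), drawn once
    xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
    ys = [0.8 * x + 0.7 for x in xs]

    # 2) Model parameters, randomly initialized (like tf.random_normal)
    w, b = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)

    for _ in range(steps):
        # 3) Residuals of the current model
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of L = mean(residual ** 2)
        grad_w = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2.0 * sum(residuals) / n
        # 4) Gradient descent step
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b

    loss = sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n
    return w, b, loss


w, b, loss = linear_regression_gd()
print(f"weight {w:.4f}, bias {b:.4f}, loss {loss:.2e}")
```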

```python
import os

# Hide TensorFlow's INFO and WARNING log messages (set before importing TensorFlow)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import tensorflow as tf  # TensorFlow 1.x API


def linear_regression():
    """
    Linear regression implemented from scratch
    :return:
    """
    with tf.variable_scope("prepare_data"):
        # 1) Prepare data
        X = tf.random_normal(shape=[100, 1], name="feature")
        y_true = tf.matmul(X, [[0.8]]) + 0.7

    with tf.variable_scope("create_model"):
        # 2) Build the model; the parameters are variables
        weights = tf.Variable(initial_value=tf.random_normal(shape=[1, 1]), name="Weights")
        bias = tf.Variable(initial_value=tf.random_normal(shape=[1, 1]), name="Bias")
        y_predict = tf.matmul(X, weights) + bias

    with tf.variable_scope("loss_function"):
        # 3) Loss function
        error = tf.reduce_mean(tf.square(y_predict - y_true))

    with tf.variable_scope("optimizer"):
        # 4) Minimize the loss
        optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(error)

    # TensorBoard step 2: collect the values to record
    tf.summary.scalar("error", error)
    tf.summary.histogram("weights", weights)
    tf.summary.histogram("bias", bias)

    # TensorBoard step 3: merge all summaries into a single op
    merged = tf.summary.merge_all()

    # Saver object for saving and restoring the variables
    saver = tf.train.Saver()

    # Explicitly initialize the variables
    init = tf.global_variables_initializer()

    # Open a session
    with tf.Session() as sess:
        # Initialize the variables
        sess.run(init)

        # TensorBoard step 1: create the event file (the graph is written to it too)
        file_writer = tf.summary.FileWriter("./tmp/linear", graph=sess.graph)

        # Parameter values right after initialization
        print("Before training: weight %f, bias %f, loss %f" % (weights.eval(), bias.eval(), error.eval()))

        # # Train (uncomment to train and save the model)
        # for i in range(100):
        #     sess.run(optimizer)
        #     print("After step %d: weight %f, bias %f, loss %f" % (i + 1, weights.eval(), bias.eval(), error.eval()))
        #
        #     # Run the merged summary op
        #     summary = sess.run(merged)
        #     # Write this step's summaries to the event file
        #     file_writer.add_summary(summary, i)
        #
        #     # Save the model every 10 steps
        #     if i % 10 == 0:
        #         saver.save(sess, "./tmp/model/my_linear.ckpt")

        # Load the model if a checkpoint exists
        if os.path.exists("./tmp/model/checkpoint"):
            saver.restore(sess, "./tmp/model/my_linear.ckpt")
        print("After training: weight %f, bias %f, loss %f" % (weights.eval(), bias.eval(), error.eval()))

        # Flush pending events to disk
        file_writer.close()

    return None


if __name__ == '__main__':
    linear_regression()
```
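The save/restore logic above (save every 10 steps; on a later run, restore only if a checkpoint file exists) is a general pattern. Here is a minimal stdlib sketch of it, assuming a JSON file as the checkpoint format; `save_checkpoint`, `restore_checkpoint`, `train` and the file layout are hypothetical and unrelated to TensorFlow's own checkpoint format.

```python
import json
import os
import tempfile


def save_checkpoint(path, params, step):
    # Write to a temporary file first, then rename, so a crash never leaves a half-written checkpoint
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"step": step, "params": params}, f)
    os.replace(tmp_path, path)


def restore_checkpoint(path):
    # Same idea as: if os.path.exists(".../checkpoint"): saver.restore(...)
    if not os.path.exists(path):
        return None
    with open(path) as f:
        return json.load(f)


def train(path, steps=100, save_every=10):
    params = {"weight": 0.0, "bias": 0.0}
    for i in range(steps):
        # Stand-in for sess.run(optimizer): move the parameters toward 0.8 / 0.7
        params["weight"] += 0.1 * (0.8 - params["weight"])
        params["bias"] += 0.1 * (0.7 - params["bias"])
        if i % save_every == 0:
            save_checkpoint(path, params, i)
    return params


ckpt = os.path.join(tempfile.mkdtemp(), "my_linear.json")
print(restore_checkpoint(ckpt))  # None: nothing saved yet
train(ckpt)
print(restore_checkpoint(ckpt))  # the parameters saved at step 90
```

As in the original loop, `i % 10 == 0` means the last save happens at step 90, not after the final step; saving once more after the loop would keep the final parameters.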

```python
import tensorflow as tf  # TensorFlow 1.x API

# 1) Define command-line flags
tf.app.flags.DEFINE_integer("max_step", 100, "number of training steps")
tf.app.flags.DEFINE_string("model_dir", "Unknown", "path and file name for saving the model")

# 2) Shorter alias for the flag values
FLAGS = tf.app.flags.FLAGS


def command_demo():
    """
    Command-line flag demo
    :return:
    """
    print("max_step:\n", FLAGS.max_step)
    print("model_dir:\n", FLAGS.model_dir)
    return None


if __name__ == '__main__':
    command_demo()
```

Calling `tf.app.run()` directly in the main block parses the flags and then calls `main(argv)`; in this demo, `main` just prints the path.
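`tf.app.flags` and `tf.app.run()` are TensorFlow 1.x helpers. The same define-flags, parse, call `main(argv)` flow can be sketched with the standard library's `argparse`; the `build_parser`, `main` and `run` names below are hypothetical, and the defaults copy the flags defined above.

```python
import argparse
import sys


def build_parser():
    parser = argparse.ArgumentParser(description="command-line flag demo")
    # Same names and defaults as the tf.app.flags definitions above
    parser.add_argument("--max_step", type=int, default=100,
                        help="number of training steps")
    parser.add_argument("--model_dir", type=str, default="Unknown",
                        help="path and file name for saving the model")
    return parser


def main(argv, flags):
    # argv[0] is the script path; any arguments that were not flags follow it
    print("argv:", argv)
    print("max_step:", flags.max_step)
    print("model_dir:", flags.model_dir)
    return flags


def run(argv=None):
    # Rough analogue of tf.app.run(): parse the known flags, pass the rest to main
    argv = sys.argv if argv is None else argv
    flags, remaining = build_parser().parse_known_args(argv[1:])
    return main([argv[0]] + remaining, flags)


if __name__ == "__main__":
    run()
```

For example, `python demo.py --max_step=3` (the script name is hypothetical) would print `max_step: 3` and keep the default `model_dir`.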