Spark RDD


I. Overview

At a high level, every Spark application consists of a driver program that runs the user's main function and executes various parallel operations on a cluster. The main abstraction Spark provides is a resilient distributed dataset (RDD), which is a collection of elements partitioned across the nodes of the cluster that can be operated on in parallel.

An RDD is created by starting from a file in the Hadoop file system (or any other Hadoop-supported file system), or from an existing Scala collection in the driver program, and transforming it; RDD operators are then called to apply further transformations. Users may also ask Spark to persist an RDD in memory so that it can be reused efficiently across parallel operations. Finally, RDDs automatically recover from node failures.

II. Development Environment

1、Import the Maven dependencies

<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.11</artifactId>
    <version>2.4.5</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>2.9.2</version>
</dependency>

2、Scala compiler plugin

<plugin>
    <groupId>net.alchim31.maven</groupId>
    <artifactId>scala-maven-plugin</artifactId>
    <version>4.0.1</version>
    <executions>
        <execution>
            <id>scala-compile-first</id>
            <phase>process-resources</phase>
            <goals>
                <goal>add-source</goal>
                <goal>compile</goal>
            </goals>
        </execution>
    </executions>
</plugin>

3、Fat jar packaging plugin

<plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-shade-plugin</artifactId>
    <version>2.4.3</version>
    <executions>
        <execution>
            <phase>package</phase>
            <goals>
                <goal>shade</goal>
            </goals>
            <configuration>
                <filters>
                    <filter>
                        <artifact>*:*</artifact>
                        <excludes>
                            <exclude>META-INF/*.SF</exclude>
                            <exclude>META-INF/*.DSA</exclude>
                            <exclude>META-INF/*.RSA</exclude>
                        </excludes>
                    </filter>
                </filters>
            </configuration>
        </execution>
    </executions>
</plugin>

4、JDK compiler version plugin (optional)

<plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-compiler-plugin</artifactId>
    <version>3.2</version>
    <configuration>
        <source>1.8</source>
        <target>1.8</target>
        <encoding>UTF-8</encoding>
    </configuration>
    <executions>
        <execution>
            <phase>compile</phase>
            <goals>
                <goal>compile</goal>
            </goals>
        </execution>
    </executions>
</plugin>

5、Write the Driver

// Package name taken from the --class argument of the spark-submit command in step 7
package com.baizhi.quickstart

import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

object SparkWordCountApplication1 {
  // Driver
  def main(args: Array[String]): Unit = {
    // 1. Create the SparkContext
    val conf = new SparkConf()
      .setMaster("spark://CentOS:7077")
      .setAppName("SparkWordCountApplication")
    val sc = new SparkContext(conf)
    // 2. Create the RDD
    val linesRDD: RDD[String] = sc.textFile("hdfs:///demo/words")
    // 3. RDD -> RDD transformations: lazy, executed in parallel
    val resultRDD: RDD[(String, Int)] = linesRDD.flatMap(line => line.split("\\s+"))
      .map(word => (word, 1))
      .reduceByKey((v1, v2) => v1 + v2)
    // 4. RDD -> Unit or a local collection (Array|List): an action, which triggers the job
    val resultArray: Array[(String, Int)] = resultRDD.collect()
    // From here on this is ordinary local Scala collection code, independent of Spark
    resultArray.foreach(t => println(t._1 + "->" + t._2))
    // 5. Stop the SparkContext
    sc.stop()
  }
}

6、Run mvn package to build the project and upload the fat jar to CentOS

7、Submit the job with spark-submit

[root@CentOS spark-2.4.5]# ./bin/spark-submit --master spark://centos:7077 --deploy-mode client --class com.baizhi.quickstart.SparkWordCountApplication1 --name wordcount --total-executor-cores 6 /root/spark-rdd-1.0-SNAPSHOT.jar

8、Spark can also run locally for testing

object SparkWordCountApplication2 {
  // Driver
  def main(args: Array[String]): Unit = {
    // 1. Create the SparkContext; local[6] runs Spark in-process with 6 threads
    val conf = new SparkConf()
      .setMaster("local[6]")
      .setAppName("SparkWordCountApplication")
    val sc = new SparkContext(conf)
    // Reduce log output
    sc.setLogLevel("ERROR")
    // 2. Create the RDD
    val linesRDD: RDD[String] = sc.textFile("hdfs://CentOS:9000/demo/words")
    // 3. RDD -> RDD transformations: lazy, executed in parallel
    val resultRDD: RDD[(String, Int)] = linesRDD.flatMap(line => line.split("\\s+"))
      .map(word => (word, 1))
      .reduceByKey((v1, v2) => v1 + v2)
    // 4. RDD -> Unit or a local collection (Array|List): an action, which triggers the job
    val resultArray: Array[(String, Int)] = resultRDD.collect()
    // From here on this is ordinary local Scala collection code, independent of Spark
    resultArray.foreach(t => println(t._1 + "->" + t._2))
    // 5. Stop the SparkContext
    sc.stop()
  }
}

Add a log4j.properties file to the resources directory:

log4j.rootLogger = FATAL,stdout

log4j.appender.stdout = org.apache.log4j.ConsoleAppender

log4j.appender.stdout.Target = System.out

log4j.appender.stdout.layout = org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern = %p %d{yyyy-MM-dd HH:mm:ss} %c %m%n

III. Creating RDDs

Spark revolves around the concept of a resilient distributed dataset (RDD), which is a fault-tolerant collection of elements that can be operated on in parallel. There are two ways to create RDDs:

① parallelizing an existing Scala collection in your driver program;

② referencing a dataset in an external storage system, such as a shared filesystem, HDFS, HBase, or any data source offering a Hadoop InputFormat.

1、Parallelized Collections (created from local data): sc.parallelize or sc.makeRDD

Parallelized collections are created by calling SparkContext's parallelize or makeRDD method on an existing collection (a Scala Seq) in the driver program. The elements of the collection are copied to form a distributed dataset that can be operated on in parallel. For example, here is how to create a parallelized collection holding the numbers 1 to 5:

scala> val data = Array(1, 2, 3, 4, 5)

data: Array[Int] = Array(1, 2, 3, 4, 5)

scala> val distData = sc.parallelize(data)

distData: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at parallelize at

<console>:26

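A parallelized collection accepts an optional partition-count argument that sets the parallelism of the computation; Spark runs one task for each partition.

When the user does not specify it, sc chooses the number of partitions from the resources allocated to the application. For example: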

[root@centos spark-2.4.5]# ./bin/spark-shell --master spark://centos:7077 --total-executor-cores 6

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://centos:4040

Spark context available as 'sc' (master = spark://centos:7077, app id = app-20200208013551-0006).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.5

/_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_231)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

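With 6 cores allocated as above, a parallelized collection gets 6 partitions by default. The number of partitions can also be set manually: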

scala> val distData = sc.parallelize(data,10)

distData: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[1] at parallelize at

<console>:26

scala> distData.getNumPartitions

res1: Int = 10

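2、External Datasets (reading from external systems)

Spark can create distributed datasets from any storage source supported by Hadoop, including your local file system, HDFS, HBase, Amazon S3, relational databases, and so on.

①、Local file system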

scala> sc.textFile("file:///root/t_word").collect

res6: Array[String] = Array(this is a demo, hello spark, "good good study ", "day day up ", come on baby)

②、Reading from HDFS

a、textFile

Converts a file into an RDD[String]; each line of the file becomes one element of the RDD.

scala> sc.textFile("hdfs:///demo/words/t_word").collect

res7: Array[String] = Array(this is a demo, hello spark, "good good study ", "day day up ", come on baby)

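textFile also takes an optional second argument that controls the number of partitions. By default Spark creates one partition per file system block; a larger value can be requested, but the number of partitions cannot be smaller than the number of blocks. When the block count is unknown, the argument is usually just omitted.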

b、wholeTextFiles

Converts files into an RDD[(String, String)] in which each tuple represents one file: _1 is the file name and _2 is the file content.

scala> sc.wholeTextFiles("hdfs:///demo/words",1).collect

res26: Array[(String, String)] =

Array((hdfs://CentOS:9000/demo/words/t_word,"this is a demo

hello spark

good good study

day day up

come on baby

"))


scala> sc.wholeTextFiles("hdfs:///demo/words",1).map(t=>t._2).flatMap(context=>context.split("\n")).collect

res25: Array[String] = Array(this is a demo, hello spark, "good good study ", "day day up ", come on baby)

3、newAPIHadoopRDD

①、MySQL

<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>5.1.38</version>
</dependency>

// Package name taken from the INPUT_CLASS_PROPERTY value below
package com.baizhi.createrdd

import java.sql.{Date, PreparedStatement, ResultSet}

import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.io.LongWritable
import org.apache.hadoop.mapreduce.lib.db.{DBConfiguration, DBInputFormat, DBWritable}
import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

object SparkNewHadoopAPIMySQL {
  // Driver
  def main(args: Array[String]): Unit = {
    // 1. Create the SparkContext
    val conf = new SparkConf()
      .setMaster("local[*]")
      .setAppName("SparkWordCountApplication")
    val sc = new SparkContext(conf)

    val hadoopConfig = new Configuration()
    DBConfiguration.configureDB(hadoopConfig, // database connection parameters
      "com.mysql.jdbc.Driver",
      "jdbc:mysql://localhost:3306/test",
      "root",
      "root"
    )
    // Query-related properties
    hadoopConfig.set(DBConfiguration.INPUT_QUERY, "select id,name,password,birthDay from t_user")
    hadoopConfig.set(DBConfiguration.INPUT_COUNT_QUERY, "select count(id) from t_user")
    hadoopConfig.set(DBConfiguration.INPUT_CLASS_PROPERTY, "com.baizhi.createrdd.UserDBWritable")

    // Read the external data source through the InputFormat provided by Hadoop
    val jdbcRDD: RDD[(LongWritable, UserDBWritable)] = sc.newAPIHadoopRDD(
      hadoopConfig,                           // Hadoop configuration
      classOf[DBInputFormat[UserDBWritable]], // input format class
      classOf[LongWritable],                  // key type read by the mapper
      classOf[UserDBWritable]                 // value type read by the mapper
    )

    jdbcRDD.map(t => (t._2.id, t._2.name, t._2.password, t._2.birthDay))
      .collect() // action: pulls the remote data to the driver; generally only for small test data
      .foreach(t => println(t))

    // jdbcRDD.foreach(t => println(t))           // action executed on the executors: OK
    // jdbcRDD.collect().foreach(t => println(t)) // error: UserDBWritable and LongWritable are not serializable

    // Stop the SparkContext
    sc.stop()
  }
}

class UserDBWritable extends DBWritable {
  var id: Int = _
  var name: String = _
  var password: String = _
  var birthDay: Date = _

  // Used by DBOutputFormat; this example only reads, so it can be left empty
  override def write(preparedStatement: PreparedStatement): Unit = {}

  // Used by DBInputFormat: copy the current row of the result set into the fields
  override def readFields(resultSet: ResultSet): Unit = {
    id = resultSet.getInt("id")
    name = resultSet.getString("name")
    password = resultSet.getString("password")
    birthDay = resultSet.getDate("birthDay")
  }
}

②、HBase

<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-auth</artifactId>
    <version>2.9.2</version>
</dependency>
<dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-client</artifactId>
    <version>1.2.4</version>
</dependency>
<dependency>
    <groupId>org.apache.hbase</groupId>
    <artifactId>hbase-server</artifactId>
    <version>1.2.4</version>
</dependency>

import org.apache.hadoop.conf.Configuration

import org.apache.hadoop.hbase.HConstants

import org.apache.hadoop.hbase.client.{Result, Scan}

import org.apache.hadoop.hbase.io.ImmutableBytesWritable

import org.apache.hadoop.hbase.mapreduce.{TableInputFormat, TableMapReduceUtil}

import org.apache.hadoop.hbase.protobuf.ProtobufUtil

import org.apache.hadoop.hbase.util.{Base64, Bytes}

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object SparkNewHadoopAPIHbase {
  // Driver
  def main(args: Array[String]): Unit = {
    // 1. Create the SparkContext
    val conf = new SparkConf()
      .setMaster("local[*]")
      .setAppName("SparkWordCountApplication")
    val sc = new SparkContext(conf)

    val hadoopConf = new Configuration()
    hadoopConf.set(HConstants.ZOOKEEPER_QUORUM, "CentOS") // HBase connection parameter
    hadoopConf.set(TableInputFormat.INPUT_TABLE, "baizhi:t_user")

    val scan = new Scan() // build the query
    val pro = ProtobufUtil.toScan(scan)
    hadoopConf.set(TableInputFormat.SCAN, Base64.encodeBytes(pro.toByteArray))

    val hbaseRDD: RDD[(ImmutableBytesWritable, Result)] = sc.newAPIHadoopRDD(
      hadoopConf,                      // Hadoop configuration
      classOf[TableInputFormat],       // input format
      classOf[ImmutableBytesWritable], // key type read by the mapper
      classOf[Result]                  // value type read by the mapper
    )

    hbaseRDD.map(t => {
      val rowKey = Bytes.toString(t._1.get())
      val result = t._2
      val name = Bytes.toString(result.getValue("cf1".getBytes(), "name".getBytes()))
      (rowKey, name)
    }).foreach(t => println(t))

    // Stop the SparkContext
    sc.stop()
  }
}

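IV. RDD Operations

RDDs support two types of operations: transformations, which turn an existing RDD into a new RDD, and actions, which run a computation and generally return the result to the driver. All transformations in Spark are lazy: they are not executed immediately but only record the transformation logic to apply to the RDD. The transformations are actually computed only when an action requires a result to be returned to the driver program. This design lets Spark run more efficiently.

By default, each transformed RDD may be recomputed every time an action is run on it. However, you can also keep an RDD in memory with the persist (or cache) method, in which case Spark keeps the elements around on the cluster so that the next query can access them much faster.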

scala> var rdd1=sc.textFile("hdfs:///demo/words/t_word",1).map(line=>line.split(" ").length)

rdd1: org.apache.spark.rdd.RDD[Int] = MapPartitionsRDD[117] at map at <console>:24

scala> rdd1.cache

res54: org.apache.spark.rdd.RDD[Int] = MapPartitionsRDD[117] at map at <console>:24

scala> rdd1.reduce(_+_)

res55: Int = 15

scala> rdd1.reduce(_+_)

res56: Int = 15

Spark also supports persisting RDDs on disk or replicating them across multiple nodes. For example, calling persist(StorageLevel.DISK_ONLY_2) stores the RDD on disk with two replicas.
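The lazy evaluation and caching behaviour described in this section can be mimicked with plain Scala collections, without a cluster. The sketch below is only an analogy and not Spark code: a `view` stands in for a lazy transformation, forcing it with `sum` stands in for an action, and `toVector` plays the role of `cache`. The names `LazyCacheSketch` and `run` are made up for illustration.

```scala
object LazyCacheSketch {
  /** Returns how many times the mapping function has run after each step. */
  def run(lines: Vector[String]): (Int, Int, Int) = {
    var evaluations = 0

    // Lazy, like an RDD transformation: defining it evaluates nothing.
    val lengths = lines.view.map { line =>
      evaluations += 1
      line.split(" ").length
    }
    val afterDefinition = evaluations

    // Two "actions": each one re-runs the function for every line.
    lengths.sum
    lengths.sum
    val afterTwoActions = evaluations

    // Analogous to cache(): materialize once, then reuse the stored result.
    val cached = lengths.toVector
    cached.sum
    cached.sum
    val afterCaching = evaluations

    (afterDefinition, afterTwoActions, afterCaching)
  }
}
```

For two input lines, `run` reports no evaluations after the definition, four after the two uncached actions, and only two more for materializing the cached copy, however often the cached copy is read afterwards.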

1、Transformations

①、map(func)

Return a new distributed dataset formed by passing each element of the source through a function func.

Converts an RDD[U] into an RDD[T]. The user supplies an anonymous function func: U => T.

scala> var rdd:RDD[String]=sc.makeRDD(List("a","b","c","a"))

rdd: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[120] at makeRDD at

<console>:25

scala> val mapRDD:RDD[(String,Int)] = rdd.map(w => (w, 1))

mapRDD: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[121] at map at

<console>:26

②、filter(func)

Return a new dataset formed by selecting those elements of the source on which func returns true.

Filters the elements of an RDD[U], producing a new RDD[U]. The user supplies func: U => Boolean, and only the elements for which it returns true are kept.

scala> var rdd:RDD[Int]=sc.makeRDD(List(1,2,3,4,5))

rdd: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[122] at makeRDD at

<console>:25

scala> val mapRDD:RDD[Int]=rdd.filter(num=> num %2 == 0)

mapRDD: org.apache.spark.rdd.RDD[Int] = MapPartitionsRDD[123] at filter at

<console>:26

scala> mapRDD.collect

res63: Array[Int] = Array(2, 4)

③、flatMap(func)

Similar to map, but each input item can be mapped to 0 or more output items (so func should return a Seq rather than a single item)

Like map, it converts an RDD[U] into an RDD[T], but the user supplies a function func: U => Seq[T] and the returned sequences are flattened into the result.

scala> var rdd:RDD[String]=sc.makeRDD(List("this is","good good"))

rdd: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[124] at makeRDD at

<console>:25

scala> var flatMapRDD:RDD[(String,Int)]=rdd.flatMap(line=> for(i<- line.split("\\s+"))

yield (i,1))

flatMapRDD: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[125] at flatMap

at <console>:26

scala> var flatMapRDD:RDD[(String,Int)]=rdd.flatMap( line=>

line.split("\\s+").map((_,1)))

flatMapRDD: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[126] at flatMap

at <console>:26

scala> flatMapRDD.collect

res64: Array[(String, Int)] = Array((this,1), (is,1), (good,1), (good,1))

④、mapPartitions(func)

Similar to map, but runs separately on each partition (block) of the RDD, so func must be of type Iterator<T> => Iterator<U> when running on an RDD of type T.

Like map, but the function receives all the data of one partition at a time, so the user supplies a per-partition function func: Iterator[T] => Iterator[U].

scala> var rdd:RDD[Int]=sc.makeRDD(List(1,2,3,4,5))

rdd: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[128] at makeRDD at

<console>:25

scala> var mapPartitionsRDD=rdd.mapPartitions(values => values.map(n=>(n,n%2==0)))

mapPartitionsRDD: org.apache.spark.rdd.RDD[(Int, Boolean)] = MapPartitionsRDD[129] at

mapPartitions at <console>:26

scala> mapPartitionsRDD.collect

res70: Array[(Int, Boolean)] = Array((1,false), (2,true), (3,false), (4,true),

(5,false))

⑤、mapPartitionsWithIndex(func)

Similar to mapPartitions, but also provides func with an integer value representing the index of the partition, so func must be of type (Int, Iterator<T>) => Iterator<U> when running on an RDD of type T.

Like mapPartitions, but the function also receives the index of the partition that holds the elements, so func: (Int, Iterator[T]) => Iterator[U].

scala> var rdd:RDD[Int]=sc.makeRDD(List(1,2,3,4,5,6),2)

rdd: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[139] at makeRDD at

<console>:25

scala> var mapPartitionsWithIndexRDD=rdd.mapPartitionsWithIndex((p,values) =>

values.map(n=>(n,p)))

mapPartitionsWithIndexRDD: org.apache.spark.rdd.RDD[(Int, Int)] =

MapPartitionsRDD[140] at mapPartitionsWithIndex at <console>:26

scala> mapPartitionsWithIndexRDD.collect

res77: Array[(Int, Int)] = Array((1,0), (2,0), (3,0), (4,1), (5,1), (6,1))

⑥、sample( withReplacement , fraction , seed)

Sample a fraction fraction of the data, with or without replacement, using a given random number generator seed.

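Draws a sample from the RDD. withReplacement controls whether an element may be sampled more than once, fraction is the approximate fraction of the data to sample, and seed seeds the random number generator used for sampling.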

scala> var rdd:RDD[Int]=sc.makeRDD(List(1,2,3,4,5,6))

rdd: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[150] at makeRDD at

<console>:25

scala> var simpleRDD:RDD[Int]=rdd.sample(false,0.5d,1L)

simpleRDD: org.apache.spark.rdd.RDD[Int] = PartitionwiseSampledRDD[151] at sample at

<console>:26

scala> simpleRDD.collect

res91: Array[Int] = Array(1, 5, 6)

A different seed can produce a different sample.

⑦、union( otherDataset)

Return a new dataset that contains the union of the elements in the source dataset and the argument.

Merges the elements of two RDDs of the same type; duplicates are kept.

scala> var rdd:RDD[Int]=sc.makeRDD(List(1,2,3,4,5,6))

rdd: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[154] at makeRDD at

<console>:25

scala> var rdd2:RDD[Int]=sc.makeRDD(List(6,7))

rdd2: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[155] at makeRDD at

<console>:25

scala> rdd.union(rdd2).collect

res95: Array[Int] = Array(1, 2, 3, 4, 5, 6, 6, 7)

⑧、intersection( otherDataset)

Return a new RDD that contains the intersection of elements in the source dataset and the argument.

Computes the intersection of two RDDs of the same type.

scala> var rdd:RDD[Int]=sc.makeRDD(List(1,2,3,4,5,6))

rdd: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[154] at makeRDD at

<console>:25

scala> var rdd2:RDD[Int]=sc.makeRDD(List(6,7))

rdd2: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[155] at makeRDD at

<console>:25

scala> rdd.intersection(rdd2).collect

res100: Array[Int] = Array(6)

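⑨、distinct([ numPartitions ])

Return a new dataset that contains the distinct elements of the source dataset.

Removes duplicate elements from the RDD. The optional numPartitions argument sets the number of partitions of the result; when deduplication shrinks the dataset substantially, passing a smaller numPartitions reduces the partition count.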

scala> var rdd:RDD[Int]=sc.makeRDD(List(1,2,3,4,5,6,5))

rdd: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[154] at makeRDD at

<console>:25

scala> rdd.distinct(3).collect

res106: Array[Int] = Array(6, 3, 4, 1, 5, 2)

⑩、join( otherDataset , [ numPartitions ])

When called on datasets of type (K, V) and (K, W), returns a dataset of (K, (V, W)) pairs with all pairs of elements for each key. Outer joins are supported through leftOuterJoin, rightOuterJoin, and fullOuterJoin.

Called on an RDD[(K, V)] and an RDD[(K, W)], join returns a new RDD[(K, (V, W))] (an inner join by default). leftOuterJoin, rightOuterJoin, and fullOuterJoin are also available.

scala> var userRDD:RDD[(Int,String)]=sc.makeRDD(List((1,"zhangsan"),(2,"lisi")))

userRDD: org.apache.spark.rdd.RDD[(Int, String)] = ParallelCollectionRDD[204] at

makeRDD at <console>:25

scala> case class OrderItem(name:String,price:Double,count:Int)

defined class OrderItem

scala> var

orderItemRDD:RDD[(Int,OrderItem)]=sc.makeRDD(List((1,OrderItem("apple",4.5,2))))

orderItemRDD: org.apache.spark.rdd.RDD[(Int, OrderItem)] = ParallelCollectionRDD[206]

at makeRDD at <console>:27

scala> userRDD.join(orderItemRDD).collect

res107: Array[(Int, (String, OrderItem))] = Array((1,

(zhangsan,OrderItem(apple,4.5,2))))

scala> userRDD.leftOuterJoin(orderItemRDD).collect

res108: Array[(Int, (String, Option[OrderItem]))] = Array((1,

(zhangsan,Some(OrderItem(apple,4.5,2)))), (2,(lisi,None)))

⑪、cogroup( otherDataset , [ numPartitions ])

When called on datasets of type (K, V) and (K, W), returns a dataset of (K, (Iterable<V>, Iterable<W>)) tuples. This operation is also called groupWith.

scala> var userRDD:RDD[(Int,String)]=sc.makeRDD(List((1,"zhangsan"),(2,"lisi")))

userRDD: org.apache.spark.rdd.RDD[(Int, String)] = ParallelCollectionRDD[204] at

makeRDD at <console>:25

scala> var

orderItemRDD:RDD[(Int,OrderItem)]=sc.makeRDD(List((1,OrderItem("apple",4.5,2)),

(1,OrderItem("pear",1.5,2))))

orderItemRDD: org.apache.spark.rdd.RDD[(Int, OrderItem)] = ParallelCollectionRDD[215]

at makeRDD at <console>:27

scala> userRDD.cogroup(orderItemRDD).collect

res110: Array[(Int, (Iterable[String], Iterable[OrderItem]))] = Array((1,

(CompactBuffer(zhangsan),CompactBuffer(OrderItem(apple,4.5,2),

OrderItem(pear,1.5,2)))), (2,(CompactBuffer(lisi),CompactBuffer())))

scala> userRDD.groupWith(orderItemRDD).collect

res119: Array[(Int, (Iterable[String], Iterable[OrderItem]))] = Array((1,

(CompactBuffer(zhangsan),CompactBuffer(OrderItem(apple,4.5,2),

OrderItem(pear,1.5,2)))), (2,(CompactBuffer(lisi),CompactBuffer())))

⑫、cartesian( otherDataset)

When called on datasets of types T and U, returns a dataset of (T, U) pairs (all pairs of elements), that is, the Cartesian product of the two datasets.

scala> var rdd1:RDD[Int]=sc.makeRDD(List(1,2,4))

rdd1: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[238] at makeRDD at

<console>:25

scala> var rdd2:RDD[String]=sc.makeRDD(List("a","b","c"))

rdd2: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[239] at makeRDD at

<console>:25

scala> rdd1.cartesian(rdd2).collect

res120: Array[(Int, String)] = Array((1,a), (1,b), (1,c), (2,a), (2,b), (2,c), (4,a),

(4,b), (4,c))

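⑬、coalesce( numPartitions)

Decrease the number of partitions in the RDD to numPartitions. Useful for running operations more efficiently after filtering down a large dataset.

After a filter has removed a large share of the data, coalesce can be used to shrink the number of partitions of the RDD. Called as shown here (with the default shuffle = false), it can only decrease the partition count, not increase it.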

scala> var rdd1:RDD[Int]=sc.makeRDD(0 to 100)

rdd1: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[252] at makeRDD at

<console>:25

scala> rdd1.getNumPartitions

res129: Int = 6

scala> rdd1.filter(n=> n%2 == 0).coalesce(3).getNumPartitions

res127: Int = 3

scala> rdd1.filter(n=> n%2 == 0).coalesce(12).getNumPartitions

res128: Int = 6

⑭、repartition( numPartitions)

Reshuffle the data in the RDD randomly to create either more or fewer partitions and balance it across them. This always shuffles all data over the network.

Similar to coalesce, but this operator can either increase or decrease the number of partitions of the RDD.

scala> var rdd1:RDD[Int]=sc.makeRDD(0 to 100)

rdd1: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[252] at makeRDD at

<console>:25

scala> rdd1.getNumPartitions

res129: Int = 6

scala> rdd1.filter(n=> n%2 == 0).repartition(12).getNumPartitions

res130: Int = 12

scala> rdd1.filter(n=> n%2 == 0).repartition(3).getNumPartitions

res131: Int = 3

⑮、repartitionAndSortWithinPartitions( partitioner)

Repartition the RDD according to the given partitioner and, within each resulting partition, sort records by their keys. This is more efficient than calling repartition and then sorting within each partition because it can push the sorting down into the shuffle machinery.

This operator partitions the data of the RDD with a user-supplied partitioner and then sorts the records inside each partition by key.

scala> case class User(name:String,deptNo:Int)

defined class User

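import org.apache.spark.Partitioner

var empRDD:RDD[User]= sc.parallelize(List(User("张 三",1),User("lisi",2),User("wangwu",1)))

empRDD.map(t => (t.deptNo, t.name)).repartitionAndSortWithinPartitions(new Partitioner {
  override def numPartitions: Int = 4
  override def getPartition(key: Any): Int = {
    // Parentheses are required: in Scala % binds tighter than &
    (key.hashCode() & Integer.MAX_VALUE) % numPartitions
  }
}).mapPartitionsWithIndex((p, values) => {
  // Materialize the iterator first; printing it directly would consume it
  // and leave nothing for collect() to return
  val records = values.toList
  println(p + "\t" + records.mkString("|"))
  records.iterator
}).collect()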
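When a partition index is derived from `hashCode`, the sign bit has to be masked off before the modulus is taken, and the parentheses matter: in Scala `%` binds tighter than `&`, so `hash & Integer.MAX_VALUE % n` is parsed as `hash & (Integer.MAX_VALUE % n)`. With n = 4 the two forms happen to agree, because `Integer.MAX_VALUE % 4` is 3, but for other partition counts some partitions are never used. The following plain-Scala sketch (no Spark involved; `PartitionIndexSketch` and its method names are hypothetical) shows the difference for three partitions.

```scala
object PartitionIndexSketch {
  // Parsed as hash & (Integer.MAX_VALUE % numPartitions), because % binds tighter than &.
  def withoutParens(key: Any, numPartitions: Int): Int =
    key.hashCode() & Integer.MAX_VALUE % numPartitions

  // Clears the sign bit first, then takes the modulus: always in [0, numPartitions).
  def withParens(key: Any, numPartitions: Int): Int =
    (key.hashCode() & Integer.MAX_VALUE) % numPartitions

  def main(args: Array[String]): Unit = {
    val keys = 0 to 9
    // Only partitions 0 and 1 are ever chosen when numPartitions = 3.
    println(keys.map(withoutParens(_, 3)).distinct.sorted)
    // Partitions 0, 1 and 2 are all used.
    println(keys.map(withParens(_, 3)).distinct.sorted)
  }
}
```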

Exercise

1、What optimization strategies are there when two very large files need to be joined?

First write both datasets out with the same partitioner, so that each partition becomes one file:

import org.apache.spark.Partitioner

var user=sc.parallelize(List((1,"zhangsan")))
var order=sc.parallelize(List((2,"apple")))

// One partitioner shared by both sides, so equal keys get the same partition number
val partitioner=new Partitioner{
  override def numPartitions:Int=4
  override def getPartition(key:Any):Int={
    (key.hashCode()&Integer.MAX_VALUE)%numPartitions
  }
}

var up=user.repartitionAndSortWithinPartitions(partitioner)
var op=order.repartitionAndSortWithinPartitions(partitioner)

up.saveAsTextFile("file:///E:/user/up")
op.saveAsTextFile("file:///E:/user/op")

Then join only the files that belong to the same partition:

for(i<-0 until 4){ // partitions are numbered 0 to 3
  var up=sc.textFile("file:///E:/user/up/part-0000"+i).map(t=>{
    // each line looks like (key,value); strip the parentheses and split
    var us=t.substring(1,t.size-1).split(",")
    (us(0),us(1))
  })
  var op=sc.textFile("file:///E:/user/op/part-0000"+i).map(t=>{
    var ur=t.substring(1,t.size-1).split(",")
    (ur(0),ur(1))
  })
  up.join(op).collect.foreach(println)
}
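The idea behind this exercise is that when both datasets are split with the same hash rule, matching keys always end up in the bucket with the same number, so bucket i of one side only ever needs to be joined with bucket i of the other. The sketch below illustrates this on local Scala collections, with in-memory maps standing in for the part files; it is not Spark code, and `bucketOf`, `bucketize` and `bucketedJoin` are hypothetical names.

```scala
object BucketedJoinSketch {
  val numBuckets = 4

  // The same hash rule on both sides, so equal keys always share a bucket index.
  def bucketOf(key: Int): Int = (key.hashCode & Integer.MAX_VALUE) % numBuckets

  // Splits a keyed dataset into buckets (stand-ins for the part files).
  def bucketize[V](data: Seq[(Int, V)]): Map[Int, Seq[(Int, V)]] =
    data.groupBy { case (k, _) => bucketOf(k) }

  // Joins bucket i with bucket i only; no bucket is ever compared with a different one.
  def bucketedJoin[V, W](left: Seq[(Int, V)], right: Seq[(Int, W)]): Seq[(Int, (V, W))] = {
    val l = bucketize(left)
    val r = bucketize(right)
    (0 until numBuckets).flatMap { i =>
      for {
        (k1, v) <- l.getOrElse(i, Seq.empty)
        (k2, w) <- r.getOrElse(i, Seq.empty)
        if k1 == k2 // different keys can still share a bucket
      } yield (k1, (v, w))
    }
  }
}
```

Each per-bucket join works on a fraction of the data, which is what makes the approach attractive when neither input fits comfortably in memory.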

⑯、xxxByKey operators

Spark provides a family of xxxByKey operators that work specifically on datasets of type RDD[(K, V)].

a、groupByKey([ numPartitions ])

When called on a dataset of (K, V) pairs, returns a dataset of (K, Iterable<V>) pairs.

This resembles the MapReduce computing model: it converts an RDD[(K, V)] into an RDD[(K, Iterable[V])].

scala> var lines=sc.parallelize(List("this is good good"))

lines: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[0] at parallelize at

<console>:24

scala> lines.flatMap(_.split("\\s+")).map((_,1)).groupByKey.collect

res3: Array[(String, Iterable[Int])] = Array((this,CompactBuffer(1)), (is,CompactBuffer(1)), (good,CompactBuffer(1, 1)))

b、groupBy(f:(k,v)=> T)

scala> var lines=sc.parallelize(List("this is good good"))

lines: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[0] at parallelize at

<console>:24

scala> lines.flatMap(_.split("\\s+")).map((_,1)).groupBy(t=>t._1)

res5: org.apache.spark.rdd.RDD[(String, Iterable[(String, Int)])] = ShuffledRDD[18] at

groupBy at <console>:26

scala> lines.flatMap(_.split("\\s+")).map((_,1)).groupBy(t=>t._1).map(t=>

(t._1,t._2.size)).collect

res6: Array[(String, Int)] = Array((this,1), (is,1), (good,2))

c、reduceByKey( func , [ numPartitions ])

When called on a dataset of (K, V) pairs, returns a dataset of (K, V) pairs where the values for each key are aggregated using the given reduce function func , which must be of type (V,V) => V. Like in

groupByKey , the number of reduce tasks is configurable through an optional second argument.

scala> var lines=sc.parallelize(List("this is good good"))

lines: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[0] at parallelize at

<console>:24

scala> lines.flatMap(_.split("\\s+")).map((_,1)).reduceByKey(_+_).collect

res8: Array[(String, Int)] = Array((this,1), (is,1), (good,2))

d、aggregateByKey( zeroValue )( seqOp , combOp , [ numPartitions ])

When called on a dataset of (K, V) pairs, returns a dataset of (K, U) pairs where the values for each key are aggregated using the given combine functions and a neutral "zero" value. Allows an aggregated value type that is different than the input value type, while avoiding unnecessary allocations. Like in groupByKey, the number of reduce tasks is configurable through an optional second argument.

scala> var lines=sc.parallelize(List("this is good good"))

lines: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[0] at parallelize at

<console>:24

scala> lines.flatMap(_.split("\\s+")).map((_,1)).aggregateByKey(0)(_+_,_+_).collect

res9: Array[(String, Int)] = Array((this,1), (is,1), (good,2))
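The word count above uses Int for both the input values and the result, which hides the main feature of aggregateByKey: the result type U may differ from the value type V. seqOp folds one value into an accumulator inside a partition, and combOp merges accumulators that come from different partitions. The sketch below simulates this locally, with each inner Seq playing the role of one partition, and computes a per-key average with a (sum, count) accumulator. It is an illustration under those assumptions and not Spark's implementation; the `aggregateByKey` defined here is a hypothetical local stand-in.

```scala
object AggregateByKeySketch {
  // Local stand-in for aggregateByKey: each inner Seq plays the role of one partition.
  def aggregateByKey[K, V, U](partitions: Seq[Seq[(K, V)]], zero: U)(
      seqOp: (U, V) => U,
      combOp: (U, U) => U): Map[K, U] = {
    // Step 1: inside each partition, fold the values of every key into an accumulator.
    val perPartition: Seq[Map[K, U]] = partitions.map { part =>
      part.foldLeft(Map.empty[K, U]) { case (acc, (k, v)) =>
        acc.updated(k, seqOp(acc.getOrElse(k, zero), v))
      }
    }
    // Step 2: merge the per-partition accumulators that belong to the same key.
    perPartition.flatten.foldLeft(Map.empty[K, U]) { case (acc, (k, u)) =>
      acc.updated(k, acc.get(k).map(combOp(_, u)).getOrElse(u))
    }
  }

  // Average per key: the accumulator is a (sum, count) pair, not an Int.
  def averages(partitions: Seq[Seq[(String, Int)]]): Map[String, Double] =
    aggregateByKey(partitions, (0, 0))(
      (acc, v) => (acc._1 + v, acc._2 + 1),
      (a, b) => (a._1 + b._1, a._2 + b._2)
    ).map { case (k, (sum, count)) => k -> sum.toDouble / count }
}
```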

e、sortByKey([ ascending ], [ numPartitions ])

When called on a dataset of (K, V) pairs where K implements Ordered, returns a dataset of (K, V) pairs sorted by keys in ascending or descending order, as specified in the boolean ascending argument

scala> var lines=sc.parallelize(List("this is good good"))

lines: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[0] at parallelize at

<console>:24

scala> lines.flatMap(_.split("\\s+")).map((_,1)).aggregateByKey(0)

(_+_,_+_).sortByKey(true).collect

res13: Array[(String, Int)] = Array((good,2), (is,1), (this,1))

scala> lines.flatMap(_.split("\\s+")).map((_,1)).aggregateByKey(0)

(_+_,_+_).sortByKey(false).collect

res14: Array[(String, Int)] = Array((this,1), (is,1), (good,2))

f、sortBy(T=>U,ascending,[ numPartitions ])

scala> var lines=sc.parallelize(List("this is good good"))

lines: org.apache.spark.rdd.RDD[String] = ParallelCollectionRDD[0] at parallelize at

<console>:24

scala> lines.flatMap(_.split("\\s+")).map((_,1)).aggregateByKey(0)

(_+_,_+_).sortBy(_._2,false).collect

res18: Array[(String, Int)] = Array((good,2), (this,1), (is,1))

scala> lines.flatMap(_.split("\\s+")).map((_,1)).aggregateByKey(0)

(_+_,_+_).sortBy(t=>t,false).collect

res19: Array[(String, Int)] = Array((this,1), (is,1), (good,2))

2、Actions

Every Spark job is triggered by exactly one action, which either writes the data of the RDD to an external system or returns it to the driver program.

①、reduce(func )

Aggregate the elements of the dataset using a function func (which takes two arguments and

returns one). The function should be commutative and associative so that it can be computed

correctly in parallel

This operator aggregates the data on the executors and returns the result to the driver. The example below counts the characters in a file.

scala> sc.textFile("file:///root/t_word").map(_.length).reduce(_+_)

res3: Int = 64

②、collect()

Return all the elements of the dataset as an array at the driver program. This is usually useful after a filter or other operation that returns a sufficiently small subset of the data.

Transfers the data of the remote RDD to the driver. Use collect only in test environments or when the RDD is very small; otherwise the driver may run out of memory.

scala> sc.textFile("file:///root/t_word").collect

res4: Array[String] = Array(this is a demo, hello spark, "good good study ", "day day

up ", come on baby)

③、foreach(func )

Run a function func on each element of the dataset. This is usually done for side effects such as updating an Accumulator or interacting with external storage systems.

scala> sc.textFile("file:///root/t_word").foreach(line=>println(line))

④、count()

Return the number of elements in the dataset.

scala> sc.textFile("file:///root/t_word").count()

res7: Long = 5

⑤、first()|take( n )

Return the first element of the dataset (similar to take(1)). take(n) Return an array with the first n

elements of the dataset

scala> sc.textFile("file:///root/t_word").first

res9: String = this is a demo

scala> sc.textFile("file:///root/t_word").take(1)

res10: Array[String] = Array(this is a demo)

scala> sc.textFile("file:///root/t_word").take(2)

res11: Array[String] = Array(this is a demo, hello spark)

⑥、takeSample( withReplacement , num , [ seed ])

Return an array with a random sample of num elements of the dataset, with or without

replacement, optionally pre-specifying a random number generator seed.

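Randomly samples num elements from the RDD and returns them to the driver program as an array. This is quite different from the sample transformation, which returns a new RDD.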

scala> sc.textFile("file:///root/t_word").takeSample(false,2)

res20: Array[String] = Array("good good study ", hello spark)

⑦、takeOrdered( n , [ordering] )

Return the first n elements of the RDD using either their natural order or a custom comparator.

Returns the first n elements of the RDD; the user can supply the comparison rule.

scala> case class User(name:String,deptNo:Int,salary:Double)

defined class User

scala> var userRDD=sc.parallelize(List(User("zs",1,1000.0),User("ls",2,1500.0),User("ww",2,1000.0)))

userRDD: org.apache.spark.rdd.RDD[User] = ParallelCollectionRDD[51] at parallelize at <console>:26

scala> userRDD.takeOrdered

def takeOrdered(num: Int)(implicit ord: Ordering[User]): Array[User]

scala> userRDD.takeOrdered(3)

<console>:26: error: No implicit Ordering defined for User.

userRDD.takeOrdered(3)

scala> implicit var userOrder=new Ordering[User]{

| override def compare(x: User, y: User): Int = {

| if(x.deptNo!=y.deptNo){

| x.deptNo.compareTo(y.deptNo)

| }else{

| x.salary.compareTo(y.salary) * -1

| }

| }

| }

userOrder: Ordering[User] = $anon$1@7066f4bc

scala> userRDD.takeOrdered(3)

res23: Array[User] = Array(User(zs,1,1000.0), User(ls,2,1500.0), User(ww,2,1000.0))
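The same comparison rule (department ascending, then salary descending) can be written more compactly with `Ordering.by`. The sketch below runs on a local Seq, with `sorted.take(n)` standing in for takeOrdered; the object name and the local `takeOrdered` helper are hypothetical. Note that on Scala 2.13 the implicit Double ordering used here produces a deprecation warning.

```scala
object TakeOrderedSketch {
  case class User(name: String, deptNo: Int, salary: Double)

  // deptNo ascending, then salary descending (negating the salary flips its order).
  implicit val userOrdering: Ordering[User] =
    Ordering.by((u: User) => (u.deptNo, -u.salary))

  // Local equivalent of takeOrdered(n): sort with the implicit ordering, keep the first n.
  def takeOrdered(users: Seq[User], n: Int): Seq[User] = users.sorted.take(n)
}
```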

⑧、saveAsTextFile( path )

Write the elements of the dataset as a text file (or set of text files) in a given directory in the local filesystem, HDFS or any other Hadoop-supported file system. Spark will call toString on each element to convert it to a line of text in the file.

scala> sc.textFile("file:///root/t_word").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).sortBy(_._1,true,1).map(t=>

t._1+"\t"+t._2).saveAsTextFile("hdfs:///demo/results02")

⑨、saveAsSequenceFile( path )

Write the elements of the dataset as a Hadoop SequenceFile in a given path in the local filesystem,

HDFS or any other Hadoop-supported file system. This is available on RDDs of key-value pairs that

implement Hadoop’s Writable interface. In Scala, it is also available on types that are implicitly

convertible to Writable (Spark includes conversions for basic types like Int, Double, String, etc).

This method is only available on RDD[(K, V)], and both K and V must implement the Writable interface. In Scala, Spark provides implicit conversions that turn types such as Int, Double and String into Writable automatically.

scala> sc.textFile("file:///root/t_word").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).sortBy(_._1,true,1).saveAsSequenceFile("hdfs:///demo/results03")

scala> sc.sequenceFile[String,Int]("hdfs:///demo/results03").collect

res29: Array[(String, Int)] = Array((a,1), (baby,1), (come,1), (day,2), (demo,1),

(good,2), (hello,1), (is,1), (on,1), (spark,1), (study,1), (this,1), (up,1))

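五、Shared Variables

When a transformation on an RDD uses a variable defined in the Driver, Spark ships a copy of that variable over the network to the compute nodes before the operator runs there. If a compute node then modifies its copy, the change is not visible to the variable defined on the Driver side.

scala> var i:Int=0
i: Int = 0

scala> sc.textFile("file:///root/t_word").foreach(line=> i=i+1)

scala> print(i)
0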
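Why the Driver still sees 0 can be modelled without a cluster. The sketch below is an analogy in plain Scala, not Spark's actual code path: it serializes a piece of state and deserializes it again, which is roughly what happens to the variables captured by a task closure, and then lets the "task" update only its own copy. `ClosureCopySketch` and `shipCopy` are hypothetical names.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, ObjectInputStream, ObjectOutputStream}

object ClosureCopySketch {
  // Serialize then deserialize: a rough stand-in for shipping captured state to a task.
  def shipCopy(state: Array[Int]): Array[Int] = {
    val buffer = new ByteArrayOutputStream()
    val out = new ObjectOutputStream(buffer)
    out.writeObject(state)
    out.close()
    val in = new ObjectInputStream(new ByteArrayInputStream(buffer.toByteArray))
    try in.readObject().asInstanceOf[Array[Int]] finally in.close()
  }

  // Returns (Driver-side value, task-side value) after the "task" counts three lines.
  def run(): (Int, Int) = {
    val driverSide = Array(0)
    val taskSide = shipCopy(driverSide) // the task only ever sees this copy
    Seq("line1", "line2", "line3").foreach(_ => taskSide(0) += 1)
    (driverSide(0), taskSide(0))
  }
}
```

`ClosureCopySketch.run()` returns `(0, 3)`: the copy was incremented three times, while the original value stayed at 0, just like `i` in the transcript above.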

1、Broadcast Variables

Question:

When a very large dataset has to be joined with a small one, can we simply call the join operator? If not, why not?

// 100 GB
var orderItems=List("001 apple 2 4.5","002 pear 1 2.0","001 ⽠⼦ 1 7.0")
// 10 MB
var users=List("001 zhangsan","002 lisi","003 王五")
var rdd1:RDD[(String,String)] =sc.makeRDD(orderItems).map(line=>(line.split(" ")(0),line))
var rdd2:RDD[(String,String)] =sc.makeRDD(users).map(line=>(line.split(" ")(0),line))
rdd1.join(rdd2).collect().foreach(println)

A join triggers a shuffle, so the 100 GB of data is transferred between the compute nodes to complete it, and the join is expensive in both network traffic and memory. A better option is to hold the small dataset in a variable defined in the Driver and complete the join inside a map operation.

scala> var users=List("001 zhangsan","002 lisi","003 王五").map(line=>line.split(" ")).map(ts=>ts(0)->ts(1)).toMap
users: scala.collection.immutable.Map[String,String] = Map(001 -> zhangsan, 002 -> lisi, 003 -> 王五)

scala> var orderItems=List("001 apple 2 4.5","002 pear 1 2.0","001 ⽠⼦ 1 7.0")
orderItems: List[String] = List(001 apple 2 4.5, 002 pear 1 2.0, 001 ⽠⼦ 1 7.0)

scala> var rdd1:RDD[(String,String)] =sc.makeRDD(orderItems).map(line=>(line.split(" ")(0),line))
rdd1: org.apache.spark.rdd.RDD[(String, String)] = MapPartitionsRDD[89] at map at <console>:32

scala> rdd1.map(t=> t._2+"\t"+users.get(t._1).getOrElse("未知")).collect()
res33: Array[String] = Array(001 apple 2 4.5 zhangsan, 002 pear 1 2.0 lisi, 001 ⽠⼦ 1 7.0 zhangsan)

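This approach has a problem: the users map travels with every task that runs the map operator, so each task (that is, each thread) on a compute node downloads its own copy from the Driver. The map is not large, but the compute nodes download it again and again. To avoid these unnecessary repeated copies, Spark provides broadcast variables, which are downloaded once per process instead of once per thread.

Spark makes the variable to be broadcast known to the compute nodes early in the run. A compute node downloads the broadcast variable before it computes with it and caches it, so the other threads on that node can use the variable without downloading it again.

// 100 GB
var orderItems=List("001 apple 2 4.5","002 pear 1 2.0","001 ⽠⼦ 1 7.0")
// 10 MB, declared as a Map
var users:Map[String,String]=List("001 zhangsan","002 lisi","003 王五").map(line=>line.split(" ")).map(ts=>ts(0)->ts(1)).toMap
// declare the broadcast variable; read the broadcast data through its value property
val ub = sc.broadcast(users)
var rdd1:RDD[(String,String)] =sc.makeRDD(orderItems).map(line=>(line.split(" ")(0),line))
rdd1.map(t=> t._2+"\t"+ub.value.get(t._1).getOrElse("未知")).collect().foreach(println)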

2、Accumulators

Spark's Accumulator lets tasks on multiple nodes update one shared variable. An Accumulator only supports adding, but it does allow many tasks to operate on the same variable in parallel. A task can only add to an Accumulator and cannot read its value; only the Driver program can read it.

scala> val accum = sc.longAccumulator("mycount")
accum: org.apache.spark.util.LongAccumulator = LongAccumulator(id: 1075, name: Some(mycount), value: 0)

scala> sc.parallelize(Array(1, 2, 3, 4),6).foreach(x => accum.add(x))

scala> accum.value
res36: Long = 10
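A typical use is counting records that fail a check while a job runs. The following is a minimal sketch, assuming spark-core is on the classpath as configured in the Maven section and that a local master is acceptable; the sample records, the accumulator name and the object name `AccumulatorSketch` are made up for illustration.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object AccumulatorSketch {
  // Counts order-item lines that do not have exactly four space-separated fields.
  def run(): Long = {
    val conf = new SparkConf().setMaster("local[2]").setAppName("AccumulatorSketch")
    val sc = new SparkContext(conf)
    try {
      val malformed = sc.longAccumulator("malformedLines")
      val lines = sc.parallelize(Seq("001 apple 2 4.5", "002 pear", "bad", "001 melon 1 7.0"), 2)
      // Tasks only add to the accumulator; they never read it.
      lines.foreach { line =>
        if (line.split(" ").length != 4) malformed.add(1)
      }
      // Only the Driver reads the merged value, after the action has finished.
      malformed.value.longValue()
    } finally {
      sc.stop()
    }
  }
}
```

With the four sample lines, two are malformed, so `AccumulatorSketch.run()` returns 2. The update happens inside an action (foreach) rather than a transformation, which is the placement for which Spark's documentation guarantees that each task's update is applied only once.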

六、Writing Data Out of Spark

1、Writing to HDFS

scala> sc.textFile("file:///root/t_word").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).sortBy(_._1,true,1).saveAsSequenceFile("hdfs:///demo/results03")

The saveAsXxx methods all write the result to HDFS or to the local file system. To write the result to a third-party data store, use the foreach operator that Spark provides.

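2、Writing with foreach

Scenario 1: a connection is opened and closed for every record, so writing is very inefficient (the job does run successfully)

sc.textFile("file:///root/t_word")
  .flatMap(_.split(" "))
  .map((_,1))
  .reduceByKey(_+_)
  .sortBy(_._1,true,3)
  .foreach(tuple=>{ // database
    //1. open a connection
    //2. insert the record
    //3. close the connection
  })

Scenario 2: incorrect, because a connection (or connection pool) cannot be serialized (the job fails)

//1. define the Connection
var conn=... // defined in the Driver
sc.textFile("file:///root/t_word")
  .flatMap(_.split(" "))
  .map((_,1))
  .reduceByKey(_+_)
  .sortBy(_._1,true,3)
  .foreach(tuple=>{ // database
    //2. insert the record
  })
//3. close the connection

Scenario 3: one connection per partition? This works reasonably well but is not optimal: one JVM may process several partitions, which means one JVM creates several connections and wastes resources. Would a singleton object help?

sc.textFile("file:///root/t_word")
  .flatMap(_.split(" "))
  .map((_,1))
  .reduceByKey(_+_)
  .sortBy(_._1,true,3)
  .foreachPartition(values=>{
    // open a connection
    // write the partition's data
    // close the connection
  })

Create the connection inside a singleton object. Even if a compute node receives several partitions, the JVM's singleton semantics guarantee that the connection is created only once in the whole JVM.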

val conf = new SparkConf()
  .setMaster("local[*]")
  .setAppName("SparkWordCountApplication")
val sc = new SparkContext(conf)
sc.textFile("hdfs://CentOS:9000/demo/words/")
  .flatMap(_.split(" "))
  .map((_,1))
  .reduceByKey(_+_)
  .sortBy(_._1,true,3)
  .foreachPartition(values=>{
    HbaseSink.writeToHbase("baizhi:t_word",values.toList)
  })
sc.stop()

package com.baizhi.sink

import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.hbase.{HConstants, TableName}
import org.apache.hadoop.hbase.client.{Connection, ConnectionFactory, Put}
import scala.collection.JavaConverters._

object HbaseSink {
  // the connection: created once, when this object is first initialized in the JVM
  private val conn:Connection=createConnection()

  def createConnection(): Connection = {
    val hadoopConf = new Configuration()
    hadoopConf.set(HConstants.ZOOKEEPER_QUORUM,"CentOS")
    ConnectionFactory.createConnection(hadoopConf)
  }

  def writeToHbase(tableName:String,values:List[(String,Int)]): Unit ={
    val tName:TableName=TableName.valueOf(tableName)
    val mutator = conn.getBufferedMutator(tName)
    // one Put per (word, count) pair; the word is the row key
    val puts=values.map(t=>{
      val put = new Put(t._1.getBytes())
      put.addColumn("cf1".getBytes(),"count".getBytes(),t._2.toString.getBytes())
      put
    })
    // write the whole batch
    mutator.mutate(puts.asJava)
    mutator.flush()
    mutator.close()
  }

  // JVM shutdown hook: the JVM calls this back when it exits
  Runtime.getRuntime.addShutdownHook(new Thread(new Runnable {
    override def run(): Unit = {
      println("-----close----")
      conn.close()
    }
  }))
}
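The "created only once per JVM" behaviour that HbaseSink relies on can be checked without HBase. The sketch below is plain Scala under stated assumptions: `FakeConnection`, `ConnectionHolder` and `SingletonSketch` are hypothetical stand-ins, the partitions are simulated by a simple loop rather than by Spark tasks, and a lazy val is used so that the connection is only created on first use (HbaseSink above uses an eager val inside the object instead).

```scala
import java.util.concurrent.atomic.AtomicInteger

// Hypothetical stand-in for an expensive client such as a database connection.
class FakeConnection(val id: Int)

object ConnectionHolder {
  // Counts how many connections were ever constructed in this JVM.
  val created = new AtomicInteger(0)
  // A lazy val inside an object is initialized at most once per JVM, on first access.
  lazy val conn: FakeConnection = new FakeConnection(created.incrementAndGet())
}

object SingletonSketch {
  // Simulates one executor JVM processing several partitions in turn.
  // Returns (number of connections created, distinct connection ids seen by the partitions).
  def run(partitions: Int): (Int, Set[Int]) = {
    val idsSeen = (1 to partitions).map(_ => ConnectionHolder.conn.id).toSet
    (ConnectionHolder.created.get(), idsSeen)
  }
}
```

`SingletonSketch.run(4)` returns `(1, Set(1))`: four simulated partitions shared a single connection. In a real job each executor JVM would hold its own instance, so the number of connections scales with the number of executors rather than with the number of partitions.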

七、RDD进阶

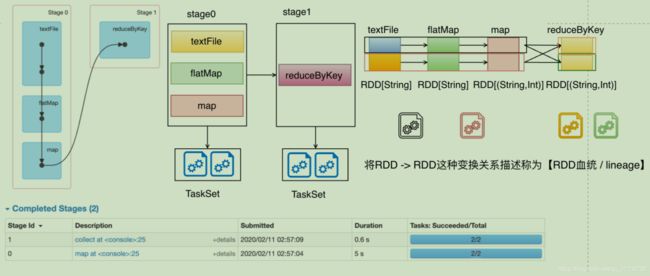

1、分析WordCount

sc.textFile("hdfs:///demo/words/t_word") //RDD0

.flatMap(_.split(" ")) //RDD1

.map((_,1)) //RDD2

.reduceByKey(_+_) //RDD3 finalRDD

.collect //Array 任务提交

* Internally, each RDD is characterized by five main properties: * * - A list of partitions * - A function for computing each split * - A list of dependencies on other RDDs * - Optionally, a Partitioner for key-value RDDs (e.g. to say that the RDD is hash-partitioned) * - Optionally, a list of preferred locations to compute each split on (e.g.block locations for an HDFS file) *

- RDD具有分区-分区数等于该RDD并⾏度

- 每个分区独⽴运算,尽可能实现分区本地性计算

- 只读的数据集且RDD与RDD之间存在着相互依赖关系

- 针对于 key-value RDD,可以指定分区策略【可选】

- 基于数据所属的位置,选择最优位置实现本地性计算【可选】
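Several of these properties can be inspected directly on an RDD. The following is a minimal sketch, assuming spark-core is on the classpath and a local master is acceptable; the input strings and the object name `RddPropertiesSketch` are made up, and the partition count is passed to reduceByKey explicitly so that the result does not depend on default parallelism settings.

```scala
import org.apache.spark.{OneToOneDependency, ShuffleDependency, SparkConf, SparkContext}

object RddPropertiesSketch {
  // Returns (partition count of the reduced RDD,
  //          map RDD depends narrowly on its parent,
  //          reduceByKey RDD depends on its parent through a shuffle,
  //          reduceByKey RDD has a Partitioner).
  def run(): (Int, Boolean, Boolean, Boolean) = {
    val conf = new SparkConf().setMaster("local[2]").setAppName("RddPropertiesSketch")
    val sc = new SparkContext(conf)
    try {
      val pairs = sc.parallelize(Seq("a b", "b c"), 2).flatMap(_.split(" ")).map((_, 1))
      val counts = pairs.reduceByKey(_ + _, 2)
      (
        counts.partitions.length,                                           // list of partitions
        pairs.dependencies.head.isInstanceOf[OneToOneDependency[_]],        // narrow dependency
        counts.dependencies.head.isInstanceOf[ShuffleDependency[_, _, _]],  // wide dependency
        counts.partitioner.isDefined                                        // optional Partitioner
      )
    } finally {
      sc.stop()
    }
  }
}
```

`RddPropertiesSketch.run()` returns `(2, true, true, true)`. Preferred locations can be queried in the same way with `rdd.preferredLocations(partition)`; for a collection created with parallelize there is usually nothing interesting to see, so the sketch leaves it out.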

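2、RDD Fault Tolerance

Before looking at how the DAGScheduler divides a job into stages, you need to know one term: lineage, often described as the bloodline of an RDD. Before explaining what an RDD's lineage is, take a look at the evolution of the programmer.

The figure above shows how the programmer evolved from earlier forms, and the transformation of RDDs can be understood in much the same way. A Spark computation is essentially a series of transformations applied to RDDs. Because an RDD is an immutable, read-only collection, every transformation takes the previous RDD as its input, so the lineage of an RDD describes the dependencies between RDDs. To keep RDD data robust, each RDD remembers through its lineage how it was derived from other RDDs. Spark classifies the relationships between RDDs as wide dependencies and narrow dependencies, and it uses the dependencies stored in the lineage to recover RDD computations from failures. Spark currently offers three fault-tolerance strategies: recomputing from the RDD dependencies (no intervention needed), caching the RDD (temporary), and checkpointing the RDD (persistent).

3、RDD Caching

Caching is one of the means of fault tolerance for RDD computation. When RDD data is lost, the program can quickly rebuild the current RDD from the cache instead of tracing back through all upstream RDDs and recomputing them. So when an RDD is used more than once, consider caching it to make the program more efficient.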

scala> var finalRDD=sc.textFile("hdfs:///demo/words/t_word").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_)
finalRDD: org.apache.spark.rdd.RDD[(String, Int)] = ShuffledRDD[25] at reduceByKey at <console>:24

scala> finalRDD.cache
res7: org.apache.spark.rdd.RDD[(String, Int)] = ShuffledRDD[25] at reduceByKey at <console>:24

scala> finalRDD.collect
res8: Array[(String, Int)] = Array((this,1), (is,1), (day,2), (come,1), (hello,1), (baby,1), (up,1), (spark,1), (a,1), (on,1), (demo,1), (good,2), (study,1))

scala> finalRDD.collect
res9: Array[(String, Int)] = Array((this,1), (is,1), (day,2), (come,1), (hello,1), (baby,1), (up,1), (spark,1), (a,1), (on,1), (demo,1), (good,2), (study,1))

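Call the unpersist method to clear the cache.

scala> finalRDD.unpersist()
res11: org.apache.spark.rdd.RDD[(String, Int)] @scala.reflect.internal.annotations.uncheckedBounds = ShuffledRDD[25] at reduceByKey at <console>:24

Besides cache, Spark offers finer-grained caching options, so you can pick a caching strategy that suits the memory available in the cluster. Use the persist method to specify the storage level.

RDD#persist(StorageLevel.MEMORY_ONLY)

The storage levels Spark currently supports are listed below: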

object StorageLevel {
  val NONE = new StorageLevel(false, false, false, false)
  val DISK_ONLY = new StorageLevel(true, false, false, false) // disk only
  val DISK_ONLY_2 = new StorageLevel(true, false, false, false, 2) // disk only, two replicas
  val MEMORY_ONLY = new StorageLevel(false, true, false, true)
  val MEMORY_ONLY_2 = new StorageLevel(false, true, false, true, 2)
  val MEMORY_ONLY_SER = new StorageLevel(false, true, false, false) // serialize first, then keep in memory: costs CPU, saves memory
  val MEMORY_ONLY_SER_2 = new StorageLevel(false, true, false, false, 2)
  val MEMORY_AND_DISK = new StorageLevel(true, true, false, true)
  val MEMORY_AND_DISK_2 = new StorageLevel(true, true, false, true, 2)
  val MEMORY_AND_DISK_SER = new StorageLevel(true, true, false, false)
  val MEMORY_AND_DISK_SER_2 = new StorageLevel(true, true, false, false, 2)
  val OFF_HEAP = new StorageLevel(true, true, true, false, 1)
  ...
}
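The constructor arguments in the listing are, in order, useDisk, useMemory, useOffHeap, deserialized and an optional replication factor that defaults to 1. The small sketch below prints them by name through StorageLevel's public accessors; it assumes spark-core is on the classpath, and `StorageLevelFlagsSketch` and `describe` are hypothetical names.

```scala
import org.apache.spark.storage.StorageLevel

object StorageLevelFlagsSketch {
  // Renders the five settings of a storage level with their names.
  def describe(level: StorageLevel): String =
    s"useDisk=${level.useDisk}, useMemory=${level.useMemory}, useOffHeap=${level.useOffHeap}, " +
      s"deserialized=${level.deserialized}, replication=${level.replication}"
}
```

For example, `StorageLevelFlagsSketch.describe(StorageLevel.MEMORY_AND_DISK_SER_2)` gives `useDisk=true, useMemory=true, useOffHeap=false, deserialized=false, replication=2`, which matches the row `new StorageLevel(true, true, false, false, 2)` above.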

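How do you choose?

By default, MEMORY_ONLY gives the best performance, provided that memory is large enough to hold all of the RDD's data with room to spare. No serialization or deserialization takes place, so that overhead is avoided; later operators on the RDD work purely on in-memory data and never read from disk files; and no replica has to be copied and sent to another node. Be aware, though, that in real production environments the cases where this level can be used directly are limited: if the RDD holds a lot of data (billions of records, say), using this level directly can cause a JVM OOM (out of memory) error.

If MEMORY_ONLY runs out of memory, try MEMORY_ONLY_SER. This level serializes the RDD data before keeping it in memory, so each partition is just one byte array, which greatly reduces the number of objects and lowers memory usage. The extra cost compared with MEMORY_ONLY is mainly serialization and deserialization. Later operators still work purely in memory, so overall performance remains fairly high. The same problem can still occur, however: if the RDD holds too much data, an OOM error is still possible.

Do not spill to disk unless the data is very expensive to compute in memory, or the computation filters out a large amount of data and keeps only the relatively important part in memory. Otherwise, working from disk is slow and performance drops sharply.

Levels with the _2 suffix must replicate all data and send the replica to another node. Data replication and network transfer add considerable overhead, so these levels are not recommended unless the job needs high availability.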

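4、Checkpoint Mechanism

Caching helps an RDD recover from failures, but if the cache is lost the system still has to recompute the RDD. For an RDD with a long lineage, where the computation is time-consuming, you can use the checkpoint mechanism to store the RDD's result. The biggest difference from caching is that the data of a checkpointed RDD is persisted directly to a file system (writing it to HDFS is generally recommended), and checkpoint data is not cleaned up automatically. Note that during the computation checkpoint only marks the RDD; after the job has finished, Spark performs the checkpoint on the marked RDD, which means the marked RDD and its dependencies are computed a second time.

sc.setCheckpointDir("hdfs://CentOS:9000/checkpoints")
val rdd1 = sc.textFile("hdfs://CentOS:9000/demo/words/")
  .map(line => {
    println(line)
  })
// mark the current RDD for checkpointing
rdd1.checkpoint()
rdd1.collect()

Because of this second computation (the println above makes it visible), checkpoint is generally used together with cache, which ensures that the RDD is computed only once.

sc.setCheckpointDir("hdfs://CentOS:9000/checkpoints")
val rdd1 = sc.textFile("hdfs://CentOS:9000/demo/words/")
  .map(line => {
    println(line)
  })
rdd1.persist(StorageLevel.MEMORY_AND_DISK) // cache first
// mark the current RDD for checkpointing
rdd1.checkpoint()
rdd1.collect()
rdd1.unpersist() // remove the cache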

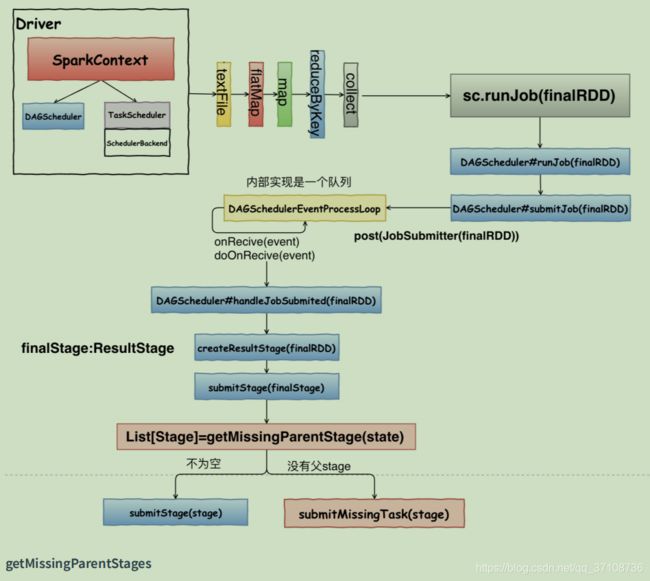

5、Source Code Analysis of Task Computation

①、Theory

sc.textFile("hdfs:///demo/words/t_word") //RDD0
  .flatMap(_.split(" ")) //RDD1
  .map((_,1)) //RDD2
  .reduceByKey(_+_) //RDD3 finalRDD
  .collect //Array; the job is submitted here

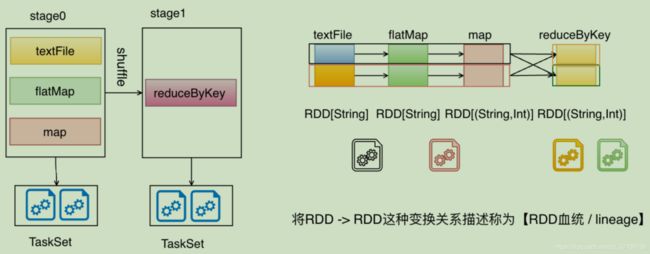

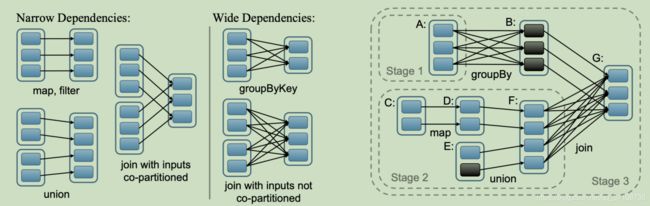

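From the code above it is easy to see that, before running a job, Spark builds a DAG of the job from the transformations between RDDs and divides the job into several stages. Stages are divided according to the dependencies between RDDs. Spark classifies RDD-to-RDD transformations into two kinds: ShuffleDependency (wide dependency) and NarrowDependency (narrow dependency). When RDDs are connected by narrow dependencies, Spark groups their operators into one stage; when it meets a wide dependency, it starts a new stage.

②、Telling Wide and Narrow Dependencies Apart

Wide dependency: one partition of the parent RDD corresponds to several partitions of the child RDD. Any such fan-out counts as a wide dependency. ShuffleDependency

Narrow dependency: one partition of the parent RDD (there may be several parent RDDs) corresponds to only one partition of the child RDD. OneToOneDependency | RangeDependency | PruneDependency

Before a job is submitted, Spark first works backwards from the finalRDD to find every RDD it depends on and the dependencies between them. A narrow dependency is merged into the current stage; a wide dependency starts a new stage.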
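The backward walk described above can be modelled in a few lines of plain Scala. This is a toy model, not Spark's DAGScheduler: `Node`, `Narrow`, `Shuffle` and `StageSplitSketch` are hypothetical names, and the model skips details such as the visited set that the real scheduler keeps for DAGs in which several RDDs share a parent.

```scala
object StageSplitSketch {
  // Toy lineage: a node is an RDD, a Dep is its dependency on a parent node.
  sealed trait Dep { def parent: Node }
  final case class Narrow(parent: Node) extends Dep
  final case class Shuffle(parent: Node) extends Dep
  final case class Node(name: String, deps: List[Dep] = Nil)

  // Walks back from the final RDD. Narrow dependencies stay in the current stage;
  // every shuffle dependency ends the stage, and its parent becomes the root of a parent stage.
  // Parent stages are listed before the stages that depend on them.
  def stages(finalNode: Node): List[List[String]] = {
    var names = List.empty[String]
    var parentRoots = List.empty[Node]
    var stack = List(finalNode) // explicit stack instead of recursion within a stage
    while (stack.nonEmpty) {
      val node = stack.head
      stack = stack.tail
      names = node.name :: names
      node.deps.foreach {
        case Narrow(p)  => stack = p :: stack
        case Shuffle(p) => parentRoots = p :: parentRoots
      }
    }
    parentRoots.flatMap(stages) :+ names
  }

  // The WordCount lineage from the text: RDD0 -> RDD1 -> RDD2 -(shuffle)-> RDD3.
  val rdd0 = Node("textFile")
  val rdd1 = Node("flatMap", List(Narrow(rdd0)))
  val rdd2 = Node("map", List(Narrow(rdd1)))
  val rdd3 = Node("reduceByKey", List(Shuffle(rdd2)))
}
```

`StageSplitSketch.stages(StageSplitSketch.rdd3)` returns `List(List(textFile, flatMap, map), List(reduceByKey))`: the three narrowly connected RDDs form one stage, and the shuffle in front of reduceByKey opens a second one.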

getMissingParentStages

private def getMissingParentStages(stage: Stage): List[Stage] = {
  val missing = new HashSet[Stage]
  val visited = new HashSet[RDD[_]]
  // We are manually maintaining a stack here to prevent StackOverflowError
  // caused by recursively visiting
  val waitingForVisit = new ArrayStack[RDD[_]]
  def visit(rdd: RDD[_]) {
    if (!visited(rdd)) {
      visited += rdd
      val rddHasUncachedPartitions = getCacheLocs(rdd).contains(Nil)
      if (rddHasUncachedPartitions) {
        for (dep <- rdd.dependencies) {
          dep match {
            case shufDep: ShuffleDependency[_, _, _] =>
              val mapStage = getOrCreateShuffleMapStage(shufDep, stage.firstJobId)
              if (!mapStage.isAvailable) {
                missing += mapStage
              }
            case narrowDep: NarrowDependency[_] =>
              waitingForVisit.push(narrowDep.rdd)
          }
        }
      }
    }
  }
  waitingForVisit.push(stage.rdd)
  while (waitingForVisit.nonEmpty) {
    visit(waitingForVisit.pop())
  }
  missing.toList
}

When it meets a wide dependency, the system automatically creates a ShuffleMapStage.

submitMissingTasks

private def submitMissingTasks(stage: Stage, jobId: Int) {
  // partitions that still have to be computed
  val partitionsToCompute: Seq[Int] = stage.findMissingPartitions()
  ...
  // work out the preferred locations
  val taskIdToLocations: Map[Int, Seq[TaskLocation]] = try {
    stage match {
      case s: ShuffleMapStage =>
        partitionsToCompute.map { id => (id, getPreferredLocs(stage.rdd, id))}.toMap
      case s: ResultStage =>
        partitionsToCompute.map { id =>
          val p = s.partitions(id)
          (id, getPreferredLocs(stage.rdd, p))
        }.toMap
    }
  } catch {
    case NonFatal(e) =>
      stage.makeNewStageAttempt(partitionsToCompute.size)
      listenerBus.post(SparkListenerStageSubmitted(stage.latestInfo, properties))
      abortStage(stage, s"Task creation failed: $e\n${Utils.exceptionString(e)}", Some(e))
      runningStages -= stage
      return
  }
  // map each partition to a task; together the tasks form the TaskSet
  val tasks: Seq[Task[_]] = try {
    val serializedTaskMetrics = closureSerializer.serialize(stage.latestInfo.taskMetrics).array()
    stage match {
      case stage: ShuffleMapStage =>
        stage.pendingPartitions.clear()
        partitionsToCompute.map { id =>
          val locs = taskIdToLocations(id)
          val part = partitions(id)
          stage.pendingPartitions += id
          new ShuffleMapTask(stage.id, stage.latestInfo.attemptNumber,
            taskBinary, part, locs, properties, serializedTaskMetrics, Option(jobId),
            Option(sc.applicationId), sc.applicationAttemptId, stage.rdd.isBarrier())
        }
      case stage: ResultStage =>
        partitionsToCompute.map { id =>
          val p: Int = stage.partitions(id)
          val part = partitions(p)
          val locs = taskIdToLocations(id)
          new ResultTask(stage.id, stage.latestInfo.attemptNumber,
            taskBinary, part, locs, id, properties, serializedTaskMetrics,
            Option(jobId), Option(sc.applicationId), sc.applicationAttemptId,
            stage.rdd.isBarrier())
        }
    }
  } catch {
    case NonFatal(e) =>
      abortStage(stage, s"Task creation failed: $e\n${Utils.exceptionString(e)}", Some(e))
      runningStages -= stage
      return
  }
  // submit the TaskSet through taskScheduler#submitTasks
  if (tasks.size > 0) {
    logInfo(s"Submitting ${tasks.size} missing tasks from $stage (${stage.rdd}) (first 15 " +
      s"tasks are for partitions ${tasks.take(15).map(_.partitionId)})")
    taskScheduler.submitTasks(new TaskSet(
      tasks.toArray, stage.id, stage.latestInfo.attemptNumber, jobId, properties))
  }
  ...
}

Summary keywords: backward derivation, finalRDD, ResultStage, ShuffleMapStage, ShuffleMapTask, ResultTask, ShuffleDependency, NarrowDependency, DAGScheduler, TaskScheduler, SchedulerBackend, DAGSchedulerEventProcessLoop