Spring Boot in Practice (9): 9.2 Batch Processing with Spring Batch

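9.2.1 Spring Batch Quick Start

1. What is Spring Batch

Spring Batch is a framework for processing large volumes of data. It is mainly used to read large amounts of data, process it, and write the result out in a specified form.

2. Main components of Spring Batch

Spring Batch consists mainly of the parts listed in the table below.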

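| Name | Purpose |
|---|---|
| JobRepository | Container in which Jobs are registered |
| JobLauncher | Interface used to launch a Job |
| Job | The task we actually want to run; contains one or more Steps |
| Step | A single step; contains an ItemReader, an ItemProcessor, and an ItemWriter |
| ItemReader | Interface for reading data |
| ItemProcessor | Interface for processing data |
| ItemWriter | Interface for writing data out |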

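The Spring Batch components above only need to be registered as Spring Beans. To enable batch processing support, the configuration class must also be annotated with @EnableBatchProcessing.

An illustrative Spring Batch configuration looks like this: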

@Configuration

@EnableBatchProcessing

public class BatchConfig {

@Bean

public JobRepository jobRepository(DataSource dataSource,PlatformTransactionManager transactionManager) throws Exception {

JobRepositoryFactoryBean jobRepositoryFactoryBean = new JobRepositoryFactoryBean();

jobRepositoryFactoryBean.setDataSource(dataSource);

jobRepositoryFactoryBean.setTransactionManager(transactionManager);

jobRepositoryFactoryBean.setDatabaseType("oracle");

return jobRepositoryFactoryBean.getObject();

}

@Bean

public SimpleJobLauncher jobLauncher(DataSource dataSource,PlatformTransactionManager transactionManager)throws Exception {

SimpleJobLauncher jobLauncher = new SimpleJobLauncher();

jobLauncher.setJobRepository(jobRepository(dataSource, transactionManager));

return jobLauncher;

}

@Bean

public Job importJob(JobBuilderFactory jobs,Step s1) {

return jobs.get("importJob")

.incrementer(new RunIdIncrementer())

.flow(s1)

.end()

.build();

}

@Bean

public Step step1(StepBuilderFactory stepBuilderFactory,
        ItemReader<Person> reader,
        ItemWriter<Person> writer,
        ItemProcessor<Person, Person> processor) {
    return stepBuilderFactory
            .get("step1")
            .<Person, Person>chunk(65000)

.reader(reader)

.processor(processor)

.writer(writer)

.build();

}

@Bean

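public ItemReader<Person> reader() throws Exception {
    // Placeholder: create and return an implementation of the ItemReader interface here
    return reader;
}

@Bean
public ItemProcessor<Person, Person> processor() {
    // Placeholder: create and return an implementation of the ItemProcessor interface here
    return processor;
}

@Bean
public ItemWriter<Person> writer(DataSource dataSource) {
    // Placeholder: create and return an implementation of the ItemWriter interface here
    return writer;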

}

}
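To make the role of the chunk size more concrete, here is a minimal plain-Java sketch of what a chunk-oriented step does conceptually. It is a simplified model and not the Spring Batch API: the class `ChunkStepSketch` and the method `runStep` are hypothetical names, and an `Iterator`, a `Function`, and a `Consumer` stand in for the ItemReader, ItemProcessor, and ItemWriter.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;

// Simplified model of a chunk-oriented step; NOT the Spring Batch API.
final class ChunkStepSketch {

    // Reads items one at a time, processes each one, and hands the results
    // to the writer once per chunk. Returns the number of chunks written.
    static <I, O> int runStep(Iterator<I> reader,
                              Function<I, O> processor,
                              Consumer<List<O>> writer,
                              int chunkSize) {
        int chunks = 0;
        List<O> buffer = new ArrayList<>();
        while (reader.hasNext()) {
            buffer.add(processor.apply(reader.next()));
            if (buffer.size() == chunkSize) {
                writer.accept(new ArrayList<>(buffer)); // one write per full chunk
                buffer.clear();
                chunks++;
            }
        }
        if (!buffer.isEmpty()) {                        // last, partially filled chunk
            writer.accept(new ArrayList<>(buffer));
            chunks++;
        }
        return chunks;
    }

    public static void main(String[] args) {
        List<List<Integer>> written = new ArrayList<>();
        Function<Integer, Integer> doubler = n -> n * 2;
        Consumer<List<Integer>> sink = written::add;
        int chunks = runStep(List.of(1, 2, 3, 4, 5).iterator(), doubler, sink, 2);
        System.out.println(chunks + " chunks: " + written);
        // prints: 3 chunks: [[2, 4], [6, 8], [10]]
    }
}
```

In the real framework each chunk is also written inside its own transaction, which is why the chunk size is often described as the commit interval.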

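3. Job listeners

To monitor the execution of a Job, define a class that implements JobExecutionListener and bind that listener on the Bean that defines the Job.

The listener is defined as follows: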

public class MyJobListener implements JobExecutionListener {

@Override

public void beforeJob(JobExecution jobExecution) {

// Before the Job starts

}

@Override

public void afterJob(JobExecution jobExecution) {

// After the Job completes

}

}

Register the listener and bind it to the Job:

@Bean

public Job importJob(JobBuilderFactory jobs,Step s1) {

return jobs.get("importJob")

.incrementer(new RunIdIncrementer())

.flow(s1)

.end()

.listener(myJobListener())

.build();

}

@Bean

public MyJobListener myJobListener() {

return new MyJobListener();

}
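One detail worth knowing is that afterJob is invoked whether the Job succeeded or failed, so a real listener usually inspects the execution status before reporting. The plain-Java sketch below models that callback order only. It is not the Spring Batch API: `JobListenerSketch`, its `Listener` interface, and `launch` are hypothetical stand-ins.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of job listener callbacks; NOT the Spring Batch API.
final class JobListenerSketch {

    // Hypothetical stand-in for JobExecutionListener.
    interface Listener {
        void beforeJob(List<String> log);
        void afterJob(List<String> log, boolean failed);
    }

    // Runs the job body between the two callbacks and reports the outcome to afterJob.
    static List<String> launch(Runnable jobBody, Listener listener) {
        List<String> log = new ArrayList<>();
        listener.beforeJob(log);
        boolean failed = false;
        try {
            jobBody.run();
            log.add("job body finished");
        } catch (RuntimeException e) {
            failed = true;
            log.add("job body failed: " + e.getMessage());
        }
        listener.afterJob(log, failed); // invoked for both outcomes
        return log;
    }

    static final Listener LOGGING = new Listener() {
        @Override public void beforeJob(List<String> log) {
            log.add("beforeJob");
        }
        @Override public void afterJob(List<String> log, boolean failed) {
            log.add(failed ? "afterJob: FAILED" : "afterJob: COMPLETED");
        }
    };

    public static void main(String[] args) {
        System.out.println(launch(() -> { }, LOGGING));
        System.out.println(launch(() -> { throw new IllegalStateException("boom"); }, LOGGING));
    }
}
```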

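4. Reading data

Spring Batch provides a large number of ItemReader implementations for reading from different data sources, as shown in the figure.

5. Processing and validating data

Both processing and validation are done by implementing the ItemProcessor interface.

(1) Processing

To process data, implement the ItemProcessor interface and override its process method. The method's input parameter is the item read by the ItemReader, and its return value is passed on to the ItemWriter.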

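public class MyItemProcessor implements ItemProcessor<Person, Person> {
    @Override
    public Person process(Person person) {
        // Upper-case the name read by the ItemReader before it reaches the ItemWriter
        String name = person.getName().toUpperCase();
        person.setName(name);
        return person;
    }
}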
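A processor can also drop items: in Spring Batch, returning null from process means the item is filtered out and never reaches the ItemWriter. The following plain-Java sketch models that contract under simplifying assumptions. `FilteringProcessorSketch`, `UPPERCASE_OR_SKIP`, and `processAll` are hypothetical names, and a `Function` stands in for the ItemProcessor.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.function.Function;

// Simplified model of item processing with filtering; NOT the Spring Batch API.
final class FilteringProcessorSketch {

    // Upper-cases a name; returns null for blank input to signal "skip this item".
    static final Function<String, String> UPPERCASE_OR_SKIP =
            name -> (name == null || name.trim().isEmpty())
                    ? null
                    : name.toUpperCase(Locale.ROOT);

    // Applies the processor to every item and keeps only the non-null results.
    static <I, O> List<O> processAll(List<I> items, Function<I, O> processor) {
        List<O> output = new ArrayList<>();
        for (I item : items) {
            O result = processor.apply(item);
            if (result != null) {
                output.add(result);
            }
        }
        return output;
    }

    public static void main(String[] args) {
        System.out.println(processAll(List.of("alice", " ", "bob"), UPPERCASE_OR_SKIP));
        // prints: [ALICE, BOB]
    }
}
```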

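(2) Validation

We can use JSR-303 annotations (the main implementation is hibernate-validator) to check whether the data read by the ItemReader meets our requirements.

To do so, our ItemProcessor extends the ValidatingItemProcessor class: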

public class MyItemProcessor extends ValidatingItemProcessor<Person> {

public Person process(Person item) throws ValidationException {

super.process(item);

return item;

}

}

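Next we define our validator. The Validator interface it implements comes from Spring Batch, and internally we use the JSR-303 Validator to do the actual validation: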

public class MyBeanValidator<T> implements Validator<T>, InitializingBean {

private javax.validation.Validator validator;

@Override

public void afterPropertiesSet() throws Exception {

ValidatorFactory validatorFactory = Validation.buildDefaultValidatorFactory();

validator = validatorFactory.usingContext().getValidator();

}

@Override

public void validate(T value) throws ValidationException {

Set<ConstraintViolation<T>> constraintViolations = validator.validate(value);
if (constraintViolations.size() > 0) {
    StringBuilder message = new StringBuilder();
    for (ConstraintViolation<T> constraintViolation : constraintViolations) {

message.append(constraintViolation.getMessage()+"\n");

}

throw new ValidationException(message.toString());

}

}

}

When defining MyItemProcessor, the MyBeanValidator must be set on it, as follows:

@Bean
public ItemProcessor<Person, Person> processor() {
    MyItemProcessor processor = new MyItemProcessor();
    processor.setValidator(myBeanValidator());
    return processor;
}

@Bean
public Validator<Person> myBeanValidator() {
    return new MyBeanValidator();
}

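6. Writing data

Spring Batch provides a large number of ItemWriter implementations for writing data to different destinations, as shown in the figure.

8. Late binding of parameters

In the ItemReader and ItemWriter Bean definitions so far, the parameters are hard-coded into the Bean initialization, as in this code: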

@Bean
public ItemReader<Person> reader() throws Exception {
    FlatFileItemReader<Person> reader = new FlatFileItemReader<Person>();
    reader.setResource(new ClassPathResource("people.csv")); // file location is fixed here
    return reader;
}

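Here the location of the file to read is hard-coded in the Bean definition, which often does not match real requirements. In that case we need late binding of parameters.

To implement late binding, we put the parameter into the JobParameters, annotate the Bean definition with the special lifecycle annotation @StepScope, and inject the parameter through @Value.

Setting the parameters: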

String path = "people.csv";

JobParameters jobParameters = new JobParametersBuilder()

.addLong("time", System.currentTimeMillis())

.addString("input.file.name", path)

.toJobParameters();

jobLauncher.run(importJob,jobParameters);

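Defining the Bean:

@Bean
@StepScope
// Declare the concrete type so that the step-scoped proxy also exposes ItemStream (open/close)
public FlatFileItemReader<Person> reader(@Value("#{jobParameters['input.file.name']}") String pathToFile) throws Exception {
    FlatFileItemReader<Person> reader = new FlatFileItemReader<Person>();
    reader.setResource(new ClassPathResource(pathToFile)); // resolved when the step starts
    return reader;
}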
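The difference between the hard-coded and the late-bound definition can be illustrated without Spring at all. The sketch below is a plain-Java model, not the Spring Batch API: `LateBindingSketch` and its fields are hypothetical, a `Map` stands in for the JobParameters, and `staff.csv` is an invented second file name used only for illustration.

```java
import java.util.Map;
import java.util.function.Function;

// Simplified model of late binding; NOT the Spring Batch API.
final class LateBindingSketch {

    // Eager definition: the resource name is fixed when the definition is created.
    static final String EAGER_RESOURCE = "people.csv";

    // Late-bound definition: the resource name is looked up in the job parameters
    // only when a step actually starts, similar in spirit to a @StepScope bean.
    static final Function<Map<String, String>, String> LATE_RESOURCE =
            jobParameters -> {
                String name = jobParameters.get("input.file.name");
                if (name == null) {
                    throw new IllegalArgumentException("missing job parameter input.file.name");
                }
                return name;
            };

    public static void main(String[] args) {
        // The same definition yields a different resource for each launch.
        System.out.println(LATE_RESOURCE.apply(Map.of("input.file.name", "people.csv")));
        System.out.println(LATE_RESOURCE.apply(Map.of("input.file.name", "staff.csv")));
    }
}
```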

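9.2.2 Spring Boot support

The source code for Spring Boot's Spring Batch support is located under org.springframework.boot.autoconfigure.batch.

Spring Boot automatically initializes the database in which Spring Batch stores its batch records, and when the application starts it automatically runs the Job Beans we have defined.

Spring Boot provides the following properties for customizing Spring Batch: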

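# Jobs to run at startup; by default all Jobs are run
spring.batch.job.names=job1,job2
# Whether to run the defined Jobs automatically at startup; defaults to true
spring.batch.job.enabled=true
# Whether to initialize the Spring Batch database; defaults to true
spring.batch.initializer.enabled=true
# SQL script used to initialize the Spring Batch schema
spring.batch.schema=
# Prefix for the Spring Batch database tables
spring.batch.table-prefix=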

9.2.3 Hands-on example

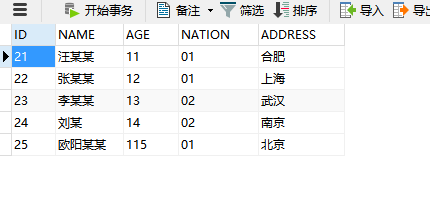

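This example uses Spring Batch to insert the data from a CSV file into a database using JDBC batching.

1. Create a Spring Boot project

Create a new Spring Boot project with the dependencies JDBC (spring-boot-starter-jdbc), Batch (spring-boot-starter-batch), and Web (spring-boot-starter-web).

Project information:

groupId: com.wisely

artifactId: ch9_2

package: com.wisely.ch9_2

This project uses the Oracle driver. spring-boot-starter-batch pulls in the hsqldb driver automatically, so we exclude it: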

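<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-batch</artifactId>
    <exclusions>
        <exclusion>
            <groupId>org.hsqldb</groupId>
            <artifactId>hsqldb</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<dependency>
    <groupId>com.oracle</groupId>
    <artifactId>ojdbc6</artifactId>
    <version>11.2.0.2.0</version>
</dependency>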

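Add the hibernate-validator dependency, which is used for data validation:

<dependency>
    <groupId>org.hibernate</groupId>
    <artifactId>hibernate-validator</artifactId>
</dependency>

The test CSV data lives in src/main/resources/people.csv, with the following content: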

汪某某,11,汉族,合肥

张某某,12,汉族,上海

李某某,13,非汉族,武汉

刘某,14,非汉族,南京

欧阳某某,115,汉族,北京

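The table definition lives in src/main/resources/schema.sql (the file referenced by spring.datasource.schema in the data source configuration), with the following content: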

create table PERSON

(

id NUMBER not null primary key,

name VARCHAR2(20),

age NUMBER,

nation VARCHAR2(20),

address VARCHAR2(20)

);

Data source configuration:

spring.datasource.driverClassName=oracle.jdbc.OracleDriver

spring.datasource.url=jdbc\:oracle\:thin\:@192.168.99.100\:1521\:xe

spring.datasource.username=boot

spring.datasource.password=boot

spring.datasource.schema-username: boot

spring.datasource.schema-password: boot

spring.datasource.schema: classpath:schema.sql

spring.datasource.initialization-mode: always

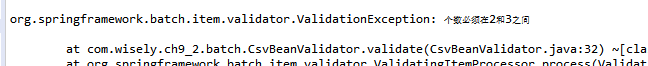

2. Domain model class

package com.wisely.ch9_2.domin;

import javax.validation.constraints.Size;

public class Person {

@Size(max=4,min=2) // Validate the data with a JSR-303 annotation

private String name;

private int age;

private String nation;

private String address;

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public int getAge() {

return age;

}

public void setAge(int age) {

this.age = age;

}

public String getNation() {

return nation;

}

public void setNation(String nation) {

this.nation = nation;

}

public String getAddress() {

return address;

}

public void setAddress(String address) {

this.address = address;

}

}

3. Processing and validating data

(1) Processing:

package com.wisely.ch9_2.batch;

import org.springframework.batch.item.validator.ValidatingItemProcessor;

import org.springframework.batch.item.validator.ValidationException;

import com.wisely.ch9_2.domin.Person;

public class CsvItemProcessor extends ValidatingItemProcessor<Person> {
    @Override
    public Person process(Person item) throws ValidationException {
        super.process(item); // super.process(item) must be called, otherwise the custom validator is never invoked
        if (item.getNation().equals("汉族")) { // Simple transformation: "汉族" (Han) becomes 01, everything else becomes 02

item.setNation("01");

}else {

item.setNation("02");

}

return item;

}

}

(2) Validation:

package com.wisely.ch9_2.batch;

import java.util.Set;

import javax.validation.ConstraintViolation;

import javax.validation.Validation;

import javax.validation.ValidatorFactory;

import org.springframework.batch.item.validator.ValidationException;

import org.springframework.batch.item.validator.Validator;

import org.springframework.beans.factory.InitializingBean;

public class CsvBeanValidator<T> implements Validator<T>, InitializingBean {

private javax.validation.Validator validator;

@Override

public void afterPropertiesSet() throws Exception { // Initialize the JSR-303 Validator that is used to validate our data

ValidatorFactory validatorFactory = Validation.buildDefaultValidatorFactory();

validator = validatorFactory.usingContext().getValidator();

}

@Override

public void validate(T value) throws ValidationException {

Set<ConstraintViolation<T>> constraintViolations = validator.validate(value); // Validate the item with the JSR-303 Validator
if (constraintViolations.size() > 0) {
    StringBuilder message = new StringBuilder();
    for (ConstraintViolation<T> constraintViolation : constraintViolations) {

message.append(constraintViolation.getMessage()+"\n");

}

throw new ValidationException(message.toString());

}

}

}

4. Job listener

package com.wisely.ch9_2.batch;

import org.springframework.batch.core.JobExecution;

import org.springframework.batch.core.JobExecutionListener;

public class CsvJobListener implements JobExecutionListener {

long startTime;

long endTime;

@Override

public void beforeJob(JobExecution jobExecution) {

startTime = System.currentTimeMillis();

System.out.println("Job started");

}

@Override

public void afterJob(JobExecution jobExecution) {

endTime = System.currentTimeMillis();

System.out.println("Job finished");
System.out.println("Elapsed: " + (endTime - startTime) + "ms");

}

}

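Code explanation

The listener simply implements the JobExecutionListener interface and overrides its beforeJob and afterJob methods.

5. Configuration

The complete configuration code is as follows: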

package com.wisely.ch9_2.batch;

import javax.sql.DataSource;

import org.springframework.batch.core.Job;

import org.springframework.batch.core.Step;

import org.springframework.batch.core.configuration.annotation.EnableBatchProcessing;

import org.springframework.batch.core.configuration.annotation.JobBuilderFactory;

import org.springframework.batch.core.configuration.annotation.StepBuilderFactory;

import org.springframework.batch.core.launch.support.RunIdIncrementer;

import org.springframework.batch.core.launch.support.SimpleJobLauncher;

import org.springframework.batch.core.repository.JobRepository;

import org.springframework.batch.core.repository.support.JobRepositoryFactoryBean;

import org.springframework.batch.item.ItemProcessor;

import org.springframework.batch.item.ItemReader;

import org.springframework.batch.item.ItemWriter;

import org.springframework.batch.item.database.BeanPropertyItemSqlParameterSourceProvider;

import org.springframework.batch.item.database.JdbcBatchItemWriter;

import org.springframework.batch.item.file.FlatFileItemReader;

import org.springframework.batch.item.file.mapping.BeanWrapperFieldSetMapper;

import org.springframework.batch.item.file.mapping.DefaultLineMapper;

import org.springframework.batch.item.file.transform.DelimitedLineTokenizer;

import org.springframework.batch.item.validator.Validator;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.core.io.ClassPathResource;

import org.springframework.transaction.PlatformTransactionManager;

import com.wisely.ch9_2.domin.Person;

@Configuration

@EnableBatchProcessing

public class CsvBatchConfig {

@Bean

public ItemReader<Person> reader() throws Exception {
    FlatFileItemReader<Person> reader = new FlatFileItemReader<Person>();
    reader.setResource(new ClassPathResource("people.csv"));
    reader.setLineMapper(new DefaultLineMapper<Person>() {{
        setLineTokenizer(new DelimitedLineTokenizer() {{
            setNames(new String[] {"name", "age", "nation", "address"});
        }});
        setFieldSetMapper(new BeanWrapperFieldSetMapper<Person>() {{
            setTargetType(Person.class);
        }});
    }});

return reader;

}

@Bean

public ItemProcessor<Person, Person> processor(){

CsvItemProcessor processor = new CsvItemProcessor();

processor.setValidator(csvBeanValidator());

return processor;

}

@Bean

public ItemWriter<Person> writer(DataSource dataSource){
JdbcBatchItemWriter<Person> writer = new JdbcBatchItemWriter<Person>();
writer.setItemSqlParameterSourceProvider(new BeanPropertyItemSqlParameterSourceProvider<Person>());

String sql = "insert into person " + "(id,name,age,nation,address) "

+

"values(hibernate_sequence.nextval, :name, :age, :nation, :address)";

writer.setSql(sql);

writer.setDataSource(dataSource);

return writer;

}

@Bean

public JobRepository JobRepository(DataSource dataSource,PlatformTransactionManager transactionManager) throws Exception{

JobRepositoryFactoryBean jobRepositoryFactoryBean = new JobRepositoryFactoryBean();

jobRepositoryFactoryBean.setDataSource(dataSource);

jobRepositoryFactoryBean.setTransactionManager(transactionManager);

jobRepositoryFactoryBean.setDatabaseType("oracle");

return jobRepositoryFactoryBean.getObject();

}

@Bean

public SimpleJobLauncher jobLauncher(DataSource dataSource,PlatformTransactionManager transactionManager)throws Exception{

SimpleJobLauncher jobLauncher = new SimpleJobLauncher();

jobLauncher.setJobRepository(JobRepository(dataSource, transactionManager));

return jobLauncher;

}

@Bean

public Job importJob(JobBuilderFactory jobs,Step s1) {

return jobs.get("importJob")

.incrementer(new RunIdIncrementer())

.flow(s1)

.end()

.listener(CsvJobListener())

.build();

}

@Bean

public Step step1(StepBuilderFactory stepBuilderFactory, ItemReader<Person> reader, ItemWriter<Person> writer, ItemProcessor<Person, Person> processor) {
    return stepBuilderFactory
            .get("step1")
            .<Person, Person>chunk(65000)

.reader(reader)

.processor(processor)

.writer(writer)

.build();

}

@Bean

public CsvJobListener CsvJobListener(){

return new CsvJobListener();

}

@Bean

public Validator csvBeanValidator() {

return new CsvBeanValidator();

}

}

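The configuration code is fairly long, so we go through it piece by piece. First of all, the configuration class must be annotated with @EnableBatchProcessing to enable batch processing support; do not forget this.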

ItemReader definition:

@Bean
public ItemReader<Person> reader() throws Exception {
    FlatFileItemReader<Person> reader = new FlatFileItemReader<Person>(); // Read the file with FlatFileItemReader
    reader.setResource(new ClassPathResource("people.csv")); // setResource sets the path of the CSV file
    reader.setLineMapper(new DefaultLineMapper<Person>() {{ // Map the CSV columns to the domain model class
        setLineTokenizer(new DelimitedLineTokenizer() {{
            setNames(new String[] {"name", "age", "nation", "address"});
        }});
        setFieldSetMapper(new BeanWrapperFieldSetMapper<Person>() {{
            setTargetType(Person.class);
        }});
    }});
    return reader;
}
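The line mapper does its work in two stages: a tokenizer splits each line into named fields, and a field-set mapper copies those fields into a typed object. The plain-Java sketch below models the two stages under simplifying assumptions. It is not the Spring Batch API: `LineMappingSketch`, `tokenize`, `map`, and the nested `Person` class are hypothetical, and quoting or escaping of commas is ignored.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Simplified model of tokenizing and mapping one CSV line; NOT the Spring Batch API.
final class LineMappingSketch {

    static final String[] NAMES = {"name", "age", "nation", "address"};

    // Minimal stand-in for the domain model class.
    static final class Person {
        String name;
        int age;
        String nation;
        String address;
    }

    // Stage 1 (tokenizer): split on commas and label each token with its column name.
    static Map<String, String> tokenize(String line) {
        String[] tokens = line.split(",", -1);
        if (tokens.length != NAMES.length) {
            throw new IllegalArgumentException(
                    "expected " + NAMES.length + " fields but found " + tokens.length);
        }
        Map<String, String> fields = new LinkedHashMap<>();
        for (int i = 0; i < NAMES.length; i++) {
            fields.put(NAMES[i], tokens[i].trim());
        }
        return fields;
    }

    // Stage 2 (field-set mapper): copy the named fields into a typed object.
    static Person map(Map<String, String> fields) {
        Person person = new Person();
        person.name = fields.get("name");
        person.age = Integer.parseInt(fields.get("age"));
        person.nation = fields.get("nation");
        person.address = fields.get("address");
        return person;
    }

    public static void main(String[] args) {
        Person p = map(tokenize("汪某某,11,汉族,合肥"));
        System.out.println(p.name + " / " + p.age + " / " + p.nation + " / " + p.address);
    }
}
```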

ItemProcessor definition:

@Bean
public ItemProcessor<Person, Person> processor() {
    CsvItemProcessor processor = new CsvItemProcessor(); // Use our own ItemProcessor implementation, CsvItemProcessor
    processor.setValidator(csvBeanValidator()); // Set CsvBeanValidator as the processor's validator
    return processor;
}

@Bean
public Validator<Person> csvBeanValidator() {
    return new CsvBeanValidator();
}

ItemWriter definition:

@Bean
public ItemWriter<Person> writer(DataSource dataSource) { // Spring injects existing Beans as method parameters; Spring Boot has already defined dataSource
    JdbcBatchItemWriter<Person> writer = new JdbcBatchItemWriter<Person>(); // JdbcBatchItemWriter writes to the database using JDBC batching
    writer.setItemSqlParameterSourceProvider(new BeanPropertyItemSqlParameterSourceProvider<Person>());
    String sql = "insert into person " + "(id,name,age,nation,address) "
            + "values(hibernate_sequence.nextval, :name, :age, :nation, :address)";
    writer.setSql(sql); // The SQL statement executed for each batch
    writer.setDataSource(dataSource);
    return writer;
}
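The `:name`-style placeholders in this SQL are named parameters; BeanPropertyItemSqlParameterSourceProvider supplies their values from the Person properties of the same name. As a rough illustration of the idea only, the plain-Java sketch below extracts the parameter names and rewrites the statement to positional `?` placeholders. It is not Spring's actual parser: `NamedParameterSketch` and its methods are hypothetical, and the regular expression ignores string literals and other edge cases.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified model of named-parameter SQL; NOT Spring's actual parser.
final class NamedParameterSketch {

    private static final Pattern NAMED = Pattern.compile(":(\\w+)");

    // Collects the parameter names in the order they appear in the SQL.
    static List<String> parameterNames(String sql) {
        List<String> names = new ArrayList<>();
        Matcher matcher = NAMED.matcher(sql);
        while (matcher.find()) {
            names.add(matcher.group(1));
        }
        return names;
    }

    // Rewrites every ":name" into a positional "?" placeholder.
    static String toPositional(String sql) {
        return NAMED.matcher(sql).replaceAll("?");
    }

    public static void main(String[] args) {
        String sql = "insert into person (id,name,age,nation,address) "
                + "values(hibernate_sequence.nextval, :name, :age, :nation, :address)";
        System.out.println(parameterNames(sql)); // prints: [name, age, nation, address]
        System.out.println(toPositional(sql));
    }
}
```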

JobRepository definition:

@Bean

public JobRepository JobRepository(DataSource dataSource,PlatformTransactionManager transactionManager) throws Exception{

JobRepositoryFactoryBean jobRepositoryFactoryBean = new JobRepositoryFactoryBean();

jobRepositoryFactoryBean.setDataSource(dataSource);

jobRepositoryFactoryBean.setTransactionManager(transactionManager);

jobRepositoryFactoryBean.setDatabaseType("oracle");

return jobRepositoryFactoryBean.getObject();

}

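Code explanation

Defining the jobRepository requires a dataSource and a transactionManager. Spring Boot has already auto-configured both for us, and Spring can inject existing Beans as method parameters.

JobLauncher definition: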

@Bean

public SimpleJobLauncher jobLauncher(DataSource dataSource,PlatformTransactionManager transactionManager)throws Exception{

SimpleJobLauncher jobLauncher = new SimpleJobLauncher();

jobLauncher.setJobRepository(JobRepository(dataSource, transactionManager));

return jobLauncher;

}

Job definition:

@Bean

public Job importJob(JobBuilderFactory jobs,Step s1) {

return jobs.get("importJob")

.incrementer(new RunIdIncrementer())

.flow(s1) // Assign the Step to the Job

.end()

.listener(CsvJobListener()) // Bind the CsvJobListener listener

.build();

}

@Bean

public CsvJobListener CsvJobListener(){

return new CsvJobListener();

}

Step definition:

@Bean
public Step step1(StepBuilderFactory stepBuilderFactory, ItemReader<Person> reader, ItemWriter<Person> writer, ItemProcessor<Person, Person> processor) {
    return stepBuilderFactory
            .get("step1")
            .<Person, Person>chunk(65000) // Commit once per chunk of 65000 items
            .reader(reader) // Bind the reader to the step
            .processor(processor) // Bind the processor to the step
            .writer(writer) // Bind the writer to the step
            .build();
}

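6. Running

Start the application. Spring Boot automatically initializes the Spring Batch database and imports the data from the CSV file into the database.

The Spring Batch tables initialized for us are shown in the figure.

Note that if these tables are not created automatically when your project starts, you can create them manually. The DDL scripts ship inside the spring-batch-core JAR under org/springframework/batch/core, as schema-*.sql files with one script per database type.

At this point the data has been imported and transformed, as shown in the figure.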

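Now change the @Size(max=4,min=2) defined on the Person class to @Size(max=3,min=2) and restart the application. The console prints the validation error, as shown in the figure.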
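To see which row trips the tighter constraint, the following plain-Java sketch applies a hand-rolled length check to the names from people.csv. It is a stand-in for the @Size check and does not use the Bean Validation API. `NameSizeCheckSketch` and `rejected` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Hand-rolled stand-in for the @Size check; NOT the Bean Validation API.
final class NameSizeCheckSketch {

    // Returns the names whose length falls outside the inclusive range [min, max].
    static List<String> rejected(List<String> names, int min, int max) {
        List<String> bad = new ArrayList<>();
        for (String name : names) {
            int length = name.length();
            if (length < min || length > max) {
                bad.add(name);
            }
        }
        return bad;
    }

    public static void main(String[] args) {
        List<String> names = List.of("汪某某", "张某某", "李某某", "刘某", "欧阳某某");
        // With max=4 every name passes; with max=3 only the four-character name is rejected.
        System.out.println("max=4 rejects " + rejected(names, 2, 4));
        System.out.println("max=3 rejects " + rejected(names, 2, 3));
    }
}
```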

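7. Triggering the job manually

Batch jobs are often triggered by a person. Here we add a controller so that the batch job can be triggered manually, and use it to demonstrate late binding of parameters.

Comment out the @Configuration annotation on the CsvBatchConfig class so that this configuration class no longer takes effect. Create a new configuration class, TriggerBatchConfig, whose content is identical to CsvBatchConfig except for the ItemReader Bean, which is redefined as follows: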

@Bean
@StepScope
public FlatFileItemReader<Person> reader(@Value("#{jobParameters['input.file.name']}") String pathToFile) throws Exception {
    FlatFileItemReader<Person> reader = new FlatFileItemReader<Person>(); // Read the file with FlatFileItemReader
    reader.setResource(new ClassPathResource(pathToFile)); // setResource sets the path of the CSV file
    reader.setLineMapper(new DefaultLineMapper<Person>() {{ // Map the CSV columns to the domain model class
        setLineTokenizer(new DelimitedLineTokenizer() {{
            setNames(new String[] {"name", "age", "nation", "address"});
        }});
        setFieldSetMapper(new BeanWrapperFieldSetMapper<Person>() {{
            setTargetType(Person.class);
        }});
    }});
    return reader;
}

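Note that the declared type of the Bean is changed to FlatFileItemReader instead of ItemReader. The ItemReader interface declares only read(); open() comes from ItemStream, which FlatFileItemReader also implements. With @StepScope the step receives a proxy of the declared return type, so if that type is ItemReader the step cannot treat it as an ItemStream and never opens it, and reading fails with the error “Reader must be open before it can be read”.

The controller is defined as follows: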

package com.wisely.ch9_2.web;

import org.springframework.batch.core.Job;

import org.springframework.batch.core.JobParameters;

import org.springframework.batch.core.JobParametersBuilder;

import org.springframework.batch.core.launch.JobLauncher;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

public class DemoController {

@Autowired

JobLauncher jobLauncher;

@Autowired

Job importJob;

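@RequestMapping("/imp")
public String imp(String fileName) throws Exception {
    String path = fileName + ".csv";
    // Build fresh parameters for every request; "time" makes each launch a new JobInstance
    JobParameters jobParameters = new JobParametersBuilder()
            .addLong("time", System.currentTimeMillis())
            .addString("input.file.name", path)
            .toJobParameters();
    // Launch the Job with the parameters that the @StepScope reader binds to
    jobLauncher.run(importJob, jobParameters);
    return "ok";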

}

}

We also need to turn off Spring Boot's automatic execution of Jobs at startup. Add the following to application.properties:

spring.batch.job.enabled=false

Now visit http://localhost:8080/imp?fileName=people and the same data import result is produced, as shown in the figure.
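The controller adds a "time" parameter because Spring Batch identifies a JobInstance by the job name plus its identifying parameters, and it refuses to run an instance again once it has completed. The plain-Java sketch below models that rule under simplifying assumptions. It is not the Spring Batch API: `JobInstanceIdentitySketch`, `key`, and `launch` are hypothetical, and a `Set` stands in for the JobRepository.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Simplified model of job-instance identity; NOT the Spring Batch API.
final class JobInstanceIdentitySketch {

    // Keys of the job instances that have already completed.
    private final Set<String> completed = new HashSet<>();

    // Builds a key from the job name and its parameters (sorted for a stable order).
    static String key(String jobName, Map<String, String> params) {
        return jobName + new TreeMap<>(params);
    }

    // Returns true if the launch is accepted, false if this instance already completed.
    boolean launch(String jobName, Map<String, String> params) {
        return completed.add(key(jobName, params));
    }

    public static void main(String[] args) {
        JobInstanceIdentitySketch repository = new JobInstanceIdentitySketch();
        Map<String, String> fileOnly = Map.of("input.file.name", "people.csv");
        System.out.println(repository.launch("importJob", fileOnly)); // accepted
        System.out.println(repository.launch("importJob", fileOnly)); // refused: same instance
        Map<String, String> withTime = Map.of(
                "input.file.name", "people.csv",
                "time", String.valueOf(System.currentTimeMillis()));
        System.out.println(repository.launch("importJob", withTime)); // accepted: new instance
    }
}
```

Without a changing parameter such as "time", a second request for the same file would be rejected in the real framework with a JobInstanceAlreadyCompleteException.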