Linux Ops Notes (13): Live Migration of KVM Virtual Machines with NFS+DRBD+Keepalived

NFS+DRBD+Keepalived+KVM live migration demo

Lab environment:

| Hostname | IP | Installed services |
|---|---|---|
| KVM01 | 192.168.1.112 | KVM |
| KVM02 | 192.168.1.113 | KVM |
| NFS | 192.168.1.111 | nfs+drbd+keepalived |
| NFS2 | 192.168.1.114 | nfs+drbd+keepalived |

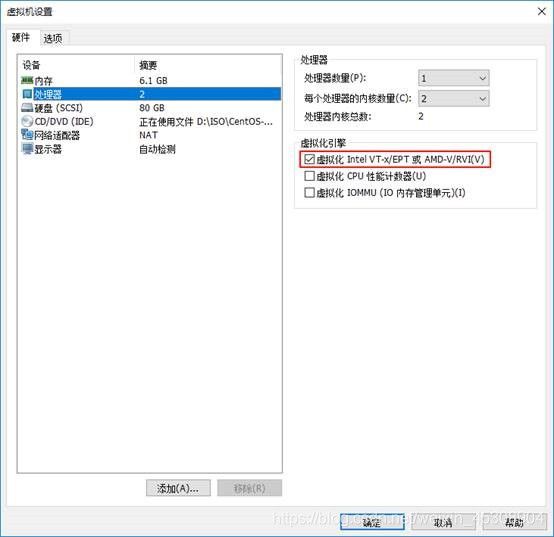

Before powering on the Linux VM in VMware, tick the "Intel VT-x/EPT or AMD-V/RVI" virtualization option in the VM's processor settings.
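Whether the option took effect can be checked from inside the guest: the CPU flags should include vmx (Intel VT-x) or svm (AMD-V). The following is a minimal sketch; the function name `virt_supported` is made up for illustration.

```shell
#!/bin/bash
# Succeed if the CPU flags contain vmx (Intel VT-x) or svm (AMD-V).
# The file argument defaults to /proc/cpuinfo; it is a parameter only so
# that the check can be tried against a saved copy.
virt_supported() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    grep -E -q -w 'vmx|svm' "$cpuinfo"
}

if virt_supported; then
    echo "hardware virtualization is exposed to this system"
else
    echo "no vmx/svm flag found: enable VT-x/AMD-V for the VM first"
fi
```

If the second message appears, the kvm_intel or kvm_amd module will not be usable even after the packages are installed.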

The CentOS 7 installation ISO already ships the packages KVM needs. Set up a local yum repository from the ISO and install them directly with yum.

[root@localhost ~] yum -y groupinstall "GNOME Desktop" # install the GNOME desktop environment

[root@localhost ~] yum -y install qemu-kvm # KVM module

[root@localhost ~] yum -y install qemu-kvm-tools # KVM debugging tools, optional

[root@localhost ~] yum -y install qemu-img # QEMU component for creating disk images and starting VMs

[root@localhost ~] yum -y install bridge-utils # network bridging tools

[root@localhost ~] yum -y install libvirt # VM management toolkit

[root@localhost ~] yum -y install virt-manager # graphical VM manager

[root@localhost ~] yum -y install qemu-kvm qemu-kvm-tools virt-install qemu-img bridge-utils libvirt virt-manager # or install everything in one command

Check that the KVM modules are loaded:

[root@localhost ~] lsmod | grep kvm

kvm_intel 174841 0

kvm 578518 1 kvm_intel

irqbypass 13503 1 kvm

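Note: after installing the desktop with yum, change the system's default target to graphical.target so that it boots into the graphical interface after a reboot. If the default target is left unchanged, the reboot may run into errors. (`init 5` switches to the graphical target for the current session.)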
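On CentOS 7 the old runlevels map onto systemd targets, and `systemctl set-default` makes the choice persistent. The sketch below only prints the command instead of running it; the helper name `runlevel_to_target` is hypothetical.

```shell
#!/bin/bash
# Map a SysV runlevel number to the systemd target that replaces it.
runlevel_to_target() {
    case "$1" in
        0) echo poweroff.target ;;
        1) echo rescue.target ;;
        2|3|4) echo multi-user.target ;;
        5) echo graphical.target ;;
        6) echo reboot.target ;;
        *) echo "unknown runlevel: $1" >&2; return 1 ;;
    esac
}

# Print (rather than execute) the command that makes runlevel 5 the default.
echo "systemctl set-default $(runlevel_to_target 5)"
# prints: systemctl set-default graphical.target
```

Running the printed command as root and then `systemctl get-default` should confirm the new default.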

After installation, start the libvirtd service and enable it at boot:

[root@localhost ~] systemctl start libvirtd

[root@localhost ~] systemctl enable libvirtd

Set up KVM networking; the default mode is NAT.

[root@KVM01 ~] tail -4 /etc/hosts

192.168.1.112 KVM01

192.168.1.113 KVM02

192.168.1.111 NFS

192.168.1.114 NFS2

[root@KVM01 ~] scp /etc/hosts 192.168.1.111:/etc

[root@KVM01 ~] scp /etc/hosts 192.168.1.113:/etc

[root@KVM01 ~] scp /etc/hosts 192.168.1.114:/etc

NFS server: configure the shared directory /data/kvm-share

[root@NFS ~] cat /etc/exports

/data/kvm-share 192.168.1.112(rw,sync,no_root_squash)

/data/kvm-share 192.168.1.113(rw,sync,no_root_squash)

[root@NFS ~] mkdir -p /data/kvm-share

[root@NFS ~] systemctl start nfs

- DRBD deployment

DRBD explained in detail

Initial lab setup: disable the firewall and SELinux on all hosts

[root@localhost ~] iptables -F

[root@localhost ~] systemctl stop firewalld

[root@localhost ~] systemctl disable firewalld

[root@localhost ~] setenforce 0

[root@localhost ~] sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@NFS ~] rpm -ivh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

[root@NFS ~] yum install -y drbd84-utils kmod-drbd84

[root@NFS ~] modprobe drbd

[root@NFS ~] lsmod | grep drbd

drbd 397041 0

- DRBD configuration (on both NFS and NFS2)

[root@NFS ~] cp /etc/drbd.d/global_common.conf /etc/drbd.d/global_common.conf.bak

[root@NFS ~] vim /etc/drbd.d/global_common.conf

global {

usage-count yes;

}

common {

protocol C;

handlers {

}

startup {

wfc-timeout 240;

degr-wfc-timeout 240;

outdated-wfc-timeout 240;

}

disk {

on-io-error detach;

}

net {

cram-hmac-alg md5;

shared-secret "testdrbd";

}

syncer {

rate 30M;

}

}

[root@NFS ~] vim /etc/drbd.d/r0.res

resource r0 {

on NFS {

device /dev/drbd0;

disk /dev/sdb1;

address 192.168.1.111:7898; # the port can be chosen freely

meta-disk internal;

}

on NFS2 {

device /dev/drbd0;

disk /dev/sdb1;

address 192.168.1.114:7898;

meta-disk internal;

}

}

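- Add the DRBD disk on both machines (NFS and NFS2)

On each NFS machine, add a 10 GB disk for DRBD and partition it as /dev/sdb1 without formatting it. Create the /data directory on the local system, but do not mount anything yet.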

fdisk -l

Disk /dev/sdb: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

fdisk /dev/sdb

Enter "n -> p -> 1 -> Enter -> Enter -> w" in turn. After the partition has been created, run "fdisk /dev/sdb" again and enter p to see the new partition.

mkdir /data

- Create the DRBD device and initialize the r0 resource on both machines (NFS and NFS2)

[root@NFS ~] mknod /dev/drbd0 b 147 0

[root@NFS ~] drbdadm create-md r0

Writing meta data...

initializing activity log

NOT initialized bitmap

New drbd meta data block successfully created.

[root@NFS ~] drbdadm create-md r0

You want me to create a v08 style flexible-size internal meta data block.

There appears to be a v08 flexible-size internal meta data block

already in place on /dev/vdd1 at byte offset 10737340416

Do you really want to overwrite the existing v08 meta-data?

[need to type 'yes' to confirm] yes # type "yes" here

Writing meta data...

initializing activity log

NOT initialized bitmap

New drbd meta data block successfully created.

Start the drbd service (note: it must be started on both the primary and the secondary node to take effect):

[root@NFS ~] systemctl start drbd

[root@NFS ~] ps -ef | grep drbd

root 81611 7779 0 20:11 pts/0 00:00:00 grep --color=auto drbd

[root@NFS ~] cat /proc/drbd

version: 8.4.11-1 (api:1/proto:86-101)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, 2018-11-03 01:26:55

0: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r-----

ns:0 nr:0 dw:0 dr:0 al:8 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:10484380

[root@NFS2 ~] cat /proc/drbd

version: 8.4.11-1 (api:1/proto:86-101)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, 2018-11-03 01:26:55

0: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r-----

ns:0 nr:0 dw:0 dr:0 al:8 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:10484380

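In the DRBD status of both hosts above, ro:Secondary/Secondary means that both hosts are currently in the secondary role. ds is the disk state; it shows Inconsistent because DRBD cannot yet tell which side is the primary and whose disk data should be taken as the reference.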
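When these fields need to be read by a script rather than by eye, the resource line of /proc/drbd can be split on its `key:value` pairs. A minimal sketch, assuming the DRBD 8.4 /proc/drbd layout shown above and minor number 0; the function name `drbd_field` is illustrative.

```shell
#!/bin/bash
# Extract one field (cs, ro or ds) from the resource line of /proc/drbd.
# Reads the /proc/drbd text on stdin and prints the value for minor 0.
drbd_field() {
    awk -v key="$1" '
        $1 == "0:" {
            for (i = 2; i <= NF; i++) {
                n = index($i, ":")
                if (n > 0 && substr($i, 1, n - 1) == key) {
                    print substr($i, n + 1)
                    exit
                }
            }
        }'
}

# Typical use on a DRBD node:
#   drbd_field ro < /proc/drbd
#   drbd_field ds < /proc/drbd
```

With the status shown above, `drbd_field ro` would yield `Secondary/Secondary` and `drbd_field ds` would yield `Inconsistent/Inconsistent`.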

- Next, make the NFS host the DRBD primary node

[root@NFS ~] drbdsetup /dev/drbd0 primary --force

[root@NFS ~] cat /proc/drbd

version: 8.4.11-1 (api:1/proto:86-101)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, 2018-11-03 01:26:55

0: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r-----

ns:163528 nr:0 dw:0 dr:165648 al:8 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:10320852

[>....................] sync'ed: 1.7% (10076/10236)M

finish: 0:05:15 speed: 32,704 (32,704) K/sec

[root@NFS2 ~]# cat /proc/drbd

version: 8.4.11-1 (api:1/proto:86-101)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, 2018-11-03 01:26:55

0: cs:SyncTarget ro:Secondary/Primary ds:Inconsistent/UpToDate C r-----

ns:0 nr:1221508 dw:1221508 dr:0 al:8 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:9262872

[=>..................] sync'ed: 11.7% (9044/10236)M

finish: 0:03:51 speed: 40,056 (34,900) want: 41,040 K/sec

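ro shows Primary/Secondary on the primary node and Secondary/Primary on the secondary node.

Once the initial sync has finished, ds shows UpToDate/UpToDate, which means the primary/secondary setup succeeded.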
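The initial sync takes a few minutes, so a script that goes on to format and mount the device may want to wait for it. The following is a sketch under assumptions: the function name, the retry count and the interval are all illustrative, and the status file is a parameter so the logic can be tried on a saved copy of /proc/drbd.

```shell
#!/bin/bash
# Return 0 as soon as the status file reports ds:UpToDate/UpToDate.
# Polls every $interval seconds for at most $tries attempts, then returns 1.
wait_for_drbd_sync() {
    local status_file="${1:-/proc/drbd}"
    local tries="${2:-60}"
    local interval="${3:-5}"
    local i=0
    while [ "$i" -lt "$tries" ]; do
        if grep -q 'ds:UpToDate/UpToDate' "$status_file" 2>/dev/null; then
            return 0
        fi
        sleep "$interval"
        i=$((i + 1))
    done
    return 1
}

# Typical use on the primary node:
#   wait_for_drbd_sync /proc/drbd 120 5 && echo "both disks are UpToDate"
```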

- Mount DRBD (on NFS)

First format /dev/drbd0:

[root@NFS ~] mkfs.xfs /dev/drbd0

meta-data=/dev/drbd0 isize=512 agcount=4, agsize=655274 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=2621095, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Create the mount point, then mount the DRBD device:

[root@NFS ~] mkdir /data

[root@NFS ~] mount /dev/drbd0 /data

[root@NFS ~] df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/drbd0 xfs 10G 33M 10G 1% /data

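Important:

No operation of any kind, not even a read-only one, is allowed on the DRBD device on the Secondary node. All reads and writes must go through the Primary node.

Only when the Primary node goes down can the Secondary node be promoted to Primary.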

- DRBD primary/secondary failover test (optional)

Simulate a failure of the NFS node so that NFS2 takes over and is promoted to Primary.

The following was run on the NFS primary node:

[root@NFS ~] cd /data/

[root@NFS data] touch test{1..5}

[root@NFS data] ls

test1 test2 test3 test4 test5

[root@NFS data] cd ../

[root@NFS /] umount /data

[root@NFS /] drbdsetup /dev/drbd0 secondary # demote the Primary host to a DRBD secondary. In a real outage you would simply promote NFS2 to primary directly.

[root@NFS /] cat /proc/drbd

version: 8.4.11-1 (api:1/proto:86-101)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, 2018-11-03 01:26:55

0: cs:Connected ro:Secondary/Secondary ds:UpToDate/UpToDate C r-----

ns:10497043 nr:0 dw:12663 dr:10489714 al:16 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0

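Note: in a real production environment, if the NFS primary node crashes, ro in the status on NFS2 shows Secondary/Unknown, and all that is needed is to promote NFS2.

The following was run on the NFS2 backup node:

First promote it, that is, manually make NFS2 the DRBD primary node: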

[root@NFS2 ~] drbdsetup /dev/drbd0 primary

[root@NFS2 ~] cat /proc/drbd

version: 8.4.11-1 (api:1/proto:86-101)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, 2018-11-03 01:26:55

0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----

ns:0 nr:10497043 dw:10497043 dr:2120 al:8 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0

Then mount the DRBD device:

[root@NFS2 ~] mount /dev/drbd0 /data

[root@NFS2 ~] df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/centos-root xfs 50G 4.0G 47G 8% /

devtmpfs devtmpfs 894M 0 894M 0% /dev

tmpfs tmpfs 910M 0 910M 0% /dev/shm

tmpfs tmpfs 910M 9.9M 900M 2% /run

tmpfs tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/sr0 iso9660 4.3G 4.3G 0 100% /media/cdrom

/dev/sda1 xfs 1014M 179M 836M 18% /boot

/dev/mapper/centos-home xfs 27G 37M 27G 1% /home

tmpfs tmpfs 182M 0 182M 0% /run/user/0

/dev/drbd0 xfs 10G 33M 10G 1% /data

The DRBD mount point already contains the files written earlier on the remote NFS host:

[root@NFS2 ~] ls /data

test1 test2 test3 test4 test5

NFS deployment

- Install NFS on both hosts (NFS and NFS2)

[root@NFS ~] yum install rpcbind nfs-utils

[root@NFS ~] vim /etc/exports

/data/kvm-share 192.168.1.112(rw,sync,no_root_squash)

/data/kvm-share 192.168.1.113(rw,sync,no_root_squash)

[root@NFS ~] systemctl start rpcbind

[root@NFS ~] systemctl start nfs

- Verify from both KVM hosts (KVM01 and KVM02)

[root@KVM01 ~] showmount -e 192.168.1.114

Export list for 192.168.1.114:

/data/kvm-share 192.168.1.113,192.168.1.112

[root@KVM01 ~] showmount -e 192.168.1.111

Export list for 192.168.1.111:

/data/kvm-share 192.168.1.113,192.168.1.112

[root@KVM02 /] showmount -e 192.168.1.111

Export list for 192.168.1.111:

/data/kvm-share 192.168.1.113,192.168.1.112

[root@KVM02 /] showmount -e 192.168.1.114

Export list for 192.168.1.114:

/data/kvm-share 192.168.1.113,192.168.1.112

Keepalived deployment

- Install keepalived with yum on both machines (NFS and NFS2)

[root@NFS /] yum -y install keepalived

[root@NFS2 /] yum -y install keepalived

- Edit the configuration file on NFS and start keepalived

[root@NFS ~] vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id DRBD_MASTER

}

vrrp_script chk_nfs {

script "/etc/keepalived/check_nfs.sh"

interval 2

weight -5

}

vrrp_instance VI_1 {

state BACKUP

interface ens32

virtual_router_id 51

priority 100

nopreempt

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nfs

}

notify_master /etc/keepalived/notify_master.sh # run this script when this machine becomes the keepalived master

virtual_ipaddress {

192.168.1.100

}

}

[root@NFS ~] vim /etc/keepalived/check_nfs.sh

#!/bin/sh

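### Check NFS availability (here: whether the nfs service is running)

/usr/bin/systemctl status nfs &>/dev/null

if [ $? -ne 0 ];then

### Unmount the DRBD device

umount /dev/drbd0

### Demote DRBD from primary to secondary

drbdadm secondary r0

# Stop keepalived so the VIP moves to the peer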

/usr/bin/systemctl stop keepalived

fi

[root@NFS ~] chmod 755 /etc/keepalived/check_nfs.sh

[root@NFS ~] vim /etc/keepalived/notify_master.sh

#!/bin/bash

/usr/sbin/drbdadm primary r0 &>/dev/null

/usr/bin/mount /dev/drbd0 /data &>/dev/null

/usr/bin/systemctl restart nfs &>/dev/null

[root@NFS ~] chmod 755 /etc/keepalived/notify_master.sh

[root@NFS ~] systemctl restart keepalived
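The notify_master.sh script above discards all output and keeps going even if a step fails, so a failed promotion would be followed by a mount attempt on a device that is still secondary. One possible variant, offered as a sketch rather than as the article's method, stops at the first failing step and logs it. The `*_BIN` variables and the function names are hypothetical; they exist only so the logic can be dry-run with stub commands.

```shell
#!/bin/bash
# Sketch of a notify_master.sh variant that stops at the first failing step.
# The *_BIN variables are hypothetical overrides for dry runs.
DRBDADM_BIN="${DRBDADM_BIN:-/usr/sbin/drbdadm}"
MOUNT_BIN="${MOUNT_BIN:-/usr/bin/mount}"
SYSTEMCTL_BIN="${SYSTEMCTL_BIN:-/usr/bin/systemctl}"
LOGGER_BIN="${LOGGER_BIN:-/usr/bin/logger}"

fail() {
    # Record the failing step in syslog under the tag "notify_master".
    "$LOGGER_BIN" -t notify_master "$1 failed"
}

promote_to_master() {
    "$DRBDADM_BIN" primary r0     || { fail "drbdadm primary r0"; return 1; }
    "$MOUNT_BIN" /dev/drbd0 /data || { fail "mount /dev/drbd0 /data"; return 2; }
    "$SYSTEMCTL_BIN" restart nfs  || { fail "systemctl restart nfs"; return 3; }
    return 0
}

# When installed as /etc/keepalived/notify_master.sh, end the script with:
#   promote_to_master
```

The distinct return codes make it possible to tell from the keepalived log which of the three steps broke the takeover.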

Check the VIP:

[root@NFS ~] ip a | grep ens32

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 192.168.1.111/24 brd 192.168.1.255 scope global noprefixroute ens32

inet 192.168.1.100/32 scope global ens32
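The same check can be scripted, which is handy when repeating the failover test later. A minimal sketch; `has_vip` is an illustrative name, and the function reads `ip addr` output on stdin so that it can also be fed saved output.

```shell
#!/bin/bash
# Succeed if the given address appears as an "inet" entry in `ip addr`
# output supplied on stdin.
has_vip() {
    local vip="$1"
    grep -q -F "inet $vip/"
}

# Typical use on either NFS node:
#   ip -4 addr show ens32 | has_vip 192.168.1.100 && echo "this node holds the VIP"
```

The trailing slash in the pattern keeps 192.168.1.10 from matching 192.168.1.100.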

- Edit the configuration file on NFS2

[root@NFS2 ~] vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id DRBD_MASTER

}

vrrp_script chk_nfs {

script "/etc/keepalived/check_nfs.sh"

interval 2

weight -5

}

vrrp_instance VI_1 {

state BACKUP

interface ens32

virtual_router_id 51

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nfs

}

notify_master /etc/keepalived/notify_master.sh # run this script when this machine becomes the keepalived master

virtual_ipaddress {

192.168.1.100

}

}

[root@NFS2 ~] vim /etc/keepalived/check_nfs.sh

#!/bin/sh

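### Check NFS availability (here: whether the nfs service is running)

/usr/bin/systemctl status nfs &>/dev/null

if [ $? -ne 0 ];then

### Unmount the DRBD device

umount /dev/drbd0

### Demote DRBD from primary to secondary

drbdadm secondary r0

# Stop keepalived so the VIP moves to the peer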

/usr/bin/systemctl stop keepalived

fi

[root@NFS2 ~] chmod 755 /etc/keepalived/check_nfs.sh

[root@NFS2 ~] vim /etc/keepalived/notify_master.sh

#!/bin/bash

/usr/sbin/drbdadm primary r0 &>/dev/null

/usr/bin/mount /dev/drbd0 /data &>/dev/null

/usr/bin/systemctl restart nfs &>/dev/null

[root@NFS2 ~] chmod 755 /etc/keepalived/notify_master.sh

[root@NFS2 ~] systemctl restart keepalived

Verify NFS high availability

- Simulate an outage of the nfs service

[root@NFS ~] systemctl stop nfs

[root@NFS ~] ip a | grep ens32

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 192.168.1.111/24 brd 192.168.1.255 scope global noprefixroute ens32

// The VIP is gone from this node

[root@NFS ~] cat /proc/drbd

version: 8.4.11-1 (api:1/proto:86-101)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, 2018-11-03 01:26:55

0: cs:Connected ro:Secondary/Primary ds:UpToDate/UpToDate C r-----

ns:10501164 nr:6171 dw:22955 dr:10496179 al:16 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0

// DRBD on this node is now Secondary/Primary

- Check NFS2 (it now holds the VIP and has become Primary/Secondary)

[root@NFS2 ~] ip a | grep ens32

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 192.168.1.114/24 brd 192.168.1.255 scope global noprefixroute ens32

inet 192.168.1.100/32 scope global ens32

[root@NFS2 ~] cat /proc/drbd

version: 8.4.11-1 (api:1/proto:86-101)

GIT-hash: 66145a308421e9c124ec391a7848ac20203bb03c build by mockbuild@, 2018-11-03 01:26:55

0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----

ns:6171 nr:10501164 dw:10507335 dr:7581 al:10 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0

[root@NFS2 ~] ls /data/

aaaa test1 test2 test3 test4 test5

KVM

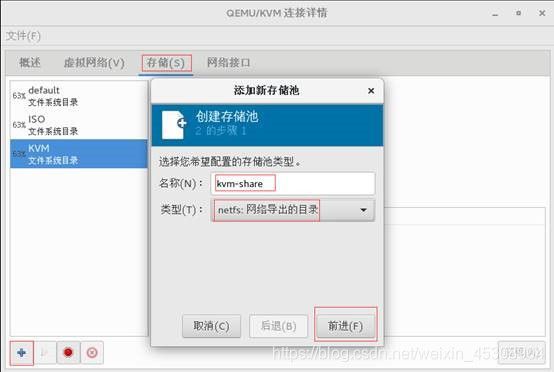

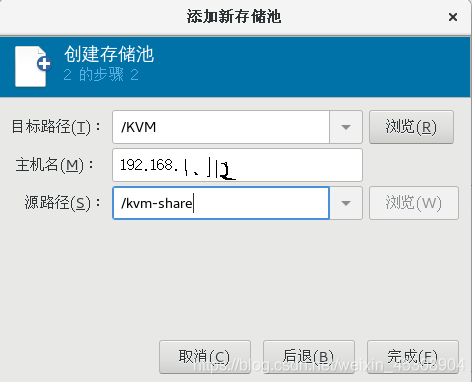

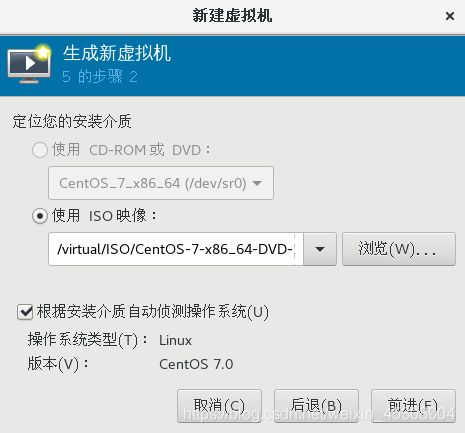

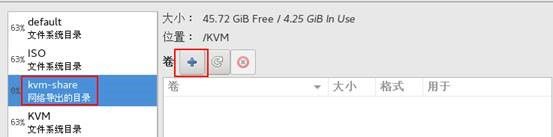

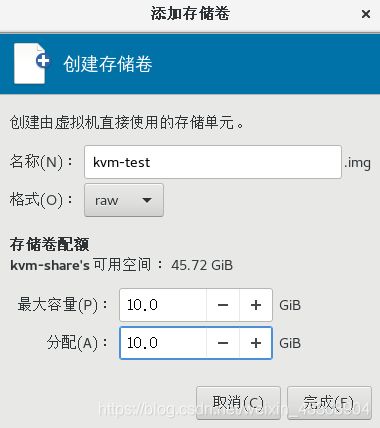

Connect both KVM hosts to the shared storage: double-click localhost (QEMU), click Storage, then click the plus sign.

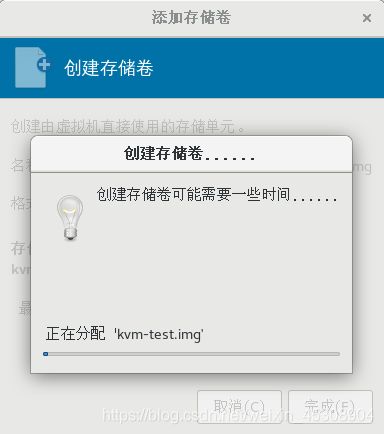

This step is fairly slow, so be patient.

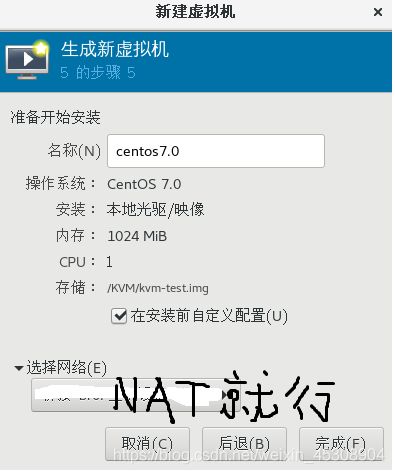

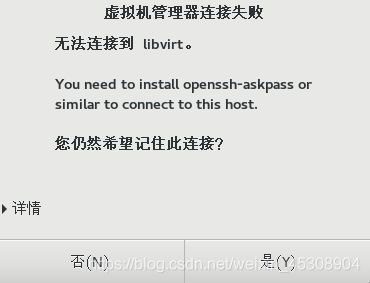

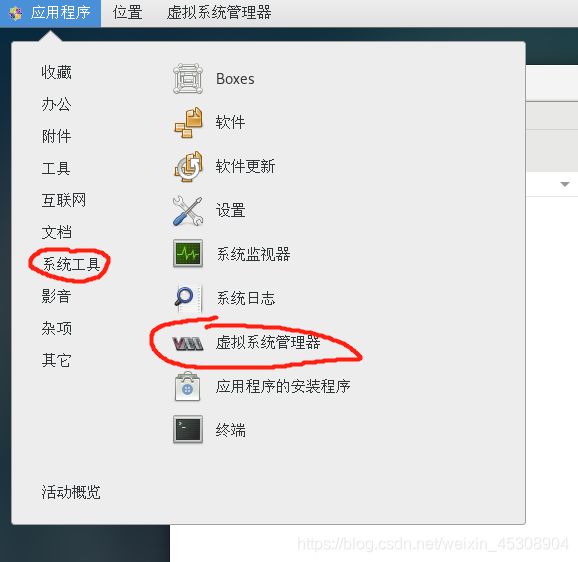

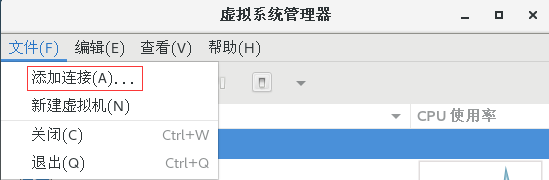

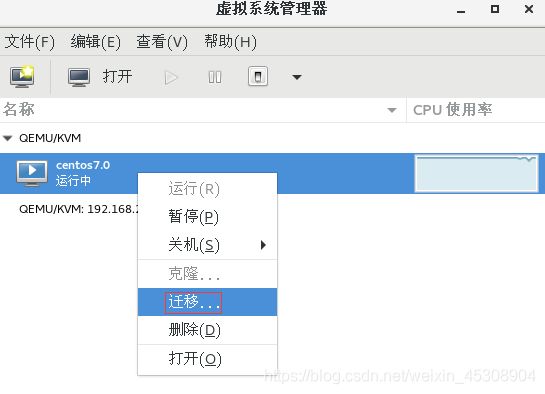

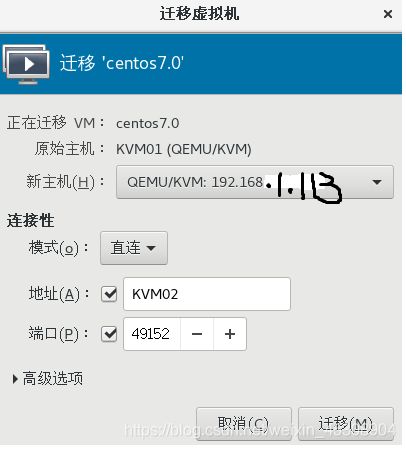

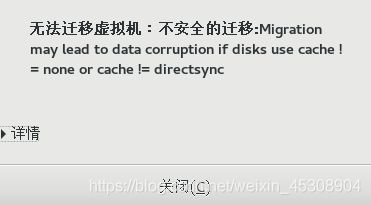
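The storage pool added through virt-manager in the screenshots can also be defined from the command line. The sketch below prints a libvirt `netfs` pool definition. Everything passed to it is an assumption, since the values used in the screenshots are not stated in the text: the pool name kvm-share, the keepalived VIP 192.168.1.100 as the NFS server, and /data/kvm-share as the local mount point on each KVM host.

```shell
#!/bin/bash
# Print a libvirt "netfs" storage pool definition for an NFS export.
# Arguments: pool name, NFS server address, exported path, local mount point.
nfs_pool_xml() {
    cat <<EOF
<pool type='netfs'>
  <name>$1</name>
  <source>
    <host name='$2'/>
    <dir path='$3'/>
    <format type='nfs'/>
  </source>
  <target>
    <path>$4</path>
  </target>
</pool>
EOF
}

# Assumed values (see the paragraph above); adjust them to the real setup.
nfs_pool_xml kvm-share 192.168.1.100 /data/kvm-share /data/kvm-share
```

Saving the output to a file such as kvm-share.xml and running `virsh pool-define kvm-share.xml`, `virsh pool-build kvm-share`, `virsh pool-start kvm-share` and `virsh pool-autostart kvm-share` on both KVM hosts should give each of them the same pool.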

KVM migration: open virt-manager, choose File, then Add Connection.

Connect the source host to the destination host:

[root@KVM01 ~]# yum -y install openssh-askpass

[root@KVM02 ~]# yum -y install openssh-askpass

[root@KVM01 ~]# virsh shutdown centos7.0

Domain centos7.0 is being shutdown

[root@KVM01 ~]# virsh edit centos7.0

[root@KVM01 ~]# virsh start centos7.0

Domain centos7.0 started

[root@KVM01 ~]# virsh list --all

Id Name State

2 centos7.0 running
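The article performs the migration through virt-manager. For reference, a command-line equivalent can be assembled with `virsh migrate`, whose `--live`, `--persistent` and `--verbose` options are standard. The helper below only builds and prints the command; the function name is hypothetical, and the destination 192.168.1.113 (KVM02) is taken from the environment table.

```shell
#!/bin/bash
# Build (but do not run) a virsh live-migration command line.
# Arguments: domain name, destination host.
build_migrate_cmd() {
    local domain="$1"
    local dest="$2"
    printf 'virsh migrate --live --persistent --verbose %s qemu+ssh://%s/system\n' "$domain" "$dest"
}

# Example with the names used in this article: move centos7.0 to KVM02.
build_migrate_cmd centos7.0 192.168.1.113
# prints: virsh migrate --live --persistent --verbose centos7.0 qemu+ssh://192.168.1.113/system
```

Run on KVM01, the printed command would ask for the root password of KVM02 over SSH. Depending on the disk cache mode of the guest, libvirt may refuse to migrate a domain on NFS storage until the cache setting is changed in the domain XML.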