High availability with CentOS 7 + Pacemaker + Corosync + HAProxy

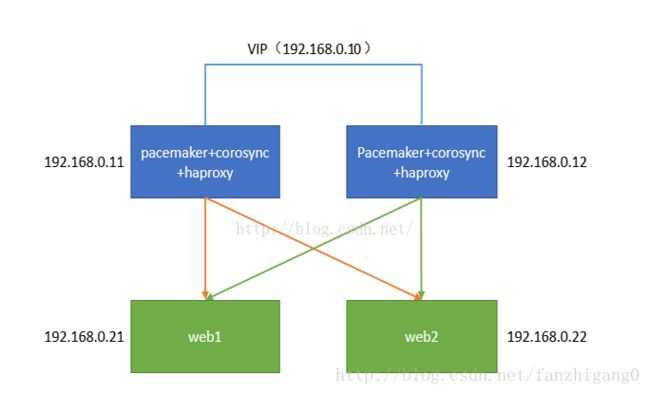

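1. Architecture preparation

The operating system is CentOS 7 (1511). The experiment needs 4 hosts (virtual machines are fine): the two HAProxy nodes ha1 and ha2, plus the two back-end servers used later in the HAProxy configuration.

2. System environment configuration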

ha1: 192.168.0.11

ha2: 192.168.0.12

Perform the following installation and configuration on both of these machines.

1. Disable the firewall and SELinux

systemctl disable firewalld

systemctl stop firewalld

iptables -F

Edit the configuration file (vim /etc/selinux/config) and set SELINUX to disabled.

Alternatively, use this command:

sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config
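The sed expression above matches any line that contains SELINUX. A slightly stricter variant anchors on the SELINUX= key only. The sketch below tries it on a throwaway sample file; the sample contents and the temporary path are assumptions for illustration, not the real /etc/selinux/config.

```shell
# Work on a temporary sample instead of the real /etc/selinux/config.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
# SELINUX= can take one of these three values:
SELINUX=enforcing
SELINUXTYPE=targeted
EOF

# Rewrite only the SELINUX= key; the comment and SELINUXTYPE stay untouched.
sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"

selinux_mode=$(grep '^SELINUX=' "$cfg")
echo "$selinux_mode"

# On a real host (not run here), the file change applies after a reboot;
# "setenforce 0" switches the running system to permissive mode right away.
```

To apply the same expression for real, point it at /etc/selinux/config instead of the sample file.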

2. Set the hostnames of the two hosts to ha1 and ha2 respectively

hostnamectl --static --transient set-hostname ha1

hostnamectl --static --transient set-hostname ha2

3. Edit the hosts file

vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.0.11 ha1

192.168.0.12 ha2

4. Synchronize the time

yum install ntp -y

ntpdate cn.pool.ntp.org

5. Set up mutual SSH trust between the two hosts

ha1:

ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ''

scp /root/.ssh/id_rsa.pub root@ha2:/root/.ssh/authorized_keys

ha2:

ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ''

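scp /root/.ssh/id_rsa.pub root@ha1:/root/.ssh/authorized_keys

3. Install and configure Pacemaker + Corosync

1. Install Pacemaker + Corosync

Install the required services on hosts 192.168.0.11 and 192.168.0.12. Run the following steps on both machines:

yum install pcs pacemaker corosync fence-agents-all -y

2. Start the pcsd service (and enable it at boot)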

systemctl start pcsd.service

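systemctl enable pcsd.service

3. Set a password for the cluster user

passwd hacluster

(The hacluster user is created automatically when pcs is installed.)

All of the steps above are run on both hosts.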
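Because the remaining steps assume the packages are present on both nodes, a quick preflight check can save some confusion. The following is a minimal sketch; check_cmds is a hypothetical helper name, and it only looks for the pcs, pacemakerd and corosync binaries on PATH.

```shell
# Return 0 if every named command is on PATH; otherwise list the missing ones.
check_cmds() {
    missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd"
            missing=$((missing + 1))
        fi
    done
    [ "$missing" -eq 0 ]
}

# pcs is the management CLI; pacemakerd and corosync are the daemons.
if check_cmds pcs pacemakerd corosync; then
    echo "cluster packages look installed"
else
    echo "install the packages listed above before continuing"
fi

# On a real node, also confirm pcsd is running and enabled (not run here):
#   systemctl is-active pcsd.service
#   systemctl is-enabled pcsd.service
```

Running it on both ha1 and ha2 before the authentication step gives an early hint if one node was skipped.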

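4. Authenticate the cluster nodes to each other

pcs cluster auth ha1 ha2

(The user name entered here must be hacluster, the user created automatically by pcs; authentication with any other user will not succeed.)

5. Create and start a cluster named my_cluster, with ha1 and ha2 as its members

pcs cluster setup --start --name my_cluster ha1 ha2

6. Enable the cluster to start at boot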

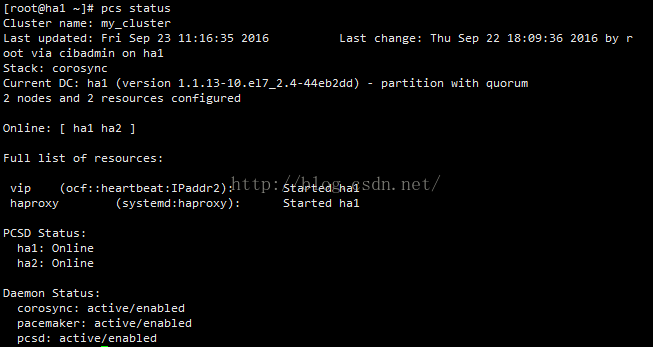

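pcs cluster enable --all

7. Check the cluster status

pcs cluster status

ps aux | grep pacemaker

Verify the Corosync installation and its current state:

corosync-cfgtool -s

corosync-cmapctl | grep members

pcs status corosync

Check whether the configuration is valid (no output means it is valid):

crm_verify -L -V

If this reports an error about STONITH, disable STONITH:

pcs property set stonith-enabled=false

When quorum cannot be established, ignore the loss of quorum: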

pcs property set no-quorum-policy=ignore
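The reason a two-node lab needs this setting comes down to majority arithmetic: quorum is floor(n/2) + 1 votes, so with 2 nodes both must be up. A small sketch of that calculation follows; quorum_needed is a hypothetical helper name and each node is assumed to carry one vote.

```shell
# Votes required for quorum in a cluster of $1 single-vote nodes.
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

for n in 2 3 5; do
    q=$(quorum_needed "$n")
    echo "$n nodes: quorum=$q, tolerated failures=$(( n - q ))"
done
```

With 2 nodes the tolerated failure count is 0, which is why the surviving node would otherwise stop its resources when its peer goes down.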

Configure the VIP resource:

pcs resource create vip ocf:heartbeat:IPaddr2 ip=192.168.0.10 nic='enp0s3' cidr_netmask='24' broadcast='192.168.0.255' op monitor interval=5s timeout=20s on-fail=restart

Configure the HAProxy resource:

pcs resource create haproxy systemd:haproxy op monitor interval="5s"

If the haproxy resource is not found, install HAProxy first and then create the resource again:

yum install haproxy -y

pcs resource create haproxy systemd:haproxy op monitor interval="5s"

Require HAProxy and the VIP to run on the same node:

pcs constraint colocation add vip with haproxy INFINITY

Define an ordering constraint so that the VIP starts before HAProxy:

pcs constraint order vip then haproxy

Note: to run a resource on multiple nodes, add --clone when creating it.
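The VIP resource earlier in this section hard-codes the interface name, prefix length and broadcast address, all of which depend on the local network. As a rough sketch, the broadcast value can be derived from the address and prefix instead of typed by hand. broadcast_of is a hypothetical helper, and the VIP, NIC and PREFIX values simply repeat the ones used in this article.

```shell
# Compute the IPv4 broadcast address for an address ($1) and prefix length ($2).
# The function body runs in a subshell so the IFS change stays local.
broadcast_of() (
    ip=$1; prefix=$2
    IFS=.
    set -- $ip                                   # split into four octets
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    host_bits=$(( (1 << (32 - prefix)) - 1 ))    # all host bits set to 1
    bcast=$(( addr | host_bits ))
    echo "$(( (bcast >> 24) & 255 )).$(( (bcast >> 16) & 255 )).$(( (bcast >> 8) & 255 )).$(( bcast & 255 ))"
)

# Values from this article; adjust to the local network.
# "ip -o -4 addr show" lists interface names on a real host (not run here).
VIP=192.168.0.10
NIC=enp0s3
PREFIX=24

# Only builds and prints the command; nothing is executed against a cluster.
vip_cmd="pcs resource create vip ocf:heartbeat:IPaddr2 ip=$VIP nic=$NIC cidr_netmask=$PREFIX broadcast=$(broadcast_of "$VIP" "$PREFIX") op monitor interval=5s timeout=20s on-fail=restart"
echo "$vip_cmd"
```

The printed line can be reviewed and then pasted on one cluster node.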

4. Configure HAProxy

vim /etc/haproxy/haproxy.cfg

The configuration is not explained in detail here; this environment uses the following:

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen admin_stats

stats enable

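bind *:8080 # IP address and port to listen on

mode http # run this listener in HTTP mode

option httplog

log global

stats refresh 30s # auto-refresh interval of the statistics page

stats uri /haproxy # URI of the statistics page, i.e. ip:8080/haproxy

stats realm haproxy

stats auth admin:admin # user name and password for authentication

stats hide-version # hide the HAProxy version number

stats admin if TRUE # admin interface: once authenticated, nodes can be managed through the web UI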

listen galera_cluster

bind *:8888

balance roundrobin

option tcpka

option httpchk

option tcplog

server controller1 192.168.0.21:80 check port 80 inter 2000 rise 2 fall 5

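server controller2 192.168.0.22:80 check port 80 inter 2000 rise 2 fall 5

The haproxy service does not need to be started manually; it is started along with Corosync as a cluster resource.

On the last 2 hosts (the back-end servers), simply install httpd (yum install httpd -y).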
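Once httpd is running on both back ends, it can help to confirm that the addresses in the HAProxy configuration actually answer. The sketch below pulls the server addresses out of a config file; list_backends is a hypothetical helper, and the sample file only mirrors the galera_cluster section above rather than reading the real /etc/haproxy/haproxy.cfg.

```shell
# Print the address:port of every "server" line in an HAProxy config file.
list_backends() {
    awk '$1 == "server" { print $3 }' "$1"
}

# Sample fragment copied from the listen section in this article.
sample=$(mktemp)
cat > "$sample" <<'EOF'
listen galera_cluster
    bind *:8888
    balance roundrobin
    server controller1 192.168.0.21:80 check port 80 inter 2000 rise 2 fall 5
    server controller2 192.168.0.22:80 check port 80 inter 2000 rise 2 fall 5
EOF

backends=$(list_backends "$sample")
echo "$backends"

# On a real node (not run here):
#   haproxy -c -f /etc/haproxy/haproxy.cfg        # syntax check only
#   for b in $(list_backends /etc/haproxy/haproxy.cfg); do
#       curl -s -o /dev/null -w "$b -> %{http_code}\n" "http://$b/"
#   done
```

The commented loop prints the HTTP status code returned by each back end, which makes a stopped httpd easy to spot before testing through the VIP.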

Finally, check the cluster status:

pcs status

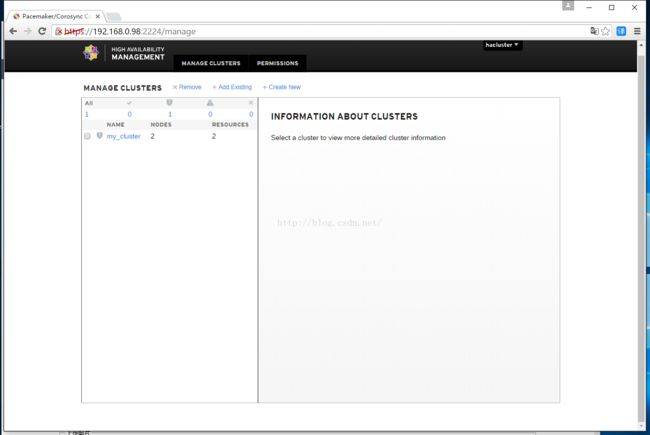
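With both resources started, a simple way to exercise failover is to put one node in standby and watch the VIP and HAProxy move to the other. This is a sketch under assumptions: it uses the pcs 0.9 syntax shipped with CentOS 7 (pcs cluster standby / unstandby), the run and failover_test helpers are hypothetical names, and DRY_RUN defaults to 1 so the commands are only printed.

```shell
# DRY_RUN=1 (the default here) only prints each command; set DRY_RUN=0 on a
# real cluster node to execute them.
DRY_RUN=${DRY_RUN:-1}
NODE=${NODE:-ha1}   # node to take out of service (assumed to hold the VIP)

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

failover_test() {
    run pcs cluster standby "$NODE"     # move resources off the node
    run pcs status                      # vip and haproxy should now be on the peer
    run pcs cluster unstandby "$NODE"   # allow the node to host resources again
}

plan=$(failover_test)
echo "$plan"
```

During a real run, a client pointed at 192.168.0.10:8888 should keep getting answers after a short interruption while the resources move.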

Check the cluster status in the web UI:

https://192.168.0.10:2224

Log in with the cluster account hacluster, then choose Add Existing to add the existing cluster.