MySQL high availability with pacemaker + corosync

How it works

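Create a cluster, add the nodes, and add a shared disk as a shared resource. The shared disk is mounted on the MySQL data directory /var/lib/mysql/, so whichever node holds the resource mounts the disk, and that node is the one that can use the database. The session below shows that the data directory stays empty until mariadb is started for the first time, which creates the data files in it; the files remain after the service stops.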

[root@toto1 ~]# ls /var/lib/mysql/

[root@toto1 ~]# systemctl start mariadb.service

[root@toto1 ~]# ls /var/lib/mysql/

aria_log.00000001 ibdata1 ib_logfile1 mysql.sock test

aria_log_control ib_logfile0 mysql performance_schema

[root@toto1 ~]# systemctl stop mariadb.service

[root@toto1 ~]# ls /var/lib/mysql/

aria_log.00000001 ibdata1 ib_logfile1 performance_schema

aria_log_control ib_logfile0 mysql test
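Because the cluster will mount the shared disk over /var/lib/mysql, that disk needs the same first-start initialization once, before pacemaker takes over. The script below is a minimal sketch of that one-time step, assuming /dev/sdb1 is visible to both nodes, holds nothing you need (mkfs.xfs destroys its contents), and mariadb is stopped on every node. `DRY_RUN`, `run` and `prepare_shared_disk` are hypothetical names; by default the script only prints the commands.

```shell
#!/usr/bin/env bash
# One-time preparation of the shared disk. Run on ONE node only.
# Assumptions: /dev/sdb1 is the shared disk, its contents may be destroyed,
# and mariadb is stopped on every node. DRY_RUN=1 (default) only prints.
set -euo pipefail

DEV=${DEV:-/dev/sdb1}
DATADIR=${DATADIR:-/var/lib/mysql}
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

prepare_shared_disk() {
  run mkfs.xfs -f "$DEV"               # same fstype as the mysql_data resource
  run mount "$DEV" "$DATADIR"
  run chown mysql:mysql "$DATADIR"     # mariadb must own its data directory
  run systemctl start mariadb.service  # first start creates the data files
  run systemctl stop mariadb.service
  run umount "$DATADIR"                # from now on pacemaker mounts the disk
}

prepare_shared_disk
```

Run it with `DRY_RUN=0` on one node only. XFS is not a cluster filesystem, so formatting it from both nodes, or mounting it on both at the same time, would corrupt it.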

1. Install mariadb on every server that will be a cluster node

yum install mariadb-server.x86_64 -y
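The install can also be driven from a single host. A minimal sketch, assuming the node names toto1 and toto2 resolve and root can log in to each over SSH without a password; `NODES`, `DRY_RUN` and `install_on_nodes` are hypothetical names. It also disables the mariadb unit at boot, since pacemaker, not systemd, should decide where the service starts.

```shell
#!/usr/bin/env bash
# Install mariadb on every cluster node from one admin host.
# Assumption: passwordless root SSH to each node. DRY_RUN=1 (default) only prints.
set -euo pipefail

read -r -a NODES <<< "${NODES:-toto1 toto2}"
DRY_RUN=${DRY_RUN:-1}

install_on_nodes() {
  local node cmd
  for node in "${NODES[@]}"; do
    # pacemaker, not systemd, decides where mariadb runs, so keep it disabled at boot
    for cmd in "yum install -y mariadb-server.x86_64" "systemctl disable mariadb.service"; do
      if [ "$DRY_RUN" = 1 ]; then
        echo "ssh root@$node $cmd"
      else
        ssh "root@$node" "$cmd"
      fi
    done
  done
}

install_on_nodes
```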

2. Add the resources

vip: add the virtual IP (VIP) resource

pcs resource create totovip1 ocf:heartbeat:IPaddr2 ip=172.25.13.100 cidr_netmask=32 op monitor interval=30s

mysql_data: add the filesystem resource

pcs resource create mysql_data ocf:heartbeat:Filesystem device=/dev/sdb1 directory=/var/lib/mysql fstype=xfs op monitor interval=30s

mariadb: add the database service

pcs resource create mariadb systemd:mariadb op monitor interval=1min

mysql_group: add the resources to the mysql_group group

pcs resource group add mysql_group mysql_data totovip1 mariadb
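Members of a pcs resource group are kept on the same node, started in the order they are listed and stopped in reverse order. Listing mysql_data first therefore means the shared disk is mounted before mariadb starts, and mariadb is stopped before the disk is unmounted. The sketch below wraps the four commands above in one function with the article's values as defaults; `DRY_RUN` and `create_mysql_group` are hypothetical names, and by default the commands are only printed.

```shell
#!/usr/bin/env bash
# Create the mysql_group resources with this article's values.
# DRY_RUN=1 (default) only prints the pcs commands.
set -euo pipefail

VIP=${VIP:-172.25.13.100}
DEV=${DEV:-/dev/sdb1}
DRY_RUN=${DRY_RUN:-1}

run() { if [ "$DRY_RUN" = 1 ]; then echo "$*"; else "$@"; fi; }

create_mysql_group() {
  run pcs resource create totovip1 ocf:heartbeat:IPaddr2 ip="$VIP" cidr_netmask=32 op monitor interval=30s
  run pcs resource create mysql_data ocf:heartbeat:Filesystem device="$DEV" directory=/var/lib/mysql fstype=xfs op monitor interval=30s
  run pcs resource create mariadb systemd:mariadb op monitor interval=1min
  # group order is start order: disk first, then the VIP, then the database
  run pcs resource group add mysql_group mysql_data totovip1 mariadb
}

create_mysql_group
```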

Test:

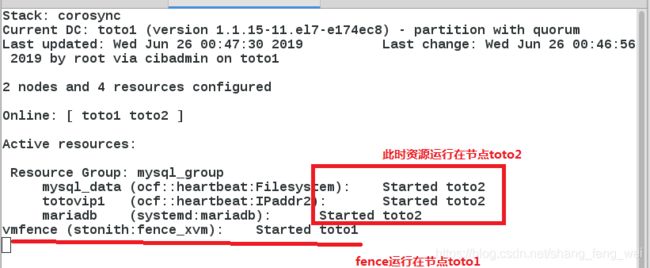

The database resources are running on node toto2:

[root@toto1 ~]# pcs status

Cluster name: toto_cluster

Stack: corosync

Current DC: toto1 (version 1.1.15-11.el7-e174ec8) - partition with quorum

Last updated: Wed Jun 26 00:14:39 2019 Last change: Wed Jun 26 00:09:25 2019 by root via cibadmin on toto1

2 nodes and 4 resources configured

Online: [ toto1 toto2 ]

Full list of resources:

vmfence (stonith:fence_xvm): Started toto1

Resource Group: mysql_group

mysql_data (ocf::heartbeat:Filesystem): Started toto2

totovip1 (ocf::heartbeat:IPaddr2): Started toto2

mariadb (systemd:mariadb): Started toto2 # the resources are running on node toto2
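When testing failover it helps to read the current node from a script rather than by eye. A minimal sketch, assuming the `<id> (<agent>): Started <node>` layout shown above; pcs output differs between versions (for example, newer releases prefix resource lines with `*`, which this parser tolerates), so treat it as a convenience, not a stable interface. `resource_node` is a hypothetical helper, not part of pcs.

```shell
#!/usr/bin/env bash
# Print the node a resource is "Started" on, parsed from `pcs status` text.
set -euo pipefail

resource_node() {
  # $1 = resource id; reads status text on stdin; prints nothing if not started
  awk -v res="$1" '
    NF >= 3 && $(NF - 1) == "Started" {
      for (i = 1; i < NF - 1; i++)
        if ($i == res) { print $NF; exit }
    }'
}

# Usage on a cluster node:
#   pcs status | resource_node mariadb
```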

Check where the shared disk is mounted on each node:

Node toto1: the shared disk is not mounted and the database is not running.

[root@toto1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/rhel-root 17811456 1272992 16538464 8% /

devtmpfs 497292 0 497292 0% /dev

tmpfs 508264 55056 453208 11% /dev/shm

tmpfs 508264 13088 495176 3% /run

tmpfs 508264 0 508264 0% /sys/fs/cgroup

/dev/sda1 1038336 141512 896824 14% /boot

tmpfs 101656 0 101656 0% /run/user/0

Node toto2: the shared disk is mounted on /var/lib/mysql/ and the database is running.

[root@toto2 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sdb1 20960256 62952 20897304 1% /var/lib/mysql
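The same check can be scripted on each node. A minimal sketch, assuming Linux `/proc/mounts` and the device and directory used in this article; `mounted_source` and `shared_disk_mounted` are hypothetical names. Exactly one node should report the disk as mounted.

```shell
#!/usr/bin/env bash
# Check whether the shared disk is what is mounted on the MySQL data directory.
# Reads /proc/mounts, so Linux only.
set -euo pipefail

mounted_source() {
  # $1 = mount point; the last matching /proc/mounts entry is the visible mount
  awk -v mp="$1" '$2 == mp { src = $1 } END { if (src != "") print src }' /proc/mounts
}

shared_disk_mounted() {
  local dev=${1:-/dev/sdb1} mp=${2:-/var/lib/mysql}
  [ "$(mounted_source "$mp")" = "$dev" ]
}

if shared_disk_mounted; then
  echo "${HOSTNAME:-this node}: /dev/sdb1 is mounted on /var/lib/mysql"
else
  echo "${HOSTNAME:-this node}: shared disk is not mounted here"
fi
```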

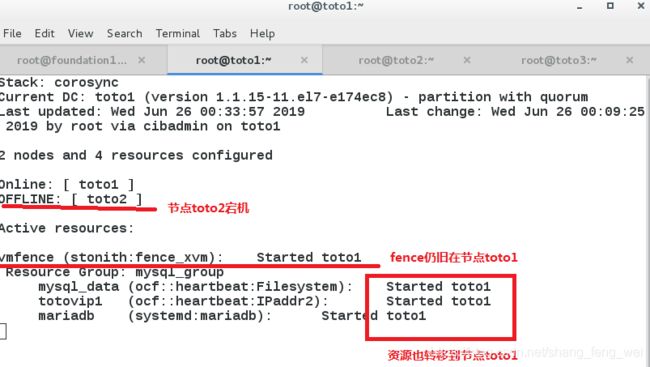

When node toto2 fails and the resources move to node toto1, the shared disk is mounted on toto1 and the database runs on toto1:

[root@toto1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/sdb1 20960256 62952 20897304 1% /var/lib/mysql # the shared disk is mounted

[root@toto1 ~]# systemctl status mariadb.service # the service was started automatically

Active: active (running) since Wed 2019-06-26 00:19:24 CST; 19s ago

[root@toto1 ~]# mysql # the database works normally

Welcome to the MariaDB monitor. Commands end with ; or \g.

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| test |

+--------------------+

4 rows in set (0.00 sec)

MariaDB [(none)]> quit

Bye

At this point, high availability for the MySQL service is in place.

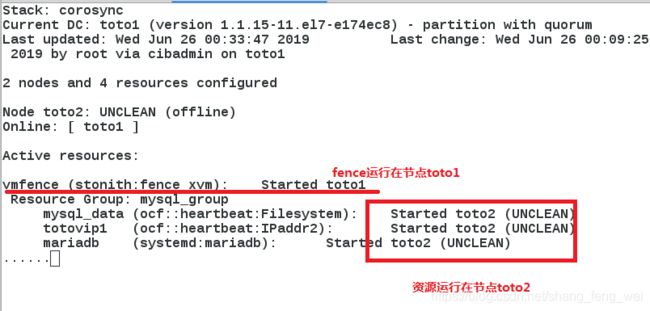

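Observed behavior: the resources are currently on node toto2. When toto2 goes down, the resources temporarily move to toto1, but once toto2 has rebooted, they move back to toto2.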

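This placement is not what we want: once toto2 is back, the resources should not move again. Since we have not defined any failure domain (preferred node), the service resources should stay on toto1 and the fence device should run on toto2. The unwanted move back comes from the resource-stickiness setting. It can be fixed with the following steps: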
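Pacemaker generally places each resource on the node with the highest allocation score, and `resource-stickiness` adds its value to the score of the node a resource is currently running on, so a non-zero value makes resources prefer to stay where they are. The scores can be inspected on a live cluster with `crm_simulate -sL` (`-s` shows scores, `-L` uses the live cluster state). In pacemaker 1.1 the relevant lines look roughly like `native_color: mariadb allocation score on toto1: 0`; the hypothetical helper below extracts node/score pairs for one resource from that text, and the format may differ in other versions.

```shell
#!/usr/bin/env bash
# Extract "node score" pairs for one resource from `crm_simulate -sL` output.
set -euo pipefail

allocation_scores() {
  # $1 = resource id; expects lines like "... <id> allocation score on <node>: <score>"
  awk -v res="$1" '
    / allocation score on / {
      for (i = 1; i + 4 <= NF; i++)
        if ($i == res && $(i + 1) == "allocation") {
          node = $(i + 4); sub(/:$/, "", node)
          print node, $NF
        }
    }'
}

# Usage on a cluster node:
#   crm_simulate -sL | allocation_scores mariadb
```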

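1. Delete the previously created resources, group, and fence device (deleting the group also deletes the resources it contains)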

pcs resource delete mysql_group

pcs resource delete vmfence

pcs property set stonith-enabled=false

2. Re-create the resources and the group

pcs resource create totovip1 ocf:heartbeat:IPaddr2 ip=172.25.13.100 cidr_netmask=32 op monitor interval=30s

pcs resource create mysql_data ocf:heartbeat:Filesystem device=/dev/sdb1 directory=/var/lib/mysql fstype=xfs op monitor interval=30s

pcs resource create mariadb systemd:mariadb op monitor interval=1min

pcs resource group add mysql_group mysql_data totovip1 mariadb

3. Set resource-stickiness to 100

[root@toto1 ~]# pcs resource defaults resource-stickiness=100

[root@toto1 ~]# pcs resource defaults

resource-stickiness: 100

4. Re-add the fence device and set resource-stickiness back to 0

[root@toto1 ~]# pcs stonith create vmfence fence_xvm pcmk_host_map="toto1:toto1;toto2:toto2" op monitor interval=1min

[root@toto1 ~]# pcs resource defaults resource-stickiness=0

[root@toto1 ~]# pcs resource defaults

resource-stickiness: 0

5. Enable STONITH

pcs property set stonith-enabled=true
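After step 5 it is worth confirming that both settings took effect. `pcs resource defaults` prints `resource-stickiness: 0` as shown above, and `pcs property show stonith-enabled` is assumed here to print the property in the same `name: value` form (pcs 0.9; newer pcs versions use `pcs property config`). A minimal sketch of a parser for those lines; `setting_value` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read one "name: value" setting from pcs output on stdin.
set -euo pipefail

setting_value() {
  # $1 = setting name; leading whitespace on the line is ignored
  awk -v key="$1" '
    { line = $0; sub(/^[ \t]+/, "", line) }
    index(line, key ": ") == 1 { print substr(line, length(key) + 3); exit }'
}

# Usage on a cluster node (pcs 0.9 syntax):
#   pcs resource defaults | setting_value resource-stickiness          # expect 0 after step 4
#   pcs property show stonith-enabled | setting_value stonith-enabled  # expect true after step 5
```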

Test:

[root@toto2 ~]# echo c >/proc/sysrq-trigger # crash toto2's kernel to simulate a node failure
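Writing `c` to /proc/sysrq-trigger crashes the kernel of toto2 immediately, so the surviving node has to fence it and take over the group. A minimal sketch for watching that from toto1, assuming the `pcs status` layout shown earlier; `wait_for_failover`, `STATUS_CMD`, `TIMEOUT` and `INTERVAL` are hypothetical names, and the timeout should be long enough to cover fencing plus the group restart.

```shell
#!/usr/bin/env bash
# Poll the cluster until a resource is Started on a node other than the failed one.
set -euo pipefail

STATUS_CMD=${STATUS_CMD:-"pcs status"}  # command that prints cluster status
TIMEOUT=${TIMEOUT:-180}                 # seconds; must cover fencing + restart
INTERVAL=${INTERVAL:-5}                 # seconds between polls

wait_for_failover() {
  local failed=$1 res=${2:-mariadb} node waited=0
  while [ "$waited" -lt "$TIMEOUT" ]; do
    # pcs may fail while the cluster is reconfiguring; treat that as "not yet"
    node=$( { $STATUS_CMD 2>/dev/null || true; } | awk -v res="$res" '
      NF >= 3 && $(NF - 1) == "Started" {
        for (i = 1; i < NF - 1; i++) if ($i == res && found == "") found = $NF
      }
      END { if (found != "") print found }')
    if [ -n "$node" ] && [ "$node" != "$failed" ]; then
      echo "$res started on $node after ${waited}s"
      return 0
    fi
    sleep "$INTERVAL"
    waited=$((waited + INTERVAL))
  done
  echo "timed out after ${TIMEOUT}s waiting for $res to leave $failed" >&2
  return 1
}

# Usage on the surviving node, right after crashing toto2:
#   wait_for_failover toto2
```

`wait_for_failover toto2` prints the node that took over and roughly how long it took, or fails after `TIMEOUT` seconds.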