HAProxy + Pacemaker lab configuration:

server1, server2: cluster nodes

server3, server4: backend servers

Configure HAProxy on server1:

[root@server1 ~]# ls

asciidoc-8.4.5-4.1.el6.noarch.rpm libnfnetlink-devel-1.0.0-1.el6.x86_64.rpm

haproxy-1.6.11.tar.gz lvs-fullnat-synproxy

keepalived-2.0.6 Lvs-fullnat-synproxy.tar.gz

keepalived-2.0.6.tar.gz newt-devel-0.52.11-3.el6.x86_64.rpm

kernel-2.6.32-220.23.1.el6.src.rpm rpmbuild

ldirectord-3.9.5-3.1.x86_64.rpm slang-devel-2.2.1-1.el6.x86_64.rpm

[root@server1 ~]# yum install rpm-build -y   # install rpm-build, which provides the rpmbuild tool for building RPM packages

[root@server1 ~]# rpmbuild -tb haproxy-1.6.11.tar.gz

error: Failed build dependencies:

pcre-devel is needed by haproxy-1.6.11-1.x86_64

[root@server1 ~]# yum install pcre-devel -y   # resolve the missing build dependency

[root@server1 ~]# rpmbuild -tb haproxy-1.6.11.tar.gz   # build the binary RPM from the tarball

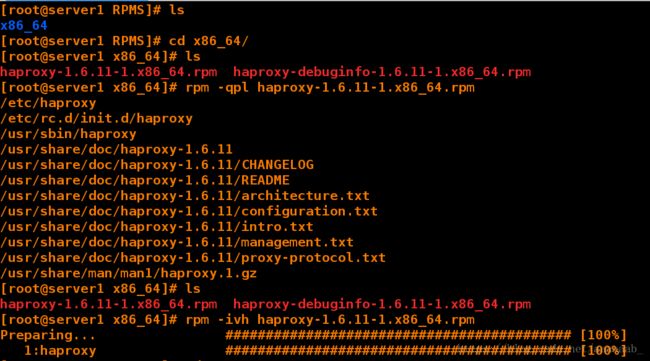

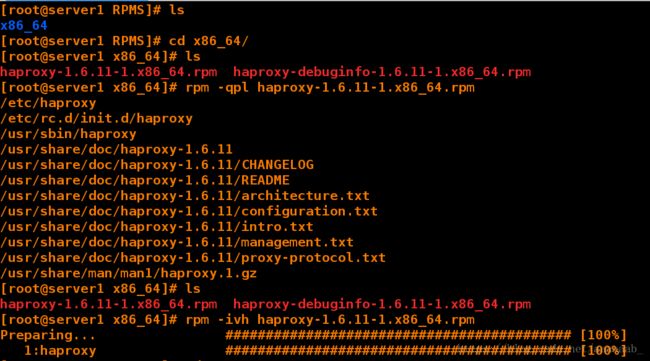

[root@server1 ~]# cd rpmbuild/RPMS/

[root@server1 RPMS]# ls

x86_64

[root@server1 RPMS]# cd x86_64/

[root@server1 x86_64]# ls

haproxy-1.6.11-1.x86_64.rpm haproxy-debuginfo-1.6.11-1.x86_64.rpm

[root@server1 x86_64]# rpm -qpl haproxy-1.6.11-1.x86_64.rpm   # list the files the package will install

/etc/haproxy

/etc/rc.d/init.d/haproxy

/usr/sbin/haproxy

/usr/share/doc/haproxy-1.6.11

/usr/share/doc/haproxy-1.6.11/CHANGELOG

/usr/share/doc/haproxy-1.6.11/README

/usr/share/doc/haproxy-1.6.11/architecture.txt

/usr/share/doc/haproxy-1.6.11/configuration.txt

/usr/share/doc/haproxy-1.6.11/intro.txt

/usr/share/doc/haproxy-1.6.11/management.txt

/usr/share/doc/haproxy-1.6.11/proxy-protocol.txt

/usr/share/man/man1/haproxy.1.gz

[root@server1 x86_64]# ls

haproxy-1.6.11-1.x86_64.rpm haproxy-debuginfo-1.6.11-1.x86_64.rpm

[root@server1 x86_64]# rpm -ivh haproxy-1.6.11-1.x86_64.rpm   # install HAProxy

Preparing... ########################################### [100%]

1:haproxy ########################################### [100%]

[root@server1 x86_64]# cd

[root@server1 ~]# ls

asciidoc-8.4.5-4.1.el6.noarch.rpm libnfnetlink-devel-1.0.0-1.el6.x86_64.rpm

haproxy-1.6.11.tar.gz lvs-fullnat-synproxy

keepalived-2.0.6 Lvs-fullnat-synproxy.tar.gz

keepalived-2.0.6.tar.gz newt-devel-0.52.11-3.el6.x86_64.rpm

kernel-2.6.32-220.23.1.el6.src.rpm rpmbuild

ldirectord-3.9.5-3.1.x86_64.rpm slang-devel-2.2.1-1.el6.x86_64.rpm

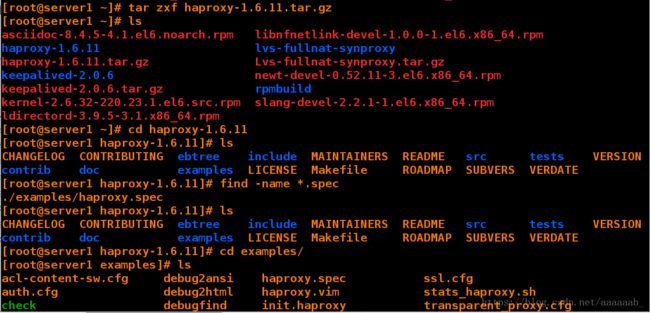

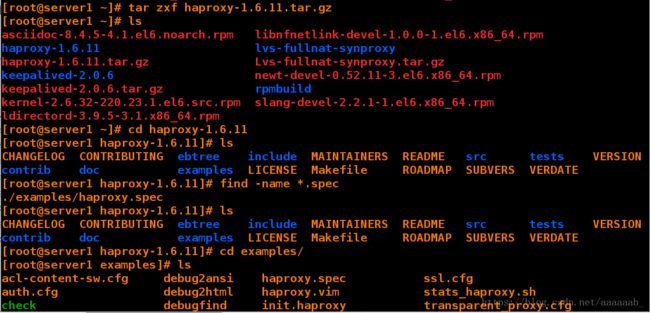

[root@server1 ~]# tar zxf haproxy-1.6.11.tar.gz   # unpack the source tarball

[root@server1 ~]# ls

asciidoc-8.4.5-4.1.el6.noarch.rpm libnfnetlink-devel-1.0.0-1.el6.x86_64.rpm

haproxy-1.6.11 lvs-fullnat-synproxy

haproxy-1.6.11.tar.gz Lvs-fullnat-synproxy.tar.gz

keepalived-2.0.6 newt-devel-0.52.11-3.el6.x86_64.rpm

keepalived-2.0.6.tar.gz rpmbuild

kernel-2.6.32-220.23.1.el6.src.rpm slang-devel-2.2.1-1.el6.x86_64.rpm

ldirectord-3.9.5-3.1.x86_64.rpm

[root@server1 ~]

[root@server1 haproxy-1.6.11]

CHANGELOG CONTRIBUTING ebtree include MAINTAINERS README src tests VERSION

contrib doc examples LICENSE Makefile ROADMAP SUBVERS VERDATE

[root@server1 haproxy-1.6.11]

./examples/haproxy.spec

[root@server1 haproxy-1.6.11]

CHANGELOG CONTRIBUTING ebtree include MAINTAINERS README src tests VERSION

contrib doc examples LICENSE Makefile ROADMAP SUBVERS VERDATE

[root@server1 haproxy-1.6.11]

[root@server1 examples]# ls

acl-content-sw.cfg debug2ansi haproxy.spec ssl.cfg

auth.cfg debug2html haproxy.vim stats_haproxy.sh

check debugfind init.haproxy transparent_proxy.cfg

check.conf errorfiles option-http_proxy.cfg

content-sw-sample.cfg haproxy.init seamless_reload.txt

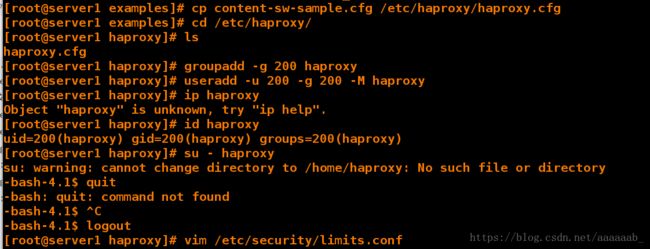

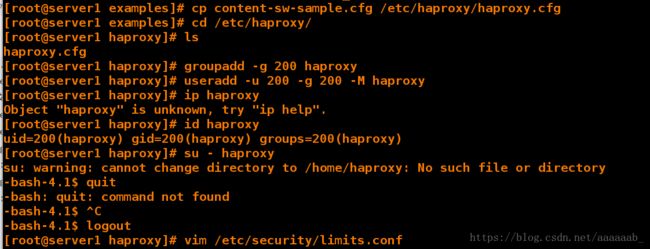

[root@server1 examples]# cp content-sw-sample.cfg /etc/haproxy/haproxy.cfg   # use the sample as the base configuration

[root@server1 examples]# cd /etc/haproxy/

[root@server1 haproxy]# ls

haproxy.cfg

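[root@server1 haproxy]# groupadd -g 200 haproxy   # create the group

[root@server1 haproxy]# useradd -u 200 -g 200 -M haproxy   # create the user (-M: no home directory)

[root@server1 haproxy]# id haproxy   # check the user

uid=200(haproxy) gid=200(haproxy) groups=200(haproxy)

[root@server1 haproxy]# su - haproxy   # switching to haproxy works; it only provides a shell, and there is no home directory because of -M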

su: warning: cannot change directory to /home/haproxy: No such file or directory

-bash-4.1$ logout

[root@server1 haproxy]

[root@server1 haproxy]

[root@server1 haproxy]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

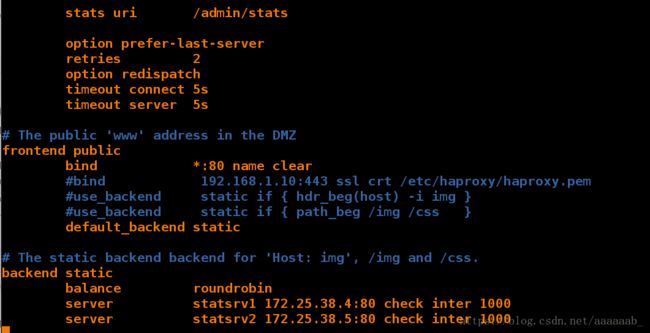
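The edited haproxy.cfg is not reproduced in the text. As a rough sketch only, a minimal configuration for this round-robin setup could look like the one written by the helper below. The frontend/backend names (public, static/statsrv1, static/statsrv2) and the /admin/stats URI come from the log lines later in this section. The backend addresses (server3 = 172.25.38.4, server4 = 172.25.38.5), the local0 log facility, the timeouts and the helper name write_haproxy_cfg are assumptions.

```shell
#!/bin/sh
# write_haproxy_cfg FILE: write a minimal haproxy.cfg sketch for the
# round-robin lab. Addresses, timeouts and the log facility are assumptions.
write_haproxy_cfg() {
  cat > "$1" <<'EOF'
global
    # send logs to the local rsyslog over UDP, facility local0
    log         127.0.0.1 local0
    # run as the haproxy user/group (uid/gid 200) created above
    uid         200
    gid         200
    maxconn     4096
    daemon

defaults
    mode        http
    log         global
    option      httplog
    option      dontlognull
    retries     3
    timeout connect 5s
    timeout client  30s
    timeout server  30s
    # statistics page
    stats uri   /admin/stats

frontend public
    bind        *:80
    default_backend static

backend static
    balance     roundrobin
    # "check" turns on health checks (every 1000 ms here); a server that
    # fails them is taken out of the rotation until it passes again
    server      statsrv1 172.25.38.4:80 check inter 1000
    server      statsrv2 172.25.38.5:80 check inter 1000
EOF
}
```

For example, `write_haproxy_cfg /tmp/haproxy.cfg && haproxy -c -f /tmp/haproxy.cfg` checks the syntax without starting anything.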

On both backends, start Apache and write a test page:

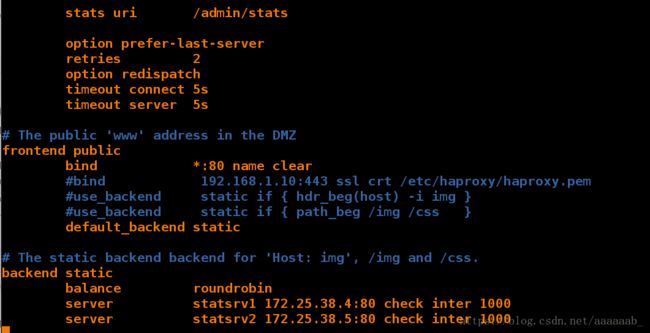

Test HAProxy round-robin balancing in the browser:

Test HAProxy health checks in the browser:

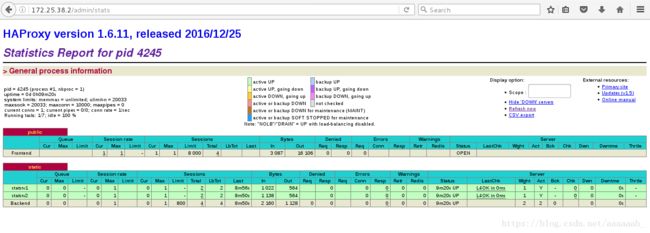

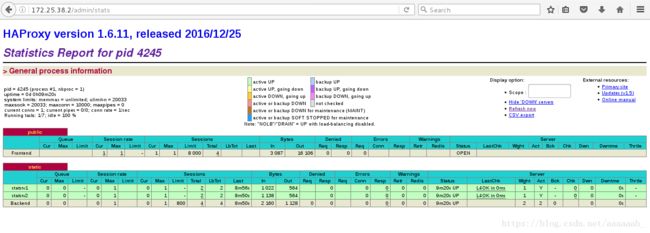

Check the HAProxy stats page in the browser:

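HAProxy provides these load-balancing algorithms (HAProxy 1.5 and later also have balance first, not covered here):

1. balance roundrobin

2. balance static-rr

3. balance leastconn

4. balance source

5. balance uri

6. balance url_param

7. balance hdr(name)

8. balance rdp-cookie(name)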

Demonstrating the source algorithm:

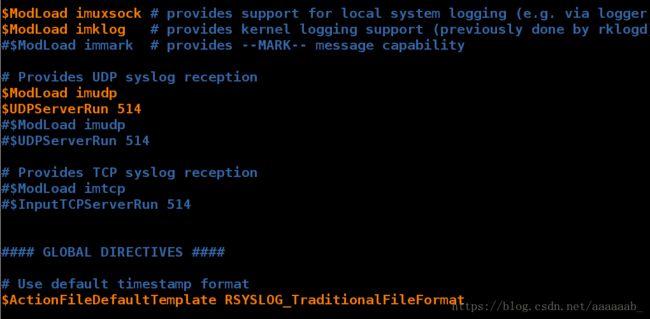

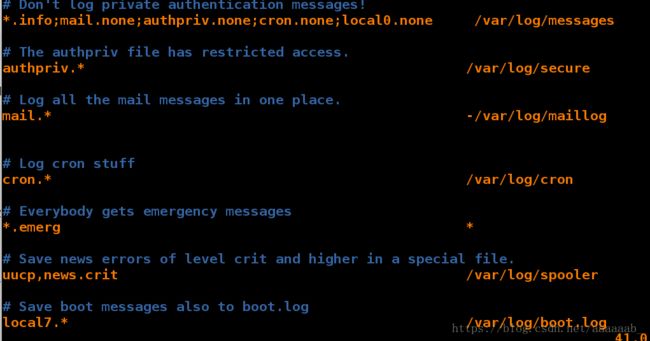

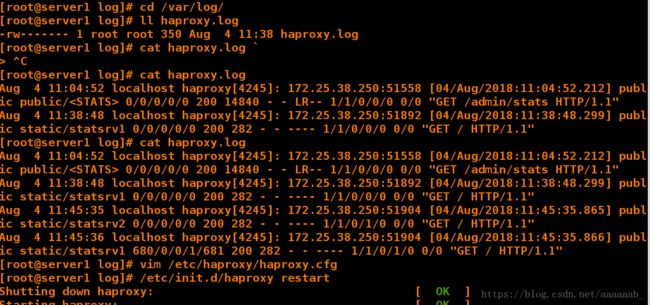

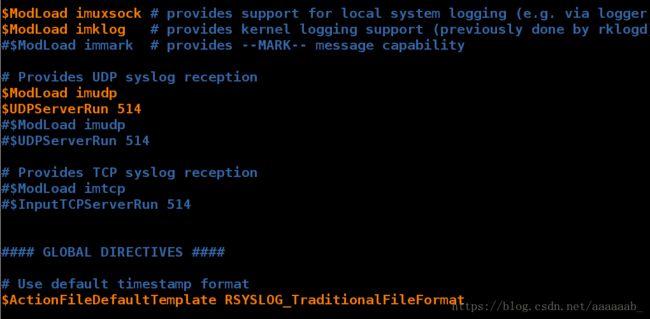

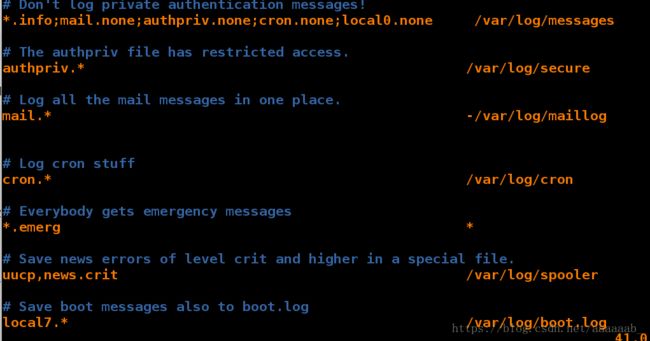

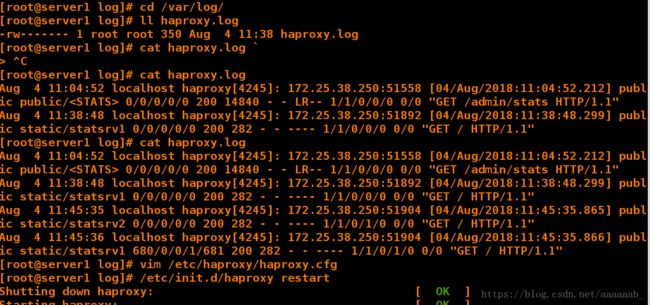

[root@server1 log]

[root@server1 log]# /etc/init.d/rsyslog restart   # restart the service

Shutting down system logger: [ OK ]

Starting system logger: [ OK ]
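The rsyslog changes made before this restart are not shown. A typical RHEL 6 setup enables rsyslog's UDP input and sends facility local0 to /var/log/haproxy.log. That setup is assumed here, paired with a `log 127.0.0.1 local0` line in haproxy.cfg. The sketch below uses a hypothetical helper, enable_haproxy_syslog, to apply those settings to a given rsyslog.conf, and running it twice does not duplicate any lines. Depending on the stock rules, the same messages may also end up in /var/log/messages unless local0.none is added to that rule.

```shell
#!/bin/sh
# enable_haproxy_syslog [CONF]: let rsyslog (legacy RHEL 6 syntax) receive
# HAProxy's UDP syslog and write facility local0 to /var/log/haproxy.log.
# Idempotent: running it again does not add duplicate lines.
enable_haproxy_syslog() {
  conf=${1:-/etc/rsyslog.conf}
  # uncomment the stock UDP input lines if they exist but are disabled
  sed -i -e 's/^#\$ModLoad imudp/$ModLoad imudp/' \
         -e 's/^#\$UDPServerRun 514/$UDPServerRun 514/' "$conf"
  # append whatever is still missing
  grep -q '^\$ModLoad imudp' "$conf" || echo '$ModLoad imudp' >> "$conf"
  grep -q '^\$UDPServerRun 514' "$conf" || echo '$UDPServerRun 514' >> "$conf"
  grep -q '^local0\.\*' "$conf" ||
    echo 'local0.*    /var/log/haproxy.log' >> "$conf"
}
```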

[root@server1 log]# cd /var/log/

[root@server1 log]# ll haproxy.log

-rw------- 1 root root 350 Aug 4 11:38 haproxy.log

[root@server1 log]# cat haproxy.log   # each page refresh adds a new log entry

Aug 4 11:04:52 localhost haproxy[4245]: 172.25.38.250:51558 [04/Aug/2018:11:04:52.212] public public/ 0/0/0/0/0 200 14840 - - LR-- 1/1/0/0/0 0/0 "GET /admin/stats HTTP/1.1"

Aug 4 11:38:48 localhost haproxy[4245]: 172.25.38.250:51892 [04/Aug/2018:11:38:48.299] public static/statsrv1 0/0/0/0/0 200 282 - - ---- 1/1/0/0/0 0/0 "GET / HTTP/1.1"

[root@server1 log]# cat haproxy.log

Aug 4 11:04:52 localhost haproxy[4245]: 172.25.38.250:51558 [04/Aug/2018:11:04:52.212] public public/ 0/0/0/0/0 200 14840 - - LR-- 1/1/0/0/0 0/0 "GET /admin/stats HTTP/1.1"

Aug 4 11:38:48 localhost haproxy[4245]: 172.25.38.250:51892 [04/Aug/2018:11:38:48.299] public static/statsrv1 0/0/0/0/0 200 282 - - ---- 1/1/0/0/0 0/0 "GET / HTTP/1.1"

Aug 4 11:45:35 localhost haproxy[4245]: 172.25.38.250:51904 [04/Aug/2018:11:45:35.865] public static/statsrv2 0/0/0/0/0 200 282 - - ---- 1/1/0/1/0 0/0 "GET / HTTP/1.1"

Aug 4 11:45:36 localhost haproxy[4245]: 172.25.38.250:51904 [04/Aug/2018:11:45:35.866] public static/statsrv1 680/0/0/1/681 200 282 - - ---- 1/1/0/1/0 0/0 "GET / HTTP/1.1"
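To see round-robin at work in the log rather than in the browser, you can count the backend/server field. This is a minimal sketch that assumes the syslog line layout shown above, where the 9th whitespace-separated field is backend/server. count_backend_hits is a hypothetical helper name.

```shell
#!/bin/sh
# count_backend_hits: read HAProxy HTTP log lines (syslog format) on stdin
# and print "backend/server count" for every backend/server pair seen.
count_backend_hits() {
  # field 5 is "haproxy[PID]:", field 9 is "backend/server"
  awk '$5 ~ /^haproxy\[[0-9]+\]:$/ { hits[$9]++ }
       END { for (s in hits) print s, hits[s] }' | sort
}
```

Usage: `count_backend_hits < /var/log/haproxy.log`. For the four lines above, it reports static/statsrv1 twice and static/statsrv2 once.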

[root@server1 log]

[root@server1 log]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

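With the source algorithm, stop and then restart Apache on server3, testing in the browser each time (bbs.westos.com):

After Apache on server3 is stopped, the page served is server4's default page.

After it is restarted, requests go to server3 again. This comes from the algorithm itself: source hashes the client IP over the servers that are currently up, so a client keeps reaching the same server only while the set of available servers does not change.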
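The behavior above can be modeled with a toy version of source hashing. This is only an illustration. It uses a simple sum of the IP octets as the hash, not HAProxy's real hash function, so the server it picks for a given client may differ from the lab. What it does show is the property described above: the choice depends on the client IP and on which servers are currently up. pick_server is a hypothetical name.

```shell
#!/bin/sh
# pick_server CLIENT_IP SERVER...: toy model of "balance source".
# Hashes the client IP (a plain octet sum, NOT HAProxy's real hash) modulo
# the number of servers currently up, and prints the chosen server.
pick_server() {
  ip=$1
  shift
  [ "$#" -gt 0 ] || return 1            # no server is up
  sum=0
  for octet in $(printf '%s\n' "$ip" | tr '.' ' '); do
    sum=$((sum + octet))
  done
  idx=$((sum % $# + 1))                 # 1-based index into the live servers
  i=1
  for server in "$@"; do
    if [ "$i" -eq "$idx" ]; then
      printf '%s\n' "$server"
      return 0
    fi
    i=$((i + 1))
  done
}
```

Take the client 172.25.38.1 as an example (octet sum 236). With both servers up, `pick_server 172.25.38.1 server3 server4` picks server3. With server3 down, `pick_server 172.25.38.1 server4` picks server4. Once server3 is back, the client returns to server3, as in the lab.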

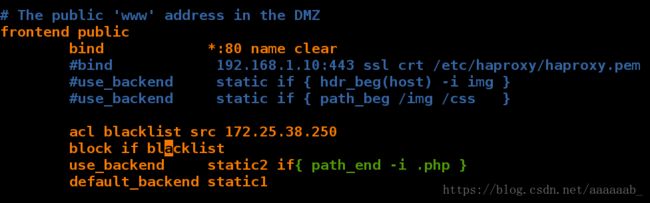

Test dynamic/static separation with a PHP page:

Edit the HAProxy configuration file on server1:

[root@server1 log]

[root@server1 log]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

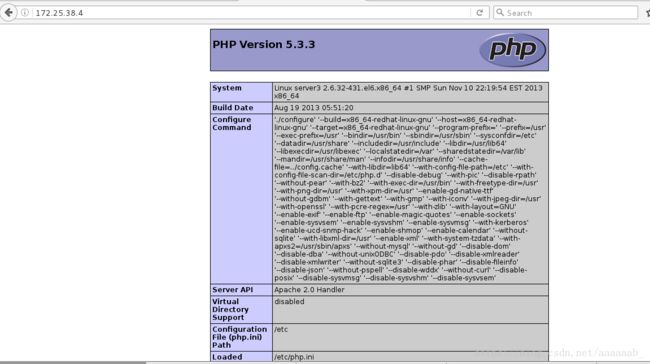

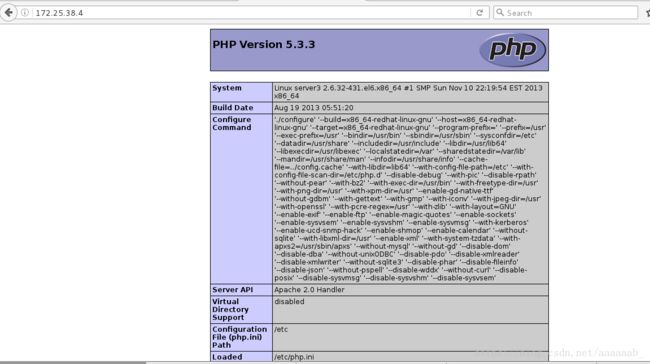

On server3 (172.25.38.4), install PHP for testing:

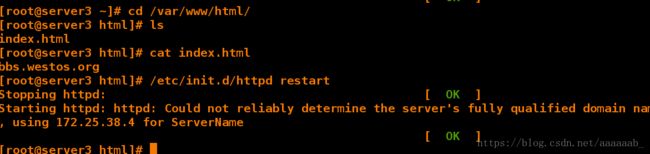

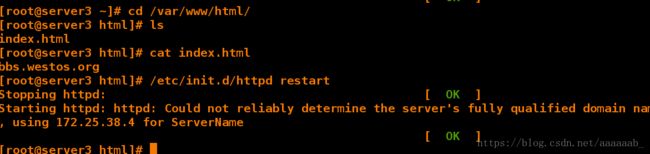

[root@server3 html]

[root@server3 html]

[root@server3 html]

[root@server3 html]

index.html

[root@server3 html]

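Browsing directly to server3's own IP works, which confirms the service itself is OK:

On server1, edit the configuration so that different requests get different pages. Apache serves the static content and PHP is handled separately, which implements dynamic/static separation: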

[root@server1 log]

[root@server1 log]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

Requesting different files in the browser returns different pages, so dynamic/static separation works:

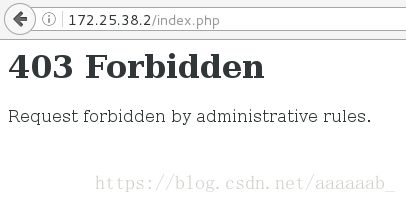

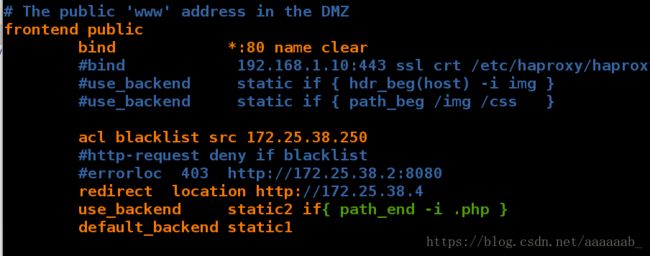

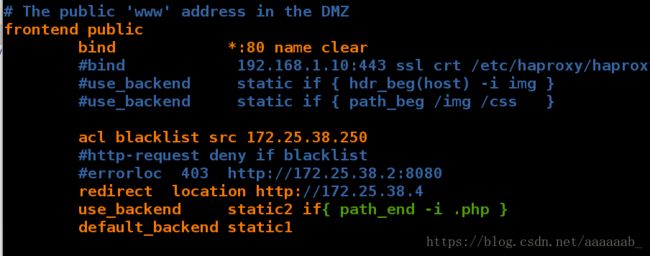
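The dynamic/static split is configured in the screenshots rather than in the text. One common way to express it in HAProxy is an ACL on the URL path combined with use_backend. The helper below only prints such a fragment. The backend names dynamic/static, the server name dynsrv1 and the address mapping (server3 = 172.25.38.4 for PHP, server4 = 172.25.38.5 for static pages) are assumptions for illustration.

```shell
#!/bin/sh
# php_split_snippet: print a hypothetical frontend that sends *.php requests
# to server3 (backend "dynamic") and everything else to server4 ("static").
php_split_snippet() {
  cat <<'EOF'
frontend public
    bind        *:80
    # path_end -i matches the end of the URL path, case-insensitively
    acl url_php path_end -i .php
    use_backend dynamic if url_php
    default_backend static

backend static
    balance     roundrobin
    server      statsrv2 172.25.38.5:80 check

backend dynamic
    balance     roundrobin
    server      dynsrv1 172.25.38.4:80 check
EOF
}
```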

On server1, edit the configuration to implement redirection:

[root@server1 log]

[root@server1 log]

Shutting down haproxy: [ OK ]

Starting haproxy: [WARNING] 215/130011 (4415) : parsing [/etc/haproxy/haproxy.cfg:44] : The 'block' directive is now deprecated in favor of 'http-request deny' which uses the exact same syntax. The rules are translated but support might disappear in a future version.

[ OK ]

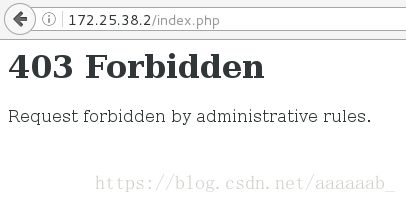

Testing in the browser now returns a 403 error:

On server1, configure it so that clients do not see the raw 403 error:

[root@server1 log]

[root@server1 log]

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Setting up Install Process

Package httpd-2.2.15-29.el6_4.x86_64 already installed and latest version

Nothing to do

[root@server1 log]

[root@server1 log]

[root@server1 html]

index.html

[root@server1 html]

server1

[root@server1 html]

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.38.2 for ServerName

[ OK ]

[root@server1 html]

Stopping httpd: [ OK ]

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.38.2 for ServerName

[ OK ]

[root@server1 html]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

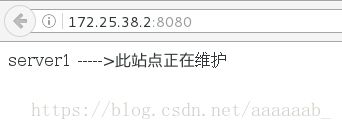

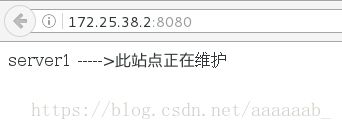
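The configuration behind the 403 test and the error-page redirect appears only in the screenshots. The hypothetical helper below prints a plausible fragment with two parts. The first is http-request deny, the replacement suggested by the 'block' deprecation warning above. The second is errorloc, which turns HAProxy's 403 into a 302 to a page served by httpd on server1. Two values are assumptions: the blacklisted source address (the browser host seen in the logs) and httpd listening on port 8080. httpd cannot share port 80 with an HAProxy bound to *:80 on the same host.

```shell
#!/bin/sh
# deny_snippet: print a hypothetical frontend that denies one client and
# replaces HAProxy's own 403 page with a redirect to server1's httpd.
deny_snippet() {
  cat <<'EOF'
frontend public
    bind        *:80
    # hypothetical blacklist: the browser host seen in the logs
    acl blacklist src 172.25.38.250
    # HAProxy 1.6 replacement for the deprecated "block" directive
    http-request deny if blacklist
    # answer HAProxy's 403 with a 302 to a page on server1's httpd
    # (assumes httpd was moved to port 8080)
    errorloc 403 http://172.25.38.2:8080
    default_backend static
EOF
}
```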

Test in the browser:

The redirect can also be seen from another host:

[root@server2 html]

[root@server2 html]

Change the configuration on server1:

[root@server1 html]

[root@server1 html]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

server2 no longer sees the redirect:

Change the configuration on server1 again to test redirection:

[root@server1 html]

[root@server1 html]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

Test in the browser:

When we visit Taobao via taobao.com, the request is redirected twice:

[root@foundation38 Desktop]# curl -I taobao.com   # first redirected to http://www.taobao.com/

HTTP/1.1 302 Found

Server: Tengine

Date: Sat, 04 Aug 2018 05:21:43 GMT

Content-Type: text/html

Content-Length: 258

Connection: keep-alive

Location: http://www.taobao.com/

[root@foundation38 Desktop]# curl -I www.taobao.com   # then redirected to https://www.taobao.com/ for encryption (HTTPS)

HTTP/1.1 302 Found

Server: Tengine

Date: Sat, 04 Aug 2018 05:21:48 GMT

Content-Type: text/html

Content-Length: 258

Connection: keep-alive

Location: https://www.taobao.com/

Set-Cookie: thw=cn

Strict-Transport-Security: max-age=31536000
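curl -I shows only one hop at a time. To extract the redirect target programmatically, pull the Location header out of the response headers. redirect_target below is a hypothetical helper that reads headers on stdin and strips the trailing carriage return that HTTP header lines carry.

```shell
#!/bin/sh
# redirect_target: read HTTP response headers (e.g. from "curl -sI URL")
# on stdin and print the value of the first Location header, if any.
redirect_target() {
  # header names are case-insensitive; drop the CR at the end of the line
  awk 'tolower($1) == "location:" { sub(/\r$/, "", $2); print $2; exit }'
}
```

Usage: `curl -sI taobao.com | redirect_target` prints the next hop. `curl -sIL taobao.com` makes curl follow the whole chain itself.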

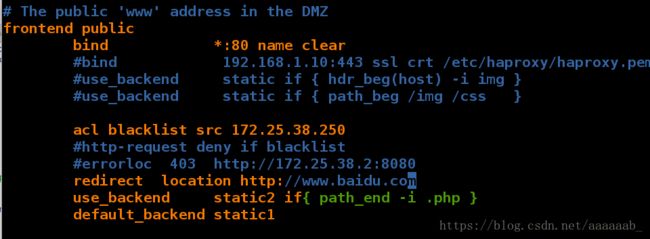

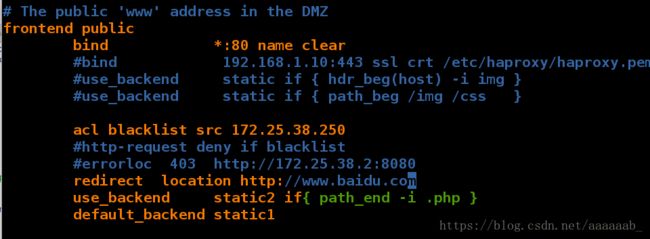

On server1, redirect to a different site:

[root@server1 html]

[root@server1 html]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

Entering 172.25.38.2 (server1's IP) in the browser now jumps to Baidu automatically:

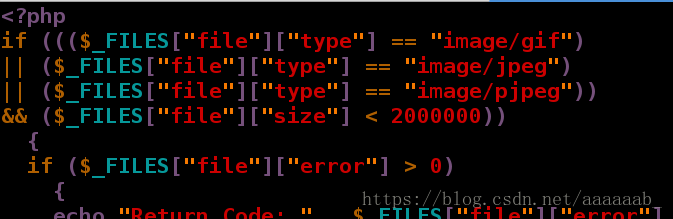
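You can write a redirect like this with HAProxy's redirect location rule, which answers with a 302 by default without contacting a backend. The helper below just prints such a frontend for a given URL. The frontend layout and the helper name are assumptions, and the actual rule used in the lab is only in the screenshot.

```shell
#!/bin/sh
# redirect_snippet URL: print a hypothetical frontend that answers every
# request with a redirect (302 by default) to URL.
redirect_snippet() {
  target=${1:?usage: redirect_snippet URL}
  cat <<EOF
frontend public
    bind        *:80
    # HAProxy replies itself with a Location header; no backend is contacted
    redirect location $target
    default_backend static
EOF
}
```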

On server1, configure image uploads (read/write separation):

[root@server1 html]

[root@server1 html]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

On server4, set up the default document directory:

[root@server4 html]

index.html

[root@server4 html]

[root@server4 html]

[root@server4 images]

[root@server4 images]

redhat.jpg

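In the browser, check the read/write separation setup. The request goes to server1's IP, but the content comes from backend server4's document directory:

Test read/write separation by uploading and downloading an image: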

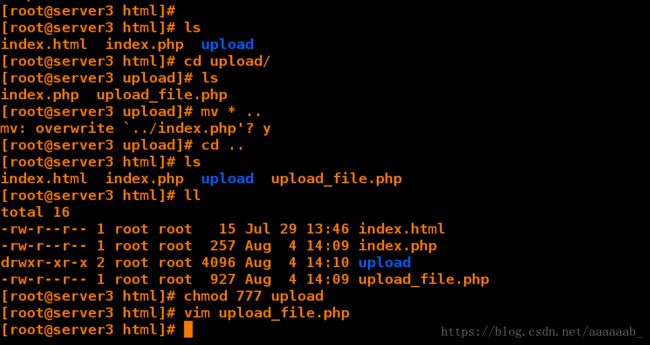

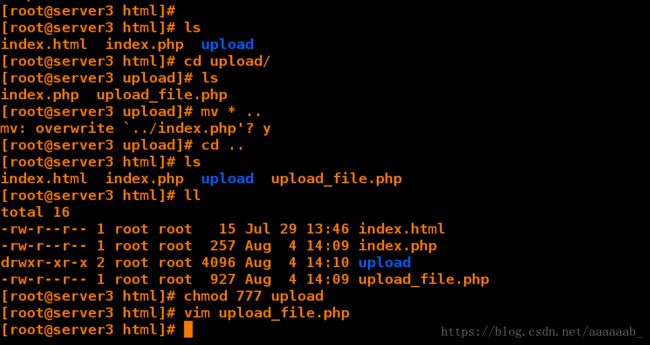

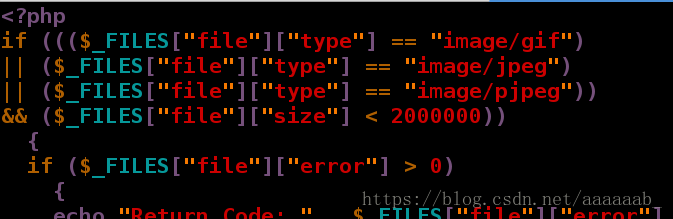

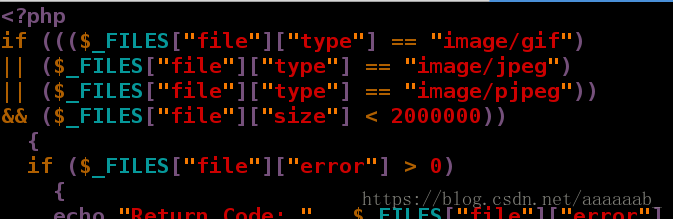

On server3:

[root@server3 html]

index.html index.php upload

[root@server3 html]

[root@server3 upload]

index.php upload_file.php

[root@server3 upload]

mv: overwrite `../index.php'? y   # move the files into Apache's default document root

[root@server3 upload]# cd ..

[root@server3 html]# ls

index.html index.php upload upload_file.php

[root@server3 html]# ll

total 16

-rw-r--r-- 1 root root 15 Jul 29 13:46 index.html

-rw-r--r-- 1 root root 257 Aug 4 14:09 index.php

drwxr-xr-x 2 root root 4096 Aug 4 14:10 upload

-rw-r--r-- 1 root root 927 Aug 4 14:09 upload_file.php

[root@server3 html]# chmod 777 upload   # make the upload directory writable by Apache

[root@server3 html]

Make the matching configuration on server4:

[root@server4 html]

images index.html upload

[root@server4 html]

[root@server4 upload]

index.php upload_file.php

[root@server4 upload]

[root@server4 upload]

[root@server4 html]

images index.html index.php upload upload_file.php

[root@server4 html]

[root@server4 html]

[root@server4 html]

[root@server4 html]

Starting httpd:

[root@server4 html]

Stopping httpd: [ OK ]

Starting httpd: httpd: Could not reliably determine the server's fully qualified domain name, using 172.25.38.5 for ServerName

[ OK ]

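Uploading through server1's IP puts the file on server3. Page views are served by server4 by default, but the upload (a write) is sent to server3, so read/write separation works: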

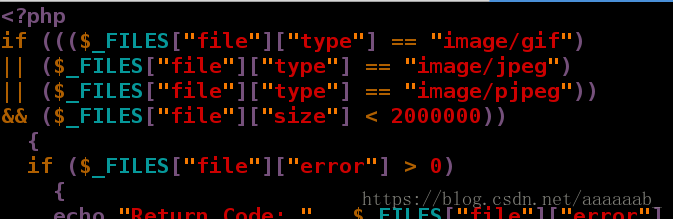

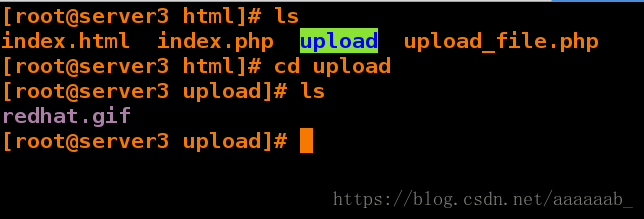

Check on server3:

[root@server3 html]

index.html index.php upload upload_file.php

[root@server3 html]

[root@server3 upload]

redhat.gif
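The read/write separation observed here sends page views and downloads to server4 and uploads to server3. You can express this in HAProxy by matching the HTTP method. The sketch prints such a fragment. The backend and server names are hypothetical, and treating POST and PUT as writes is an assumption about how the lab's rule was written. It would also explain why the upload pages exist on both backends: the GET for the form can be served by server4 while the POST goes to server3.

```shell
#!/bin/sh
# rw_split_snippet: print a hypothetical frontend that sends writes
# (POST/PUT) to server3 and all other requests to server4.
rw_split_snippet() {
  cat <<'EOF'
frontend public
    bind        *:80
    # several "acl" lines with the same name are ORed together
    acl write_method method POST
    acl write_method method PUT
    use_backend write if write_method
    default_backend read

backend read
    # page views and image downloads come from server4
    server      readsrv 172.25.38.5:80 check

backend write
    # uploads land on server3, which hosts upload_file.php and upload/
    server      writesrv 172.25.38.4:80 check
EOF
}
```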

What is Pacemaker:

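Consider a cluster in which different services, such as a database and Apache, run on several nodes. There is also a standby machine that takes over when either service can no longer run on its original node. If the Apache service or the database service on one machine fails, the service can then be started on another node right away. Of course, all three nodes must have these services installed and configured in advance. Adding nodes in this way provides high availability and removes single points of failure, which is the main job of HA.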

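Pacemaker is the resource manager for high availability (HA) on Linux. It sits in the resource management and resource agent layers of the HA cluster stack and does not itself deliver the heartbeat messages between nodes. (Heartbeat messaging is handled by Corosync, which is worth a brief look if you are interested. Corosync is not the only messaging component; Heartbeat, for example, can be used instead.) Pacemaker manages resources through scripts: the management of a service can be written in shell, Python or another scripting language. When the same service is set up on several nodes and it fails on one of them, Pacemaker uses the resource script to detect that the service is unavailable on that node.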

Pacemaker is only the HA resource manager, so do not assume it can control resources directly: if a resource has no script for it, Pacemaker cannot manage it.
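Pacemaker's lsb: resource class, used later in this lab as lsb:haproxy, shows what these scripts look like in practice. It runs an init script with start, stop and status and interprets the LSB exit codes. Most importantly, status must return 0 when the service is running and 3 when it is stopped. Below is a minimal sketch of such a script. The service name demo_svc, the PIDFILE variable and the background sleep standing in for a real daemon are all hypothetical.

```shell
#!/bin/sh
# Minimal sketch of an LSB-style init script (hypothetical "demo_svc").
# A background "sleep" stands in for a real daemon.
PIDFILE=${PIDFILE:-/tmp/demo_svc.pid}

demo_svc() {
  case "$1" in
    start)
      # start must succeed if the service is already running
      demo_svc status >/dev/null && return 0
      sleep 100000 >/dev/null 2>&1 &
      echo "$!" > "$PIDFILE"
      ;;
    stop)
      # stopping an already stopped service also counts as success
      if [ -f "$PIDFILE" ]; then
        kill "$(cat "$PIDFILE")" 2>/dev/null || true
        rm -f "$PIDFILE"
      fi
      return 0
      ;;
    status)
      if [ -f "$PIDFILE" ] && kill -0 "$(cat "$PIDFILE")" 2>/dev/null; then
        echo "running"; return 0            # LSB: program is running
      elif [ -f "$PIDFILE" ]; then
        echo "dead, pid file exists"; return 1
      fi
      echo "stopped"; return 3              # LSB: program is not running
      ;;
    *)
      echo "usage: demo_svc {start|stop|status}" >&2
      return 2                              # LSB: invalid argument
      ;;
  esac
}

# When installed as /etc/init.d/demo_svc, dispatch on the first argument.
if [ "$#" -gt 0 ]; then
  demo_svc "$@"
  exit $?
fi
```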

Pacemaker internal components:

Configure Pacemaker on server2:

[root@server2 ~]# yum install pacemaker corosync -y   # install the components

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Setting up Install Process

Package pacemaker-1.1.10-14.el6.x86_64 already installed and latest version

Package corosync-1.4.1-17.el6.x86_64 already installed and latest version

Nothing to do

[root@server2 ~]# cd /etc/corosync/

[root@server2 corosync]# ls

amf.conf.example corosync.conf.example service.d

corosync.conf corosync.conf.example.udpu uidgid.d

[root@server2 corosync]# cp corosync.conf.example corosync.conf

cp: overwrite `corosync.conf

[root@server2 corosync]# vim corosync.conf   # edit the configuration file

[root@server2 corosync]# scp corosync.conf server1:/etc/corosync/   # copy the edited file to the other cluster node

root@server1's password:

corosync.conf 100% 479 0.5KB/s 00:00

[root@server2 corosync]# /etc/init.d/corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]
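The corosync.conf edits themselves are not listed. This lab runs corosync 1.x with the Pacemaker plugin, which matches the cluster-infrastructure="classic openais (with plugin)" value shown later. For that setup the usual changes are setting bindnetaddr and adding a service block that loads Pacemaker. With ver: 0, corosync starts Pacemaker itself, which is why only the corosync init script is started on each node. The sketch below writes such a file. The multicast address and port are the stock example values and are assumptions; on a shared classroom network each cluster would need its own mcastaddr/mcastport.

```shell
#!/bin/sh
# write_corosync_conf FILE: sketch of corosync 1.x settings for this lab
# (bindnetaddr from eth0's 172.25.38.0/24; multicast values are assumptions).
write_corosync_conf() {
  cat > "$1" <<'EOF'
totem {
        version: 2
        secauth: off
        interface {
                ringnumber: 0
                # network address of the cluster subnet, not a host IP
                bindnetaddr: 172.25.38.0
                mcastaddr: 226.94.1.1
                mcastport: 5405
                ttl: 1
        }
}

logging {
        to_syslog: yes
}

# load Pacemaker as a corosync plugin; ver: 0 lets corosync start it
service {
        name: pacemaker
        ver: 0
}
EOF
}
```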

[root@server2 corosync]# cd

[root@server2 ~]# ls

anaconda-ks.cfg install.log pssh-2.3.1-2.1.x86_64.rpm

crmsh-1.2.6-0.rc2.2.1.x86_64.rpm install.log.syslog

[root@server2 ~]# yum install -y crmsh-1.2.6-0.rc2.2.1.x86_64.rpm pssh-2.3.1-2.1.x86_64.rpm   # install crmsh and pssh

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

Setting up Install Process

Examining crmsh-1.2.6-0.rc2.2.1.x86_64.rpm: crmsh-1.2.6-0.rc2.2.1.x86_64

crmsh-1.2.6-0.rc2.2.1.x86_64.rpm: does not update installed package.

Examining pssh-2.3.1-2.1.x86_64.rpm: pssh-2.3.1-2.1.x86_64

pssh-2.3.1-2.1.x86_64.rpm: does not update installed package.

Error: Nothing to do

Configure Pacemaker on server1:

[root@server1 ~]# yum install pacemaker corosync -y   # install the packages

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

HighAvailability | 3.9 kB 00:00

LoadBalancer | 3.9 kB 00:00

ResilientStorage | 3.9 kB 00:00

ScalableFileSystem | 3.9 kB 00:00

rhel-source | 3.9 kB 00:00

Setting up Install Process

Package pacemaker-1.1.10-14.el6.x86_64 already installed and latest version

Package corosync-1.4.1-17.el6.x86_64 already installed and latest version

Nothing to do

[root@server1 ~]# cd /etc/corosync/

[root@server1 corosync]# ls   # corosync.conf has already been copied over

amf.conf.example corosync.conf.example service.d

corosync.conf corosync.conf.example.udpu uidgid.d

[root@server1 corosync]# /etc/init.d/corosync start   # start the service

Starting Corosync Cluster Engine (corosync): [ OK ]

On server2, use crm to test:

[root@server2 ~]

crm(live)

crm(live)configure

node server1

node server2

primitive vip ocf:heartbeat:IPaddr2 \

params ip="172.25.38.250" cidr_netmask="24" \

op monitor interval="1min" \

meta target-role="Stopped"

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure

crm(live)

crm(live)resource

crm(live)resource

ERROR: syntax: delete vip

crm(live)resource

crm(live)

crm(live)

crm(live)configure

crm(live)configure

node server1

node server2

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure

crm(live)configure

crm(live)configure

crm(live)configure

bye

[root@server2 ~]

Connection to the CIB terminated

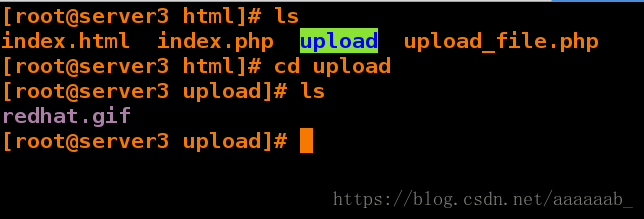

On server1, watch the configuration with the cluster monitor:

[root@server1 corosync]

Connection to the CIB terminated

Reconnecting...[root@server1 corosync]

[root@server1 corosync]

[root@server1 corosync]

Connection to the CIB terminated

Reconnecting...[root@server1 corosync]

1: lo: mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:cf:1f:ba brd ff:ff:ff:ff:ff:ff

inet 172.25.38.2/24 brd 172.25.38.255 scope global eth0

inet 172.25.38.100/24 brd 172.25.38.255 scope global secondary eth0

inet6 fe80::5054:ff:fecf:1fba/64 scope link

valid_lft forever preferred_lft forever

3: eth1: mtu 1500 qdisc noop state DOWN qlen 1000

link/ether 52:54:00:3e:17:9d brd ff:ff:ff:ff:ff:ff

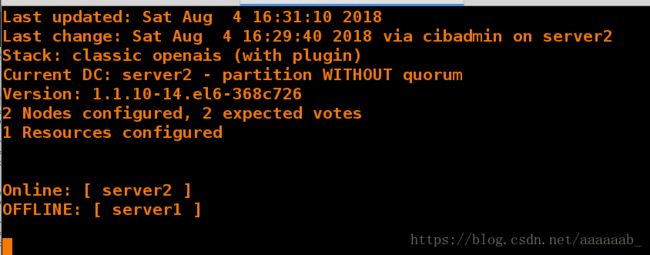

[root@server1 corosync]# /etc/init.d/corosync stop   # stop the service on server1; the monitor then shows server1 as OFFLINE

Signaling Corosync Cluster Engine (corosync) to terminate: [ OK ]

Waiting for corosync services to unload:. [ OK ]

[root@server1 corosync]

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@server1 corosync]

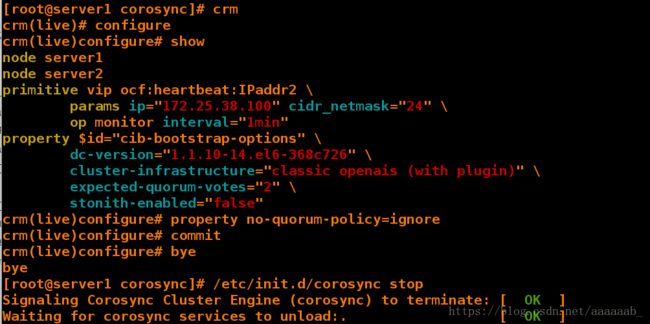

crm(live)

crm(live)configure

node server1

node server2

primitive vip ocf:heartbeat:IPaddr2 \

params ip="172.25.38.100" cidr_netmask="24" \

op monitor interval="1min"

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure

crm(live)configure

crm(live)configure

bye

[root@server1 corosync]

Signaling Corosync Cluster Engine (corosync) to terminate: [ OK ]

Waiting for corosync services to unload:. [ OK ]

[root@server1 corosync]

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@server1 corosync]

crm(live)

crm(live)configure

bye

[root@server1 corosync]

PID TTY TIME CMD

7492 pts/1 00:00:00 bash

8183 pts/1 00:00:00 ps

[root@server1 corosync]

[root@server1 haproxy]

haproxy.cfg

[root@server1 haproxy]

[root@server1 ~]# ls

asciidoc-8.4.5-4.1.el6.noarch.rpm libnfnetlink-devel-1.0.0-1.el6.x86_64.rpm

crmsh-1.2.6-0.rc2.2.1.x86_64.rpm lvs-fullnat-synproxy

haproxy-1.6.11 Lvs-fullnat-synproxy.tar.gz

haproxy-1.6.11.tar.gz newt-devel-0.52.11-3.el6.x86_64.rpm

keepalived-2.0.6 pssh-2.3.1-2.1.x86_64.rpm

keepalived-2.0.6.tar.gz rpmbuild

kernel-2.6.32-220.23.1.el6.src.rpm slang-devel-2.2.1-1.el6.x86_64.rpm

ldirectord-3.9.5-3.1.x86_64.rpm

[root@server1 ~]# cd rpmbuild/

[root@server1 rpmbuild]# ls

BUILD BUILDROOT RPMS SOURCES SPECS SRPMS

[root@server1 rpmbuild]# cd RPMS/

[root@server1 RPMS]# ls

x86_64

[root@server1 RPMS]# cd x86_64/

[root@server1 x86_64]# ls

haproxy-1.6.11-1.x86_64.rpm haproxy-debuginfo-1.6.11-1.x86_64.rpm

[root@server1 x86_64]

root@server2's password:

haproxy-1.6.11-1.x86_64.rpm 100% 695KB 695.0KB/s 00:00

[root@server1 x86_64]# cd /etc/haproxy/

[root@server1 haproxy]# ls

haproxy.cfg

[root@server1 haproxy]# scp haproxy.cfg server2:/etc/haproxy/   # copy the edited configuration file to server2

root@server2's password:

haproxy.cfg 100% 1906 1.9KB/s 00:00

[root@server1 haproxy]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

[root@server1 haproxy]

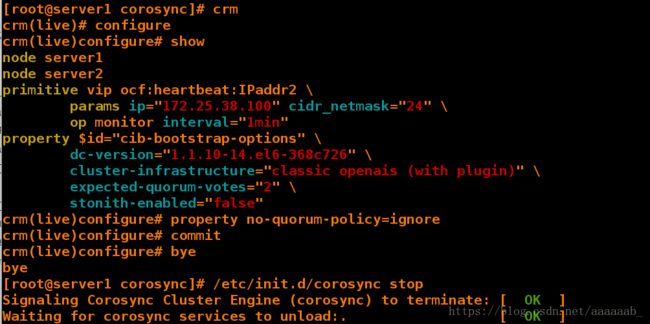

crm(live)

crm(live)configure

node server1

node server2

primitive vip ocf:heartbeat:IPaddr2 \

params ip="172.25.38.100" cidr_netmask="24" \

op monitor interval="1min"

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

crm(live)configure

crm(live)configure

crm(live)configure

crm(live)configure
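You can also build the same CIB without the interactive shell, because crmsh accepts a level and command as arguments. The helper below only prints the commands; review them, then run them on one node with `hagroup_cmds | sh`. The resource names, VIP and parameters come from the show output in this section, including the haproxy primitive and the hagroup group listed in the fencing part. no-quorum-policy=ignore keeps resources running in this two-node cluster when one node is lost. Because lsb:haproxy drives /etc/init.d/haproxy, the haproxy service should be left to the cluster rather than started at boot (for example with chkconfig haproxy off).

```shell
#!/bin/sh
# hagroup_cmds [VIP]: print one-shot crmsh commands that rebuild the
# VIP + haproxy group shown in this section. Nothing is executed here.
hagroup_cmds() {
  vip=${1:-172.25.38.100}
  cat <<EOF
crm configure property stonith-enabled=false
crm configure property no-quorum-policy=ignore
crm configure primitive vip ocf:heartbeat:IPaddr2 params ip=$vip cidr_netmask=24 op monitor interval=1min
crm configure primitive haproxy lsb:haproxy op monitor interval=1min
crm configure group hagroup vip haproxy
EOF
}
```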

Configure HAProxy on server2:

[root@server2 ~]

anaconda-ks.cfg haproxy-1.6.11-1.x86_64.rpm install.log.syslog

crmsh-1.2.6-0.rc2.2.1.x86_64.rpm install.log pssh-2.3.1-2.1.x86_64.rpm

[root@server2 ~]

Preparing...

1:haproxy

[root@server2 haproxy]

httpd (pid 10261) is running...

[root@server2 haproxy]

Stopping httpd: [ OK ]

[root@server2 haproxy]

Shutting down haproxy: [FAILED]

Starting haproxy: [ OK ]

[root@server2 haproxy]

Shutting down haproxy: [ OK ]

Starting haproxy: [ OK ]

[root@server2 haproxy]

[root@server1 haproxy]

[root@server1 haproxy]

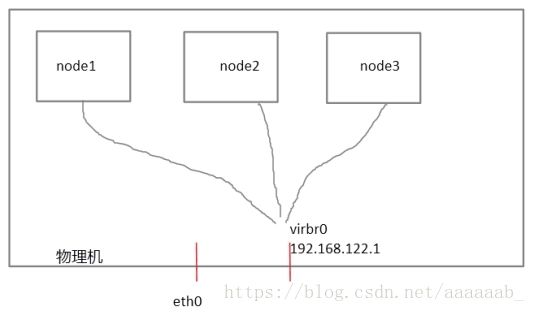

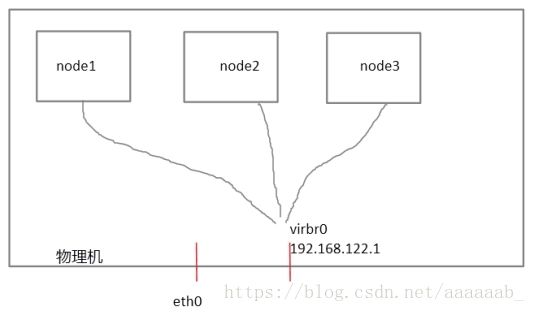

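First, what fencing is:

Nodes send probe packets to each other to decide whether each node is alive. Usually a dedicated link, called the heartbeat line, carries these probes (the figure above simply uses the eth0 link as the heartbeat line). Suppose node1's heartbeat line fails. node2 and node3 then conclude that node1 is down and move its resources to node2 or node3, while node1 believes it is fine and refuses to let node2 or node3 take over the resources. This is split brain.

If the environment has a device that can cut power to node1 directly, split brain can be avoided. Such a device is called a fence device, or STONITH (Shoot The Other Node In The Head).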
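Quorum is the other usual defense against split brain. A partition may run resources only if it holds a strict majority of the votes, that is floor(votes/2) + 1. The two helpers below (hypothetical names) compute this. They show why a two-node cluster is a special case: with expected-quorum-votes="2", as in this lab, a node left alone holds 1 of 2 votes and never has quorum. That is why no-quorum-policy="ignore" is set earlier, and in a two-node cluster fencing then becomes what stops both nodes from running the resources at once.

```shell
#!/bin/sh
# quorum_needed VOTES: smallest strict majority, floor(VOTES/2) + 1.
quorum_needed() {
  echo $(( $1 / 2 + 1 ))
}

# has_quorum ALIVE VOTES: succeed if ALIVE voting nodes form a majority.
has_quorum() {
  [ "$1" -ge "$(quorum_needed "$2")" ]
}
```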

On the physical host, make sure the fence daemon is running:

[root@foundation38 kiosk]# systemctl start fence_virtd.service   # start the service

[root@foundation38 kiosk]# systemctl status fence_virtd.service   # the status should be active (running)

● fence_virtd.service - Fence-Virt system host daemon

Loaded: loaded (/usr/lib/systemd/system/fence_virtd.service

Active: active (running) since Sat 2018-08-04 17:10:22 CST

Process: 19068 ExecStart=/usr/sbin/fence_virtd $FENCE_VIRTD_ARGS (code=exited, status=0/SUCCESS)

Main PID: 19073 (fence_virtd)

CGroup: /system.slice/fence_virtd.service

└─19073 /usr/sbin/fence_virtd -w

Aug 04 17:10:21 foundation38.ilt.example.com systemd[1]: Starting Fence-Virt system host....

Aug 04 17:10:22 foundation38.ilt.example.com fence_virtd[19073]: fence_virtd starting. L...

Aug 04 17:10:22 foundation38.ilt.example.com systemd[1]: Started Fence-Virt system host ....

Hint: Some lines were ellipsized, use -l to show in full.

On the physical host, check the VM names that the nodes must be mapped to:

[root@foundation38 kiosk]# virsh list

1 test1 running

2 test4 running

3 test2 running

4 test3 running

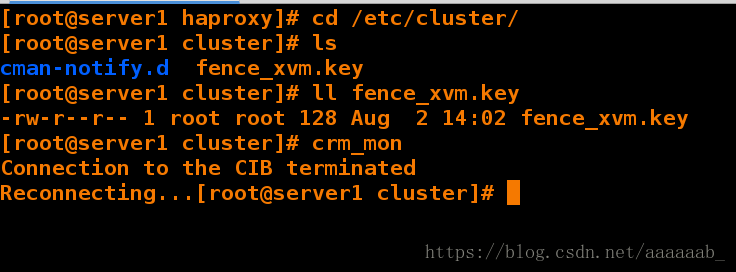

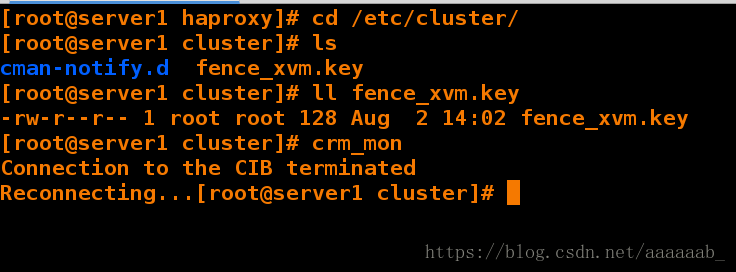

Check the fence configuration on server1:

[root@server1 haproxy]# cd /etc/cluster/

[root@server1 cluster]# ls   # the key file is already here; if not, copy it over from the physical host

cman-notify.d fence_xvm.key

[root@server1 cluster]# ll fence_xvm.key

-rw-r--r-- 1 root root 128 Aug 2 14:02 fence_xvm.key

[root@server1 cluster]# crm_mon   # open the monitor

Connection to the CIB terminated

Reconnecting...[root@server1 cluster]#

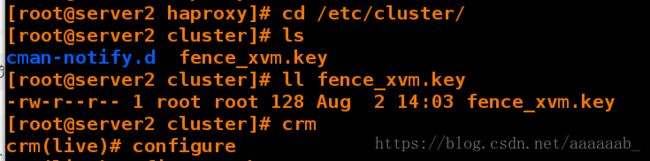

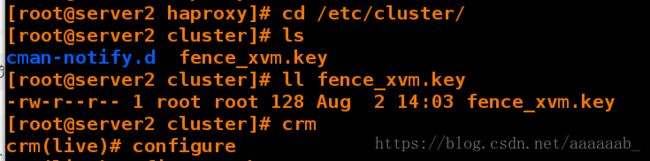

Check the fence configuration on server2:

[root@server2 haproxy]# cd /etc/cluster/

[root@server2 cluster]# ls   # the key file is already here; if not, copy it over from the physical host

cman-notify.d fence_xvm.key

[root@server2 cluster]# ll fence_xvm.key

-rw-r--r-- 1 root root 128 Aug 2 14:03 fence_xvm.key

[root@server2 cluster]# crm

crm(live)# configure

crm(live)configure# show

node server1 \

attributes standby="off"

node server2

primitive haproxy lsb:haproxy \

op monitor interval="1min"

primitive vip ocf:heartbeat:IPaddr2 \

params ip="172.25.38.100" cidr_netmask="24" \

op monitor interval="1min"

primitive vmfence stonith:fence_xvm \

params pcmk_host_map="test1:server1;test4:server4" \

op monitor interval="1min"

group hagroup vip haproxy

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

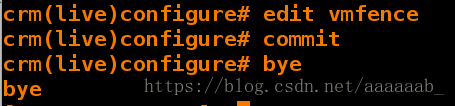

crm(live)configure# property stonith-enabled=true   # enable fencing

crm(live)configure# cd ..

crm(live)# resource

crm(live)resource#

? exit migrate reprobe stop up

bye failcount move restart trace utilization

cd help param secret unmanage

cleanup list promote show unmigrate

demote manage quit start unmove

end meta refresh status untrace

crm(live)resource# stop vmfence   # stop the vmfence resource before editing it

crm(live)resource# cd ..

crm(live)# configure

crm(live)configure#

? erase ms rsc_template

bye exit node rsc_ticket

cd fencing_topology op_defaults rsctest

cib filter order save

cibstatus graph primitive schema

clone group property show

collocation help ptest simulate

colocation history quit template

commit load ra up

default-timeouts location refresh upgrade

delete master rename user

edit modgroup role verify

end monitor rsc_defaults xml

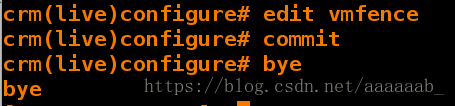

crm(live)configure# edit vmfence   # change the fence mapping to server1:test1 (node name first, then VM name)

crm(live)configure# commit   # commit the changes

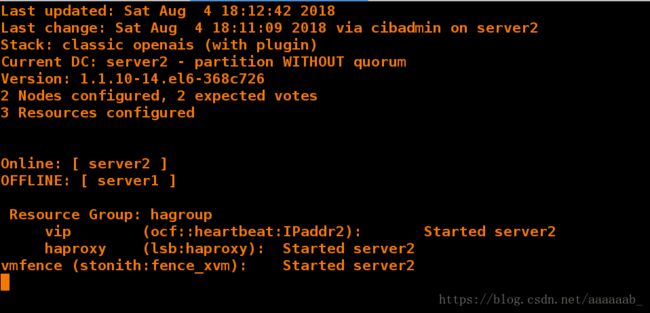

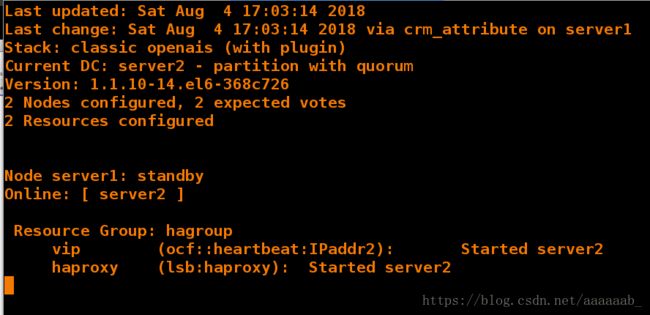
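pcmk_host_map maps cluster node names to the port the fence agent expects. For fence_xvm, the port is the libvirt domain name shown by virsh list, and the map is written as node:port pairs separated by ";". The original value test1:server1;test4:server4 has the pairs reversed and names server4, which is not a cluster node, so it is edited to server1:test1. The small lookup helper below (hypothetical name hostmap_lookup) makes the direction easy to check. The server2:test2 pair used in the test is an assumption.

```shell
#!/bin/sh
# hostmap_lookup MAP NODE: print the fence port (VM name) that MAP assigns
# to NODE; fail if NODE has no entry. Entries are "node:port", separated by
# ";" or spaces (a port list such as "2,3" is printed unchanged).
hostmap_lookup() {
  printf '%s\n' "$1" | tr '; ' '\n\n' | awk -F: -v node="$2" '
    $1 == node { print $2; found = 1; exit }
    END { exit !found }'
}
```

For example, `hostmap_lookup 'server1:test1;server2:test2' server1` prints test1. With the original map, a lookup of server1 finds nothing.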

Crash the kernel on server1, the node currently running the services:

[root@server2 haproxy]

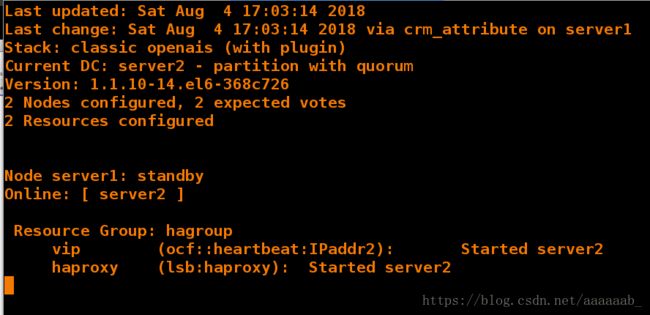

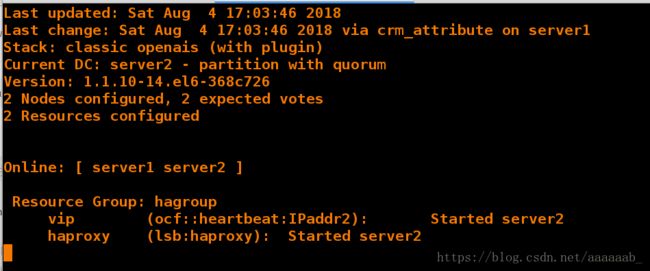

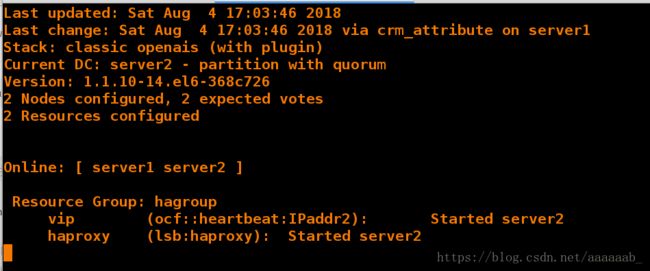

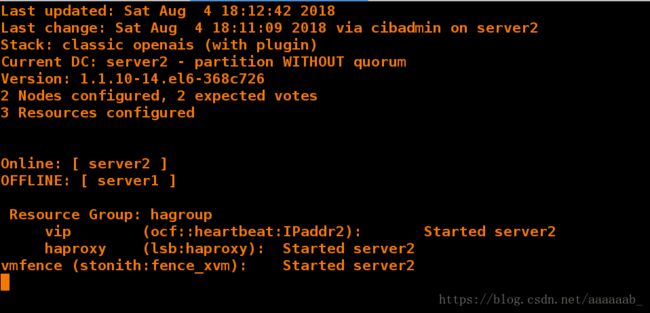

The monitor on server2 shows that server2 has fully taken over the services:
