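GANs vs. Autoencoders: Comparison of Deep Generative Models

Original article: https://towardsdatascience.com/gans-vs-autoencoders-comparison-of-deep-generative-models-985cf15936ea

Want to turn horses into zebras? Make DIY anime characters or celebrities? Generative adversarial networks (GANs) are your new best friend.

"Generative Adversarial Networks is the most interesting idea in the last 10 years in Machine Learning." - Yann LeCun, Director of AI Research at Facebook AI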

Part 1 of this tutorial can be found here:

Turing Learning and an Introduction to GANs (towardsdatascience.com)

Part 2 of this tutorial can be found here:

Advanced Topics in GANs (towardsdatascience.com)

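This is the third part of a three-part tutorial on creating deep generative models with generative adversarial networks. It is a natural extension of the previous topic on variational autoencoders (see the earlier walkthrough). We will see that GANs are typically superior to variational autoencoders as deep generative models. However, they are notoriously difficult to work with and require a lot of data and tuning. We will also examine a hybrid model called a VAE-GAN.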

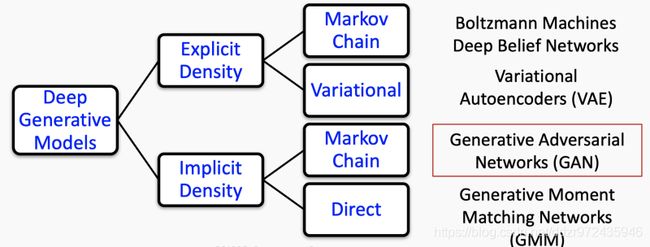

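Taxonomy of deep generative models. The focus of this article is on GANs.

This part of the tutorial is mostly a coding implementation of variational autoencoders (VAEs) and GANs, and it also shows the reader how to build a VAE-GAN.

- VAE for the CelebA dataset

- DC-GAN for the CelebA dataset

- DC-GAN for the Anime dataset

- VAE-GAN for the Anime dataset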

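I strongly recommend that readers review at least Part 1 of the GAN tutorial, as well as my variational autoencoder walkthrough, before going further; otherwise, the implementation may not make much sense.

To get the notebooks I used to run all of this code, feel free to check out my GitHub repository for this set of tutorials:

mrdragonbear / GAN-Tutorial (github.com)

Let's begin!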

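VAE for the CelebA Dataset

The CelebFaces Attributes dataset (CelebA) is a large-scale face attributes dataset with more than 200K celebrity images, each with 40 attribute annotations. The images in this dataset cover large pose variations and background clutter. CelebA has large diversity, large quantity, and rich annotations, including

- 10,177 identities,

- 202,599 face images, and

- 5 landmark locations and 40 binary attribute annotations per image.

You can download the dataset from Kaggle here:

CelebFaces Attributes (CelebA) Dataset: over 200K images of celebrities with 40 binary attribute annotations (www.kaggle.com)

The first step is to import all the necessary functions and extract the data.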

Imports

import shutil
import errno
import zipfile
import os
import matplotlib.pyplot as plt

Extracting the data

# Only run once to unzip images
zip_ref = zipfile.ZipFile('img_align_celeba.zip', 'r')
zip_ref.extractall()
zip_ref.close()

Custom image generator

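This step is likely something most readers have not used before. Because the dataset is so large, it may not be possible to load all of it into your Jupyter Notebook's memory. This is a perfectly normal problem when working with large datasets.

The workaround is to use a streaming generator, which streams batches of data (images, in this case) into memory sequentially, limiting the amount of memory the function needs. The caveat is that such generators are a bit harder to understand and write, since they require a reasonable understanding of computer memory, GPU architecture, and so on.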
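To see the idea in isolation, here is a minimal sketch of a streaming batch generator in plain NumPy. The names `batch_stream` and `fake_loader` are hypothetical and not part of the original notebook; `fake_loader` stands in for reading one image from disk. The point is that each `next()` call materializes only one batch.

```python
import numpy as np

def batch_stream(paths, load_fn, batch_size=4, seed=0):
    """Yield batches forever, loading only `batch_size` items at a time."""
    rng = np.random.default_rng(seed)
    while True:
        # sample a batch of paths (with replacement, the default for choice)
        chosen = rng.choice(paths, size=batch_size)
        yield np.stack([load_fn(p) for p in chosen])

# Hypothetical loader: returns a tiny dummy "image" instead of reading a file.
calls = []
def fake_loader(path):
    calls.append(path)
    return np.zeros((8, 8, 3), dtype=np.float32)

paths = [f"img_{i:06d}.jpg" for i in range(1000)]
stream = batch_stream(paths, fake_loader, batch_size=4)
first_batch = next(stream)  # only 4 of the 1000 "images" have been loaded so far
```

Because the generator is lazy, memory use is bounded by the batch size rather than by the size of the dataset.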

# data generator
# source from https://medium.com/@ensembledme/writing-custom-keras-generators-fe815d992c5a
import numpy as np
from skimage.io import imread

def get_input(path):
    """get specific image from path"""
    img = imread(path)
    return img

def get_output(path, label_file=None):
    """get all the labels relative to the image of path"""
    img_id = path.split('/')[-1]
    labels = label_file.loc[img_id].values
    return labels

def preprocess_input(img):
    # scale pixel values from [0, 255] to [-1, 1]
    return img.astype('float32') / 127.5 - 1

def image_generator(files, label_file, batch_size=32):
    """yield (images, labels) batches forever"""
    while True:
        batch_paths = np.random.choice(a=files, size=batch_size)
        batch_input = []
        batch_output = []
        for input_path in batch_paths:
            img = get_input(input_path)
            img = preprocess_input(img)
            labels = get_output(input_path, label_file=label_file)
            batch_input += [img]
            batch_output += [labels]
        batch_x = np.array(batch_input)
        batch_y = np.array(batch_output)
        yield batch_x, batch_y

def auto_encoder_generator(files, batch_size=32):
    """yield (images, images) batches forever: the target is the input itself"""
    while True:
        batch_paths = np.random.choice(a=files, size=batch_size)
        batch_input = []
        batch_output = []
        for input_path in batch_paths:
            img = get_input(input_path)
            img = preprocess_input(img)
            batch_input += [img]
            batch_output += [img]
        batch_x = np.array(batch_input)
        batch_y = np.array(batch_output)
        yield batch_x, batch_y

For more information on writing custom generators in Keras, the code above references a good article:

Writing Custom Keras Generators (medium.com)
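The generators above scale each image with `img / 127.5 - 1`, which maps pixel values from [0, 255] to [-1, 1]; later code maps outputs back with `(x + 1) / 2` before plotting. A small sketch of that round trip, using the hypothetical helper names `to_model_range` and `to_display_range`:

```python
import numpy as np

def to_model_range(img_uint8):
    """Map pixel values from [0, 255] to [-1, 1]."""
    return img_uint8.astype("float32") / 127.5 - 1.0

def to_display_range(x):
    """Map values from [-1, 1] back to [0, 1], the range plt.imshow expects for floats."""
    return (x + 1.0) / 2.0

pixels = np.array([0, 127, 255], dtype=np.uint8)
scaled = to_model_range(pixels)      # lies in [-1, 1]
restored = to_display_range(scaled)  # lies in [0, 1]
```

The [-1, 1] range matches the `tanh` activation used on the output layer of the decoder and generators in this tutorial.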

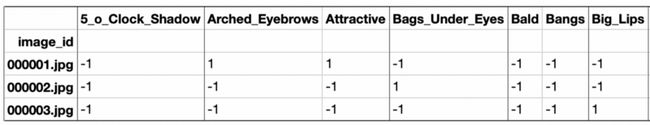

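Loading the attribute data

Not only do we have images for this dataset, but each image also has a list of attributes describing aspects of the celebrity. For example, there are attributes describing whether the celebrity is wearing lipstick or a hat, whether they are young, whether they have black hair, and so on.

# now load attribute
# 1.A.2
import pandas as pd

attr = pd.read_csv('list_attr_celeba.csv')
attr = attr.set_index('image_id')

# check if attribute successful loaded
attr.describe()

Finishing the generator

Now we finish building the generator. We set the image name length to 6 because the image file names in our dataset are six-digit numbers. This part of the code should make sense after reading the custom Keras generator article.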
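The zero-padding of file names described here can also be written with `str.zfill`. This is a small sketch; the helper name `image_filename` is hypothetical, while the folder name and the six-digit length come from the article's code.

```python
IMG_NAME_LENGTH = 6

def image_filename(index, folder="img_align_celeba/"):
    """Build a zero-padded file name such as 'img_align_celeba/000001.jpg'."""
    return folder + str(index).zfill(IMG_NAME_LENGTH) + ".jpg"

names = [image_filename(i) for i in (1, 42, 202599)]
```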

import numpy as np
from sklearn.model_selection import train_test_split

IMG_NAME_LENGTH = 6
file_path = "img_align_celeba/"
img_id = np.arange(1, len(attr.index) + 1)
img_path = []
for i in range(len(img_id)):
    # left-pad the image number with zeros to six digits, e.g. 000001.jpg
    img_path.append(file_path + (IMG_NAME_LENGTH - len(str(img_id[i]))) * '0' + str(img_id[i]) + '.jpg')

# pick 80% as training set and 20% as validation set
train_path = img_path[:int((0.8) * len(img_path))]
val_path = img_path[int((0.8) * len(img_path)):]

train_generator = auto_encoder_generator(train_path, 32)
val_generator = auto_encoder_generator(val_path, 32)

We can now pick three images and check that the attributes make sense.

fig, ax = plt.subplots(1, 3, figsize=(12, 4))
for i in range(3):
    ax[i].imshow(get_input(img_path[i]))
    ax[i].axis('off')
    ax[i].set_title(img_path[i][-10:])
plt.show()

attr.iloc[:3]

The first three images, along with some of their attributes.

Building and training a VAE model

First, we will create and compile a convolutional VAE model (including the encoder and decoder) for the celebrity faces dataset.

More imports

from keras.models import Sequential, Model
from keras.layers import Dropout, Flatten, Dense, Conv2D, MaxPooling2D, Input, Reshape, UpSampling2D, InputLayer, Lambda, ZeroPadding2D, Cropping2D, Conv2DTranspose, BatchNormalization
from keras.utils import np_utils, to_categorical
from keras.losses import binary_crossentropy
from keras import backend as K, objectives
from keras.losses import mse, binary_crossentropy

Model architecture

Now we can create and summarize the model.

b_size = 128
n_size = 512

def sampling(args):
    # reparameterization trick: z = mean + sigma * epsilon,
    # where z_log_sigma holds the log-variance
    z_mean, z_log_sigma = args
    epsilon = K.random_normal(shape=(n_size,), mean=0, stddev=1)
    return z_mean + K.exp(z_log_sigma / 2) * epsilon

def build_conv_vae(input_shape, bottleneck_size, sampling, batch_size=32):
    # ENCODER
    input = Input(shape=(input_shape[0], input_shape[1], input_shape[2]))
    x = Conv2D(32, (3, 3), activation='relu', padding='same')(input)
    x = BatchNormalization()(x)
    x = MaxPooling2D((2, 2), padding='same')(x)
    x = Conv2D(64, (3, 3), activation='relu', padding='same')(x)
    x = BatchNormalization()(x)
    x = MaxPooling2D((2, 2), padding='same')(x)
    x = Conv2D(128, (3, 3), activation='relu', padding='same')(x)
    x = BatchNormalization()(x)
    x = MaxPooling2D((2, 2), padding='same')(x)
    x = Conv2D(256, (3, 3), activation='relu', padding='same')(x)
    x = BatchNormalization()(x)
    x = MaxPooling2D((2, 2), padding='same')(x)

    # Latent Variable Calculation
    shape = K.int_shape(x)
    flatten_1 = Flatten()(x)
    dense_1 = Dense(bottleneck_size, name='z_mean')(flatten_1)
    z_mean = BatchNormalization()(dense_1)
    flatten_2 = Flatten()(x)
    dense_2 = Dense(bottleneck_size, name='z_log_sigma')(flatten_2)
    z_log_sigma = BatchNormalization()(dense_2)
    z = Lambda(sampling)([z_mean, z_log_sigma])
    encoder = Model(input, [z_mean, z_log_sigma, z], name='encoder')

    # DECODER
    # the Cropping2D layers undo the rounding-up done by the 'same' max-pooling,
    # so a 218x178 input is reconstructed at 218x178
    latent_input = Input(shape=(bottleneck_size,), name='decoder_input')
    x = Dense(shape[1] * shape[2] * shape[3])(latent_input)
    x = Reshape((shape[1], shape[2], shape[3]))(x)
    x = UpSampling2D((2, 2))(x)
    x = Cropping2D([[0, 0], [0, 1]])(x)
    x = Conv2DTranspose(256, (3, 3), activation='relu', padding='same')(x)
    x = BatchNormalization()(x)
    x = UpSampling2D((2, 2))(x)
    x = Cropping2D([[0, 1], [0, 1]])(x)
    x = Conv2DTranspose(128, (3, 3), activation='relu', padding='same')(x)
    x = BatchNormalization()(x)
    x = UpSampling2D((2, 2))(x)
    x = Cropping2D([[0, 1], [0, 1]])(x)
    x = Conv2DTranspose(64, (3, 3), activation='relu', padding='same')(x)
    x = BatchNormalization()(x)
    x = UpSampling2D((2, 2))(x)
    x = Conv2DTranspose(32, (3, 3), activation='relu', padding='same')(x)
    x = BatchNormalization()(x)
    output = Conv2DTranspose(3, (3, 3), activation='tanh', padding='same')(x)
    decoder = Model(latent_input, output, name='decoder')

    output_2 = decoder(encoder(input)[2])
    vae = Model(input, output_2, name='vae')
    return vae, encoder, decoder, z_mean, z_log_sigma

# assumption: img_sample is not defined in the article; any single image
# works here because only its shape is used
img_sample = get_input(img_path[0])

vae_2, encoder, decoder, z_mean, z_log_sigma = build_conv_vae(img_sample.shape, n_size, sampling, batch_size=b_size)

print("encoder summary:")
encoder.summary()
print("decoder summary:")
decoder.summary()
print("vae summary:")
vae_2.summary()

Defining the VAE loss

def vae_loss(input_img, output):
    # Compute error in reconstruction
    reconstruction_loss = mse(K.flatten(input_img), K.flatten(output))
    # Compute the KL Divergence regularization term
    kl_loss = -0.5 * K.sum(1 + z_log_sigma - K.square(z_mean) - K.exp(z_log_sigma), axis=-1)
    # Combine the two terms, down-weighting the KL term
    total_loss = (reconstruction_loss + 0.0001 * kl_loss)
    return total_loss

Compiling the model

vae_2.compile(optimizer='rmsprop', loss=vae_loss)
encoder.compile(optimizer='rmsprop', loss=vae_loss)
decoder.compile(optimizer='rmsprop', loss=vae_loss)

Training the model

vae_2.fit_generator(train_generator, steps_per_epoch=4000, validation_data=val_generator, epochs=7, validation_steps=500)

We randomly select some images, run them through the encoder to parameterize the latent code, and then reconstruct the images with the decoder.

import random

x_test = []

for i in range(64):

x_test.append(get_input(img_path[random.randint(0,len(img_id))]))

x_test = np.array(x_test)

figure_Decoded = vae_2.predict(x_test.astype('float32')/127.5 -1, batch_size = b_size)

figure_original = x_test[0]

figure_decoded = (figure_Decoded[0]+1)/2

for i in range(4):

plt.axis('off')

plt.subplot(2,4,1+i*2)

plt.imshow(x_test[i])

plt.axis('off')

plt.subplot(2,4,2 + i*2)

plt.imshow((figure_Decoded[i]+1)/2)

plt.axis('off')

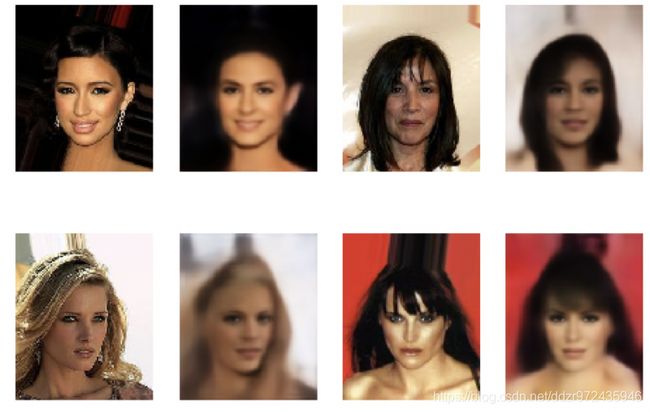

plt.show()来自训练集的随机样本与VAE重建后的图片对比

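Notice that the reconstructed images share similarities with the originals. However, the new images are somewhat blurry, which is a known phenomenon of VAEs. It has been hypothesized that this is because variational inference optimizes a lower bound on the likelihood rather than the actual likelihood itself.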
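For reference, the lower bound in question is the evidence lower bound (ELBO). The notation below (encoder $q_\phi$, decoder $p_\theta$, latent dimension $d$) is the standard VAE notation and is an assumption on my part, not something taken from the article's code:

```latex
\log p_\theta(x) \;\ge\;
  \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]
  \;-\; D_{\mathrm{KL}}\big(q_\phi(z \mid x)\,\|\,p(z)\big),
\qquad
D_{\mathrm{KL}} \;=\; -\tfrac{1}{2}\sum_{j=1}^{d}\big(1 + \log\sigma_j^2 - \mu_j^2 - \sigma_j^2\big)
```

The closed form on the right holds for a diagonal Gaussian posterior and a standard normal prior. It has the same shape as the `kl_loss` expression in `vae_loss`, with `z_mean` playing the role of $\mu$ and `z_log_sigma` the role of $\log\sigma^2$.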

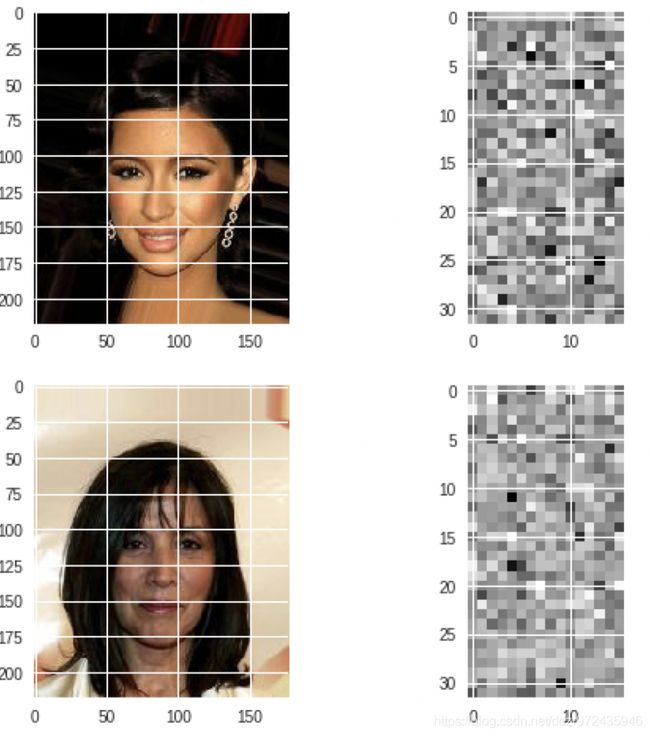

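Latent space representation

We can choose two images with different attributes and plot their latent space representations. Notice that we can see some differences between the latent codes, which we might hypothesize explain the differences between the original images.

# Choose two images of different attributes, and plot the original and latent space of it
x_test1 = []
for i in range(64):
    x_test1.append(get_input(img_path[np.random.randint(0, len(img_id))]))
x_test1 = np.array(x_test1)

# encoder.predict returns [z_mean, z_log_sigma, z], so the array has shape (3, 64, 512)
x_test_encoded = np.array(encoder.predict(x_test1 / 127.5 - 1, batch_size=b_size))

figure_original_1 = x_test1[0]
figure_original_2 = x_test1[1]
# reshape the 512-dimensional z_mean of each image into a 32x16 grid for plotting
Encoded1 = (x_test_encoded[0, 0, :].reshape(32, 16) + 1) / 2
Encoded2 = (x_test_encoded[0, 1, :].reshape(32, 16) + 1) / 2

plt.figure(figsize=(8, 8))
plt.subplot(2, 2, 1)
plt.imshow(figure_original_1)
plt.subplot(2, 2, 2)
plt.imshow(Encoded1)
plt.subplot(2, 2, 3)
plt.imshow(figure_original_2)
plt.subplot(2, 2, 4)
plt.imshow(Encoded2)
plt.show()

Sampling from the latent space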

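We can randomly sample 15 latent codes and decode them to generate new celebrity faces. From this representation we can see that the images generated by our model have a style very similar to the images in our training set, and they also show reasonable realism and variation.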
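As a rough sketch of this step, the snippet below draws 15 latent codes from a standard normal prior and tiles 15 decoded images into a 3x5 canvas. The `tile_images` helper is hypothetical, and `fake_decoded` is a random stand-in for what `decoder.predict(z)` would return, so the block runs without a trained model.

```python
import numpy as np

def tile_images(images, n_rows, n_cols):
    """Arrange n_rows * n_cols images of shape (H, W, C) into one canvas."""
    h, w, c = images.shape[1:]
    grid = images.reshape(n_rows, n_cols, h, w, c)
    # put the row axis next to H and the column axis next to W, then merge them
    return grid.transpose(0, 2, 1, 3, 4).reshape(n_rows * h, n_cols * w, c)

rng = np.random.default_rng(0)
latent_dim = 512
z = rng.standard_normal((15, latent_dim))  # 15 draws from the N(0, I) prior

# Stand-in for decoder.predict(z): random "images" in the tanh range [-1, 1]
fake_decoded = rng.uniform(-1, 1, size=(15, 218, 178, 3))
canvas = tile_images((fake_decoded + 1) / 2, n_rows=3, n_cols=5)
```

With a real decoder, `canvas` can be passed straight to `plt.imshow`.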

# We randomly generated 15 images from 15 series of noise information
n = 3
m = 5
digit_size1 = 218
digit_size2 = 178
figure = np.zeros((digit_size1 * n, digit_size2 * m, 3))

for i in range(3):
    for j in range(5):
        # sample from the standard normal prior that the KL term pulls the latent codes toward
        z_sample = np.random.normal(0, 1, (1, 512))
        x_decoded = decoder.predict([z_sample])
        figure[i * digit_size1: (i + 1) * digit_size1,
               j * digit_size2: (j + 1) * digit_size2, :] = (x_decoded[0] + 1) / 2

plt.figure(figsize=(10, 10))
plt.imshow(figure)
plt.show()

So it seems that our VAE model is not particularly good. With more time and a better choice of hyperparameters, we would probably achieve better results than this.

Now let's compare this result with a DC-GAN on the same dataset.

DC-GAN on the CelebA Dataset

Since we have already set up the streaming generator, there is not much work left to get the DC-GAN model up and running.

# Create and compile a DC-GAN model, and print the summary
from keras.utils import np_utils
from keras.models import Sequential, Model
from keras.layers import Input, Dense, Dropout, Activation, Flatten, LeakyReLU,\
    BatchNormalization, Conv2DTranspose, Conv2D, Reshape
from keras.layers.advanced_activations import LeakyReLU
from keras.optimizers import Adam, RMSprop
from keras.initializers import RandomNormal
import numpy as np
import matplotlib.pyplot as plt
import random
from tqdm import tqdm_notebook
# note: scipy.misc.imresize was removed in SciPy 1.3, so this import needs an older SciPy
from scipy.misc import imresize

def generator_model(latent_dim=100, leaky_alpha=0.2, init_stddev=0.02):
    # maps a latent vector to a 32x32x3 image in [-1, 1]
    g = Sequential()
    g.add(Dense(4*4*512, input_shape=(latent_dim,),
                kernel_initializer=RandomNormal(stddev=init_stddev)))
    g.add(Reshape(target_shape=(4, 4, 512)))
    g.add(BatchNormalization())
    g.add(Activation(LeakyReLU(alpha=leaky_alpha)))
    g.add(Conv2DTranspose(256, kernel_size=5, strides=2, padding='same',
                          kernel_initializer=RandomNormal(stddev=init_stddev)))
    g.add(BatchNormalization())
    g.add(Activation(LeakyReLU(alpha=leaky_alpha)))
    g.add(Conv2DTranspose(128, kernel_size=5, strides=2, padding='same',
                          kernel_initializer=RandomNormal(stddev=init_stddev)))
    g.add(BatchNormalization())
    g.add(Activation(LeakyReLU(alpha=leaky_alpha)))
    g.add(Conv2DTranspose(3, kernel_size=4, strides=2, padding='same',
                          kernel_initializer=RandomNormal(stddev=init_stddev)))
    g.add(Activation('tanh'))
    g.summary()
    #g.compile(loss='binary_crossentropy', optimizer=Adam(lr=0.0001, beta_1=0.5), metrics=['accuracy'])
    return g

def discriminator_model(leaky_alpha=0.2, init_stddev=0.02):
    # maps a 32x32x3 image to the probability that it is real
    d = Sequential()
    d.add(Conv2D(64, kernel_size=5, strides=2, padding='same',
                 kernel_initializer=RandomNormal(stddev=init_stddev),
                 input_shape=(32, 32, 3)))
    d.add(Activation(LeakyReLU(alpha=leaky_alpha)))
    d.add(Conv2D(128, kernel_size=5, strides=2, padding='same',
                 kernel_initializer=RandomNormal(stddev=init_stddev)))
    d.add(BatchNormalization())
    d.add(Activation(LeakyReLU(alpha=leaky_alpha)))
    d.add(Conv2D(256, kernel_size=5, strides=2, padding='same',
                 kernel_initializer=RandomNormal(stddev=init_stddev)))
    d.add(BatchNormalization())
    d.add(Activation(LeakyReLU(alpha=leaky_alpha)))
    d.add(Flatten())
    d.add(Dense(1, kernel_initializer=RandomNormal(stddev=init_stddev)))
    d.add(Activation('sigmoid'))
    d.summary()
    return d

def DCGAN(sample_size=100):
    # Generator
    g = generator_model(sample_size, 0.2, 0.02)
    # Discriminator
    d = discriminator_model(0.2, 0.02)
    d.compile(optimizer=Adam(lr=0.001, beta_1=0.5), loss='binary_crossentropy')
    # freeze the discriminator inside the stacked model so that only the generator is updated
    d.trainable = False
    # GAN
    gan = Sequential([g, d])
    gan.compile(optimizer=Adam(lr=0.0001, beta_1=0.5), loss='binary_crossentropy')
    return gan, g, d

The code above only defines the architecture of the generator and discriminator networks. It is a good idea to compare this way of coding a GAN with the way I did it in Part 2: this version is less clean, and because we did not define global parameters, there are many places where bugs could creep in.

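Now we define a number of helper functions to make our lives easier. They are mainly for preprocessing and plotting images, to help us analyze the network output.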
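One of the preprocessing steps is a center crop before resizing. As a minimal sketch of that step on its own, the hypothetical `center_crop` helper below takes the central window of an image; the 218x178 input size and the 150x150 crop match the values used elsewhere in this tutorial.

```python
import numpy as np

def center_crop(img, crop_h, crop_w):
    """Return the central crop_h x crop_w window of an (H, W, C) image."""
    rows, cols = img.shape[:2]
    top = (rows - crop_h) // 2
    left = (cols - crop_w) // 2
    return img[top:top + crop_h, left:left + crop_w, :]

dummy = np.zeros((218, 178, 3), dtype=np.uint8)  # size of an aligned CelebA image
cropped = center_crop(dummy, 150, 150)
```

Computing the end of each slice from its own start offset keeps the crop square even when the image is not.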

def load_image(filename, size=(32, 32)):

img = plt.imread(filename)

# crop

rows, cols = img.shape[:2]

crop_r, crop_c = 150, 150

start_row, start_col = (rows - crop_r) // 2, (cols - crop_c) // 2

end_row, end_col = rows - start_row, cols - start_row

img = img[start_row:end_row, start_col:end_col, :]

# resize

img = imresize(img, size)

return img

def preprocess(x):

return (x/255)*2-1

def deprocess(x):

return np.uint8((x+1)/2*255)

def make_labels(size):

return np.ones([size, 1]), np.zeros([size, 1])

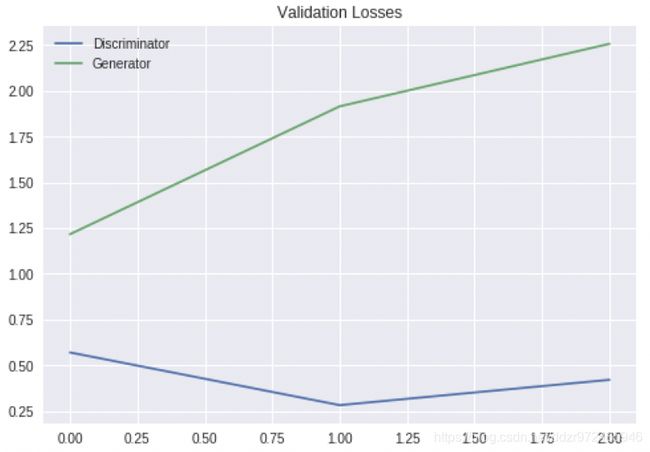

def show_losses(losses):

losses = np.array(losses)

fig, ax = plt.subplots()

plt.plot(losses.T[0], label='Discriminator')

plt.plot(losses.T[1], label='Generator')

plt.title("Validation Losses")

plt.legend()

plt.show()

def show_images(generated_images):

n_images = len(generated_images)

cols = 5

rows = n_images//cols

plt.figure(figsize=(8, 6))

for i in range(n_images):

img = deprocess(generated_images[i])

ax = plt.subplot(rows, cols, i+1)

plt.imshow(img)

plt.xticks([])

plt.yticks([])

plt.tight_layout()

plt.show()训练模型

We now define the training function. As before, notice that we switch the discriminator between trainable and non-trainable (we did this implicitly in Part 2).

def train(sample_size=100, epochs=3, batch_size=128, eval_size=16, smooth=0.1): batchCount=len(train_path)//batch_size

y_train_real, y_train_fake = make_labels(batch_size)

y_eval_real, y_eval_fake = make_labels(eval_size)

# create a GAN, a generator and a discriminator

gan, g, d = DCGAN(sample_size)

losses = [] for e in range(epochs):

print('-'*15, 'Epoch %d' % (e+1), '-'*15)

for i in tqdm_notebook(range(batchCount)):

path_batch = train_path[i*batch_size:(i+1)*batch_size]

image_batch = np.array([preprocess(load_image(filename)) for filename in path_batch])

noise = np.random.normal(0, 1, size=(batch_size, noise_dim))

generated_images = g.predict_on_batch(noise) # Train discriminator on generated images

d.trainable = True

d.train_on_batch(image_batch, y_train_real*(1-smooth))

d.train_on_batch(generated_images, y_train_fake) # Train generator

d.trainable = False

g_loss=gan.train_on_batch(noise, y_train_real)

# evaluate

test_path = np.array(val_path)[np.random.choice(len(val_path), eval_size, replace=False)]

x_eval_real = np.array([preprocess(load_image(filename)) for filename in test_path]) noise = np.random.normal(loc=0, scale=1, size=(eval_size, sample_size))

x_eval_fake = g.predict_on_batch(noise)

d_loss = d.test_on_batch(x_eval_real, y_eval_real)

d_loss += d.test_on_batch(x_eval_fake, y_eval_fake)

g_loss = gan.test_on_batch(noise, y_eval_real)

losses.append((d_loss/2, g_loss))

print("Epoch: {:>3}/{} Discriminator Loss: {:>6.4f} Generator Loss: {:>6.4f}".format(

e+1, epochs, d_loss, g_loss))

show_images(x_eval_fake[:10])

# show the result

show_losses(losses)

show_images(g.predict(np.random.normal(loc=0, scale=1, size=(15, sample_size))))

return gnoise_dim=100

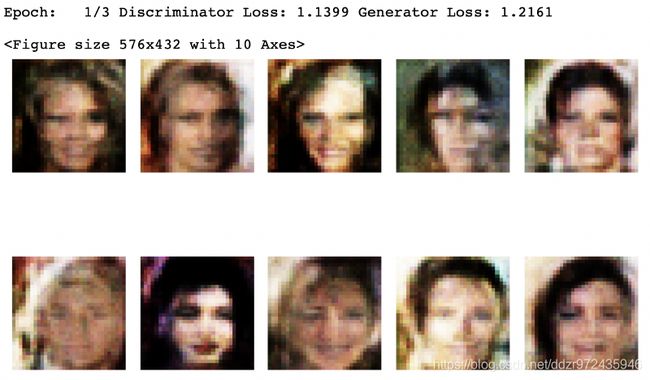

train()此函数的输出将为每个时期提供以下输出:

它还将绘制鉴别器和发生器的验证损失。

生成的图像看起来合理。在这里我们可以看到我们的模型表现得很好,尽管图像的质量不如训练集中那么好(因为我们将图像重新塑造成更小并使它们比原始图像更模糊)。然而,它们足够生动,可以创造出有效的面孔,这些面孔与现实相近。此外,与VAE生成的图像相比,图像更具创意和真实感。

因此,在这种情况下,GAN似乎表现出色。现在让我们尝试一个新的数据集,看看与混合变体VAE-GAN相比,GAN的表现如何。

The Anime Dataset

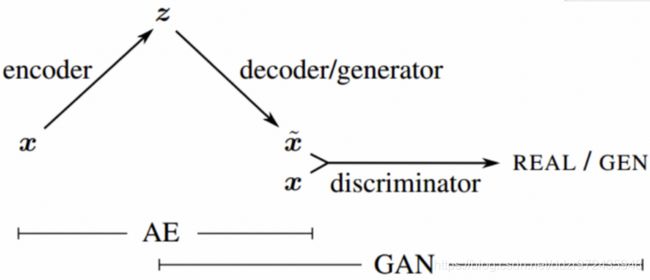

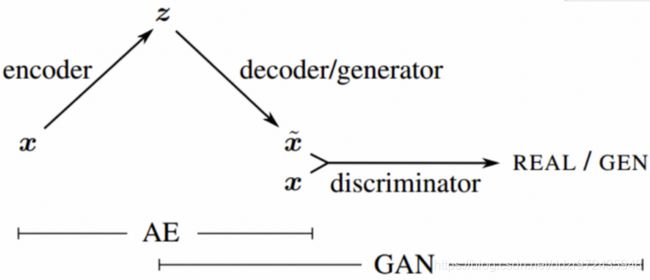

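In this section, we will generate faces in the same style as the Anime dataset using a GAN as well as another special form of GAN, the VAE-GAN. The term VAE-GAN was first used by Larsen et al. in their paper "Autoencoding beyond pixels using a learned similarity metric". A VAE-GAN differs from a GAN in that its generator is a variational autoencoder.

VAE-GAN architecture. Source: https://arxiv.org/abs/1512.09300

First, we will focus on the DC-GAN. The Anime dataset consists of more than 20K anime faces in the form of 64x64 images. We will also need to create another custom Keras data generator. A link to the dataset can be found here:

Mckinsey666 / Anime-Face-Dataset: a collection of high-quality anime faces (github.com)

DC-GAN on the Anime Dataset

The first thing we need to do is create the anime directory and download the data. This can be done from the link above or directly from Amazon Web Services (if this way of accessing the data is still available).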

# Create anime directory and download from AWS
import zipfile

!mkdir anime-faces && wget https://s3.amazonaws.com/gec-harvard-dl2-hw2-data/datasets/anime-faces.zip
with zipfile.ZipFile("anime-faces.zip", "r") as anime_ref:
    anime_ref.extractall("anime-faces/")

It is always good practice to check the data before moving ahead, so we do that now.

from skimage import io
import matplotlib.pyplot as plt

filePath = 'anime-faces/data/'
imgSets = []
for i in range(1, 20001):
    imgName = filePath + str(i) + '.png'
    imgSets.append(io.imread(imgName))

plt.imshow(imgSets[1234])
plt.axis('off')
plt.show()

We now create and compile our DC-GAN model.

# Create and compile a DC-GAN model
from keras.models import Sequential, Model
from keras.layers import Input, Dense, Dropout, Activation, \
    Flatten, LeakyReLU, BatchNormalization, Conv2DTranspose, Conv2D, Reshape
from keras.layers.advanced_activations import LeakyReLU
from keras.layers.convolutional import UpSampling2D
from keras.optimizers import Adam, RMSprop, SGD
from keras.initializers import RandomNormal

import numpy as np
import matplotlib.pyplot as plt
import os, glob
from PIL import Image
from tqdm import tqdm_notebook

image_shape = (64, 64, 3)
#noise_shape = (100,)
Noise_dim = 128
img_rows = 64
img_cols = 64
channels = 3

def generator_model(latent_dim=100, leaky_alpha=0.2):
    # note: the input size is taken from the global Noise_dim, not from latent_dim
    model = Sequential()

    # layer1 (None,Noise_dim)>>(None,128*16*16)
    model.add(Dense(128 * 16 * 16, activation="relu", input_shape=(Noise_dim,)))

    # (None,16*16*128)>>(None,16,16,128)
    model.add(Reshape((16, 16, 128)))

    # (None,16,16,128)>>(None,32,32,128)
    model.add(UpSampling2D())

    # (None,32,32,128)>>(None,32,32,256)
    model.add(Conv2D(256, kernel_size=3, padding="same"))
    model.add(BatchNormalization(momentum=0.8))
    model.add(Activation("relu"))

    # (None,32,32,256)>>(None,64,64,256)
    model.add(UpSampling2D())

    # (None,64,64,256)>>(None,64,64,128)
    model.add(Conv2D(128, kernel_size=3, padding="same"))
    model.add(BatchNormalization(momentum=0.8))
    model.add(Activation("relu"))

    # (None,64,64,128)>>(None,64,64,32)
    model.add(Conv2D(32, kernel_size=3, padding="same"))
    model.add(BatchNormalization(momentum=0.8))
    model.add(Activation("relu"))

    # (None,64,64,32)>>(None,64,64,3)
    model.add(Conv2D(channels, kernel_size=3, padding="same"))
    model.add(Activation("tanh"))

    model.summary()
    model.compile(loss='binary_crossentropy', optimizer=Adam(lr=0.0001, beta_1=0.5), metrics=['accuracy'])
    return model

def discriminator_model(leaky_alpha=0.2, dropRate=0.3):
    model = Sequential()

    # layer1 (None,64,64,3)>>(None,32,32,32)
    model.add(Conv2D(32, kernel_size=3, strides=2, input_shape=image_shape, padding="same"))
    model.add(LeakyReLU(alpha=leaky_alpha))
    model.add(Dropout(dropRate))

    # layer2 (None,32,32,32)>>(None,16,16,64)
    model.add(Conv2D(64, kernel_size=3, strides=2, padding="same"))
    # model.add(ZeroPadding2D(padding=((0, 1), (0, 1))))
    model.add(BatchNormalization(momentum=0.8))
    model.add(LeakyReLU(alpha=leaky_alpha))
    model.add(Dropout(dropRate))

    # (None,16,16,64)>>(None,8,8,128)
    model.add(Conv2D(128, kernel_size=3, strides=2, padding="same"))
    model.add(BatchNormalization(momentum=0.8))
    model.add(LeakyReLU(alpha=0.2))
    model.add(Dropout(dropRate))

    # (None,8,8,128)>>(None,8,8,256)
    model.add(Conv2D(256, kernel_size=3, strides=1, padding="same"))
    model.add(BatchNormalization(momentum=0.8))
    model.add(LeakyReLU(alpha=0.2))
    model.add(Dropout(dropRate))

    # (None,8,8,256)>>(None,8,8,64)
    model.add(Conv2D(64, kernel_size=3, strides=1, padding="same"))
    model.add(BatchNormalization(momentum=0.8))
    model.add(LeakyReLU(alpha=0.2))
    model.add(Dropout(dropRate))

    # (None,8,8,64)
    model.add(Flatten())
    model.add(Dense(1, activation='sigmoid'))

    model.summary()

    sgd = SGD(lr=0.0002)
    model.compile(loss='binary_crossentropy', optimizer=Adam(lr=0.0001, beta_1=0.5), metrics=['accuracy'])
    return model

def DCGAN(sample_size=Noise_dim):
    # generator
    g = generator_model(sample_size, 0.2)

    # discriminator
    d = discriminator_model(0.2)
    d.trainable = False

    # GAN
    gan = Sequential([g, d])
    sgd = SGD()
    gan.compile(optimizer=Adam(lr=0.0001, beta_1=0.5), loss='binary_crossentropy')
    return gan, g, d

def get_image(image_path, width, height, mode):
    image = Image.open(image_path)
    #print(image.size)
    return np.array(image.convert(mode))

def get_batch(image_files, width, height, mode):
    data_batch = np.array([get_image(sample_file, width, height, mode) \
                           for sample_file in image_files])
    return data_batch

def show_imgs(generator, epoch):
    row = 3
    col = 5
    noise = np.random.normal(0, 1, (row * col, Noise_dim))
    gen_imgs = generator.predict(noise)

    # Rescale images 0 - 1
    gen_imgs = 0.5 * gen_imgs + 0.5

    fig, axs = plt.subplots(row, col)
    #fig.suptitle("DCGAN: Generated digits", fontsize=12)
    cnt = 0
    for i in range(row):
        for j in range(col):
            axs[i, j].imshow(gen_imgs[cnt, :, :, :])
            axs[i, j].axis('off')
            cnt += 1
    #plt.close()
    plt.show()

We can now train the model on the Anime dataset. We will do this in two different ways. The first trains the discriminator and the generator with a 1:1 proportion of training times.

# Training the discriminator and generator with the 1:1 proportion of training times
def train(epochs=30, batchSize=128):
    filePath = r'anime-faces/data/'

    X_train = get_batch(glob.glob(os.path.join(filePath, '*.png'))[:20000], 64, 64, 'RGB')
    X_train = (X_train.astype(np.float32) - 127.5) / 127.5

    halfSize = int(batchSize / 2)
    batchCount = int(len(X_train) / batchSize)

    dLossReal = []
    dLossFake = []
    gLossLogs = []

    gan, generator, discriminator = DCGAN(Noise_dim)

    for e in range(epochs):
        for i in tqdm_notebook(range(batchCount)):
            index = np.random.randint(0, X_train.shape[0], halfSize)
            images = X_train[index]

            noise = np.random.normal(0, 1, (halfSize, Noise_dim))
            genImages = generator.predict(noise)

            # one-sided labels
            discriminator.trainable = True
            dLossR = discriminator.train_on_batch(images, np.ones([halfSize, 1]))
            dLossF = discriminator.train_on_batch(genImages, np.zeros([halfSize, 1]))
            dLoss = np.add(dLossF, dLossR) * 0.5
            discriminator.trainable = False

            noise = np.random.normal(0, 1, (batchSize, Noise_dim))
            gLoss = gan.train_on_batch(noise, np.ones([batchSize, 1]))

        # the discriminator is compiled with metrics=['accuracy'], so
        # dLoss[0] is its loss and dLoss[1] is its accuracy
        dLossReal.append([e, dLoss[0]])
        dLossFake.append([e, dLoss[1]])
        gLossLogs.append([e, gLoss])

        # show a grid of generated images after each epoch
        show_imgs(generator, e)

    dLossRealArr = np.array(dLossReal)
    dLossFakeArr = np.array(dLossFake)
    gLossLogsArr = np.array(gLossLogs)

    # At the end of training plot the losses vs epochs
    plt.plot(dLossRealArr[:, 0], dLossRealArr[:, 1], label="Discriminator Loss - Real")
    plt.plot(dLossFakeArr[:, 0], dLossFakeArr[:, 1], label="Discriminator Loss - Fake")
    plt.plot(gLossLogsArr[:, 0], gLossLogsArr[:, 1], label="Generator Loss")
    plt.xlabel('Epochs')
    plt.ylabel('Loss')
    plt.legend()
    plt.title('GAN')
    plt.grid(True)
    plt.show()

    return gan, generator, discriminator

GAN, Generator, Discriminator = train(epochs=20, batchSize=128)

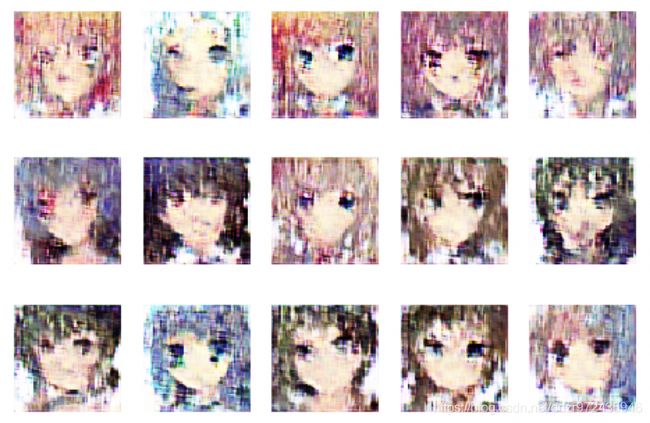

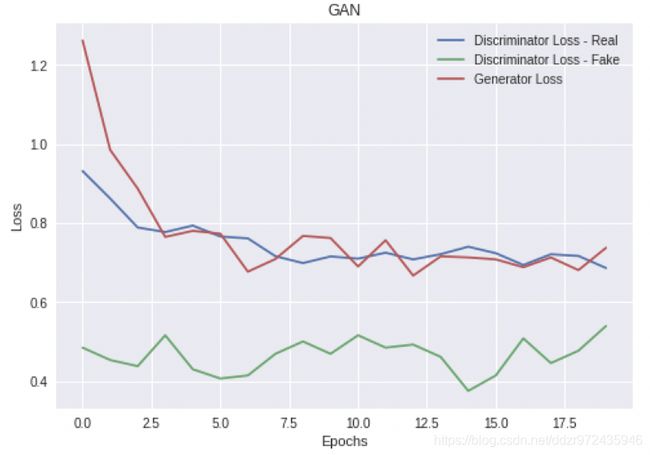

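The output will now start printing a series of anime characters. They are very grainy at first and gradually become more and more distinct over time.

We will also get a plot of our generator and discriminator loss functions.

Now we will do the same thing, but with different training times for the discriminator and the generator, to see what the effect is.

Before moving on, it is a good idea to save the weights of the model somewhere, so that you do not need to run the entire training again and can instead just load the weights into the network.

To save the weights: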

discriminator.save_weights('/content/gdrive/My Drive/discriminator_DCGAN_lr0.0001_deepgenerator+proportion2.h5')

gan.save_weights('/content/gdrive/My Drive/gan_DCGAN_lr0.0001_deepgenerator+proportion2.h5')

generator.save_weights('/content/gdrive/My Drive/generator_DCGAN_lr0.0001_deepgenerator+proportion2.h5')

To load the weights:

discriminator.load_weights('/content/gdrive/My Drive/discriminator_DCGAN_lr0.0001_deepgenerator+proportion2.h5')

gan.load_weights('/content/gdrive/My Drive/gan_DCGAN_lr0.0001_deepgenerator+proportion2.h5')

generator.load_weights('/content/gdrive/My Drive/generator_DCGAN_lr0.0001_deepgenerator+proportion2.h5')

Now we move on to the second network implementation, without having to worry about losing our previous network.
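As I read the training function that follows, its schedule is: during the first half of the steps the discriminator is updated every step and the generator every fourth step, and during the second half the roles are swapped. The sketch below expresses just that schedule in plain Python; `update_plan` is a hypothetical helper, not part of the original notebook.

```python
def update_plan(step, total_steps, period=4):
    """Return (train_discriminator, train_generator) for a given step.

    First half: discriminator every step, generator every `period`-th step.
    Second half: generator every step, discriminator every `period`-th step.
    """
    on_period = (step % period == 0)
    if step < total_steps // 2:
        return True, on_period
    return on_period, True

total = 4000
plans = [update_plan(s, total) for s in range(total)]
d_updates = sum(d for d, _ in plans)  # number of discriminator updates
g_updates = sum(g for _, g in plans)  # number of generator updates
```

Over a full run the two networks receive the same number of updates; what changes is which one is ahead at each stage of training.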

# Train the discriminator and generator separately and with different training times
def train(epochs=300, batchSize=128, plotInternal=50):
    gLoss = 1
    filePath = r'anime-faces/data/'
    X_train = get_batch(glob.glob(os.path.join(filePath, '*.png'))[:20000], 64, 64, 'RGB')
    X_train = (X_train.astype(np.float32) - 127.5) / 127.5
    halfSize = int(batchSize / 2)

    dLossReal = []
    dLossFake = []
    gLossLogs = []

    for e in range(epochs):
        index = np.random.randint(0, X_train.shape[0], halfSize)
        images = X_train[index]

        noise = np.random.normal(0, 1, (halfSize, Noise_dim))
        genImages = generator.predict(noise)

        if e < int(epochs * 0.5):
            # first half: train the discriminator on every step
            # one-sided labels
            discriminator.trainable = True
            dLossR = discriminator.train_on_batch(images, np.ones([halfSize, 1]))
            dLossF = discriminator.train_on_batch(genImages, np.zeros([halfSize, 1]))
            dLoss = np.add(dLossF, dLossR) * 0.5
            discriminator.trainable = False

            # cnt ends up as e modulo 4, so the generator is trained on every fourth step
            cnt = e
            while cnt > 3:
                cnt = cnt - 4

            if cnt == 0:
                noise = np.random.normal(0, 1, (batchSize, Noise_dim))
                gLoss = gan.train_on_batch(noise, np.ones([batchSize, 1]))

        elif e >= int(epochs * 0.5):
            # second half: train the discriminator on every fourth step only
            cnt = e
            while cnt > 3:
                cnt = cnt - 4

            if cnt == 0:
                # one-sided labels
                discriminator.trainable = True
                dLossR = discriminator.train_on_batch(images, np.ones([halfSize, 1]))
                dLossF = discriminator.train_on_batch(genImages, np.zeros([halfSize, 1]))
                dLoss = np.add(dLossF, dLossR) * 0.5
                discriminator.trainable = False

            noise = np.random.normal(0, 1, (batchSize, Noise_dim))
            gLoss = gan.train_on_batch(noise, np.ones([batchSize, 1]))

        if e % 20 == 0:
            print("epoch: %d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (e, dLoss[0], 100 * dLoss[1], gLoss))

        dLossReal.append([e, dLoss[0]])
        dLossFake.append([e, dLoss[1]])
        gLossLogs.append([e, gLoss])

        if e % plotInternal == 0 and e != 0:
            show_imgs(generator, e)

        dLossRealArr = np.array(dLossReal)
        dLossFakeArr = np.array(dLossFake)
        gLossLogsArr = np.array(gLossLogs)

        # chk ends up as e modulo 51, so the weights are saved on every 51st step
        chk = e
        while chk > 50:
            chk = chk - 51

        if chk == 0:
            discriminator.save_weights('/content/gdrive/My Drive/discriminator_DCGAN_lr=0.0001,proportion2,deepgenerator_Fake.h5')
            gan.save_weights('/content/gdrive/My Drive/gan_DCGAN_lr=0.0001,proportion2,deepgenerator_Fake.h5')
            generator.save_weights('/content/gdrive/My Drive/generator_DCGAN_lr=0.0001,proportion2,deepgenerator_Fake.h5')

    # At the end of training plot the losses vs epochs
    plt.plot(dLossRealArr[:, 0], dLossRealArr[:, 1], label="Discriminator Loss - Real")
    plt.plot(dLossFakeArr[:, 0], dLossFakeArr[:, 1], label="Discriminator Loss - Fake")
    plt.plot(gLossLogsArr[:, 0], gLossLogsArr[:, 1], label="Generator Loss")
    plt.xlabel('Epochs')
    plt.ylabel('Loss')
    plt.legend()
    plt.title('GAN')
    plt.grid(True)
    plt.show()

    return gan, generator, discriminator

gan, generator, discriminator = DCGAN(Noise_dim)
train(epochs=4000, batchSize=128, plotInternal=200)

Let us compare the outputs of these two networks. By running the line:

show_imgs(Generator)

the network will output some images from the generator (this is one of the functions we defined earlier).

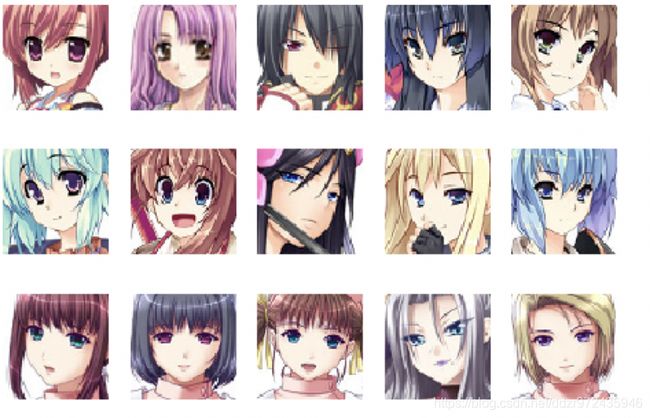

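Images generated with 1:1 training of the discriminator and the generator.

Now let us check the second model.

Images generated by the second network, which used different training times for the discriminator and the generator.

We can see that the details of the generated images have improved and that their texture is slightly more detailed. However, compared with the training images, they are still subpar.

Training images from the Anime dataset.

Perhaps a VAE-GAN will perform better?

VAE-GAN on the Anime Dataset

To reiterate what I said previously about the VAE-GAN: the term was first used by Larsen et al. in their paper "Autoencoding beyond pixels using a learned similarity metric". A VAE-GAN differs from a GAN in that its generator is a variational autoencoder.

VAE-GAN architecture. Source: https://arxiv.org/abs/1512.09300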
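For orientation, the combined training objective of Larsen et al., as I recall it from the paper, sums a prior (KL) term, a reconstruction term measured in the feature space of the $l$-th discriminator layer, and the usual GAN term. The symbols follow the paper's notation rather than the code in this tutorial:

```latex
\mathcal{L} \;=\; \mathcal{L}_{\text{prior}} \;+\; \mathcal{L}_{\text{llike}}^{\text{Dis}_l} \;+\; \mathcal{L}_{\text{GAN}},
\qquad
\mathcal{L}_{\text{prior}} = D_{\mathrm{KL}}\big(q(z \mid x)\,\|\,p(z)\big),
\qquad
\mathcal{L}_{\text{GAN}} = \log \mathrm{Dis}(x) + \log\big(1 - \mathrm{Dis}(\mathrm{Gen}(z))\big)
```

Note that the `vae_loss` function defined in this section measures reconstruction error with a pixel-wise MSE, so the implementation here is a simplified variant of the learned similarity metric described in the paper.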

First, we need to create and compile the VAE-GAN and print a summary for each network (this is a good way to simply check the architecture).

# Create and compile a VAE-GAN, and make a summary for them
from keras.models import Sequential, Model
from keras.layers import Input, Dense, Dropout, Activation, \
    Flatten, LeakyReLU, BatchNormalization, Conv2DTranspose, Conv2D, Reshape, MaxPooling2D, UpSampling2D, InputLayer, Lambda
from keras.layers.advanced_activations import LeakyReLU
from keras.layers.convolutional import UpSampling2D
from keras.optimizers import Adam, RMSprop, SGD
from keras.initializers import RandomNormal
import numpy as np
import matplotlib.pyplot as plt
import os, glob
from PIL import Image
import pandas as pd
from scipy.stats import norm
import keras
from keras.utils import np_utils, to_categorical
from keras import backend as K
import random
from keras import metrics
from tqdm import tqdm

# plotInternal
plotInternal = 50

#######
latent_dim = 256
batch_size = 256
rows = 64
columns = 64
channel = 3
epochs = 4000
# datasize = len(dataset)

# optimizers
SGDop = SGD(lr=0.0003)
ADAMop = Adam(lr=0.0002)

# filters
filter_of_dis = 16
filter_of_decgen = 16
filter_of_encoder = 16

def sampling(args):
    # reparameterization trick: latent = mean + sigma * epsilon
    mean, logsigma = args
    epsilon = K.random_normal(shape=(K.shape(mean)[0], latent_dim), mean=0., stddev=1.0)
    return mean + K.exp(logsigma / 2) * epsilon

def vae_loss(X, output, E_mean, E_logsigma):
    # compute the average MSE error, then scale it up, ie. simply sum on all axes
    reconstruction_loss = 2 * metrics.mse(K.flatten(X), K.flatten(output))

    # compute the KL loss
    kl_loss = -0.5 * K.sum(1 + E_logsigma - K.square(E_mean) - K.exp(E_logsigma), axis=-1)

    total_loss = K.mean(reconstruction_loss + kl_loss)
    return total_loss

def encoder(kernel, filter, rows, columns, channel):
    X = Input(shape=(rows, columns, channel))
    model = Conv2D(filters=filter, kernel_size=kernel, strides=2, padding='same')(X)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = LeakyReLU(alpha=0.2)(model)

    model = Conv2D(filters=filter*2, kernel_size=kernel, strides=2, padding='same')(model)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = LeakyReLU(alpha=0.2)(model)

    model = Conv2D(filters=filter*4, kernel_size=kernel, strides=2, padding='same')(model)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = LeakyReLU(alpha=0.2)(model)

    model = Conv2D(filters=filter*8, kernel_size=kernel, strides=2, padding='same')(model)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = LeakyReLU(alpha=0.2)(model)

    model = Flatten()(model)

    mean = Dense(latent_dim)(model)
    logsigma = Dense(latent_dim, activation='tanh')(model)
    latent = Lambda(sampling, output_shape=(latent_dim,))([mean, logsigma])
    meansigma = Model([X], [mean, logsigma, latent])
    meansigma.compile(optimizer=SGDop, loss='mse')
    return meansigma

def decgen(kernel, filter, rows, columns, channel):
    X = Input(shape=(latent_dim,))

    model = Dense(2*2*256)(X)
    model = Reshape((2, 2, 256))(model)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = Activation('relu')(model)

    # five stride-2 transposed convolutions: 2 -> 4 -> 8 -> 16 -> 32 -> 64
    model = Conv2DTranspose(filters=filter*8, kernel_size=kernel, strides=2, padding='same')(model)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = Activation('relu')(model)

    model = Conv2DTranspose(filters=filter*4, kernel_size=kernel, strides=2, padding='same')(model)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = Activation('relu')(model)

    model = Conv2DTranspose(filters=filter*2, kernel_size=kernel, strides=2, padding='same')(model)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = Activation('relu')(model)

    model = Conv2DTranspose(filters=filter, kernel_size=kernel, strides=2, padding='same')(model)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = Activation('relu')(model)

    model = Conv2DTranspose(filters=channel, kernel_size=kernel, strides=2, padding='same')(model)
    model = Activation('tanh')(model)

    model = Model(X, model)
    model.compile(loss='binary_crossentropy', optimizer=Adam(lr=0.0001, beta_1=0.5), metrics=['accuracy'])
    return model

def discriminator(kernel, filter, rows, columns, channel):
    # Four stride-2 convolutions, then a single sigmoid unit (real vs. fake)
    X = Input(shape=(rows, columns, channel))
    model = Conv2D(filters=filter*2, kernel_size=kernel, strides=2, padding='same')(X)
    model = LeakyReLU(alpha=0.2)(model)

    model = Conv2D(filters=filter*4, kernel_size=kernel, strides=2, padding='same')(model)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = LeakyReLU(alpha=0.2)(model)

    model = Conv2D(filters=filter*8, kernel_size=kernel, strides=2, padding='same')(model)
    model = BatchNormalization(epsilon=1e-5)(model)
    model = LeakyReLU(alpha=0.2)(model)

    model = Conv2D(filters=filter*8, kernel_size=kernel, strides=2, padding='same')(model)
    dec = BatchNormalization(epsilon=1e-5)(model)
    dec = LeakyReLU(alpha=0.2)(dec)
    dec = Flatten()(dec)
    dec = Dense(1, activation='sigmoid')(dec)

    output = Model(X, dec)
    output.compile(loss='binary_crossentropy', optimizer=Adam(lr=0.0002, beta_1=0.5), metrics=['accuracy'])
    return output
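It can help to check that the generator's output size matches what the discriminator expects. The sketch below traces the spatial size through both networks using the standard shape rules for `padding='same'`: a stride-2 `Conv2DTranspose` doubles the size, and a stride-2 `Conv2D` yields `ceil(size / 2)`. The helper names are hypothetical and used for illustration only.

```python
import math

def conv_same_out(size, stride=2):
    # Conv2D with padding='same': output = ceil(input / stride)
    return math.ceil(size / stride)

def deconv_same_out(size, stride=2):
    # Conv2DTranspose with padding='same': output = input * stride
    return size * stride

# Generator: reshape to 2x2, then five stride-2 transposed convolutions.
size = 2
gen_sizes = [size]
for _ in range(5):
    size = deconv_same_out(size)
    gen_sizes.append(size)

# Discriminator: four stride-2 convolutions applied to the generator's output size.
disc_sizes = [gen_sizes[-1]]
for _ in range(4):
    disc_sizes.append(conv_same_out(disc_sizes[-1]))

print("generator:", gen_sizes)
print("discriminator:", disc_sizes)
```

The generator ends at 64x64, which suggests that `rows` and `columns` should both be 64 for the combined model to be consistent. The discriminator then flattens a 4x4 feature map before its final dense layer.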

def VAEGAN(decgen, discriminator):
    # generator
    g = decgen
    # discriminator (frozen inside the combined model)
    d = discriminator
    d.trainable = False
    # GAN
    gan = Sequential([g, d])
    # sgd=SGD()
    gan.compile(optimizer=Adam(lr=0.0001, beta_1=0.5), loss='binary_crossentropy')
    return g, d, gan

Once again, we define a few helper functions so that we can display images from the generator.

def get_image(image_path, width, height, mode):
    image = Image.open(image_path)
    #print(image.size)
    return np.array(image.convert(mode))

def show_imgs(generator):
    row = 3
    col = 5
    noise = np.random.normal(0, 1, (row*col, latent_dim))
    gen_imgs = generator.predict(noise)

    # Rescale images 0 - 1
    gen_imgs = 0.5 * gen_imgs + 0.5

    fig, axs = plt.subplots(row, col)
    #fig.suptitle("DCGAN: Generated digits", fontsize=12)
    cnt = 0
    for i in range(row):
        for j in range(col):
            axs[i, j].imshow(gen_imgs[cnt, :, :, :])
            axs[i, j].axis('off')
            cnt += 1
    #plt.close()
    plt.show()

The generator's parameters will be affected by both the GAN and the VAE training.
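The rescaling step in `show_imgs` exists because the generator ends in a `tanh` activation, so its outputs lie in [-1, 1], while float images are displayed on a [0, 1] scale. A minimal sketch of that mapping and its inverse follows. The inverse is shown on the assumption that training images were scaled to [-1, 1] beforehand; the function names are hypothetical.

```python
def to_unit_range(x):
    """Map a tanh output in [-1, 1] to a displayable intensity in [0, 1]."""
    return 0.5 * x + 0.5

def to_tanh_range(p):
    """Inverse mapping: [0, 1] pixel intensity back to [-1, 1] (assumed preprocessing)."""
    return 2.0 * p - 1.0

for value in (-1.0, 0.0, 1.0):
    print(value, "->", to_unit_range(value))
```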

# note: the generator's parameters will be affected by both the GAN and the VAE training

G, D, GAN = VAEGAN(decgen(5, filter_of_decgen, rows, columns, channel),discriminator(5, filter_of_dis, rows, columns, channel))

# encoder

E = encoder(5, filter_of_encoder, rows, columns, channel)

print("This is the summary for encoder:")

E.summary()

# generator/decoder

# G = decgen(5, filter_of_decgen, rows, columns, channel)

print("This is the summary for decoder/generator:")

G.summary()

# discriminator

# D = discriminator(5, filter_of_dis, rows, columns, channel)

print("This is the summary for discriminator:")

D.summary()

D_fixed = discriminator(5, filter_of_dis, rows, columns, channel)

D_fixed.compile(optimizer=SGDop, loss='mse')

# gan

print("This is the summary for GAN:")

GAN.summary()

# VAE

X = Input(shape=(rows, columns, channel))

E_mean, E_logsigma, Z = E(X)

output = G(Z)

# G_dec = G(E_mean + E_logsigma)

# D_fake, F_fake = D(output)

# D_fromGen, F_fromGen = D(G_dec)

# D_true, F_true = D(X)

# print("type(E)",type(E))

# print("type(output)",type(output))

# print("type(D_fake)",type(D_fake))

VAE = Model(X, output)

VAE.add_loss(vae_loss(X, output, E_mean, E_logsigma))

VAE.compile(optimizer=SGDop)

print("This is the summary for vae:")

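VAE.summary()

In the cell below, we begin training our model. Note that, as before, we train the discriminator, the GAN, and the VAE for different lengths of time. We emphasize training the discriminator in the first half of the training process and train the generator more in the second half, because we want to improve the quality of the output images.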
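The schedule described above can be summarized in a few lines. This is only a sketch of the update pattern, not the training code itself: it assumes that the less-emphasized network is updated on every fourth iteration, which is how the loop below behaves. The function name `networks_to_update` is hypothetical.

```python
def networks_to_update(epoch, epochs, every=4):
    """Return (train_discriminator, train_generator) for a given iteration.

    "Generator" here covers the GAN and VAE updates, which happen together.
    """
    sparse_step = epoch % every == 0
    if epoch < epochs // 2:
        # First half: discriminator every iteration, generator every `every`-th
        return True, sparse_step
    # Second half: generator every iteration, discriminator every `every`-th
    return sparse_step, True

epochs = 16  # hypothetical value, for illustration only
for e in range(epochs):
    train_d, train_g = networks_to_update(e, epochs)
    print(e, "D" if train_d else "-", "G" if train_g else "-")
```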

# We train our model in this cell
dLoss = []
gLoss = []
GLoss = 1
GlossEnc = 1
GlossGen = 1
Eloss = 1
halfbatch_size = int(batch_size*0.5)

for epoch in tqdm(range(epochs)):
    if epoch < int(epochs*0.5):
        # First half: train the discriminator on every iteration
        noise = np.random.normal(0, 1, (halfbatch_size, latent_dim))
        index = np.random.randint(0, dataset.shape[0], halfbatch_size)
        images = dataset[index]

        latent_vect = E.predict(images)[0]
        encImg = G.predict(latent_vect)
        fakeImg = G.predict(noise)

        D.trainable = True
        DlossTrue = D.train_on_batch(images, np.ones((halfbatch_size, 1)))
        DlossEnc = D.train_on_batch(encImg, np.ones((halfbatch_size, 1)))
        DlossFake = D.train_on_batch(fakeImg, np.zeros((halfbatch_size, 1)))
        # DLoss=np.add(DlossTrue,DlossFake)*0.5
        DLoss = np.add(DlossTrue, DlossEnc)
        DLoss = np.add(DLoss, DlossFake)*0.33
        D.trainable = False

        # Train the generator and the VAE only on every fourth iteration
        if epoch % 4 == 0:
            noise = np.random.normal(0, 1, (batch_size, latent_dim))
            index = np.random.randint(0, dataset.shape[0], batch_size)
            images = dataset[index]
            latent_vect = E.predict(images)[0]

            GlossEnc = GAN.train_on_batch(latent_vect, np.ones((batch_size, 1)))
            GlossGen = GAN.train_on_batch(noise, np.ones((batch_size, 1)))
            Eloss = VAE.train_on_batch(images, None)
            GLoss = np.add(GlossEnc, GlossGen)
            GLoss = np.add(GLoss, Eloss)*0.33

        dLoss.append([epoch, DLoss[0]])
        gLoss.append([epoch, GLoss])

    elif epoch >= int(epochs*0.5):
        # Second half: train the discriminator only on every fourth iteration
        if epoch % 4 == 0:
            noise = np.random.normal(0, 1, (halfbatch_size, latent_dim))
            index = np.random.randint(0, dataset.shape[0], halfbatch_size)
            images = dataset[index]

            latent_vect = E.predict(images)[0]
            encImg = G.predict(latent_vect)
            fakeImg = G.predict(noise)

            D.trainable = True
            DlossTrue = D.train_on_batch(images, np.ones((halfbatch_size, 1)))
            # DlossEnc = D.train_on_batch(encImg, np.ones((halfbatch_size, 1)))
            DlossFake = D.train_on_batch(fakeImg, np.zeros((halfbatch_size, 1)))
            DLoss = np.add(DlossTrue, DlossFake)*0.5
            # DLoss=np.add(DlossTrue,DlossEnc)
            # DLoss=np.add(DLoss,DlossFake)*0.33
            D.trainable = False

        # Train the generator and the VAE on every iteration
        noise = np.random.normal(0, 1, (batch_size, latent_dim))
        index = np.random.randint(0, dataset.shape[0], batch_size)
        images = dataset[index]
        latent_vect = E.predict(images)[0]

        GlossEnc = GAN.train_on_batch(latent_vect, np.ones((batch_size, 1)))
        GlossGen = GAN.train_on_batch(noise, np.ones((batch_size, 1)))
        Eloss = VAE.train_on_batch(images, None)
        GLoss = np.add(GlossEnc, GlossGen)
        GLoss = np.add(GLoss, Eloss)*0.33

        dLoss.append([epoch, DLoss[0]])
        gLoss.append([epoch, GLoss])

    if epoch % plotInternal == 0 and epoch != 0:
        show_imgs(G)

    dLossArr = np.array(dLoss)
    gLossArr = np.array(gLoss)
    # print("dLossArr.shape:",dLossArr.shape)
    # print("gLossArr.shape:",gLossArr.shape)

    # Save a checkpoint every 51 iterations
    if epoch % 51 == 0:
        D.save_weights('/content/gdrive/My Drive/VAE discriminator_kernalsize5_proportion_32.h5')
        G.save_weights('/content/gdrive/My Drive/VAE generator_kernalsize5_proportion_32.h5')
        E.save_weights('/content/gdrive/My Drive/VAE encoder_kernalsize5_proportion_32.h5')

    if epoch % 20 == 0:
        print("epoch:", epoch + 1, " ", "DislossTrue loss:", DlossTrue[0], "D accuracy:", 100*DlossTrue[1],
              "DlossFake loss:", DlossFake[0], "GlossEnc loss:", GlossEnc,
              "GlossGen loss:", GlossGen, "Eloss loss:", Eloss)
        # print("loss:")
        # print("D:", DlossTrue, DlossEnc, DlossFake)
        # print("G:", GlossEnc, GlossGen)
        # print("VAE:", Eloss)

print('Training done,saving weights')
D.save_weights('/content/gdrive/My Drive/VAE discriminator_kernalsize5_proportion_32.h5')
G.save_weights('/content/gdrive/My Drive/VAE generator_kernalsize5_proportion_32.h5')
E.save_weights('/content/gdrive/My Drive/VAE encoder_kernalsize5_proportion_32.h5')

print('painting losses')
# At the end of training plot the losses vs epochs
plt.plot(dLossArr[:, 0], dLossArr[:, 1], label="Discriminator Loss")
plt.plot(gLossArr[:, 0], gLossArr[:, 1], label="Generator Loss")
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()
plt.title('GAN')
plt.grid(True)
plt.show()

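print('end')

If you plan to run this network, be aware that training takes a very long time. I would not attempt it unless you have access to some powerful GPUs or are willing to let the model run for an entire day.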

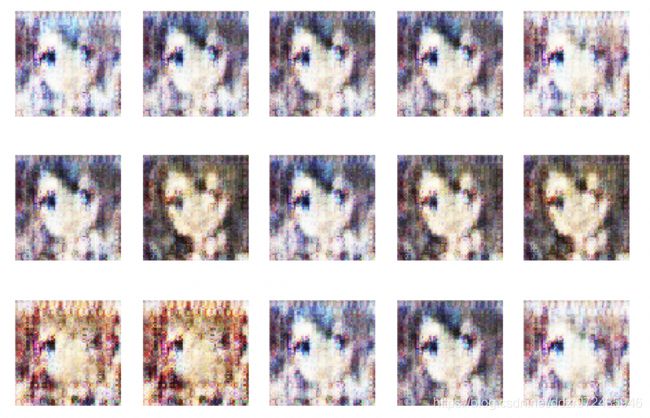

Now that our VAE-GAN has finished training, we can inspect the output images and compare them with those from our earlier GANs.

# In this cell, we generate and visualize 15 images.

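show_imgs(G)

We can see that this implementation of the VAE-GAN gives us a good model that generates images that are sharp and similar to the originals. Our VAE-GAN creates images more robustly, and it does so without the extra noise seen in the earlier anime faces. However, the model does not generalize very well: it rarely changes the pose or gender of a character, so this is something we could try to improve.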

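Final Comments

It is not necessarily clear that any one of these models is better than the others, and none of the methods was properly optimized, so it is difficult to compare them.

This is still an active area of research, so if you are interested, I recommend challenging yourself and trying to use GANs in your own work to see what you can come up with.

I hope you enjoyed this trilogy of articles on GANs and now have a better idea of what they are, what they can do, and how to make your own.

Thanks for reading!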

Further Reading

Run BigGAN in COLAB:

- https://colab.research.google.com/github/tensorflow/hub/blob/master/examples/colab/biggan_generation_with_tf_hub.ipynb

More code help + examples:

- https://www.jessicayung.com/explaining-tensorflow-code-for-a-convolutional-neural-network/

- https://lilianweng.github.io/lil-log/2017/08/20/from-GAN-to-WGAN.html

- https://pytorch.org/tutorials/beginner/dcgan_faces_tutorial.html

- https://github.com/tensorlayer/srgan

- https://junyanz.github.io/CycleGAN/

- https://affinelayer.com/pixsrv/

- https://tcwang0509.github.io/pix2pixHD/

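Influential papers:

- DCGAN https://arxiv.org/pdf/1511.06434v2.pdf

- Wasserstein GAN (WGAN) https://arxiv.org/pdf/1701.07875.pdf

- Conditional Generative Adversarial Nets (CGAN) https://arxiv.org/pdf/1411.1784v1.pdf

- Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks (LAPGAN) https://arxiv.org/pdf/1506.05751.pdf

- Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network (SRGAN) https://arxiv.org/pdf/1609.04802.pdf

- Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks (CycleGAN) https://arxiv.org/pdf/1703.10593.pdf

- InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets https://arxiv.org/pdf/1606.03657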

- Improved Training of Wasserstein GANs (WGAN-GP) https://arxiv.org/pdf/1704.00028.pdf

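- Energy-based Generative Adversarial Network (EBGAN) https://arxiv.org/pdf/1609.03126.pdf

- Autoencoding beyond pixels using a learned similarity metric (VAE-GAN) https://arxiv.org/pdf/1512.09300.pdf

- Adversarial Feature Learning (BiGAN) https://arxiv.org/pdf/1605.09782v6.pdf

- Stacked Generative Adversarial Networks (SGAN) https://arxiv.org/pdf/1612.04357.pdf

- StackGAN++: Realistic Image Synthesis with Stacked Generative Adversarial Networks https://arxiv.org/pdf/1710.10916.pdf

- Learning from Simulated and Unsupervised Images through Adversarial Training (SimGAN) https://arxiv.org/pdf/1612.07828v1.pdf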