pacemaker + nginx high availability

Physical host

yum -y install fence-virtd

yum -y install fence-virtd-libvirt

yum -y install fence-virtd-multicast

systemctl start fence_virtd.service

systemctl status fence_virtd.service

mkdir /etc/cluster

dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=4k count=1

scp /etc/cluster/fence_xvm.key 172.25.77.1:/etc/cluster

scp /etc/cluster/fence_xvm.key 172.25.77.4:/etc/cluster

Configure the /etc/init.d/nginx startup script on server2 and server3

mkdir /etc/cluster    # path that holds the key file

[root@server1 cluster]# cd /etc/cluster/

[root@server1 cluster]# ls

fence_xvm.key
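fence_virtd on the physical host and fence_xvm on the guests only work together when every machine holds a byte-identical copy of the key, so it can help to compare size and checksum after copying. A minimal sketch, assuming GNU coreutils (`sha256sum`); it writes to a temporary directory as a stand-in for /etc/cluster so it can be tried without root, and the variable names are illustrative:

```shell
# Stand-in for /etc/cluster so the sketch does not touch the real directory.
key_dir=$(mktemp -d)
key_file="$key_dir/fence_xvm.key"

# Same command as in the notes: one 4 KiB block of random data.
dd if=/dev/urandom of="$key_file" bs=4k count=1 2>/dev/null
chmod 600 "$key_file"          # the key is a shared secret

# Size and checksum to compare against the copies on the cluster nodes.
key_size=$(( $(wc -c < "$key_file") ))
key_sum=$(sha256sum "$key_file" | cut -d' ' -f1)
echo "size=$key_size sha256=$key_sum"
```

Running `sha256sum /etc/cluster/fence_xvm.key` on the physical host and on each node should then print the same digest.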

I. Configure the RS hosts (server2, server3)

## Configure both RS hosts identically; tell them apart by the default published page

[root@server2 ~]# yum install -y httpd

[root@server2 ~]# vim /var/www/html/index.html

[root@server2 ~]# /etc/init.d/httpd start

[root@server2 ~]# curl localhost

server-2

[root@server3 ~]# curl localhost

server-3
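The notes open index.html in vim without showing its content. A sketch of one way to produce the two distinguishable pages; the helper name `write_index` and the temporary document root are illustrative assumptions, and on the real hosts the target directory would be /var/www/html:

```shell
# Write a one-line index.html that identifies the backend.
write_index() {
    docroot=$1
    name=$2
    mkdir -p "$docroot"
    printf '%s\n' "$name" > "$docroot/index.html"
}

# Demo in a scratch directory instead of /var/www/html.
demo_root=$(mktemp -d)
write_index "$demo_root/server2" server-2
write_index "$demo_root/server3" server-3
cat "$demo_root/server2/index.html" "$demo_root/server3/index.html"
```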

II. Configure nginx on server1

1. Install nginx from source

[root@server1 ~]# ls

nginx-1.14.0.tar.gz

[root@server1 ~]# tar zxf nginx-1.14.0.tar.gz

[root@server1 ~]# ls

nginx-1.14.0

nginx-1.14.0.tar.gz

[root@server1 ~]# cd nginx-1.14.0

[root@server1 nginx-1.14.0]# vim auto/cc/gcc

[root@server1 nginx-1.14.0]# vim src/core/nginx.h

[root@server1 nginx-1.14.0]# ./configure --prefix=/usr/local/nginx --with-http_ssl_module --with-http_stub_status_module --with-threads --with-file-aio

## Resolve dependencies ###

[root@server1 nginx-1.14.0]# yum install -y gcc

[root@server1 nginx-1.14.0]# yum install -y pcre-devel

[root@server1 nginx-1.14.0]# yum install -y openssl-devel

#######

[root@server1 nginx-1.14.0]# make && make install

[root@server1 nginx-1.14.0]# ln -s /usr/local/nginx/sbin/nginx /sbin/

2. Testing

[root@server1 nginx-1.14.0]# nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful

[root@server1 nginx-1.14.0]# nginx

III. Implement load balancing

[root@server1 nginx-1.14.0]# useradd nginx

[root@server1 nginx-1.14.0]# vim /usr/local/nginx/conf/nginx.conf

user nginx nginx;

worker_processes 1;

# the following goes inside the http { } block
upstream westos {
    server 172.25.12.2:80;
    server 172.25.12.3:80;
}

server {
    listen 80;
    server_name www.test.org;

    location / {
        proxy_pass http://westos;
    }
}
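The `unexpected "}"` error in the next step is a nesting problem, so it may help to see the directives in one complete file. The sketch below writes such a file to a temporary path; the `events` block and its `worker_connections 1024` value are assumptions (they are not in these notes), and `brace_balance` is a hypothetical helper that only counts braces, which is a rough pre-check and no substitute for `nginx -t`:

```shell
conf_file=$(mktemp)

cat > "$conf_file" <<'EOF'
user nginx nginx;
worker_processes 1;

events {
    worker_connections 1024;   # assumed value, not from the notes
}

http {
    upstream westos {
        server 172.25.12.2:80;
        server 172.25.12.3:80;
    }

    server {
        listen 80;
        server_name www.test.org;

        location / {
            proxy_pass http://westos;
        }
    }
}
EOF

# Rough check: the number of opening and closing braces must match.
brace_balance() {
    opens=$(( $(grep -o '{' "$1" | wc -l) ))
    closes=$(( $(grep -o '}' "$1" | wc -l) ))
    [ "$opens" -eq "$closes" ]
}

brace_balance "$conf_file" && echo "braces balanced"
# On server1 the real check would be: nginx -t -c "$conf_file"
```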

[root@server1 nginx-1.14.0]# nginx -t

nginx: [emerg] unexpected "}" in

[root@server1 nginx-1.14.0]# vim /usr/local/nginx/conf/nginx.conf

[root@server1 nginx-1.14.0]# nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

[root@server1 nginx-1.14.0]# nginx -s reload

Test from the physical host (OK):

vim /etc/hosts

curl www.westos.org

server-2

curl www.westos.org

server-3
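nginx distributes requests across an upstream group round-robin by default, which is why consecutive requests alternate between server-2 and server-3. A small sketch for checking the distribution over more requests; `tally_backends` is a hypothetical helper name, and the demo feeds it the responses shown above rather than calling curl:

```shell
# Count how often each backend answered; expects one response body per line on stdin.
tally_backends() {
    sort | uniq -c
}

# On the physical host (needs the /etc/hosts entry from above):
#   for i in 1 2 3 4; do curl -s www.westos.org; done | tally_backends

# Offline demo with the responses shown in these notes:
printf 'server-2\nserver-3\nserver-2\nserver-3\n' | tally_backends
```

With both backends healthy the counts should come out roughly equal.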

IV. Implement high availability

1. Configure nginx on server4

[root@server1 nginx-1.14.0]# scp -r /usr/local/nginx/ server4:/usr/local/

[root@server4 local]# ln -s /usr/local/nginx/sbin/nginx /sbin/

[root@server4 local]# useradd nginx

[root@server4 local]# nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

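2. Configure pacemaker on server1 and server4

## Note: stop nginx; it will be managed by the cluster as a service

## Note: configure the yum repositories first

## The configuration on server1 and server4 must be identical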

➢ yum install -y corosync pacemaker

➢ cd /etc/corosync/

➢ cp corosync.conf.example corosync.conf

➢ vim corosync.conf

bindnetaddr: 172.25.77.0

mcastaddr: 226.94.1.77

mcastport: 5405

service {
    name: pacemaker
    ver: 0
}
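`bindnetaddr` takes the network address of the interface corosync should bind to, not the address of an individual node. A sketch of the arithmetic, assuming a /24 prefix (the netmask is not stated in these notes) and the node address 172.25.77.1 used as the scp target earlier; `network_addr` is a hypothetical helper:

```shell
# Print the network address for a dotted-quad IPv4 address and a prefix length.
network_addr() (
    prefix=$2
    IFS=.
    set -f
    set -- $1                      # split the dotted quad into $1..$4
    unset IFS
    ip_int=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
    net=$(( ip_int & mask ))
    printf '%d.%d.%d.%d\n' \
        $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
        $(( (net >> 8) & 255 ))  $(( net & 255 ))
)

network_addr 172.25.77.1 24    # prints 172.25.77.0
```

Both nodes sit in the same network, so both end up with the same `bindnetaddr`, which is why the file can be copied to server4 unchanged.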

➢ scp corosync.conf server4:/etc/corosync/

(run on both server1 and server4)

➢ /etc/init.d/corosync start

➢ yum install -y crmsh-1.2.6-0.rc2.2.1.x86_64.rpm pssh-2.3.1-2.1.x86_64.rpm

- Configure crm

[root@server1 cluster]# crm

crm(live)# configure

crm(live)configure# property stonith-enabled=false

crm(live)configure# commit

[root@server1 cluster]# crm_verify -LV

4. Implement high availability:

crm(live)configure# primitive vip ocf:heartbeat:IPaddr2 params ip=172.25.12.100 cidr_netmask=32 op monitor interval=1min

crm(live)configure# commit

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# commit

## Note: lsb:nginx requires an nginx init script in /etc/init.d/

crm(live)configure# primitive nginx lsb:nginx op monitor interval=30s

crm(live)configure# commit

crm(live)configure# group nginxgroup vip nginx

crm(live)configure# commit
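The notes do not include the init script that the lsb:nginx resource depends on. Pacemaker relies on LSB exit codes, in particular `status` returning 0 when the service runs and 3 when it is stopped, and on `start`/`stop` succeeding when there is nothing to do. The following is a minimal sketch of that contract, not the script the author used; the pid file path assumes nginx's default `logs/nginx.pid` under the /usr/local/nginx prefix, and the function names are illustrative:

```shell
#!/bin/sh
# Hypothetical minimal LSB-style wrapper for the source-built nginx.
NGINX=${NGINX:-/usr/local/nginx/sbin/nginx}
PIDFILE=${PIDFILE:-/usr/local/nginx/logs/nginx.pid}

# True when the pid file names a live process.
nginx_running() {
    [ -s "$PIDFILE" ] && kill -0 "$(cat "$PIDFILE")" 2>/dev/null
}

nginx_lsb() {
    case "$1" in
        start)
            nginx_running && return 0     # already running counts as success
            "$NGINX"
            ;;
        stop)
            nginx_running || return 0     # already stopped counts as success
            "$NGINX" -s quit
            ;;
        status)
            if nginx_running; then
                echo "nginx is running"
                return 0
            fi
            echo "nginx is stopped"
            return 3                      # LSB: program is not running
            ;;
        *)
            echo "Usage: $0 {start|stop|status}" >&2
            return 2
            ;;
    esac
}

# Demo; in a real /etc/init.d/nginx the last lines would be:
#   nginx_lsb "$1"; exit $?
nginx_lsb status || echo "exit code $?"
```

The sketch simplifies the LSB status codes (it does not distinguish a stale pid file), which is enough for the monitor operation defined above to detect a stopped service.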

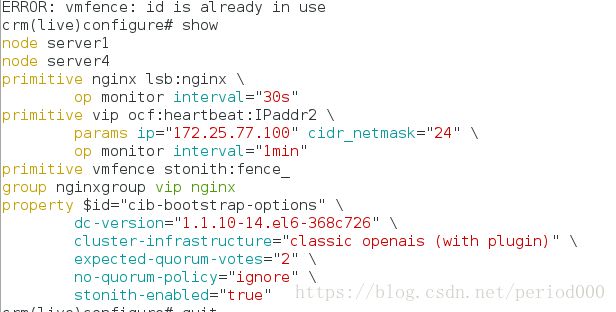

## When adding the fence resource, fence_xvm is not available yet

crm(live)configure# property stonith-enabled=true

crm(live)configure# primitive vmfence stonith:fence_    ## tab completion offers only fence_legacy and fence_pcmk

server1: (check the fence_xvm.key file)

[root@server1 cluster]# pwd

/etc/cluster

[root@server1 cluster]# ls

fence_xvm.key

server4: (check the fence_xvm.key file)

[root@server4 cluster]# pwd

/etc/cluster

[root@server4 cluster]# ls

fence_xvm.key

Install fence-virt on server1 and server4

yum install -y fence-virt-0.2.3-24.el6.x86_64.rpm

Enter crm again; the fence resource can now be configured

crm(live)configure# primitive vmfence stonith:fence_xvm params pcmk_host_map="server1:vm1;server4:vm4" op monitor interval=30s

crm(live)configure# commit

crm(live)configure# property stonith-enabled=true

crm(live)configure# commit
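`pcmk_host_map` tells pacemaker which name the fence device uses for each cluster node: `node:name` pairs separated by semicolons, here mapping server1 and server4 to the libvirt domains vm1 and vm4. A sketch of how such a map resolves; `lookup_domain` is a hypothetical helper for illustration, not part of pacemaker:

```shell
# Print the fence-device name for a node, or fail if the node is not in the map.
lookup_domain() (
    map=$1
    node=$2
    set -f                 # no globbing while splitting
    IFS=';'
    for pair in $map; do
        if [ "${pair%%:*}" = "$node" ]; then
            printf '%s\n' "${pair#*:}"
            exit 0
        fi
    done
    exit 1                 # node not present in the map
)

host_map="server1:vm1;server4:vm4"
lookup_domain "$host_map" server4    # prints vm4
```

The names on the right-hand side have to match the domain names known to libvirt on the physical host; running `fence_xvm -o list` on a node should show them once the key and fence_virtd are set up correctly.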