Building a Highly Available Hadoop + ZooKeeper Cluster

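This Hadoop + ZooKeeper high-availability setup builds on the Hadoop cluster nodes deployed in the previous post.

Note: a ZooKeeper ensemble needs at least three servers, and the total number of servers should be odd.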
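
The odd-number rule follows from majority quorum: an ensemble of n servers stays available only while more than half of them are up. A minimal sketch of that arithmetic (the function names are illustrative and not part of ZooKeeper):

```shell
#!/bin/sh
# Majority needed for an ensemble of n servers: floor(n/2) + 1.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# Number of servers that can fail while a majority still survives.
tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 3 4 5; do
    echo "ensemble=$n quorum=$(quorum_size "$n") tolerated=$(tolerated_failures "$n")"
done
```

Four servers tolerate no more failures than three, which is why an even-sized ensemble adds cost without adding resilience.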

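System environment:

RHEL 6.5, with SELinux and iptables disabled

Hadoop, the JDK, and ZooKeeper are shared over NFS, so their configuration files stay in sync across hosts

Package versions used in this walkthrough:

| hadoop-2.7.3.tar.gz | zookeeper-3.4.9.tar.gz | jdk-7u79-linux-x64.tar.gz |
|---|---|---|

server1 is the master and server5 is the standby master; server2, server3, and server4 are the cluster worker nodes.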

Lab environment:

| IP | Hostname | Roles |
|---|---|---|
| 172.25.254.1 | server1.example.com | NameNode, DFSZKFailoverController, ResourceManager |
| 172.25.254.2 | server2.example.com | DataNode, JournalNode, NodeManager, QuorumPeerMain |
| 172.25.254.3 | server3.example.com | DataNode, JournalNode, NodeManager, QuorumPeerMain |
| 172.25.254.4 | server4.example.com | DataNode, JournalNode, NodeManager, QuorumPeerMain |
| 172.25.254.5 | server5.example.com | NameNode, DFSZKFailoverController, ResourceManager |

I. Set up the ZooKeeper cluster

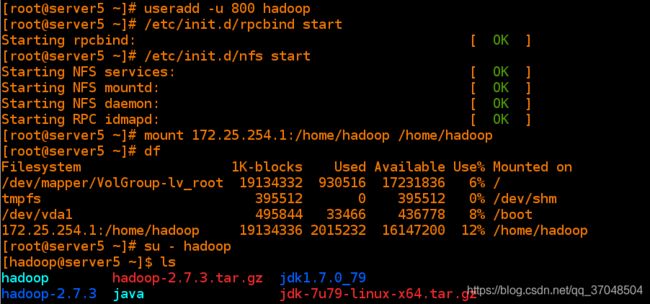

1. Configure the new virtual machine, server5

[root@server5 ~]# yum install nfs-utils -y ## install nfs-utils

[root@server5 ~]# useradd -u 800 hadoop ## create the hadoop user

[root@server5 ~]# /etc/init.d/rpcbind start

[root@server5 ~]# /etc/init.d/nfs start

[root@server5 ~]# mount 172.25.254.1:/home/hadoop/ /home/hadoop ## mount the directory exported by server1

2. Clean out /tmp/*

[hadoop@server5 ~]$ rm -fr /tmp/*

3. Edit the configuration file under /home/hadoop/zookeeper-3.4.9/conf on server1.

Because the other four hosts all mount server1's NFS export, /home/hadoop is identical on all five hosts.

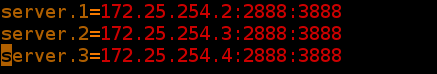

[hadoop@server1 ~ ]$ cd /home/hadoop/zookeeper-3.4.9/conf

[hadoop@server1 conf]$ cp zoo_sample.cfg zoo.cfg

[hadoop@server1 conf]$ vim zoo.cfg ## add the three ensemble members

[hadoop@server1 conf]$ cat zoo.cfg | tail -n 3

server.1=172.25.254.2:2888:3888

server.2=172.25.254.3:2888:3888

server.3=172.25.254.4:2888:3888
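
Each entry follows the pattern server.<id>=<host>:<peer port>:<election port>. As a sketch, the three lines above could be generated from a host list instead of typed by hand. The function name gen_zoo_servers is hypothetical, and the output goes to stdout rather than into the real zoo.cfg:

```shell
#!/bin/sh
# Print one "server.N=host:2888:3888" line per host, numbering from 1.
# 2888 is the follower-to-leader port and 3888 the leader-election port,
# matching the values used in zoo.cfg above.
gen_zoo_servers() {
    id=1
    for host in "$@"; do
        printf 'server.%d=%s:2888:3888\n' "$id" "$host"
        id=$((id + 1))
    done
}

gen_zoo_servers 172.25.254.2 172.25.254.3 172.25.254.4
```

Appending the output to conf/zoo.cfg would reproduce the three entries shown by tail.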

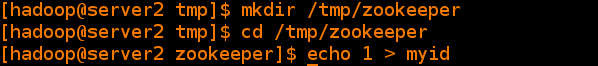

4. On each ZooKeeper node, create the data directory /tmp/zookeeper and write the node's ID into myid. The ID must match that node's server.N entry in zoo.cfg.

[hadoop@server2 tmp]$ mkdir /tmp/zookeeper

[hadoop@server2 tmp]$ cd /tmp/zookeeper

[hadoop@server2 zookeeper]$ echo 1 > myid

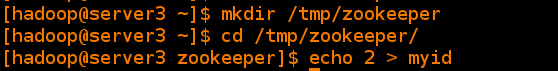

[hadoop@server3 conf]$ mkdir /tmp/zookeeper

[hadoop@server3 conf]$ cd /tmp/zookeeper/

[hadoop@server3 zookeeper]$ echo 2 > myid

[hadoop@server4 tmp]$ mkdir /tmp/zookeeper

[hadoop@server4 tmp]$ cd /tmp/zookeeper

[hadoop@server4 zookeeper]$ echo 3 > myid
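
The value written to myid has to equal the N of the server.N line that names the node. A small sketch that looks the ID up from a zoo.cfg-style file by address, so the same command can be reused on every node (myid_for is an illustrative name, and the demo uses a scratch file rather than the real configuration):

```shell
#!/bin/sh
# Print the N of the "server.N=<addr>:..." line in file $1 whose address
# equals $2; print nothing if no line matches.
myid_for() {
    awk -F'[.=:]' -v addr="$2" '
        /^server\.[0-9]+=/ {
            line = $0
            sub(/^server\.[0-9]+=/, "", line)   # drop the "server.N=" prefix
            sub(/:.*/, "", line)                # drop the ":port:port" suffix
            if (line == addr) { print $2 }
        }' "$1"
}

# Demo against a scratch copy of the three entries used in this post.
cfg=$(mktemp)
printf '%s\n' \
    'server.1=172.25.254.2:2888:3888' \
    'server.2=172.25.254.3:2888:3888' \
    'server.3=172.25.254.4:2888:3888' > "$cfg"
myid_for "$cfg" 172.25.254.3   # prints 2
rm -f "$cfg"
```

On a node, the result could be redirected straight into /tmp/zookeeper/myid.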

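5. Start the ZooKeeper service on each node; server3 is elected leader.

Note: start the service on all three nodes before checking the status. Until a majority of the ensemble is running, the status command cannot report a mode.

On server2: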

[hadoop@server2 ~]$ cd zookeeper-3.4.9

[hadoop@server2 zookeeper-3.4.9]$ bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@server2 zookeeper-3.4.9]$ bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: follower #####

On server3:

[hadoop@server3 ~]$ cd zookeeper-3.4.9

[hadoop@server3 zookeeper-3.4.9]$ bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@server3 zookeeper-3.4.9]$ bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: leader #####

On server4:

[hadoop@server4 ~]$ cd zookeeper-3.4.9

[hadoop@server4 zookeeper-3.4.9]$ bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@server4 zookeeper-3.4.9]$ bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg

Mode: follower #####
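
When checking several nodes, only the Mode line matters. A minimal sketch that extracts it from the status output (zk_mode is an illustrative helper, not part of ZooKeeper):

```shell
#!/bin/sh
# Read "zkServer.sh status" output on stdin and print just the mode
# (for example leader or follower). Exit non-zero if no Mode line is found.
zk_mode() {
    awk '/^Mode:/ { print $2; found = 1 } END { exit !found }'
}

# Demo with the output captured on server3 above.
printf '%s\n' \
    'ZooKeeper JMX enabled by default' \
    'Using config: /home/hadoop/zookeeper-3.4.9/bin/../conf/zoo.cfg' \
    'Mode: leader' | zk_mode
```

On a node this could be used as `bin/zkServer.sh status 2>&1 | zk_mode`.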

II. Configure Hadoop

On server1:

1. Edit core-site.xml

[hadoop@server1 ~]$ cd hadoop/etc/hadoop/

[hadoop@server1 hadoop]$ vim core-site.xml # set the HDFS nameservice to masters and list the ZooKeeper cluster addresses
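
The post does not show the file's contents. The sketch below writes what the comment describes, using the standard Hadoop property names fs.defaultFS and ha.zookeeper.quorum. It assumes the ZooKeeper nodes listen on the default client port 2181, and it writes to a scratch directory rather than the live configuration:

```shell
#!/bin/sh
# Write a candidate core-site.xml into a scratch directory for review.
# Assumptions: the nameservice is "masters" and ZooKeeper uses its default
# client port 2181 on server2, server3, and server4.
out_dir=$(mktemp -d)
cat > "$out_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Clients address HDFS by nameservice, not by a single NameNode host -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://masters</value>
  </property>
  <!-- ZooKeeper ensemble used by the failover controllers -->
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>172.25.254.2:2181,172.25.254.3:2181,172.25.254.4:2181</value>
  </property>
</configuration>
EOF
echo "wrote $out_dir/core-site.xml"
```

After review, the file could be copied over etc/hadoop/core-site.xml; because /home/hadoop is shared over NFS, one copy covers every host.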

2. Edit hdfs-site.xml:

Set the number of block replicas to 3

dfs.replication

3

Set the HDFS nameservice to masters, matching the setting in core-site.xml

dfs.nameservices

masters

The masters nameservice has two NameNodes, h1 and h2 (the names are arbitrary)

dfs.ha.namenodes.masters

h1,h2

RPC address of h1

dfs.namenode.rpc-address.masters.h1

172.25.254.1:9000

HTTP address of h1

dfs.namenode.http-address.masters.h1

172.25.254.1:50070

RPC address of h2

dfs.namenode.rpc-address.masters.h2

172.25.254.5:9000

HTTP address of h2

dfs.namenode.http-address.masters.h2

172.25.254.5:50070

Where the NameNode's shared edit log is stored on the JournalNodes

dfs.namenode.shared.edits.dir

qjournal://172.25.254.2:8485;172.25.254.3:8485;172.25.254.4:8485/masters

Local directory where each JournalNode stores its data

dfs.journalnode.edits.dir

/tmp/journaldata

Enable automatic NameNode failover

dfs.ha.automatic-failover.enabled

true

Class that clients use to find the active NameNode during failover

dfs.client.failover.proxy.provider.masters

org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider

Fencing methods, one per line

dfs.ha.fencing.methods

sshfence

shell(/bin/true)

sshfence requires passwordless SSH; this is the private key it uses

dfs.ha.fencing.ssh.private-key-files

/home/hadoop/.ssh/id_rsa

Connection timeout for sshfence, in milliseconds

dfs.ha.fencing.ssh.connect-timeout

30000
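
In the actual file, each name and value pair above sits inside a <property> element under <configuration>. As a sketch, the whole file can be generated from the same values. The prop helper and the scratch output path are illustrative and not part of Hadoop:

```shell
#!/bin/sh
# Emit one <property> element; the value may span several lines.
prop() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

ns=masters          # nameservice ID, as in core-site.xml
out=$(mktemp)       # scratch file; review before copying into etc/hadoop
{
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    prop dfs.replication 3
    prop dfs.nameservices "$ns"
    prop "dfs.ha.namenodes.$ns" h1,h2
    prop "dfs.namenode.rpc-address.$ns.h1" 172.25.254.1:9000
    prop "dfs.namenode.http-address.$ns.h1" 172.25.254.1:50070
    prop "dfs.namenode.rpc-address.$ns.h2" 172.25.254.5:9000
    prop "dfs.namenode.http-address.$ns.h2" 172.25.254.5:50070
    prop dfs.namenode.shared.edits.dir \
        "qjournal://172.25.254.2:8485;172.25.254.3:8485;172.25.254.4:8485/$ns"
    prop dfs.journalnode.edits.dir /tmp/journaldata
    prop dfs.ha.automatic-failover.enabled true
    prop "dfs.client.failover.proxy.provider.$ns" \
        org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider
    # Fencing methods are tried in order, one per line.
    prop dfs.ha.fencing.methods "sshfence
shell(/bin/true)"
    prop dfs.ha.fencing.ssh.private-key-files /home/hadoop/.ssh/id_rsa
    prop dfs.ha.fencing.ssh.connect-timeout 30000
    echo '</configuration>'
} > "$out"
echo "wrote $out"
```

Keeping the nameservice in one variable avoids a mismatch between dfs.nameservices and the per-NameNode property names, which all embed it.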

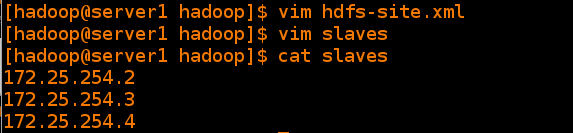

3. Edit the slaves file:

[hadoop@server1 hadoop]$ vim slaves
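
The post does not show the contents of slaves. In Hadoop 2.x the file lists the worker hosts, one per line, so given the role table it presumably names the three DataNode hosts. The following is an assumed version, written to a scratch file:

```shell
#!/bin/sh
# Assumed contents of etc/hadoop/slaves: the three DataNode hosts from the
# role table, one per line. Written to a scratch file for review.
slaves_file=$(mktemp)
printf '%s\n' 172.25.254.2 172.25.254.3 172.25.254.4 > "$slaves_file"
cat "$slaves_file"
```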

4、启动 hdfs 集群(按顺序启动)

在三个 DN 上依次启动 zookeeper 集群

[hadoop@server2 zookeeper-3.4.9]$ bin/zkServer.sh start

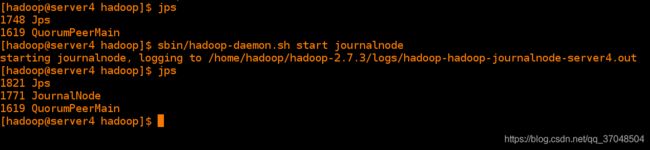

在三个 DN 上依次启动 journalnode(第一次启动 hdfs 必须先启动 journalnode)

[hadoop@server2 hadoop]$ sbin/hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-journalnode-server2.out

[hadoop@server3 hadoop]$ sbin/hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-journalnode-server3.out

[hadoop@server4 hadoop]$ sbin/hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-journalnode-server4.out

Format the HDFS cluster on server1

NameNode data is stored under /tmp by default, so it has to be copied to h2

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ scp -r /tmp/hadoop-hadoop 172.25.254.5:/tmp

Format the HA state in ZooKeeper (run on h1 only)

[hadoop@server1 hadoop]$ bin/hdfs zkfc -formatZK

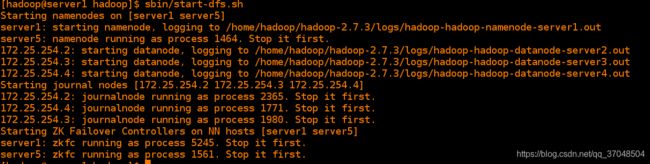

Start the HDFS cluster (run on h1 only)

[hadoop@server1 hadoop]$ sbin/start-dfs.sh
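
The order of the first-time startup matters, so it can help to write it down as a script. The sketch below prints the steps by default (DRY_RUN=1) and only executes them when DRY_RUN=0. The host names, install paths, and passwordless SSH from server1 are assumptions taken from the prompts above:

```shell
#!/bin/sh
# Print (DRY_RUN=1, the default) or execute each first-time startup step.
DRY_RUN=${DRY_RUN:-1}
ZK_HOME=/home/hadoop/zookeeper-3.4.9
HADOOP_DIR=/home/hadoop/hadoop
WORKERS="server2 server3 server4"

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

first_start() {
    # 1. ZooKeeper on every worker
    for h in $WORKERS; do
        run ssh "$h" "$ZK_HOME/bin/zkServer.sh start" || return 1
    done
    # 2. JournalNodes must be up before the NameNode is formatted
    for h in $WORKERS; do
        run ssh "$h" "$HADOOP_DIR/sbin/hadoop-daemon.sh start journalnode" || return 1
    done
    # 3. Format HDFS on h1 and copy the metadata to h2
    run "$HADOOP_DIR/bin/hdfs" namenode -format || return 1
    run scp -r /tmp/hadoop-hadoop 172.25.254.5:/tmp || return 1
    # 4. Create the HA znode in ZooKeeper, then start HDFS
    run "$HADOOP_DIR/bin/hdfs" zkfc -formatZK || return 1
    run "$HADOOP_DIR/sbin/start-dfs.sh"
}

first_start
```

Formatting is a one-time operation: on later restarts only the ZooKeeper and start-dfs.sh steps apply, since re-running the format commands would wipe the existing metadata.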

Open the ZooKeeper command line on server2:

[hadoop@server2 zookeeper-3.4.9]$ bin/zkCli.sh

[zk: localhost:2181(CONNECTED) 0] ls /

[hadoop-ha, zookeeper]

[zk: localhost:2181(CONNECTED) 1] ls

[zk: localhost:2181(CONNECTED) 2] ls /hadoop-ha/masters

[ActiveBreadCrumb, ActiveStandbyElectorLock]

[zk: localhost:2181(CONNECTED) 3] ls /hadoop-ha/masters/Active

Node does not exist: /hadoop-ha/masters/Active

[zk: localhost:2181(CONNECTED) 4] ls /hadoop-ha/masters/ActiveBreadCrumb

[]

[zk: localhost:2181(CONNECTED) 5] get /hadoop-ha/masters/ActiveBreadCrumb

mastersh2server5 ## the active NameNode is server5

cZxid = 0x200000009

ctime = Tue Nov 20 20:05:25 CST 2018

mZxid = 0x200000009

mtime = Tue Nov 20 20:05:25 CST 2018

pZxid = 0x200000009

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 28

numChildren = 0

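Test:

Initially server5 is active and server1 is standby.

Kill the NameNode process on server5:

After the NameNode on h2 is killed, HDFS remains accessible: h1 switches to active and takes over as NameNode.

Refresh the web UI and the active role has moved to server1, which demonstrates high availability.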

[hadoop@server5 tmp]$ jps

1561 DFSZKFailoverController

1464 NameNode

2088 Jps

[hadoop@server5 tmp]$ kill -9 1464

[hadoop@server5 tmp]$ jps

2098 Jps

1561 DFSZKFailoverController
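
Rather than reading the PID off the jps listing by eye, it can be extracted with a short filter. This is a sketch; namenode_pid is an illustrative name:

```shell
#!/bin/sh
# Read jps output on stdin and print the PID of the NameNode process.
namenode_pid() {
    awk '$2 == "NameNode" { print $1 }'
}

# Demo with the jps output shown above.
printf '%s\n' '1561 DFSZKFailoverController' '1464 NameNode' '2088 Jps' | namenode_pid
```

On server5 the kill step would then read `kill -9 "$(jps | namenode_pid)"`.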

[hadoop@server5 hadoop]$ sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /home/hadoop/hadoop-2.7.3/logs/hadoop-hadoop-namenode-server1.out

Refresh the web UI again: server5 is shown as active.
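
The NameNode state can also be read from the command line instead of the web UI, using `hdfs haadmin -getServiceState`, which prints active or standby for a given NameNode ID. A minimal sketch follows; the get_state wrapper and active_namenode are illustrative names, and the relative path bin/hdfs assumes the commands run from the Hadoop install directory:

```shell
#!/bin/sh
# Thin wrapper around the real CLI so it can be swapped for a stub in tests.
get_state() {
    bin/hdfs haadmin -getServiceState "$1"
}

# Print the first NameNode ID (for example h1 or h2) that reports "active";
# return non-zero if none does.
active_namenode() {
    for nn in "$@"; do
        if [ "$(get_state "$nn" 2>/dev/null)" = "active" ]; then
            echo "$nn"
            return 0
        fi
    done
    return 1
}
```

Running `active_namenode h1 h2` before and after the kill shows which side currently holds the active role.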