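I. Linux HA Cluster

HA Cluster: high-availability cluster

What is high availability?

In the broad sense, it means the availability of the system as a whole.

In the narrow sense, it usually means redundant hosts that can take over from one another (host HA). Host HA is what we study here.

High-availability cluster (HA: High Availability)

A high-availability cluster exists to keep the services of the cluster as a whole available as much as possible, reducing the losses caused by fault-prone hardware and software. It protects the services that business applications provide to users and minimizes the business impact of software, hardware, and human faults. If a node fails, its standby node takes over its duties within seconds, so from the user's point of view the cluster appears never to go down.

The main job of HA cluster software is to automate failure detection and service switchover.

Why use a high-availability cluster?

Start with some figures: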

| Percent availability (%) | Downtime per year | Availability class |
| --- | --- | --- |
| 99.5 | 1.8 days | Conventional |
| 99.9 | 8.8 hours | Available |
| 99.99 | 52.6 minutes | Highly Available |
| 99.999 | 5.3 minutes | Fault Resilient |
| 99.9999 | 32 seconds | Fault Tolerant |
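
The downtime column follows directly from the availability percentage. A minimal sketch of the arithmetic, assuming a 365-day year; the function name `downtime_minutes` is made up for this illustration:

```shell
#!/bin/sh
# downtime_minutes PERCENT
# Print the yearly downtime in minutes for a given availability percentage.
# Assumption: a year is 365 days (525600 minutes).
downtime_minutes() {
    LC_ALL=C awk -v p="$1" 'BEGIN { printf "%.1f\n", (100 - p) / 100 * 365 * 24 * 60 }'
}

for p in 99.5 99.9 99.99 99.999 99.9999; do
    printf '%s%% available -> %s minutes of downtime per year\n' "$p" "$(downtime_minutes "$p")"
done
```

Dividing the result by 60 or by 1440 gives hours or days, which is how the larger entries are usually quoted.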

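For critical business systems, downtime is usually disastrous, and the losses it causes are large. The figures below list the loss per minute of downtime for different types of enterprise application.

| Application | Loss per minute (USD) |
| --- | --- |
| Call center | 27000 |
| Enterprise resource planning (ERP) system | 13000 |
| Supply chain management (SCM) system | 11000 |
| E-commerce system | 10000 |
| Customer service center system | 27000 |

As enterprises depend more and more on information technology, the losses caused by system downtime keep growing.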

Failure scenarios:

Hardware failures:

design defects

unavoidable wear after long use

human-caused damage

...

Software failures:

design defects

bugs

operator error

...

A = MTBF / (MTBF + MTTR)

A: availability

MTBF: mean time between failures

MTTR: mean time to repair
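
The formula can be tried with concrete numbers. The sketch below is only an illustration: the function name `availability` and the sample MTBF and MTTR values are invented, and both values are assumed to use the same time unit.

```shell
#!/bin/sh
# availability MTBF MTTR
# Print A = MTBF / (MTBF + MTTR) with four decimals.
availability() {
    LC_ALL=C awk -v mtbf="$1" -v mttr="$2" 'BEGIN { printf "%.4f\n", mtbf / (mtbf + mttr) }'
}

# Hypothetical figures in hours: same MTBF, repair time cut from 10 to 1.
availability 1000 10
availability 1000 1
```

With MTBF fixed, the only way to raise A is to shrink MTTR, which is the lever an HA cluster pulls by automating the takeover.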

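0 < A < 1. An HA Cluster raises availability by reducing MTTR.

II. Solution

Provide redundant systems:

HA Cluster: multiple hosts combined into a cluster in order to raise system availability.

Split brain, partitioned cluster: when the nodes of an HA cluster cannot detect each other's heartbeat, they can no longer coordinate their work. This state is called a partitioned cluster.

Vote system: the minority yields to the majority. When the cluster is partitioned:

with quorum: votes > total/2

without quorum: votes <= total/2

Policies for a partition without quorum:

stop: stop the resources

ignore: ignore the loss of quorum and keep running

freeze: existing connections can continue, new connections are refused

suicide: the partition takes itself down

Quorum (arbitration) devices:

quorum disk (qdisk)

ping node

failover: moving resources away from a failed node

failback: moving resources back after the failed node recovers

Resource types:

HA-aware: the resource can call the HA functions of the underlying cluster layer directly.

Non-HA-aware: the resource needs the CRM (cluster resource manager) to become highly available on the cluster.

primitive: a primary (basic) resource that runs on only one node of the cluster at a time (also called native).

group: a container holding one or more resources, which are then scheduled together through the group.

clone: a resource of which several copies can run on several nodes of the same cluster.

master/slave: a resource that runs as two copies on two nodes of the same cluster, one as master and one as slave.

Resource constraints:

location: location constraint, the preference of a resource for a node, expressed as a score from -oo to +oo.

colocation: colocation constraint, the preference of resources for running together, from -oo to +oo. Grouping is another way to bind several resources together.

order: order constraint, the order in which resources start on the same node.

Resource placement preferences:

rgmanager: failover domain, node priority

pacemaker:

resource stickiness: the preference of a resource for staying on the node it currently runs on

resource constraints:

location constraint: the preference of a resource for running on a given node; values inf (positive infinity), -inf (negative infinity), n, -n

colocation constraint: the preference of resources for running in the same place; values inf, -inf, n, -n. The scores of two colocated resources are added, and negative infinity wins over positive infinity (-inf + inf = -inf).

order constraint: start order. With A --> B --> C, if A cannot start, neither can B or C. Stopping runs in the reverse order, C --> B --> A.

Two cluster modes:

symmetric: resources may run on any node.

asymmetric: resources may run nowhere by default, only on nodes that are explicitly allowed.

By default, resources are spread across the nodes as evenly as possible.

Resource isolation, by level:

node level: STONITH (Shoot The Other Node In The Head), cutting or power-cycling the peer node, for example through a power switch

resource level: fencing, blocking access to shared storage, for example at the FC SAN switch

III. Software implementations

Messaging layer:

heartbeat: v1 provides a complete HA cluster by itself; in v2 the CRM starts to become independent; v3 splits into three projects: heartbeat, CRM, and RA.

corosync: the mainstream choice. Version 1 has no vote system; version 2 introduced the votequorum subsystem and with it voting. If version 1 is in use and decisions need vote counts, Red Hat systems can combine cman with corosync. Early cman could not work with pacemaker, so to use pacemaker together with voting, cman is run as a corosync plugin.

cman (Red Hat, RHCS): deprecated.

keepalived: entirely different from the three above.

CRM:

heartbeat v1: haresources (configuration interface: a configuration file named haresources)

heartbeat v2: crm (a crmd process runs on every node; configuration interface: the command-line client crmsh; GUI client: hb_gui)

heartbeat v3: pacemaker (runs as a plugin or standalone; CLI interfaces: crmsh, pcs; GUI: hawk (web GUI), LCMC, pacemaker-mgmt)

rgmanager (CLI: clustat, cman_tool; GUI: Conga (luci + ricci))

Combinations:

heartbeat v1

heartbeat v2

heartbeat v3 + pacemaker

corosync + pacemaker (mainstream)

cman + rgmanager (RHCS)

cman + pacemaker
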
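
The majority rule of the vote system in section II can be written as a one-line test. This is a minimal sketch with an invented function name, `has_quorum`; it assumes every node carries one vote and compares `votes * 2` with the total so that integer division does not round an exact half up to a majority.

```shell
#!/bin/sh
# has_quorum VOTES TOTAL
# Succeeds when a partition holding VOTES out of TOTAL votes has quorum,
# i.e. strictly more than half of all votes.
has_quorum() {
    [ $(( $1 * 2 )) -gt "$2" ]
}

# Hypothetical 4-node cluster split into partitions of different sizes.
for votes in 1 2 3; do
    if has_quorum "$votes" 4; then
        echo "partition with $votes of 4 votes: with quorum"
    else
        echo "partition with $votes of 4 votes: without quorum"
    fi
done
```

A 2/2 split leaves both halves without quorum, which is why two-node and other even-sized clusters usually need a quorum device such as a qdisk or a ping node.
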
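LRM: Local Resource Manager, provided by the CRM through a subprogram.

RA: Resource Agent. Types:

heartbeat v1 legacy: heartbeat's traditional RAs, usually located in /etc/ha.d/resource.d/

LSB: Linux Standard Base; scripts under /etc/rc.d/init.d that accept at least four arguments: {start|stop|restart|status}

OCF: Open Cluster Framework; subdivided by provider

STONITH: resources dedicated to driving STONITH devices; usually of the clone type

Heartbeat: how heartbeat messages are carried

serial cable: limited range, not recommended

Ethernet cable: UDP unicast, UDP multicast, or UDP broadcast

Multicast address: identifies an IP multicast group. IANA (Internet Assigned Numbers Authority) assigns the class D address space to IP multicast; its range is 224.0.0.0-239.255.255.255.

Permanent multicast addresses: 224.0.0.0-224.0.0.255; used on the Internet

Temporary multicast addresses: 224.0.1.0-238.255.255.255; for test use

Local multicast addresses: 239.0.0.0-239.255.255.255; valid only within a specific local scope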
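
To see which of these ranges a candidate heartbeat address falls into, the ranges can be turned into a small classifier. This is only a sketch: the function name `multicast_scope` and the labels it prints are invented here, and the boundaries are exactly the ones listed above.

```shell
#!/bin/sh
# multicast_scope A.B.C.D
# Classify an IPv4 address against the multicast ranges listed above.
multicast_scope() {
    printf '%s\n' "$1" | awk -F. '{
        if ($1 < 224 || $1 > 239)                 print "not-multicast"
        else if ($1 == 224 && $2 == 0 && $3 == 0) print "permanent"
        else if ($1 == 239)                       print "local"
        else                                      print "temporary"
    }'
}

multicast_scope 225.23.190.1
multicast_scope 224.0.0.1
multicast_scope 239.1.2.3
multicast_scope 192.168.100.50
```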

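IV. Components of an HA Cluster

RA: resource agent layer; the service scripts

LRM: local resource manager layer; receives instructions from the CRM and carries out resource management on each node

CRM: cluster resource manager layer; calls the messaging layer and generates policy, deciding which node each resource runs on. Implementations: haresources, crm, pacemaker, rgmanager

Messaging layer (cluster infrastructure layer): carries heartbeat messages and cluster transaction messages. Implementations: heartbeat, corosync, cman, keepalived

V. Working models of an HA Cluster

A/P: two-node cluster, active/passive (standby); works in the master/standby model. There is usually only one HA service, which may consist of several HA resources.

A/A: two-node cluster, active/active; works in the dual-master model

N-M: N nodes, M services, usually N > M

N-N: N nodes, N services

VI. Case study

HA web service cluster

Three resources: ip, httpd, filesystem

fip: floating IP; this example uses 192.168.100.50

Constraints: use a group resource or colocation constraints to keep the resources on the same node, and order constraints to start them in sequence

Software choice: heartbeat v2 + haresources. Note: v2 is compatible with v1 (configuration interface: a configuration file named haresources)

Prerequisites for configuring an HA cluster:

(1) It is recommended that the root users on the nodes can authenticate to each other with keys.

(2) Nodes communicate by host name, so every host name must resolve to an IP address.

(a) It is recommended to do name resolution through the hosts file.

(b) The name used in communication must match the node name, that is, what “uname -n” or “hostname” shows.

(3) Time must be synchronized between nodes, using the NTP protocol.

(4) Consider whether a quorum device will be needed.

Note: a resource defined as part of a cluster service must not start automatically at boot, because the CRM manages it.

Demo:

1. Environment preparation: node3, node2

2. Install heartbeat2

1) Install the EPEL repository

2) Install heartbeat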
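
Prerequisite (2) can be checked mechanically before heartbeat is installed. The sketch below only parses a hosts file; the function name `name_resolves_in_hosts` and the temporary stand-in file are invented for this illustration, and the sample entries mirror the node names and addresses used in this case study.

```shell
#!/bin/sh
# name_resolves_in_hosts NAME [HOSTS_FILE]
# Print the address that HOSTS_FILE (default /etc/hosts) maps NAME to.
# Returns non-zero when NAME is not listed.
name_resolves_in_hosts() {
    awk -v name="$1" '
        { sub(/#.*/, "") }    # drop comments, including trailing ones
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; found = 1; exit } }
        END { exit !found }
    ' "${2:-/etc/hosts}"
}

# Stand-in hosts file so the sketch does not touch the real /etc/hosts.
hosts=$(mktemp)
printf '%s\n' '127.0.0.1 localhost' '192.168.100.20 node2' '192.168.100.30 node3' > "$hosts"

for n in node2 node3; do
    if addr=$(name_resolves_in_hosts "$n" "$hosts"); then
        echo "$n -> $addr"
    else
        echo "$n is missing from $hosts"
    fi
done
rm -f "$hosts"
```

On a real node, `name_resolves_in_hosts "$(uname -n)"` covers point (b): the name heartbeat will use for the node must itself be resolvable.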

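heartbeat package components:

heartbeat: core component *

heartbeat-devel: development package

heartbeat-gui: graphical management interface * (the GUI is used to configure cluster resources)

heartbeat-ldirectord: for LVS high availability; generates rules automatically and health-checks the back-end real servers

heartbeat-pils: plugin loading library interface *

heartbeat-stonith: STONITH interface *

Configuration files: under /etc/ha.d. There are no configuration files there at first; the package only ships templates, which can be located with:

# rpm -ql heartbeat |grep ha.cf

Copy the three files we need from that directory:

ha.cf: main configuration file; defines the basic properties of the heartbeat HA cluster on each node

authkeys: the authentication algorithm and key used for messages between cluster nodes (the file mode must be 600, otherwise heartbeat will not start)

haresources: the resource manager configuration interface of heartbeat v1, specific to v1

1) Copy the three configuration files above to /etc/ha.d/ and configure authkeys

2) Configure the main configuration file ha.cf

To enable logging, either uncomment "#logfile /var/log/ha-log", or define local0 in /etc/rsyslog.conf by adding “local0.* /var/log/heartbeat.log” on the line below local7.* and then restarting the rsyslog service.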
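
The rsyslog change is easy to apply twice by accident when the steps are repeated on every node. A minimal sketch of an idempotent append follows; the helper name `ensure_line` is invented, the sketch works on a temporary stand-in rather than the real /etc/rsyslog.conf, and it appends at the end of the file instead of directly below the local7.* line.

```shell
#!/bin/sh
# ensure_line FILE LINE
# Append LINE to FILE unless an identical line is already present.
ensure_line() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

conf=$(mktemp)    # stand-in for /etc/rsyslog.conf
printf '%s\n' 'local7.*    /var/log/boot.log' > "$conf"

ensure_line "$conf" 'local0.*    /var/log/heartbeat.log'
ensure_line "$conf" 'local0.*    /var/log/heartbeat.log'    # second call changes nothing

cat "$conf"
rm -f "$conf"
```

After the real file is changed, rsyslog still has to be restarted and ha.cf must use `logfacility local0` for the messages to arrive in the new log file.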

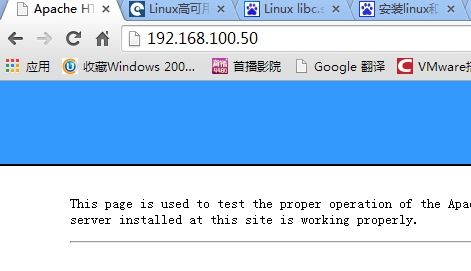

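Directives in ha.cf:

#keepalive 2    heartbeat interval, 2 s by default

#deadtime 30    dead time: how long without a heartbeat before the peer is declared dead

#warntime 10    warning time: how late a heartbeat may be before a warning is logged

#initdead 120    initialization time allowed right after a cluster node boots

#udpport 694    UDP port used for heartbeat traffic

#baud 19200    serial line speed

# serial serialportname ...

#serial /dev/ttyS0 # Linux serial port

#serial /dev/cuaa0 # FreeBSD

#serial /dev/cuad0 # FreeBSD 6.x

#serial /dev/cua/a # Solaris

#bcast eth0 # Linux, broadcast interface

#bcast eth1 eth2 # Linux

#bcast le0 # Solaris

#bcast le1 le2 # Solaris

#mcast eth0 225.0.0.1 694 1 0    multicast address

#ucast eth0 192.168.1.2    unicast address

Uncomment the line for the heartbeat transport you want to use. Multicast is used here:

mcast eth0 225.23.190.1 694 1 0

Note: first confirm that multicast is enabled on the chosen network interface. If ifconfig shows MULTICAST for the interface, it is enabled; otherwise enable it with "ip link set eth0 multicast on" ("off" disables it).

auto_failback on    fail back automatically

node    defines a node

node node2

node node3

Note: nodes can only be defined with the host name that the hostname command shows.

ping 192.168.100.10    defines the quorum device (ping node)

#ping_group group1 10.10.10.254 10.10.10.253    defines several quorum devices

compression bz2    compression format

compression_threshold 2    message size above which compression starts

3) Configure haresources, which defines the legacy-type RAs

Define the resources:

node2 192.168.100.50/24/eth0/192.168.100.255 httpd

This one line expresses a location constraint (node2 is the preferred node), an order constraint (resources start from left to right), and a colocation constraint (all resources on the line run on the same node).

Copy the configuration files to the other node:

Configure the httpd service and make sure httpd does not start at boot:

Start the heartbeat service:

Check the state after heartbeat has started:

After stopping the heartbeat service on node2:

Note: while heartbeat runs the resources on a node, that node can also be accessed directly; the service is not available on the other nodes.

After heartbeat is started on node2 again, node2 takes the resources back.

HA Cluster with NFS shared storage:

haresources configuration: see the entry at the end of the transcript.

Command transcript: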

[root@node1 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

c7:c5:28:54:1e:07:e0:c1:b2:bd:be:0c:a5:fb:4a:81 root@node1

The key's randomart image is:

+--[ RSA 2048]----+

| .+o+.. |

| .o.o = |

| +o o o |

| .. .o . |

| E . S.o |

| +.. |

| +. |

| . +. |

| oo+. |

+-----------------+

[root@node1 ~]# ls .ssh/

id_rsa id_rsa.pub known_hosts

[root@node1 ~]# ssh-copy-id 192.168.100.20

root@192.168.100.20's password:

Now try logging into the machine, with "ssh '192.168.100.20'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[root@node1 ~]# ssh 192.168.100.20

Last login: Sun Oct 18 19:36:30 2015 from 192.168.100.30

Welcome root, your ID is 0

[root@node2 ~]# hostname

[root@node2 ~]# exit

logout

Connection to node2 closed.

[root@node1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

[root@node1 ~]# echo "192.168.100.20 node2" >> /etc/hosts

[root@node1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.100.20 node2

[root@node1 ~]# ssh node2

The authenticity of host 'node2 (192.168.100.20)' can't be established.

RSA key fingerprint is b3:bf:7a:e4:54:d4:70:f7:d3:d1:57:0f:55:b9:24:fb.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node2' (RSA) to the list of known hosts.

Last login: Sun Oct 18 19:50:02 2015 from 192.168.100.10

Welcome root, your ID is 0

[root@node2 ~]# exit

logout

Connection to node2 closed.

[root@node1 ~]# ssh node2

Last login: Sun Oct 18 19:50:11 2015 from 192.168.100.10

Welcome root, your ID is 0

[root@node1 ~]# date;ssh node2 date

Fri Aug 21 05:05:31 CST 2015

Sun Oct 18 19:53:24 CST 2015

[root@node1 ~]# rpm -qa|grep ntp

fontpackages-filesystem-1.41-1.1.el6.noarch

[root@node1 ~]# yum -y install ntp

[root@node1 ~]# ntpdate cn.pool.ntp.org

21 Oct 20:12:58 ntpdate[1671]: step time server 202.118.1.130 offset 5324408.502622 sec

[root@node1 ~]# date

Wed Oct 21 20:13:07 CST 2015

[root@node1 ~]# crontab -e # in production, use the ntp service to synchronize time automatically

no crontab for root - using an empty one

crontab: installing new crontab

[root@node1 ~]# date

Wed Oct 21 20:17:25 CST 2015

[root@node1 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate cn.pool.ntp.org &> /dev/null

[root@node2 ~]# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

ad:f7:6c:0f:a8:6a:8d:2c:0e:6c:94:94:2b:42:c2:2c root@node2

The key's randomart image is:

+--[ RSA 2048]----+

| |

|o . |

|E+o |

|+. o . |

|o + S . |

|.+ . . |

| + . o. o . |

| . .. + .o o.. |

| ..o... .o.. |

+-----------------+

[root@node2 ~]# ssh-copy-id 192.168.100.30

The authenticity of host '192.168.100.10 (192.168.100.10)' can't be established.

RSA key fingerprint is 58:cb:cc:e9:ed:a3:9e:7a:2e:57:7e:e3:85:3f:0d:c6.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.100.10' (RSA) to the list of known hosts.

root@192.168.100.10's password:

Now try logging into the machine, with "ssh '192.168.100.10'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[root@node2 ~]# ssh 192.168.100.30

Last login: Fri Aug 21 05:08:17 2015 from 192.168.100.20

[root@node1 ~]# hostname

node1

[root@node1 ~]# exit

logout

Connection to 192.168.100.30 closed.

[root@node2 ~]# yum -y install ntp

[root@node2 ~]# crontab -e

no crontab for root - using an empty one

crontab: installing new crontab

[root@node2 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate cn.pool.ntp.org &> /dev/null

[root@node2 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

[root@node2 ~]# echo "192.168.100.30 www.node1.com node3" >> /etc/hosts

[root@node2 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.100.30 www.node1.com node3

[root@node2 ~]# date;ssh node3 date

Wed Oct 21 20:27:05 CST 2015

Wed Oct 21 20:27:05 CST 2015

mv -f /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.BAK

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-6.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-6.repo

yum clean all

yum makecache all

yum install net-snmp-libs libnet PyXML -y # install the dependency packages

[root@node2 heartbeat2]# cd

[root@node2 ~]# cd heartbeat2/

[root@node2 heartbeat2]# ls

heartbeat-2.1.4-12.el6.x86_64.rpm

heartbeat-debuginfo-2.1.4-12.el6.x86_64.rpm

heartbeat-devel-2.1.4-12.el6.x86_64.rpm

heartbeat-gui-2.1.4-12.el6.x86_64.rpm

heartbeat-ldirectord-2.1.4-12.el6.x86_64.rpm

heartbeat-pils-2.1.4-12.el6.x86_64.rpm

heartbeat-stonith-2.1.4-12.el6.x86_64.rpm

[root@node2 heartbeat2]# rpm -ivh heartbeat-2.1.4-12.el6.x86_64.rpm heartbeat-pils-2.1.4-12.el6.x86_64.rpm heartbeat-stonith-2.1.4-12.el6.x86_64.rpm

Preparing... ########################################### [100%]

1:heartbeat-pils ########################################### [ 33%]

2:heartbeat-stonith ########################################### [ 67%]

3:heartbeat ########################################### [100%]

[root@node2 heartbeat2]#

# rpm -ql heartbeat |grep ha.cf

/usr/share/doc/heartbeat-2.1.4/ha.cf

[root@node2 ~]# cd /etc/ha.d

[root@node2 ha.d]# ls

harc rc.d README.config resource.d shellfuncs

[root@node2 ha.d]# cp /usr/share/doc/heartbeat-2.1.4/{ha.cf,haresources,authkeys} /etc/ha.d/

[root@node2 ha.d]# ls

authkeys ha.cf harc haresources rc.d README.config resource.d shellfuncs

[root@node2 ha.d]#

[root@node2 ha.d]# ll

total 48

-rw-r--r--. 1 root root 645 Oct 21 22:10 authkeys

-rw-r--r--. 1 root root 10539 Oct 21 22:10 ha.cf

-rwxr-xr-x. 1 root root 745 Sep 10 2013 harc

-rw-r--r--. 1 root root 5905 Oct 21 22:10 haresources

drwxr-xr-x. 2 root root 4096 Oct 21 20:58 rc.d

-rw-r--r--. 1 root root 692 Sep 10 2013 README.config

drwxr-xr-x. 2 root root 4096 Oct 21 20:58 resource.d

-rw-r--r--. 1 root root 7864 Sep 10 2013 shellfuncs

[root@node2 ha.d]# chmod 600 authkeys # authkeys must be mode 400 or 600, otherwise the node will not start

[root@node2 ha.d]# ll authkeys

-rw-------. 1 root root 645 Oct 21 22:10 authkeys

[root@node2 ha.d]# openssl rand -base64 16

GvLe2MFdUWOtqo5c7ju4FQ==

[root@node2 ha.d]# echo -e " auth 2\n2 sha1 GvLe2MFdUWOtqo5c7ju4FQ==" >> /etc/ha.d/authkeys
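
The key generation, the file contents, and the required mode can be combined into one step. The following is a sketch rather than the procedure used above: the function name `write_authkeys` is invented, it overwrites the target instead of appending to the commented sample, and it falls back to /dev/urandom only when openssl is not available.

```shell
#!/bin/sh
# write_authkeys TARGET_FILE
# Write a two-line authkeys file ("auth 2" plus a sha1 key) readable by root only.
write_authkeys() {
    key=$(openssl rand -base64 16 2>/dev/null) ||
        key=$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')
    # The subshell keeps the restrictive umask from leaking into the caller.
    ( umask 077; printf 'auth 2\n2 sha1 %s\n' "$key" > "$1" ) || return 1
    chmod 600 "$1"
}

target=$(mktemp)    # stand-in; the real file is /etc/ha.d/authkeys
write_authkeys "$target"
ls -l "$target"
rm -f "$target"
```

Every node needs the same key, so the file is generated once and then copied to the peers (for example with scp -p, which preserves the mode).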

[root@node2 ha.d]# grep -v '#' ha.cf|grep -v '^$'

logfacility local0

mcast eth0 225.23.190.1 694 1 0

auto_failback on

node node2

node node3

ping 192.168.100.1

compression bz2

compression_threshold 2
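
The filter used above, grep -v '#', drops every line that contains a # anywhere, so a directive followed by a trailing comment would disappear from the listing. A slightly more careful sketch, with an invented function name `effective_config` and a made-up sample file, strips the comment and keeps the directive:

```shell
#!/bin/sh
# effective_config FILE
# Print the active directives of a config file: comments (including trailing
# ones) are stripped, and lines that end up empty are dropped.
effective_config() {
    sed -e 's/[[:space:]]*#.*$//' -e '/^[[:space:]]*$/d' "$1"
}

sample=$(mktemp)    # stand-in for /etc/ha.d/ha.cf
cat > "$sample" <<'EOF'
#keepalive 2
logfacility local0
mcast eth0 225.23.190.1 694 1 0   # multicast heartbeat

node node2
EOF

effective_config "$sample"
rm -f "$sample"
```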

[root@node2 ha.d]# vi haresources

[root@node2 ha.d]# scp -p authkeys ha.cf haresources node3:/etc/ha.d/

authkeys 100% 677 0.7KB/s 00:00

ha.cf 100% 10KB 10.3KB/s 00:00

haresources 100% 5956 5.8KB/s 00:00

[root@node2 ha.d]#

[root@node2 ha.d]# service heartbeat start;ssh node3 service heartbeat start

Starting High-Availability services:

2015/10/21_23:34:59 INFO: Resource is stopped

Done.

Starting High-Availability services:

2015/10/21_23:34:59 INFO: Resource is stopped

Done.

[root@node2 ha.d]#

[root@node2 ha.d]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:86:4F:A9

inet addr:192.168.100.20 Bcast:192.168.100.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe86:4fa9/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:22256 errors:0 dropped:0 overruns:0 frame:0

TX packets:17473 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:13979528 (13.3 MiB) TX bytes:8154570 (7.7 MiB)

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:86:4F:A9 # 192.168.100.50 is configured on an alias of eth0

inet addr:192.168.100.50 Bcast:192.168.100.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:72 errors:0 dropped:0 overruns:0 frame:0

TX packets:72 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:8050 (7.8 KiB) TX bytes:8050 (7.8 KiB)

[root@node2 ha.d]# netstat -nlptu|grep 80

tcp 0 0 :::80 :::* LISTEN 4046/httpd

[root@node2 ha.d]# service heartbeat stop

Stopping High-Availability services:

Done.

[root@node2 ha.d]#

[root@node2 ha.d]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:86:4F:A9

inet addr:192.168.100.20 Bcast:192.168.100.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe86:4fa9/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:23221 errors:0 dropped:0 overruns:0 frame:0

TX packets:18345 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:14138140 (13.4 MiB) TX bytes:8312726 (7.9 MiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:72 errors:0 dropped:0 overruns:0 frame:0

TX packets:72 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:8050 (7.8 KiB) TX bytes:8050 (7.8 KiB)

[root@node2 ha.d]#

[root@node2 ha.d]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:86:4F:A9

inet addr:192.168.100.20 Bcast:192.168.100.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe86:4fa9/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:23666 errors:0 dropped:0 overruns:0 frame:0

TX packets:18773 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:14203003 (13.5 MiB) TX bytes:8379898 (7.9 MiB)

eth0:0 Link encap:Ethernet HWaddr 00:0C:29:86:4F:A9

inet addr:192.168.100.50 Bcast:192.168.100.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:72 errors:0 dropped:0 overruns:0 frame:0

TX packets:72 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:8050 (7.8 KiB) TX bytes:8050 (7.8 KiB)
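
Reading three ifconfig listings by eye is the manual way to follow the floating IP. A small check can answer the same question per node. This is a sketch: the function name `holds_address` is invented, and the sample lines are hand-written in the one-line format of `ip -o -4 addr show` rather than captured from the nodes above.

```shell
#!/bin/sh
# holds_address IP
# Reads `ip -o -4 addr show` output on stdin and succeeds when IP is configured.
holds_address() {
    awk -v ip="$1" '{ split($4, a, "/"); if (a[1] == ip) found = 1 } END { exit !found }'
}

# Hand-written sample in the format of `ip -o -4 addr show`.
sample='1: lo    inet 127.0.0.1/8 scope host lo
2: eth0    inet 192.168.100.20/24 brd 192.168.100.255 scope global eth0
2: eth0    inet 192.168.100.50/24 brd 192.168.100.255 scope global secondary eth0:0'

if printf '%s\n' "$sample" | holds_address 192.168.100.50; then
    echo "this node holds the floating IP"
else
    echo "the floating IP is elsewhere"
fi
```

On a live node the sample is replaced by the real command: `ip -o -4 addr show | holds_address 192.168.100.50`.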

haresources entry for the NFS shared-storage setup:

node2 192.168.100.50/24/eth0/192.168.100.255 Filesystem::192.168.100.10:/web/htdocs::/var/www/html::nfs httpd
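
The entry packs several things into one line: the preferred node comes first, then the resources in start order, with `::` separating a resource agent from its arguments. The sketch below only splits such a line apart to make that structure visible; the function name `describe_haresources_line` and its output format are invented for this illustration.

```shell
#!/bin/sh
# describe_haresources_line "LINE"
# Split one haresources entry into its preferred node and its resources.
describe_haresources_line() {
    printf '%s\n' "$1" | awk '{
        print "preferred node: " $1
        for (i = 2; i <= NF; i++) {
            n = split($i, part, "::")    # agent name, then its arguments
            printf "resource %d: %s", i - 1, part[1]
            for (j = 2; j <= n; j++) printf "%s%s", (j == 2 ? " args=" : ","), part[j]
            printf "\n"
        }
    }'
}

describe_haresources_line 'node2 192.168.100.50/24/eth0/192.168.100.255 Filesystem::192.168.100.10:/web/htdocs::/var/www/html::nfs httpd'
```

Read this way, the entry says: bring up the floating IP, then mount the NFS export 192.168.100.10:/web/htdocs on /var/www/html, then start httpd, all on node2 when it is available.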